Gavin has recently been focusing on the problem of Sybil attacks (Sybil resistance). PolkaWorld reviewed Dr. Gavin Wood's keynote speech at Polkadot Decoded 2024 to explore some of Gavin's insights on how to prevent Sybil attacks.

What is a Sybil attack?

As you may know, I have been working on several projects: I am writing the Gray Paper, focusing on the JAM project, and doing some coding in that direction. In fact, over the past two years I have been thinking about a critical issue in this field: how to prevent Sybil attacks, that is, Sybil resistance. This problem is everywhere. Blockchain systems are based on game theory, and when we analyze games we usually need to limit the number of participants or manage the arbitrary behavior participants may exhibit.

When we design digital systems, we very much want to be able to determine whether a particular endpoint, meaning a digital endpoint, is operated by a human. I want to be clear up front that I am not talking about identity here. Identity is certainly important, but the question is not the specific real-world identity behind a particular endpoint. The question is whether this device is currently being operated by a human at all. Beyond that, there is a further question: if the device is indeed operated by a human, can we give that person a pseudonym that identifies them within a specific context, so that we can recognize them again if they interact with us through this device in the future?

These types of digital systems, especially decentralized Web3 systems, are becoming increasingly important as we move from primarily interacting with other people (like in the 80s when I was born) to interacting with systems. In the 80s, people primarily interacted directly with each other; in the 90s, we started interacting with services over the phone, such as phone banking.

This was a significant change for us. At first, telephone banking relied on human-operated call centers where we spoke to people over the phone. Those systems eventually evolved into the automated voice systems we have today. As the internet grew, human interaction became less and less frequent, until we barely interacted with humans at all in our everyday services. The rise of Web2 e-commerce made this trend even more pronounced, and Web3 goes further still: in Web3 you barely interact with humans at all. The core idea of Web3 is to let you interact with machines, and even to let machines interact with each other.

What is the point of studying Sybil attacks?

So what exactly is the point? Sybil resistance is a fundamental element of any real society and lies at the heart of many of our social systems, including commerce, governance, voting, and opinion aggregation. Building communities around any of these depends heavily on the ability to resist Sybil attacks. Many mechanisms we take for granted in business implicitly assume Sybil resistance. Fair use, noise control, and community management are all built on it. Many things require us to confirm that an entity is a real human being, and if someone behaves inappropriately, we may want to exclude them from the community for a while. You can see this in digital services and, of course, in the real world as well.

By preventing Sybil attacks, we can introduce mechanisms that constrain behavior without erecting entry barriers or sacrificing the accessibility of the system. There are two basic ways to incentivize behavior, and they map loosely onto the "carrot and stick" of rewards and punishments. The stick approach requires you to post a deposit, which is confiscated if you behave improperly. Staking is a simple example of this. The carrot approach instead assumes you will behave well and grants you access up front, then takes some of your privileges away if you fall short of expectations. This is essentially how most civil societies operate.
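The two incentive styles can be contrasted in a small Rust sketch. All names here are hypothetical, and `PersonId` assumes that some Sybil-resistant personhood mechanism exists. The deposit gate works with plain accounts but shuts out anyone without funds. The privilege gate is free to enter, but it only deters anyone if a suspended person cannot come back under a new identity.

```rust
use std::collections::{HashMap, HashSet};

// Hypothetical identifier types for illustration only.
type AccountId = u64; // any key pair; free to create in bulk
type PersonId = u64; // assumed to come from a Sybil-resistant personhood mechanism

/// "Stick": access requires a deposit, which is confiscated on misbehavior.
struct DepositGate {
    required: u128,
    deposits: HashMap<AccountId, u128>,
}

impl DepositGate {
    fn new(required: u128) -> Self {
        Self { required, deposits: HashMap::new() }
    }

    /// Join by locking `amount`; anyone without enough funds is shut out.
    fn join(&mut self, who: AccountId, amount: u128) -> Result<(), &'static str> {
        if amount < self.required {
            return Err("deposit too small");
        }
        self.deposits.insert(who, amount);
        Ok(())
    }

    /// Confiscate the whole deposit and return how much was taken.
    fn slash(&mut self, who: AccountId) -> u128 {
        self.deposits.remove(&who).unwrap_or(0)
    }
}

/// Presumed good behavior: free access for every person, but misbehavior
/// costs the privilege. Only meaningful if a suspended person cannot
/// simply come back as a "new" person.
#[derive(Default)]
struct PrivilegeGate {
    suspended: HashSet<PersonId>,
}

impl PrivilegeGate {
    fn may_use(&self, person: PersonId) -> bool {
        !self.suspended.contains(&person)
    }

    fn suspend(&mut self, person: PersonId) {
        self.suspended.insert(person);
    }
}
```

With plain accounts instead of `PersonId`, `suspend` would be pointless, because the suspended party could create a fresh account at no cost.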

However, this second approach cannot really be implemented on a blockchain without a Sybil-resistance mechanism. In civil society it works because once someone is imprisoned, they cannot commit the same crime again, at least not while they are incarcerated. Freedom is inherent and can, in principle, be taken away by the government. I am not suggesting imprisoning anyone on-chain. My point is that comparable constraints cannot currently be enforced on-chain, because someone who loses their privileges can simply return with a fresh account. This makes it hard to offer services for free while still deterring bad behavior, rather than relying only on incentives for good behavior. Business and promotional activities likewise rely heavily on being able to confirm whether the person transacting is a real person.

This is a screenshot of a website I use occasionally. It sells a very good whiskey that many people like and that is hard to buy in its country of origin. It is cheaper to buy in Europe, and the shop appears to keep the price low by limiting how much any one individual can buy. In a true Web3 system, however, this is almost impossible to do.

Community building, airdrops, and identifying community members to distribute tokens to are also very difficult. In general, airdrops are very capital-inefficient, because their goal is to reach as many people as possible. A fair airdrop requires you to identify individuals first and then give each of them the same amount. In practice, however, there are all kinds of problems, such as differing wallet balances, and the distribution curve can end up very uneven. The result is that most recipients receive too little to be meaningfully motivated.
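The following sketch shows why per-account airdrops reward Sybil attackers. It assumes, hypothetically, that each claiming account can optionally present the ID of the person behind it. Take 10 claiming accounts, 8 of them controlled by one attacker. A per-account split hands the attacker 80% of the pool, while a per-person split caps them at a single share.

```rust
use std::collections::HashMap;

/// Each claim is (account, person behind it). `None` means the account has no
/// proof of personhood. Both fields are hypothetical identifiers.
type Claim = (u32, Option<u32>);

/// Naive airdrop: every claiming account gets an equal share of `pool`.
fn per_account(pool: u64, claims: &[Claim]) -> HashMap<u32, u64> {
    if claims.is_empty() {
        return HashMap::new();
    }
    let share = pool / claims.len() as u64;
    claims.iter().map(|&(acct, _)| (acct, share)).collect()
}

/// Sybil-resistant airdrop: one equal share per unique person, paid to the
/// first account that claimed for that person. Accounts without personhood get nothing.
fn per_person(pool: u64, claims: &[Claim]) -> HashMap<u32, u64> {
    let mut first_account: HashMap<u32, u32> = HashMap::new(); // person -> account
    for &(acct, person) in claims {
        if let Some(p) = person {
            first_account.entry(p).or_insert(acct);
        }
    }
    if first_account.is_empty() {
        return HashMap::new();
    }
    let share = pool / first_account.len() as u64;
    first_account.into_values().map(|acct| (acct, share)).collect()
}
```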

Take fair use. It matters less than it used to, but today, if you use too much of the network's resources, the system will usually just throttle your speed and still let you keep using the network.

About 10 to 15 years ago, things were different. If you used too much bandwidth, your Internet service provider might decide you were not using its "unlimited" service reasonably and cut you off entirely, instead of merely throttling you as providers do now. This practice let them offer nearly unlimited Internet service to most users, because identifying users let them tell who was using resources reasonably.

One of the foundations of Web2 is the freemium service model, which relies heavily on the ability to identify users. Twenty-odd years ago, user identification may not have been very sophisticated, but the situation is very different now. Opening an account usually involves more than three mechanisms that check whether you are a real individual and whether you are a user they have not seen before. For example, trying to register an Apple account without buying an iPhone is like passing through a checkpoint, because these companies are essentially unwilling to give you an account. They advertise that you can get an account for free, of course, but I don't know what the AI in the background is doing. I tried 10 times before I finally succeeded, and in the end I still had to buy an iPhone.

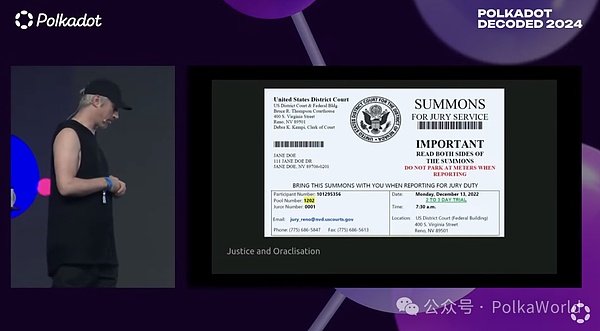

I think that if we can identify individuals better, many processes such as "oraclization" will become easier.

A typical example of using "proof of personhood" to verify information in society is the jury system. When we need an impartial judge (i.e., an oracle) to decide whether someone is guilty, the system randomly selects a panel of ordinary people from society to hear the evidence and reach a verdict. Representation and opinion gathering are also important parts of social life, and we manage representation through Sybil-resistance measures. Because current civic infrastructure is imperfect, this management is often far from ideal, especially when representation is conflated with identity. Often you must prove your real identity before you can vote, for example by showing your driver's license or passport. But a vote actually represents your share of representation, and it should not need to be linked directly to your personal identity.

How to prevent Sybil attacks? What are the current solutions?

So, how should this be done?

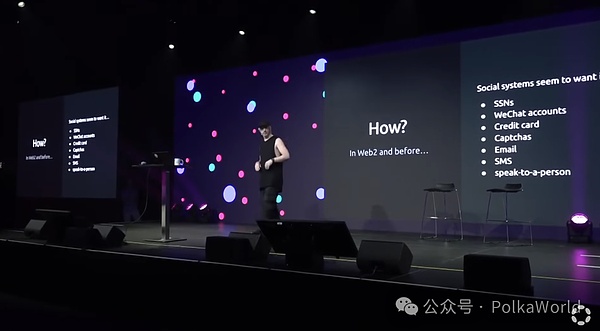

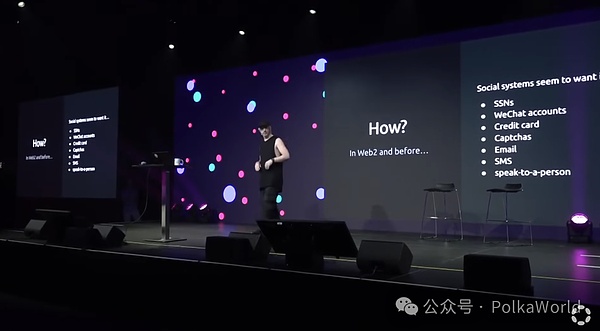

In Web2 and before it, there were many ways to authenticate users, and today's Web2 systems often combine them. For example, creating a new Google account may require you to solve a CAPTCHA and pass email and SMS verification. SMS verification can sometimes stand in for talking to a real person. If you have ever been locked out of your Amazon account, you know what I mean. It is basically a complicated maze: you hunt for the right buttons and phone options until you can finally talk to a real customer service agent. For stronger Sybil resistance, we might use information such as ID cards or credit cards.

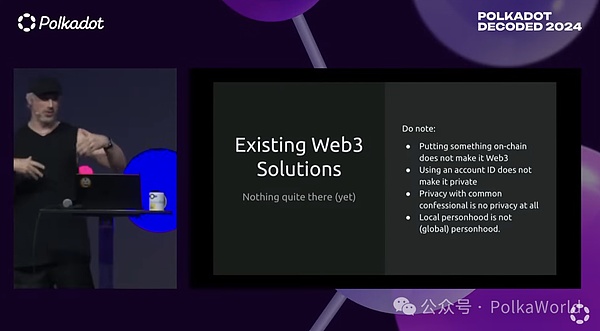

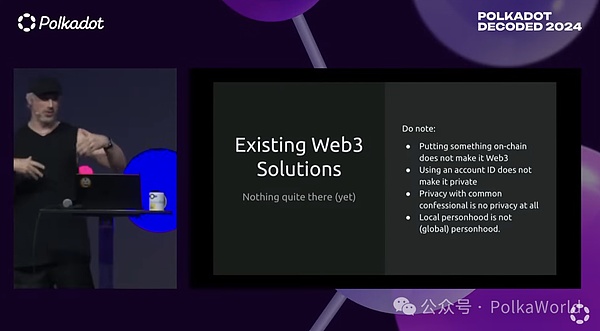

However, in the world of Web3, my research has not turned up any solution that really satisfies me. There are a few candidates, but they vary widely along three dimensions: whether they are decentralized, whether they preserve privacy, and whether they are truly resilient (i.e., resistant to attack). Resilience is becoming an ever bigger problem, and in fact most systems fall short on more than one of these dimensions. I call one kind of system the "common confession system". You reveal private information to a specific authority, which then holds information about you that you may not want to share with others. For example, you might scan your passport and submit it to an authority. That authority then holds everyone's passport information, and holding all of it puts the authority in a powerful position. A common confession system is not suitable for Web3.

You also sometimes see personhood systems that resemble Web3 but rely on a "common key management authority": a powerful agency that holds keys and uses them to determine who is a legitimate individual. In other words, this agency has the power to decide who counts as a "real user" in the system. Sometimes it even holds users' keys for them, but more often it only retains the power to decide who is a legitimate individual.

All of these rely on centralized authorities to control users' privacy or identity information, which runs contrary to Web3's principles of decentralization and user autonomy.

Putting something on-chain does not make it Web3. You can move Web2 policies, or policies that rely on centralized authorities, on-chain, but doing so does not change the policies themselves. The policy may be enforced more resiliently, but it is still not Web3. Likewise, a name being a long hex string does not make it private. Unless specific measures are taken, such a string can still be linked to real-world identity information.

If a system relies on a common confession mechanism, it is not a privacy-preserving solution. We have seen enough data breaches to know that putting data behind a corporate firewall or in trusted hardware does not guarantee its security. A personhood solution suitable for Web3 is not about local personhood or membership of a local community. It requires global personhood, which is a completely different concept.

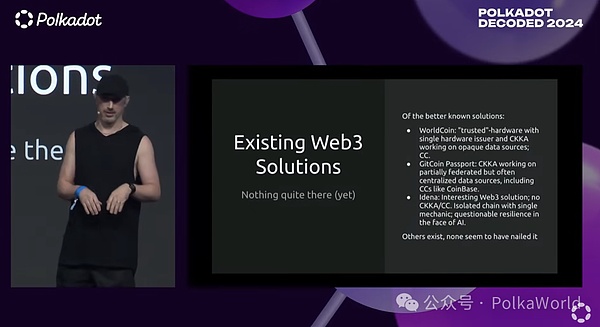

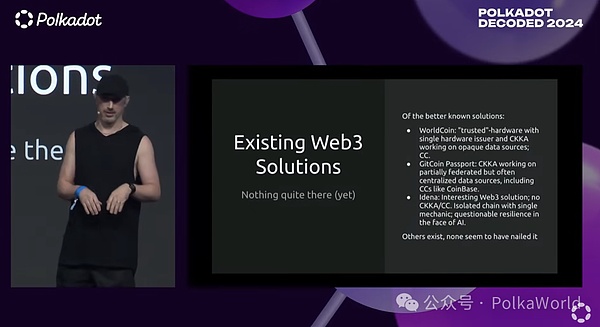

Some systems try to solve this problem but rely on a single hardware device and a common key management authority, so they are not really Web3 solutions. For example, Worldcoin tries to solve the problem with trusted hardware, but it uses a single common key management authority and centralized data sources, so it does not fit well with the decentralized ethos of Web3.

Another example is Gitcoin Passport, which is widely used in the Ethereum community and aggregates other identity and personhood solutions. It relies on a federated key management authority to identify individuals, but its data sources are often centralized authorities, including institutions such as Coinbase.

Idena is an interesting Web3 solution with no common key management authority or centralized authority. However, it is a single mechanism, and it is unclear whether it will remain resilient as AI advances. It has performed well so far, but its user base is relatively small, only about a thousand users.

In general, there is no method that can completely solve this problem.

Gavin's views on solving Sybil attacks

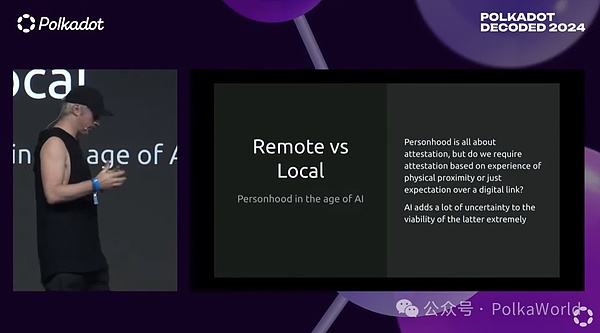

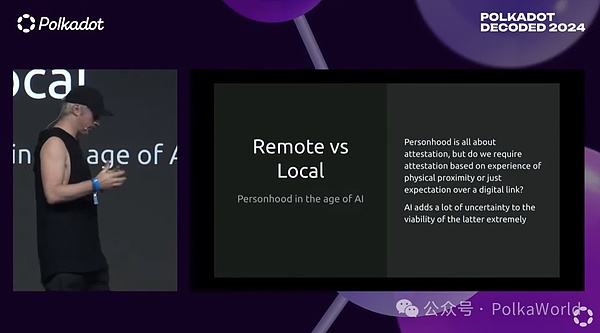

There are two ways to think about individual identity: remote and local. Machines do not inherently understand "individual identity", and we are unlikely to see some cryptographic technique suddenly solve this problem. One might argue that fingerprints or other biometrics make humans unique and that machines can measure them, but it is difficult for a purely digital system to prove this. The system that probably comes closest is Worldcoin, but even that is just a machine that performs verification in a way that is hard to tamper with.

So we need to understand that individual identity is really a matter of authentication: how elements within a digital system verify that other elements are real individuals. The question then is what this authentication is based on. Is it physical contact, or some other evidence? Perhaps we believe an account belongs to a real individual because we met the person, and at that meeting we were confident we were dealing with one unique individual in that setting. Or perhaps we simply saw something on a screen that was backed by other evidence of their individuality.

Remote authentication (that is, authentication without direct physical evidence) is where AI may cause problems. If we rely on physical evidence instead, practicality becomes the problem. So we are caught between these two constraints. Still, I think innovation and imagination can lead us to workable solutions.

So what do we need to do?

So, what do we need? What is our plan?

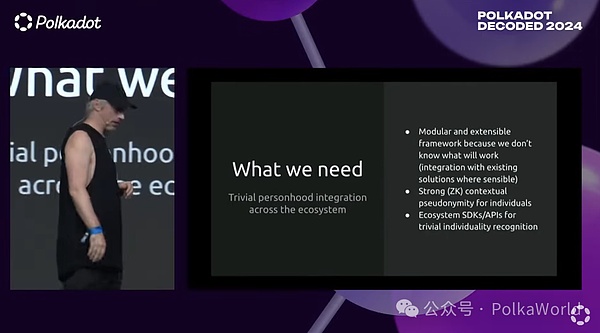

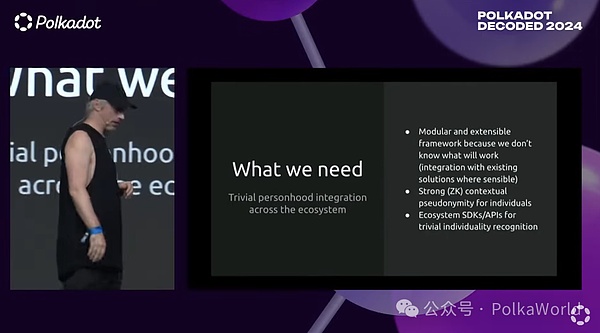

I think the key to making Polkadot more useful in the real world (not just for DeFi, NFTs, and virtual blockchains) is to find a simple way to identify individuals. Identification here does not mean determining who the person is, as in "I know this is Gavin Wood". It means recognizing that "this is a unique individual". I don't think there will be a single solution, so we need a modular and extensible framework.

First, it should be possible to integrate existing, reasonable solutions (such as Idena). Beyond that, the system should not be limited by one person's ideas, nor rely solely on one person's imagination of which mechanisms might work. It should be open to some extent, so that everyone can contribute solutions.

Second, we need strong contextual pseudonymity. I originally wrote "anonymity", and to some extent I do mean anonymity with respect to your real-world identity. But we also want pseudonymity. In any given context, you should be able to prove that you are a unique individual, and when you use the system again in that same context, you should be able to prove that you are the same individual as before.

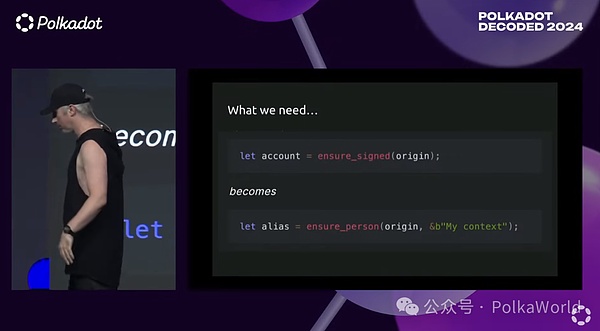

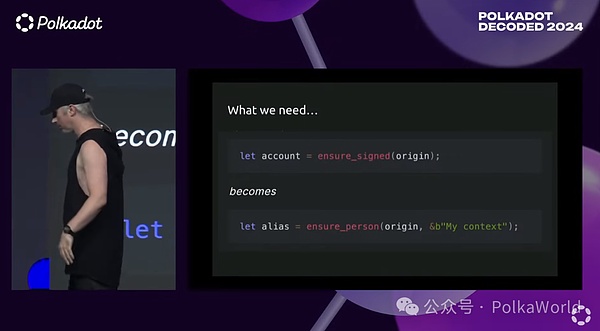

Finally, we need strong SDKs and APIs so that this feature is as easy to use as any other feature in Substrate, in Polkadot smart contracts, or in the upcoming JAM ecosystem. It has to be easy to use. To be specific: I don't know how many of you have written FRAME code, but when writing a new blockchain you often see the line `let account = ensure_signed(origin)?;`. This line takes the origin of the transaction, checks whether it comes from an account, and if so, tells you which account. But an account is not the same as a person. A person may use one or more accounts, and likewise a script may use one or more accounts. Accounts on their own tell us nothing about an individual's identity. So if we want to be sure a transaction comes from a real person, and not just one of a million accounts, we need to be able to replace that line with something like `let alias = ensure_person(origin, &b"My context")?;`.
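Here is a toy model in Rust, the language FRAME is written in, that makes the difference concrete. It does not use the real `frame_system` crate. `Origin`, `BadOrigin`, and especially `ensure_person` are hypothetical stand-ins. The sketch also assumes the personhood proof has already been verified for a given context before the origin is constructed.

```rust
/// Error returned when the origin is not of the required kind (toy version).
#[derive(Debug, PartialEq)]
struct BadOrigin;

type AccountId = u64;
type Alias = u64;

/// Toy stand-in for a FRAME origin; the real runtime types differ.
enum Origin {
    /// An account signed the transaction.
    Signed(AccountId),
    /// Hypothetical: a personhood proof was verified for `context`,
    /// yielding the person's alias in that context.
    Person { context: Vec<u8>, alias: Alias },
    Root,
}

/// Mirrors the shape of FRAME's `ensure_signed`: accepts any signed account.
fn ensure_signed(origin: &Origin) -> Result<AccountId, BadOrigin> {
    match origin {
        Origin::Signed(who) => Ok(*who),
        _ => Err(BadOrigin),
    }
}

/// Hypothetical `ensure_person`: succeeds only if the origin proves a unique
/// person *for this context*, and returns that person's alias there.
fn ensure_person(origin: &Origin, context: &[u8]) -> Result<Alias, BadOrigin> {
    match origin {
        Origin::Person { context: c, alias } if c.as_slice() == context => Ok(*alias),
        _ => Err(BadOrigin),
    }
}
```

A plain signed account passes `ensure_signed` but fails `ensure_person`, so an attacker holding a million accounts gains nothing at a call site that requires a person.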

There are two benefits to this that are worth noting.

First, we're not just asking if this is an account signing the transaction, we're asking if this is a person signing the transaction. This makes a huge difference in what we can do.

Second, it matters that different operations have different contexts, and that anonymity and pseudonymity are enforced within those contexts. When the context changes, the pseudonym changes. There is no way to link pseudonyms from different contexts to each other, or to link a pseudonym to the person behind it. These are fully anonymous pseudonym systems, which makes them a very important tool in blockchain development, especially for building systems that are useful in the real world.
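The context-bound pseudonyms can be sketched roughly by deriving an alias from a per-person secret and the context string. This is a toy built on stated assumptions. `PersonSecret` and `alias_for` are hypothetical names, and `std`'s `DefaultHasher` is used only to keep the example dependency-free. It is not a cryptographic primitive and gives no real unlinkability guarantee. A real design would also need to prove that the secret belongs to *some* verified individual without revealing which one (for example with something like a ring VRF). This sketch does not attempt that.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// A person's long-term secret (hypothetical); it never leaves their device.
struct PersonSecret([u8; 32]);

/// Derive the person's alias for one context: same secret and same context give
/// the same alias, while a different context gives an unrelated-looking value.
/// WARNING: DefaultHasher stands in for a proper cryptographic construction.
fn alias_for(secret: &PersonSecret, context: &[u8]) -> u64 {
    let mut h = DefaultHasher::new();
    secret.0.hash(&mut h);
    context.hash(&mut h);
    h.finish()
}
```

The design point is that the verifier only ever sees `alias_for(secret, context)` for its own context. Recognizing a returning user needs nothing more than that value, and comparing aliases across contexts reveals nothing useful.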

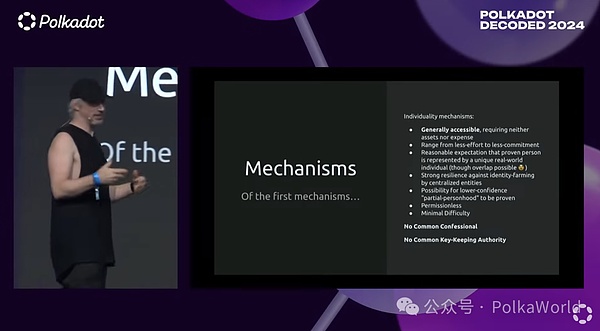

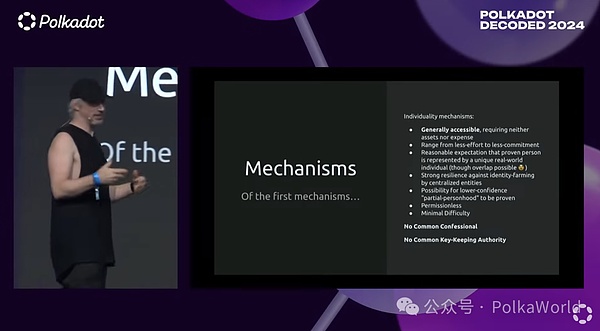

So what constraints might we place on the mechanisms that actually identify individuals? First, a mechanism must be widely accessible. If only a subset of the population can participate, it won't be very useful. It shouldn't require holding assets, and it shouldn't require fees, or at least not exorbitant ones.

Different mechanisms will inevitably involve tradeoffs, and I don't think there will be a one-size-fits-all solution. Some tradeoffs are acceptable and some are not. Resilience, decentralization, and sovereignty should not be compromised. Beyond those, some mechanisms may require less effort but more commitment, and others more effort but less commitment. We should be able to reasonably expect that an individual verified by the system (i.e., an account linked to a person, or a pseudonym) really is a unique real-world individual.

Different mechanisms may overlap in how they measure individuality in a resilient, non-authoritative way within a decentralized Web3 system. In practice we cannot be perfect, but the error should be nowhere near an order of magnitude, and ideally well below one. The system must also be highly resistant to identity abuse, so that no small group of people or organizations can obtain a large number of individual identities.

Critically, the system must have safeguards against this. A more ambitious goal is for mechanisms to attach confidence scores to individual identities, even relatively low ones. Some mechanisms may achieve this and some may not. Some will be binary: either we believe the account is a unique individual or we don't. Others might report that they are 50% sure, which leaves open the possibility that the individual has two accounts and we are 50% sure of each.
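One possible reading of the binary-versus-confidence distinction treats each verdict as a weight per account. The mapping from verdict to weight below is an assumption for illustration, not something the talk specifies. Under it, a person with two accounts each rated at 50% ends up with the same total weight as one account verified outright.

```rust
/// Hypothetical verdict from a personhood mechanism about one account.
enum Verdict {
    /// Either the account is a unique individual or it is not.
    Binary(bool),
    /// E.g. 0.5 means "50% sure this account is a unique individual".
    Confidence(f64),
}

/// Weight an account carries in person-weighted decisions (assumed mapping).
fn weight(v: &Verdict) -> f64 {
    match v {
        Verdict::Binary(true) => 1.0,
        Verdict::Binary(false) => 0.0,
        // Clamp so a misbehaving mechanism cannot hand out more than one person's weight.
        Verdict::Confidence(p) => (*p).clamp(0.0, 1.0),
    }
}

/// Total weight of a set of accounts, e.g. all accounts one person controls.
fn total_weight(verdicts: &[Verdict]) -> f64 {
    verdicts.iter().map(weight).sum()
}
```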

Of course, all of this must be permissionless and must not be hard to implement. It should go without saying that there must be no common confession mechanism or common key management authority in the system.

What are the benefits of doing this?

So why do this? What are the benefits?

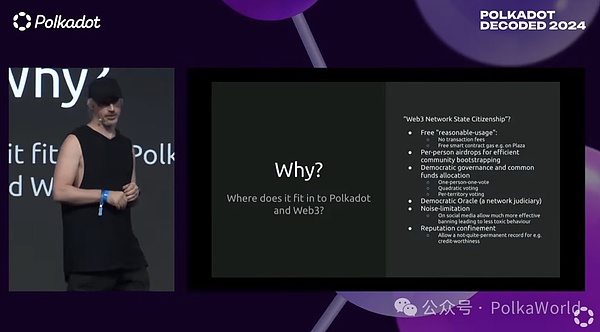

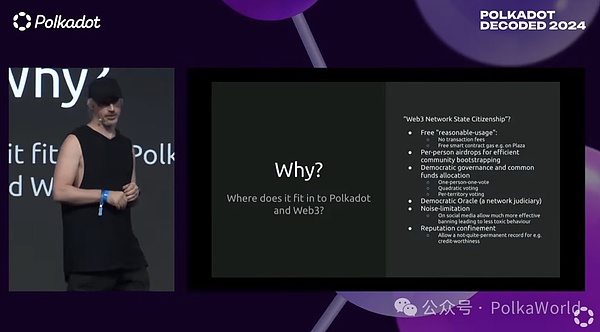

We've talked about some of the ways society uses or relies on individual identity. But how could this be implemented on-chain? We can start to imagine a Polkadot system with no transaction fees, where reasonable use is free. Imagine a "Plaza" chain. If you're not familiar with it, Plaza is basically an enhanced version of Asset Hub with smart contract functionality that can also make use of the staking system.

With a Plaza chain like this, we can imagine not having to pay gas fees: gas is free as long as you stay within reasonable limits. If you run scripts or submit a lot of transactions, you would of course need to pay fees, because that goes beyond an ordinary individual's fair-use allowance. Imagine these systems opening up to the public for free, with communities bootstrapped in a targeted, efficient way through airdrops and other means. We can also imagine more advanced forms of Polkadot governance.
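The fair-use gas model can be sketched as a free quota per person per period, with fees charged beyond it. The policy, its parameters, and the `Alias` type are all hypothetical. The key design choice is that the quota is keyed by a personhood alias rather than an account, so opening more accounts does not buy more free transactions.

```rust
use std::collections::HashMap;

/// Hypothetical per-person alias (see the contextual pseudonyms discussed earlier).
type Alias = u64;

/// Hypothetical fee policy: each verified person gets `free_per_period` free
/// transactions per period of `period` blocks; beyond that, or without any
/// personhood proof, a transaction pays `fee`.
struct FairUsePolicy {
    free_per_period: u32,
    fee: u64,
    period: u64,
    // (alias, period index) -> free transactions already used.
    // A real chain would prune entries from past periods.
    used: HashMap<(Alias, u64), u32>,
}

impl FairUsePolicy {
    fn new(free_per_period: u32, fee: u64, period: u64) -> Self {
        assert!(period > 0, "period must be non-zero");
        Self { free_per_period, fee, period, used: HashMap::new() }
    }

    /// Fee owed for a transaction submitted at block `now`.
    fn charge(&mut self, person: Option<Alias>, now: u64) -> u64 {
        // No proof of personhood: always pay.
        let Some(alias) = person else {
            return self.fee;
        };
        let period_index = now / self.period;
        let used = self.used.entry((alias, period_index)).or_insert(0);
        if *used < self.free_per_period {
            *used += 1;
            0 // within the fair-use allowance
        } else {
            self.fee // heavy or scripted use pays
        }
    }
}
```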

Now, I'm not particularly convinced by the idea of "one person, one vote". It is necessary in some cases to ensure legitimacy, but it usually doesn't lead to particularly good outcomes. We can, however, consider other voting schemes, such as quadratic voting or regional voting. And in certain representative settings, one person, one vote can be very compelling.
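Quadratic voting shows why such schemes depend on Sybil resistance. Under the standard rule, casting v votes costs v² credits. One identity with 100 credits therefore gets 10 votes, but the same credits split evenly across 4 identities buy 4 × 5 = 20 votes. The functions below are an illustrative sketch of that arithmetic, not any particular chain's implementation.

```rust
/// Quadratic voting: casting `votes` votes costs `votes * votes` credits.
fn cost(votes: u64) -> u64 {
    votes * votes
}

/// Most votes one identity can buy with `credits`, i.e. floor(sqrt(credits)).
fn max_votes(credits: u64) -> u64 {
    let mut v = 0;
    while cost(v + 1) <= credits {
        v += 1;
    }
    v
}

/// Total votes if `credits` are split evenly across `k` identities.
fn split_votes(credits: u64, k: u64) -> u64 {
    if k == 0 {
        return 0;
    }
    k * max_votes(credits / k)
}
```

Splitting the budget across more identities always yields at least as many votes, so without a way to tie accounts to unique persons, quadratic voting quietly degrades toward linear, purchasable voting.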

We can also imagine a jury-like oracle system in which parachains and smart contracts use local, secondary oracle systems, perhaps for price predictions or for handling disputes between users. When necessary, they could escalate to a "grand jury" or "supreme court" system. Its members would be randomly selected from a pool of verified individuals to make decisions and help resolve disputes, in exchange for small rewards. Because these members are drawn at random from a large, impartial pool, we can expect this to provide a resilient and reliable way to resolve disputes.
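Randomly selecting a "grand jury" from verified individuals can be sketched with a partial Fisher-Yates shuffle. The xorshift generator only stands in for whatever on-chain randomness source a real system would use, which is an assumption here. It is not secure, and the `%` step introduces a slight modulo bias that a production design would remove.

```rust
/// Minimal xorshift64 PRNG. NOT secure: a stand-in for on-chain randomness.
struct XorShift64(u64);

impl XorShift64 {
    fn next(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }
}

/// Select `n` distinct jurors from `pool` (assumed to be verified individuals)
/// with a partial Fisher-Yates shuffle driven by `seed`.
fn select_jury(pool: &[u64], n: usize, seed: u64) -> Vec<u64> {
    assert!(n <= pool.len(), "not enough individuals in the pool");
    let mut people = pool.to_vec();
    let mut rng = XorShift64(seed.max(1)); // xorshift state must be non-zero
    for i in 0..n {
        // Pick uniformly (up to modulo bias) from the not-yet-chosen tail.
        let j = i + (rng.next() % (people.len() - i) as u64) as usize;
        people.swap(i, j);
    }
    people.truncate(n);
    people
}
```

Selection only makes sense over persons rather than accounts. If the pool were accounts, an attacker with many accounts would be proportionally over-represented on every jury.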

You can imagine noise-limiting systems, especially in decentralized social media integrations, that help manage spam and bad behavior. In DeFi, we can imagine reputation-limited systems similar to credit scores, but perhaps focused more narrowly on whether you have been found to miss payments. Such a system could then offer services along the lines of a freemium model.
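The credit-score-like idea can be sketched as a reputation ledger keyed by personhood alias, so that a person cannot shed a record of missed payments by switching accounts. The halving rule and all names are hypothetical choices for illustration.

```rust
use std::collections::HashMap;

/// Hypothetical per-person alias, not an account.
type Alias = u64;

/// Reputation ledger keyed by person, so a fresh account does not reset it.
#[derive(Default)]
struct Reputation {
    late_payments: HashMap<Alias, u32>,
}

impl Reputation {
    fn record_late_payment(&mut self, who: Alias) {
        *self.late_payments.entry(who).or_insert(0) += 1;
    }

    /// Free-tier credit limit: halves with each recorded late payment
    /// (an arbitrary rule for illustration), bottoming out at zero.
    fn credit_limit(&self, who: Alias, base: u64) -> u64 {
        let strikes = self.late_payments.get(&who).copied().unwrap_or(0);
        base.checked_shr(strikes).unwrap_or(0)
    }
}
```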

Okay, that's the first part of this talk, I hope it helps you.

JinseFinance
