Author: LINDABELL Source: ChainFeeds

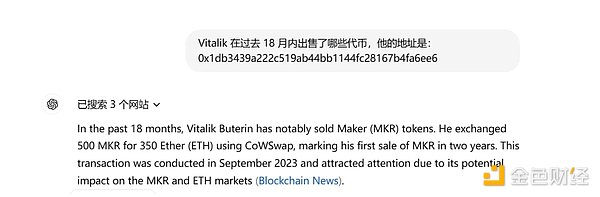

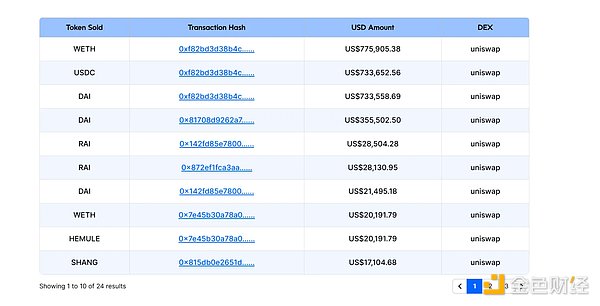

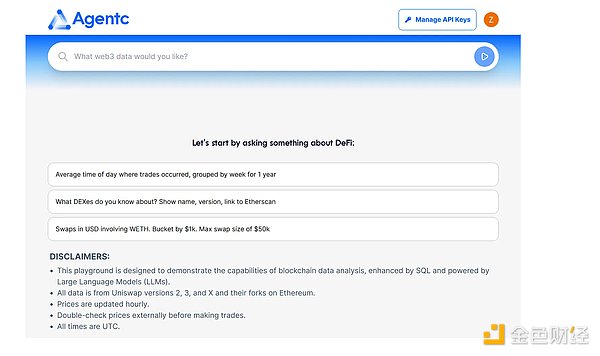

In 2022, OpenAI launched ChatGPT, powered by the GPT-3.5 model, setting off successive waves of AI narratives. ChatGPT handles most questions well, but its performance is limited when a task requires specific domain knowledge or real-time data. Asked about Vitalik Buterin's token trading records over the past 18 months, for example, it cannot provide reliable, detailed information. To address this, Semiotic Labs, one of The Graph's core development teams, combined The Graph's indexing software stack with OpenAI to launch Agentc, a project that offers users cryptocurrency market trend analysis and transaction data queries.

Asked the same question about Vitalik Buterin's token transactions over the past 18 months, Agentc gave a more detailed answer. The Graph's AI plans go further than this, however. In its white paper "The Graph as AI Infrastructure", The Graph states that its goal is not to launch one specific application but to use its strengths as a decentralized data indexing protocol to give developers the tools to build Web3-native AI applications. To support this goal, Semiotic Labs will also open source Agentc's code base, so that developers can create AI dapps with functions similar to Agentc's, such as NFT market trend analysis agents and DeFi trading assistant agents.

The Graph Decentralized AI Roadmap

The Graph was launched in July 2018 and is a decentralized protocol for indexing and querying blockchain data. Through this protocol, developers can use open APIs to create and publish data indexes called subgraphs, enabling applications to efficiently retrieve on-chain data. To date, The Graph has supported more than 50 chains, hosted more than 75,000 projects, and processed more than 1.26 trillion queries.
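Subgraphs are queried with GraphQL, so the request an application sends is just a small JSON body. The following is a minimal sketch of building such a body; the entity name `tokens` and the fields `id` and `symbol` are hypothetical and depend on the schema of whichever subgraph is being queried.

```python
import json


def build_subgraph_request(entity, fields, first=5):
    """Build the JSON body for a GraphQL POST to a subgraph endpoint."""
    # GraphQL selection: the entity collection, a page size, and the fields wanted.
    query = "{ %s(first: %d) { %s } }" % (entity, first, " ".join(fields))
    return json.dumps({"query": query})


# Hypothetical entity and fields, for illustration only.
body = build_subgraph_request("tokens", ["id", "symbol"], first=3)
print(body)
```

The resulting string would be sent as the body of an HTTP POST to the subgraph's query URL, which is not shown here because it differs per subgraph.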

The Graph's ability to handle this volume of data depends on the core teams behind it, including Edge & Node, Streamingfast, Semiotic, The Guild, GraphOps, Messari and Pinax. Among them, Streamingfast mainly provides cross-chain architecture technology for blockchain data streams, while Semiotic Labs focuses on applying AI and cryptography to The Graph. The Guild, GraphOps, Messari and Pinax each focus on areas such as GraphQL development, indexing services, subgraph development and data stream solutions.

The Graph's move into AI is not new. As early as March last year, The Graph Blog published an article outlining the potential of using its data indexing capabilities for artificial intelligence applications. In December last year, The Graph released a new roadmap called "New Era", which planned to add AI-assisted queries powered by large language models. With the recent release of the white paper, its AI roadmap has become clearer. The white paper introduces two AI services, the Inference Service and the Agent Service, which allow developers to integrate AI capabilities directly into an application's front end, with the entire process supported by The Graph.

Inference Service: Support for multiple open source AI models

In traditional inference services, models make predictions on input data through centralized cloud computing resources. For example, when you ask ChatGPT a question, it will infer and return the answer. However, this centralized approach not only increases costs, but also poses censorship risks. The Graph hopes to solve this problem by building a decentralized model hosting market, giving dApp developers more flexibility in deploying and hosting AI models.

The white paper gives an example: an application that helps Farcaster users gauge whether their posts will receive many likes. First, The Graph's subgraph data service is used to index the number of comments and likes on Farcaster posts. Next, a neural network is trained to predict whether a new Farcaster post will be liked, and the network is deployed to The Graph's Inference Service. The resulting dApp can help users write posts that get more likes.
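The training step in that workflow can be illustrated with a toy model. The sketch below swaps the white paper's neural network for a single-layer logistic regression (the simplest possible stand-in), and every number in it is made-up illustration data, not Farcaster data. The two features, a scaled post length and a "has image" flag, are assumptions chosen only to make the example concrete. Deployment to the Inference Service is not shown, since its interface is not described in the source.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(rows, labels, lr=0.1, epochs=2000):
    """Fit logistic regression with plain stochastic gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of the log loss with respect to the logit
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def predict(model, x):
    """Return the estimated probability that a post gets liked."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Toy rows: [scaled post length, has_image]; label 1 means "was liked".
rows = [[0.2, 0], [0.3, 0], [0.8, 1], [0.9, 1], [0.7, 1], [0.1, 0]]
labels = [0, 0, 1, 1, 1, 0]

model = train(rows, labels)
likely = predict(model, [0.85, 1])
unlikely = predict(model, [0.15, 0])
print(likely > unlikely)
```

In a real pipeline the rows would come from the indexed subgraph data rather than a hard-coded list.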

This approach enables developers to easily leverage The Graph's infrastructure, host pre-trained models on The Graph network, and integrate them into applications through API interfaces, so that users can directly experience these features when using dApps.

To give developers more choice and flexibility, The Graph's Inference Service supports most popular existing models. The white paper states: "In the MVP stage, The Graph's Inference Service will support a set of selected popular open source AI models, including Stable Diffusion, Stable Video Diffusion, LLaMA, Mixtral, Grok and Whisper, etc." In the future, any open model that has been sufficiently tested and is operated by indexers can be deployed in The Graph's Inference Service. In addition, to reduce the technical complexity of deploying AI models, The Graph provides a user-friendly interface that simplifies the process, allowing developers to upload and manage their AI models without worrying about infrastructure maintenance.

To further improve a model's performance in specific application scenarios, The Graph also supports models fine-tuned on specific data sets. Note, however, that fine-tuning is usually not performed on The Graph itself: developers fine-tune a model externally and then deploy it using The Graph's Inference Service. To encourage developers to make their fine-tuned models public, The Graph is developing incentive mechanisms, such as a reasonable split of query fees between model creators and the indexers that serve the models.

To verify that inference tasks were executed correctly, The Graph describes several methods: trusted authority, M-of-N consensus, interactive fraud proofs, and zk-SNARKs. Each of the four has its own advantages and disadvantages. Trusted authority relies on trusted entities; M-of-N consensus requires multiple indexers to verify a result, which makes cheating harder but also raises computation and coordination costs; interactive fraud proofs are more secure but unsuitable for applications that need fast responses; and zk-SNARKs are more complex to implement and unsuitable for large models.
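Of these methods, M-of-N consensus is the easiest to illustrate. The sketch below is not The Graph's implementation; it only shows the basic idea, assuming each of N indexers returns a comparable result (for instance, a hash of its inference output) and that a result is accepted once at least M of them agree. The function name and the sample values are hypothetical.

```python
from collections import Counter


def m_of_n_verify(responses, m):
    """Return the output that at least m responses agree on, or None."""
    if not responses:
        return None
    # Count identical outputs and take the most common one.
    output, votes = Counter(responses).most_common(1)[0]
    return output if votes >= m else None


# Three hypothetical indexers, two of which report the same output hash.
print(m_of_n_verify(["0xabc", "0xabc", "0xdef"], m=2))
# No output reaches the threshold, so nothing is accepted.
print(m_of_n_verify(["0xabc", "0xdef", "0x123"], m=2))
```

The trade-off described above is visible here: every extra indexer in `responses` is another full inference run, so raising N or M buys security at the price of computation and coordination.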

The Graph believes that developers and users should be able to choose a security level that fits their needs. It therefore plans to support multiple verification methods in its Inference Service to suit different security requirements and application scenarios. In situations involving financial transactions or important business logic, for example, a more secure verification method such as zk-SNARKs or M-of-N consensus may be necessary. Low-risk or entertainment applications can choose cheaper and simpler verification methods, such as trusted authorities or interactive fraud proofs. The Graph also plans to explore privacy-enhancing technologies to better protect model and user privacy.

Agent Service: Helping developers build autonomous AI-driven applications

Compared with the Inference Service, which mainly runs trained AI models for inference, the Agent Service is more complex: multiple components must work together for agents to perform a series of complex, automated tasks. The value proposition of The Graph's Agent Service is to integrate the construction, hosting, and execution of agents into The Graph, with the service delivered by the indexer network.

Specifically, The Graph will provide a decentralized network to support the construction and hosting of Agents. When the Agent is deployed on The Graph network, The Graph Indexer will provide the necessary execution support, including indexing data, responding to on-chain events and other interactive requests.

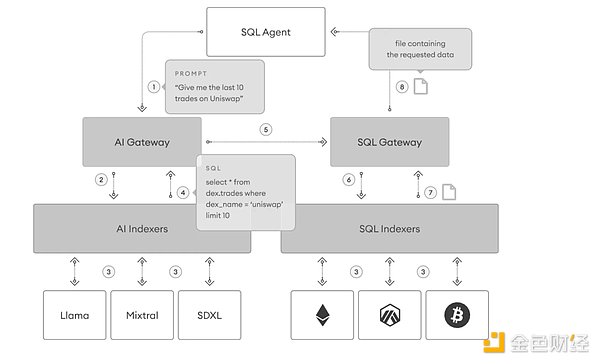

As mentioned above, Semiotic Labs, one of The Graph's core development teams, has launched an early experimental agent product, Agentc, which combines The Graph's indexing software stack with OpenAI. Its main function is to convert natural language input into SQL queries, so that users can query real-time blockchain data directly and see the results in an easy-to-understand form. Simply put, Agentc focuses on convenient cryptocurrency market trend analysis and transaction data queries. All of its data comes from Uniswap V2, Uniswap V3, Uniswap X and their forks on Ethereum, and prices are updated every hour.
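The natural-language-to-SQL flow described above can be sketched in a few lines. This is not Agentc's code: the `swaps` table, its columns, the prompt wording, and the `fake_llm` stand-in are all hypothetical, and an in-memory SQLite database takes the place of the real indexed data. The point is only the shape of the pipeline: describe the schema to a model, receive SQL back, check it, run it.

```python
import sqlite3

# Hypothetical schema, for illustration only.
SCHEMA = "CREATE TABLE swaps (token TEXT, amount_usd REAL, ts INTEGER)"


def build_prompt(question):
    """Give the model the schema and the user's question."""
    return f"Schema:\n{SCHEMA}\nWrite one SQLite SELECT statement answering: {question}"


def answer(question, llm, conn):
    """Ask the model for SQL, refuse anything but a SELECT, then run it."""
    sql = llm(build_prompt(question)).strip()
    if not sql.lower().startswith("select"):
        raise ValueError("refusing to run a non-SELECT statement")
    return conn.execute(sql).fetchall()


def fake_llm(prompt):
    # Stand-in for a real model call; always returns the same query.
    return "SELECT token, SUM(amount_usd) FROM swaps GROUP BY token ORDER BY token"


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO swaps VALUES (?, ?, ?)",
    [("WETH", 100.0, 1), ("WETH", 50.0, 2), ("UNI", 25.0, 3)],
)

rows = answer("What is the total swap volume per token?", fake_llm, conn)
print(rows)
```

Because the model's output is executed directly, even a sketch like this benefits from the SELECT-only guard; a production system would need stricter validation.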

The Graph also stated that the LLM it currently uses has an accuracy rate of only 63.41%, so some responses are incorrect. To solve this problem, The Graph is developing a new large language model called KGLLM (Knowledge Graph-enabled Large Language Model).

KGLLM can significantly reduce the probability of generating false information by using the structured knowledge graph data provided by Geo. Each statement in the Geo system is supported by on-chain timestamps and voting verification. After integrating Geo's knowledge graph, agents can be applied to a variety of scenarios, including medical regulations, political developments, market analysis, etc., thereby improving the diversity and accuracy of agent services. For example, KGLLM can use political data to provide policy change recommendations for decentralized autonomous organizations (DAOs) and ensure that they are based on current and accurate information.

KGLLM's advantages also include:

Use of structured data: KGLLM uses a structured external knowledge base. Information in the knowledge graph is modeled as a graph, which makes the relationships between data points explicit and makes querying and understanding the data more intuitive;

Relational data processing capabilities: KGLLM is particularly suited to relational data. For example, it can understand relationships between people, or between people and events. It uses a graph traversal algorithm to find relevant information by hopping across multiple nodes in the knowledge graph (similar to moving around a map), which lets it locate the information most relevant to a question;

Efficient information retrieval and generation: The relationships extracted through graph traversal are converted into natural-language prompts that the model can understand. With these clear instructions, the KGLLM model can generate more accurate and relevant answers.
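The traversal-then-prompt idea in the list above can be sketched as follows. This is an illustration, not KGLLM itself: the triples, entity names, and prompt layout are invented for the example, and a plain breadth-first search stands in for whatever traversal algorithm The Graph actually uses.

```python
from collections import deque

# Hypothetical (subject, relation, object) triples standing in for a knowledge graph.
TRIPLES = [
    ("Alice", "founded", "ExampleDAO"),
    ("ExampleDAO", "voted_on", "Proposal 7"),
    ("Proposal 7", "concerns", "treasury policy"),
    ("Bob", "member_of", "OtherDAO"),
]


def multi_hop(triples, start, max_hops=2):
    """Collect every fact reachable from `start` within `max_hops` hops (BFS)."""
    adjacency = {}
    for subj, rel, obj in triples:
        adjacency.setdefault(subj, []).append((rel, obj))
    found, seen = [], {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand nodes at the hop limit
        for rel, obj in adjacency.get(node, []):
            found.append((node, rel, obj))
            if obj not in seen:
                seen.add(obj)
                queue.append((obj, depth + 1))
    return found


def to_prompt(facts, question):
    """Render the retrieved facts as a natural-language prompt."""
    lines = [f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in facts]
    return "Known facts:\n" + "\n".join(lines) + f"\nQuestion: {question}"


facts = multi_hop(TRIPLES, "Alice", max_hops=2)
prompt = to_prompt(facts, "What has Alice's DAO voted on?")
print(prompt)
```

Only facts connected to the starting entity end up in the prompt, which is how grounding a model in a knowledge graph can cut down on unsupported statements.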

Prospects

As the "Google of Web3", The Graph uses its strengths to fill the data gap that current AI services face, and it simplifies project development for developers by introducing AI services. As more AI applications are developed and used, the user experience is expected to improve further. The Graph's development teams will continue to explore ways of combining artificial intelligence with Web3. In addition, other teams in its ecosystem, such as Playgrounds Analytics and DappLooker, are also designing solutions related to agent services.
