Source: Machine Heart

On day three of OpenAI's 12-day release event, the blockbuster announcement is finally here!

Just as commenters predicted before the livestream, the official version of the video generation model Sora has finally arrived!

It has been nearly 10 months since Sora was first unveiled on February 16 this year.

Now, netizens can finally try Sora's powerful video generation capabilities for themselves!

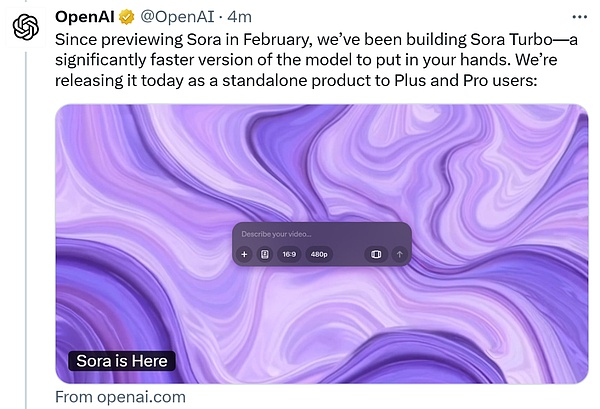

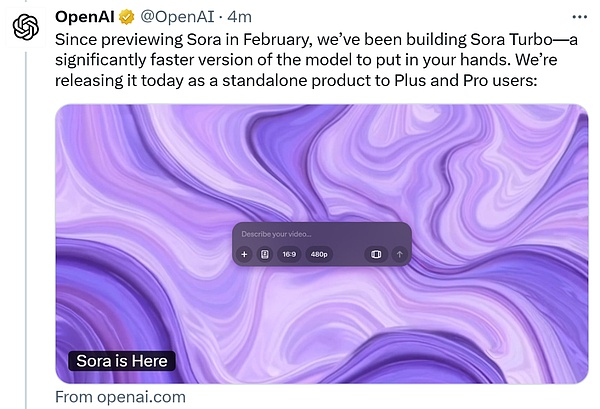

OpenAI has also developed a new version of the model, Sora Turbo, which is significantly faster than the model previewed in February. Starting today, it is available as a standalone product to ChatGPT Plus and Pro users.

According to today's livestream, Sora users can generate videos at up to 1080p resolution and up to 20 seconds long, in widescreen, vertical, or square formats. Users can also bring their own assets to extend, remix, and blend, or generate entirely new content from text. OpenAI has built a new interface that makes it easier to prompt Sora with text, images, and videos, and its storyboard tool lets users precisely specify the input for each frame.

We can start by looking at a few examples of generated videos:

Prompt: The footage is hazy, with high-contrast colors that capture the feel of a low-visibility shot and convey immediacy and chaos. The scene shows shaky footage from the perspective of a sailor aboard a 17th-century pirate ship. The horizon lurches violently as waves crash against the wooden hull, making it hard to make out details. Suddenly, a giant sea monster rises out of the raging sea. Its huge, slick tentacles reach out menacingly, and its slimy appendages wrap around the ship with terrifying force. The view shifts dramatically as the sailors scramble to confront the creature. The atmosphere is tense, and the groaning of the ship and the roar of the sea can be heard amid the chaos.

Prompt: Rockefeller Center is full of golden retrievers! Everywhere you look, there are golden retrievers. It's a winter wonderland in New York at night, complete with a giant Christmas tree. Taxis and other New York elements can be seen in the background.

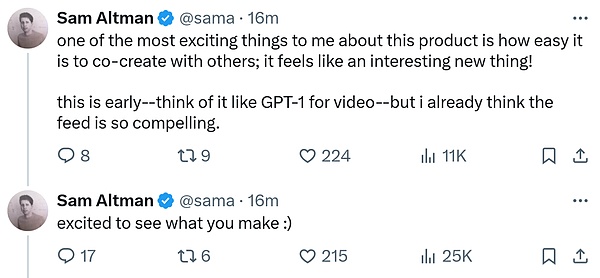

Sam Altman said that what excites him most is how easy it is to co-create with others, which feels like something genuinely new and fun. He suggested thinking of Sora as the GPT-1 of video.

OpenAI research scientist Noam Brown said that Sora is the most intuitive demonstration of the power of scale.

Reacting to the release, some netizens called Sora the best Christmas present, while others said it will be a game changer.

Bring your imagination to life with text, images, or videos

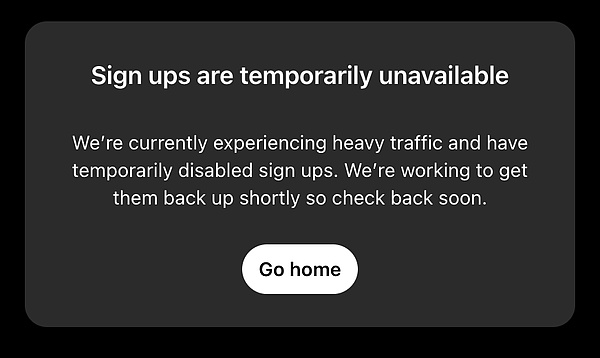

Excited, we at Synced wanted to try Sora too! Unfortunately, so many people were trying it at once that we couldn't log in.

Try it at: https://sora.com/onboarding

For now, here are the capabilities OpenAI showcased in the official release.

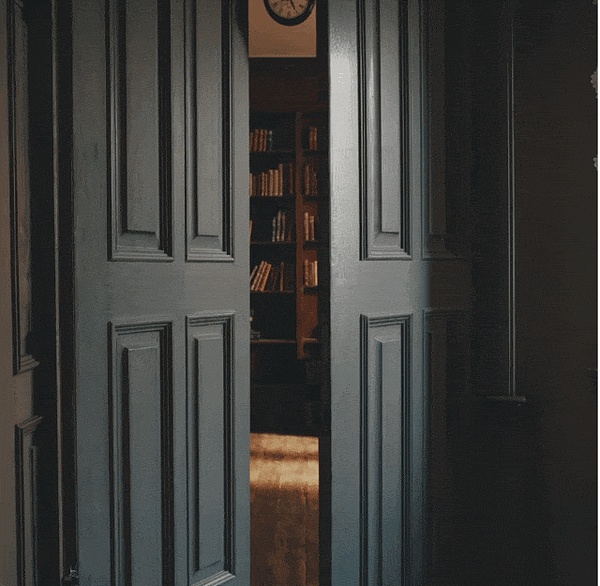

Remix: Replace, remove, or reimagine elements in your video

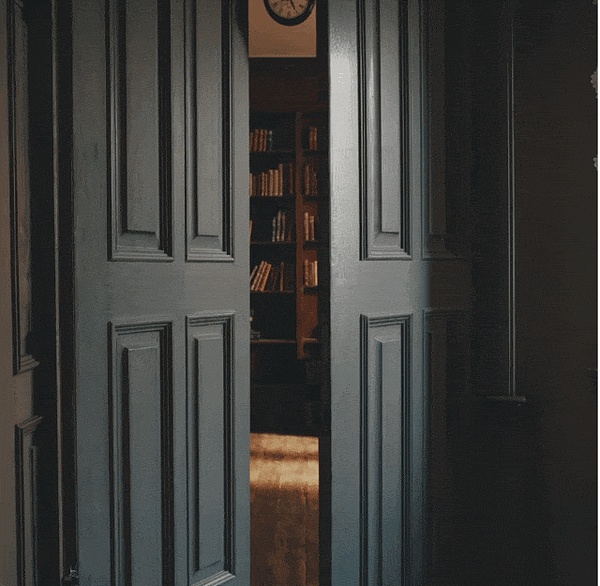

Open the door to the library

Replace the door with a French door

The scene outside the door is replaced with a lunar landscape


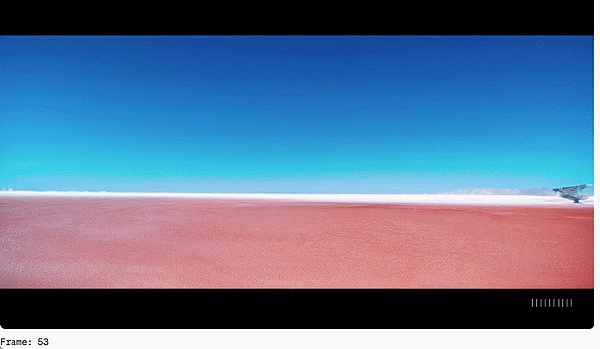

Re-cut: Find and isolate the best frames, then extend them in either direction to complete the scene

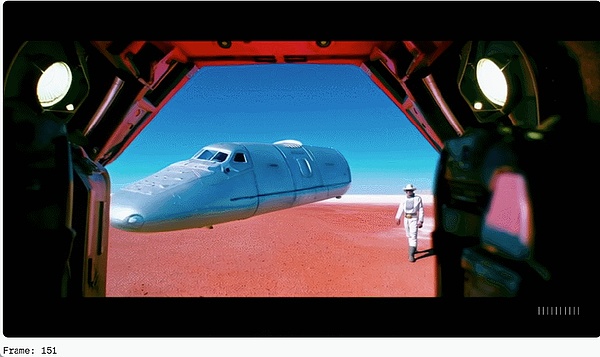

Storyboard: Organize and edit unique video sequences on a timeline

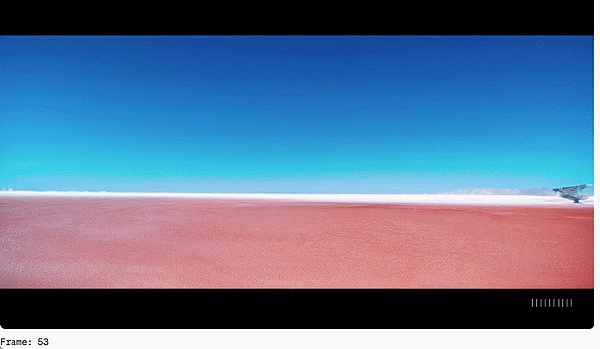

In this example, the first 114 frames of the video are described as "a vast red landscape with a spaceship docked in the distance."

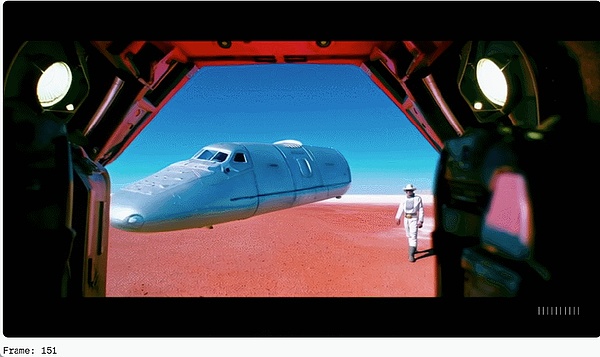

Next, frames 114 to 324 switch to: "Looking out from inside the spaceship, a space cowboy stands in the center of the frame."

Finally, the last segment is described as "a close-up of the astronaut's eyes, framed by a mask made of knitted fabric."

Loop: Trim and create seamlessly repeating videos

Blend: Merge two videos into a seamless clip

Style presets: Use "Presets" to create and share styles that inspire your imagination

The rest is up to netizens' imaginations: many more impressive Sora videos are surely on the way.

The official Sora system card

When Sora was first unveiled in February, OpenAI published a technical report on it.

OpenAI believes that scaling up video generation models is a promising path toward building general-purpose simulators of the physical world.

Today, with the official release of Sora, OpenAI also released the Sora System Card. Interested developers can dig into the technical details.

Link: https://openai.com/index/sora-system-card/

Sora is OpenAI's video generation model, designed to take text, image, and video inputs and generate new videos as outputs. Users can create videos up to 1080p resolution (up to 20 seconds) in a variety of formats.

Sora builds on OpenAI's work on the DALL·E and GPT models and aims to give people tools for creative expression.

Sora is a diffusion model: it generates a video by starting from something that looks like static noise and gradually transforming it, removing the noise over many steps. By giving the model foresight of many frames at a time, Sora addresses the challenging problem of keeping a subject consistent even when it temporarily leaves the frame. Like the GPT models, Sora uses a transformer architecture, which gives it excellent scaling performance.
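The "start from static noise, remove it step by step" idea can be illustrated with a generic diffusion sampler. The sketch below is not Sora's sampler: it assumes a cosine noise schedule and a deterministic DDIM-style update, and it replaces the trained transformer with a hypothetical "oracle" denoiser that already knows the target clip, purely so the loop can run end to end. The one point it mirrors from the paragraph above is that every denoising call sees all frames of the clip at once.

```python
import numpy as np


def cosine_alpha_bar(num_steps: int) -> np.ndarray:
    """Assumed cosine schedule: alpha_bar[0] == 1 (clean), alpha_bar[-1] ~ 0 (pure noise)."""
    s = 0.008
    t = np.linspace(0.0, 1.0, num_steps + 1)
    f = np.cos((t + s) / (1 + s) * np.pi / 2) ** 2
    return np.clip(f / f[0], 1e-5, 1.0)


def make_oracle_denoiser(clean_video: np.ndarray):
    """Hypothetical stand-in for a trained network. It predicts the noise in x_t
    exactly because it is handed the clean video; a real model must learn this."""
    def predict_noise(x_t: np.ndarray, alpha_bar_t: float) -> np.ndarray:
        return (x_t - np.sqrt(alpha_bar_t) * clean_video) / np.sqrt(1.0 - alpha_bar_t)
    return predict_noise


def sample_video(predict_noise, shape, num_steps: int = 50, seed: int = 0) -> np.ndarray:
    """Deterministic DDIM-style sampling over a whole (frames, H, W, C) array."""
    rng = np.random.default_rng(seed)
    alpha_bar = cosine_alpha_bar(num_steps)
    x = rng.standard_normal(shape)  # every frame starts as pure static noise
    for t in range(num_steps, 0, -1):
        ab_t, ab_prev = alpha_bar[t], alpha_bar[t - 1]
        eps = predict_noise(x, ab_t)  # one call sees all frames jointly
        x0_pred = (x - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t)
        # Move to the slightly less noisy level t-1.
        x = np.sqrt(ab_prev) * x0_pred + np.sqrt(1.0 - ab_prev) * eps
    return x


frames, height, width, channels = 8, 16, 16, 3
target = np.random.default_rng(1).uniform(-1, 1, size=(frames, height, width, channels))
video = sample_video(make_oracle_denoiser(target), target.shape)
print(video.shape)
print(np.allclose(video, target))
```

Because the toy denoiser is perfect, the loop recovers the target clip exactly; with a learned model, each step only approximately removes noise, which is why many steps are used.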

Sora uses the recaptioning technique from DALL·E 3, which generates highly descriptive captions for the visual training data. As a result, Sora follows the user's text instructions more faithfully in the generated video.

Beyond generating videos from text instructions alone, the model can take an existing still image and generate a video from it, animating the image's contents accurately and with attention to small details. It can also take an existing video and extend it or fill in missing frames. Sora serves as a foundation for models that can understand and simulate the real world, a capability OpenAI believes will be an important milestone on the road to AGI.

As OpenAI described in its February technical report, Sora draws inspiration from large language models, which acquire generalist capabilities by training on internet-scale data. Part of the LLM paradigm's success comes from its use of tokens, which elegantly unify diverse modalities of text, such as code, math, and various natural languages.

In Sora, OpenAI considered how models that generate visual data could inherit the benefits of this approach. Large language models have text tokens, while Sora has visual patches. Prior research has shown that patches are effective representations for models of visual data. OpenAI finds patches to be scalable and effective representations for models trained to generate various types of videos and images.

At a high level, OpenAI converts videos to patches by first compressing them into a lower-dimensional latent space and then decomposing the representation into spatiotemporal patches.
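The two-stage process (compress, then cut into spacetime patches) can be sketched with array reshapes. This is an illustrative assumption, not OpenAI's code: the real compression network is learned, whereas `toy_compress` here is a hypothetical stand-in that simply average-pools each frame, and the patch sizes `pt`, `ph`, `pw` are arbitrary choices. The output plays the role that a token sequence plays for an LLM.

```python
import numpy as np


def toy_compress(video: np.ndarray, spatial_factor: int = 4) -> np.ndarray:
    """Hypothetical stand-in for a learned video encoder: average-pools each frame.
    Shape (T, H, W, C) -> (T, H/f, W/f, C), i.e. a lower-dimensional 'latent'."""
    t, h, w, c = video.shape
    f = spatial_factor
    assert h % f == 0 and w % f == 0, "frame size must be divisible by the factor"
    return video.reshape(t, h // f, f, w // f, f, c).mean(axis=(2, 4))


def to_spacetime_patches(latent: np.ndarray, pt: int = 2, ph: int = 2, pw: int = 2) -> np.ndarray:
    """Cut a latent video (T, H, W, C) into non-overlapping pt x ph x pw blocks
    spanning time and space, and flatten each block into one token vector.
    Returns an array of shape (num_patches, pt * ph * pw * C)."""
    t, h, w, c = latent.shape
    assert t % pt == 0 and h % ph == 0 and w % pw == 0, "latent must tile evenly"
    x = latent.reshape(t // pt, pt, h // ph, ph, w // pw, pw, c)
    # Bring the block-index axes to the front and the within-block axes to the back.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, pt * ph * pw * c)


video = np.random.default_rng(0).standard_normal((16, 64, 64, 3))  # 16 RGB frames
latent = toy_compress(video)           # (16, 16, 16, 3)
tokens = to_spacetime_patches(latent)  # (8 * 8 * 8, 2 * 2 * 2 * 3) = (512, 24)
```

Each row of `tokens` covers a small region of the frame across a few consecutive frames, which is what lets one sequence model handle videos and images (a single-frame video) of varying sizes and durations.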

Sora has been trained on a variety of datasets, including public data, proprietary data obtained through partners, and custom datasets developed in-house:

Publicly available data. This data is mainly collected from industry-standard machine learning datasets and web crawls.

Proprietary data from data partners. OpenAI forms partnerships to access non-public data. For example, it partnered with Shutterstock Pond5 on building and delivering AI-generated images. OpenAI also commissions the creation of datasets that suit its needs.

Human data. Feedback from AI trainers, red teamers, and employees.

For more details, readers can check out the system card introduction.

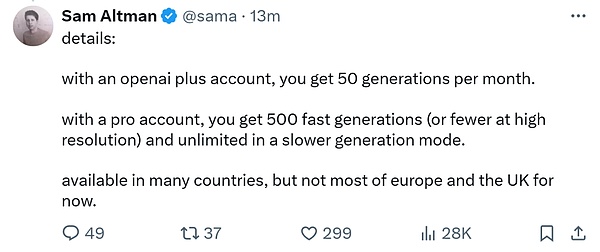

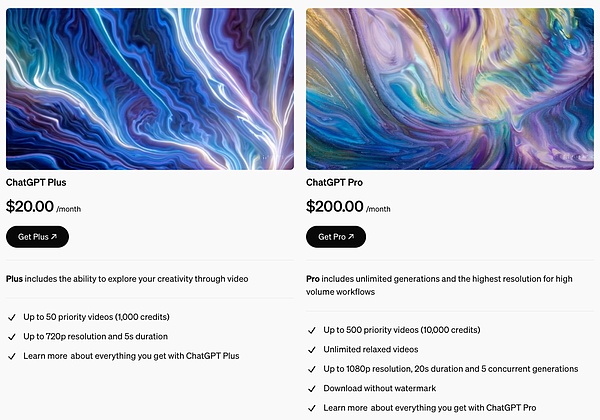

Pricing and plans

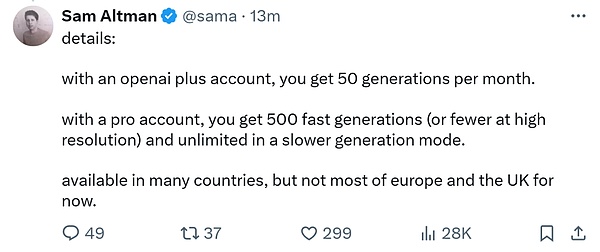

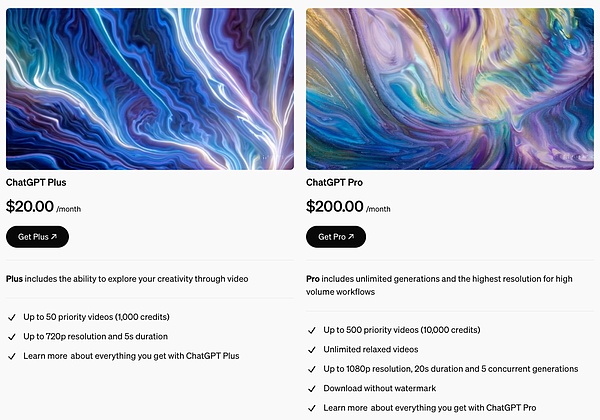

Along with the official release, OpenAI also announced pricing, and it is not cheap:

The video generation benefits that ChatGPT Plus users who pay $20 a month can enjoy include:

The video generation benefits that ChatGPT Pro users who pay $200 a month can enjoy include:

Up to 500 priority videos (10,000 credits)

Unlimited relaxed videos

Resolution up to 1080p, durations up to 20 seconds, and up to 5 concurrent generations

Watermark-free downloads

After all, we have waited so long for this. Will you be signing up?

JinseFinance
