Author: Wu Yue, Geek web3

As blockchain technology iterates rapidly, performance optimization has become a key issue. Ethereum's roadmap is now clearly rollup-centric, and the EVM's serial execution of transactions is a constraint that cannot meet the high-concurrency computing scenarios of the future.

In our previous article, 《From Reddio to the Optimization Road of Parallel EVM》, we briefly outlined Reddio's parallel EVM design. In this article, we take a deeper look at its technical solution and at how it can be combined with AI.

Since Reddio's technical solution builds on CuEVM, a project that uses GPUs to speed up EVM execution, we will start with CuEVM.

CUDA Overview

CuEVM is a project that uses GPUs to accelerate the EVM. It translates Ethereum EVM opcodes into CUDA kernels that execute in parallel on NVIDIA GPUs. This uses the GPU's parallel computing power to execute EVM instructions more efficiently. NVIDIA GPU users have probably heard of CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model developed by NVIDIA. It lets developers use the GPU's parallel computing power for general-purpose computing (such as crypto mining and ZK computation), not just graphics.

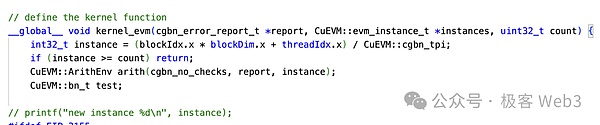

As a parallel computing framework, CUDA is essentially an extension of C/C++, so any low-level programmer familiar with C/C++ can pick it up quickly. A central concept in CUDA is the kernel, which is also a C++ function (declared with the `__global__` qualifier).

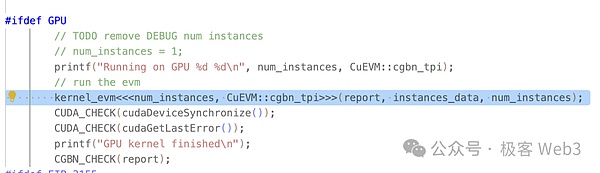

Unlike a regular C++ function, which executes once per call, a kernel launched with the execution configuration syntax <<<...>>> is executed N times in parallel by N different CUDA threads.
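The C++ sketch below illustrates this execution model on the CPU. It runs one kernel body once per thread, and each thread handles the array element that matches its id. The names `add_kernel` and `launch_add` are hypothetical. In real CUDA the kernel would carry the `__global__` qualifier, read its id from `threadIdx.x`, and be launched as `add_kernel<<<1, n>>>(...)`. The GPU, not `std::thread`, would schedule the threads.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// CPU stand-in for a CUDA kernel: the same body runs once per thread,
// and each invocation uses its thread id to pick the element it owns.
void add_kernel(std::size_t thread_id, const std::vector<float>& a,
                const std::vector<float>& b, std::vector<float>& c) {
    c[thread_id] = a[thread_id] + b[thread_id];
}

// Hypothetical helper mimicking add_kernel<<<1, n>>>(a, b, c):
// start n threads that all execute the same kernel with different ids.
// `c` must already hold n elements; each thread writes a distinct slot.
void launch_add(std::size_t n, const std::vector<float>& a,
                const std::vector<float>& b, std::vector<float>& c) {
    std::vector<std::thread> threads;
    threads.reserve(n);
    for (std::size_t id = 0; id < n; ++id) {
        threads.emplace_back(add_kernel, id, std::cref(a), std::cref(b), std::ref(c));
    }
    for (auto& t : threads) t.join();  // wait for every "CUDA thread" to finish
}
```

Spawning one OS thread per element only makes sense for tiny n. The point is the shape of the code: the loop over elements disappears, and the thread id takes its place.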

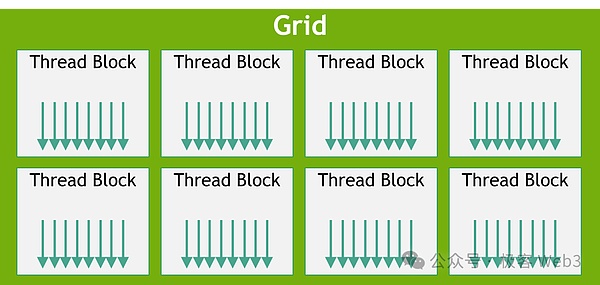

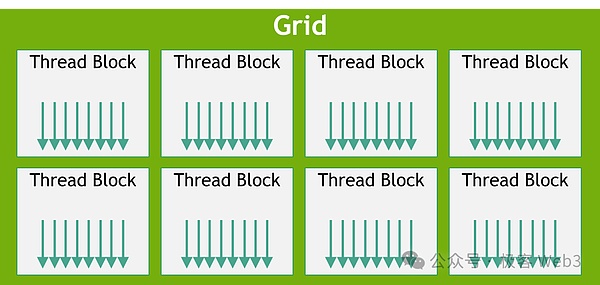

Each CUDA thread is given its own thread ID. A thread hierarchy groups threads into blocks and blocks into a grid, which makes large numbers of parallel threads manageable. NVIDIA's nvcc compiler then compiles CUDA code into programs that run on the GPU.
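The sketch below shows how a kernel typically turns the block/thread hierarchy into an element index, again simulated sequentially in C++. `Dim`, `square_kernel` and `launch_square` are illustrative names. In CUDA, `blockIdx`, `blockDim` and `threadIdx` are built-in variables, and the launch would be `square_kernel<<<grid, block>>>(...)`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Plain struct standing in for CUDA's built-in dim3 values (1-D only here).
struct Dim { std::size_t x; };

// Global thread id, computed the way CUDA kernels conventionally do it.
std::size_t global_id(Dim blockIdx, Dim blockDim, Dim threadIdx) {
    return blockIdx.x * blockDim.x + threadIdx.x;
}

// Kernel body: square one element. Threads past the end do nothing,
// because the grid is rounded up to a whole number of blocks.
void square_kernel(Dim blockIdx, Dim blockDim, Dim threadIdx, std::vector<int>& data) {
    std::size_t i = global_id(blockIdx, blockDim, threadIdx);
    if (i < data.size()) data[i] *= data[i];
}

// Sequential stand-in for square_kernel<<<grid, block>>>(data).
void launch_square(std::size_t block, std::vector<int>& data) {
    std::size_t grid = (data.size() + block - 1) / block;  // ceil(n / block)
    for (std::size_t b = 0; b < grid; ++b)
        for (std::size_t t = 0; t < block; ++t)
            square_kernel(Dim{b}, Dim{block}, Dim{t}, data);
}
```

The bounds check matters because the grid is rounded up. With 5 elements and blocks of 2 threads, 3 blocks (6 threads) are launched, and the last thread must do nothing.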

Basic workflow of CuEVM

With these basic CUDA concepts in hand, we can look at CuEVM's workflow.

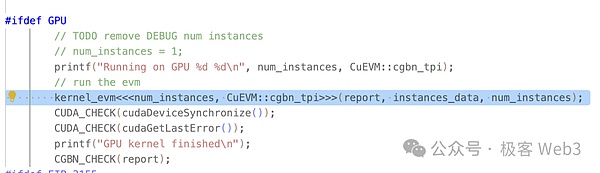

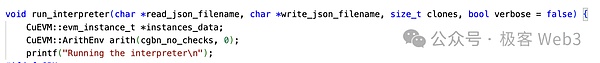

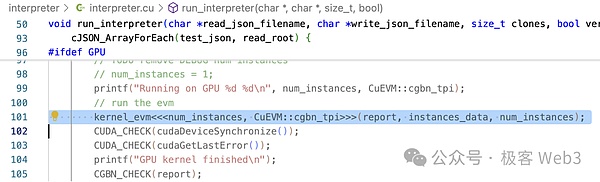

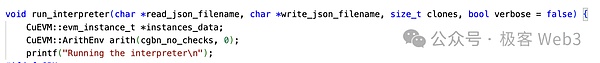

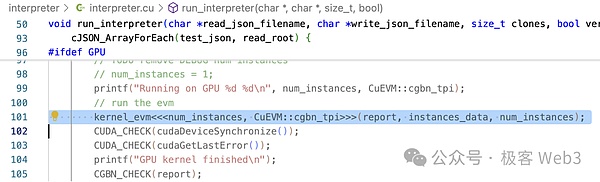

The main entry point of CuEVM is run_interpreter, which takes the transactions to be processed in parallel as JSON files. As the project's test cases show, the input is standard EVM content, so developers do not need to preprocess or translate it.

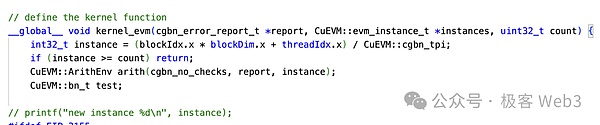

Inside run_interpreter(), the kernel function kernel_evm() is launched with CUDA's <<<...>>> syntax. As described above, this means the kernel runs in parallel on the GPU.

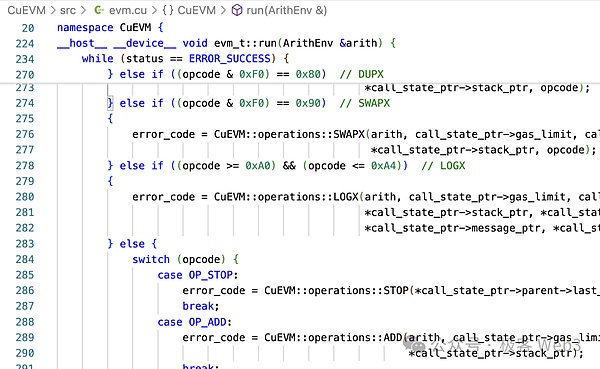

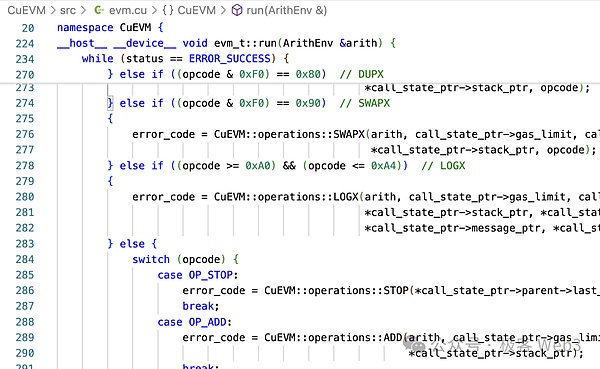

kernel_evm() in turn calls evm->run(), which contains a long series of branches that map each EVM opcode to the corresponding CUDA operation.
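To give a feel for that kind of dispatch, here is a toy opcode interpreter in C++ built around a single `switch`. It is a simplified sketch, not CuEVM's code. Opcode values follow the EVM (STOP = 0x00, ADD = 0x01, MUL = 0x02, SUB = 0x03, PUSH1 = 0x60). Words are 64-bit instead of 256-bit, and gas, memory and storage are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Toy stack machine: one switch branch per supported opcode.
// Returns the final stack (bottom first).
std::vector<std::uint64_t> run(const std::vector<std::uint8_t>& code) {
    std::vector<std::uint64_t> stack;
    auto pop = [&stack]() {
        if (stack.empty()) throw std::runtime_error("stack underflow");
        std::uint64_t v = stack.back();
        stack.pop_back();
        return v;
    };
    for (std::size_t pc = 0; pc < code.size(); ++pc) {
        switch (code[pc]) {
            case 0x00:  // STOP: halt and return the current stack
                return stack;
            case 0x01: {  // ADD: top + next (wraps at 2^64 here, 2^256 in the EVM)
                std::uint64_t a = pop(), b = pop();
                stack.push_back(a + b);
                break;
            }
            case 0x02: {  // MUL
                std::uint64_t a = pop(), b = pop();
                stack.push_back(a * b);
                break;
            }
            case 0x03: {  // SUB: top of stack minus the next item, as in the EVM
                std::uint64_t a = pop(), b = pop();
                stack.push_back(a - b);
                break;
            }
            case 0x60:  // PUSH1: push the following code byte
                if (pc + 1 >= code.size()) throw std::runtime_error("truncated PUSH1");
                stack.push_back(code[++pc]);
                break;
            default:
                throw std::runtime_error("unsupported opcode");
        }
    }
    return stack;
}
```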

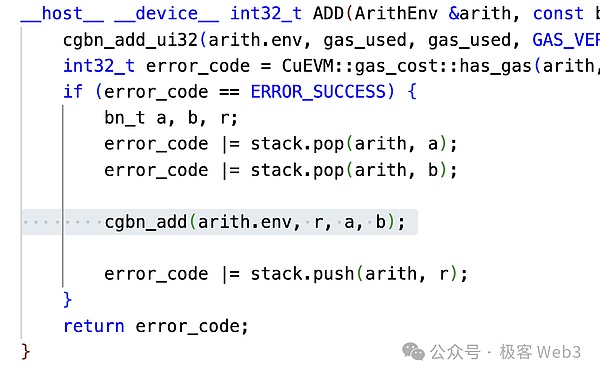

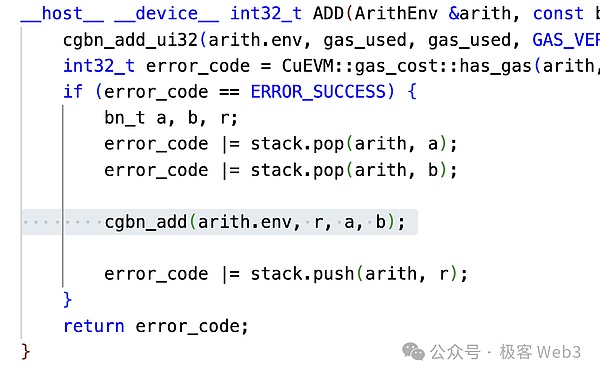

Taking the EVM addition opcode OP_ADD as an example, ADD is mapped to cgbn_add. CGBN (Cooperative Groups Big Numbers) is a CUDA library for high-performance multi-precision integer arithmetic. Such a library is needed because EVM words are 256 bits wide, far wider than the GPU's native integer types.
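Because EVM words are 256 bits, `ADD` means addition modulo 2^256. The C++ sketch below shows that arithmetic on four 64-bit limbs with an explicit carry chain. It is a single-threaded illustration of the math only. CGBN's actual API (`cgbn_add` and related calls) spreads each big number across a cooperative group of GPU threads and is not reproduced here.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// A 256-bit EVM word as four 64-bit limbs, least significant limb first.
using U256 = std::array<std::uint64_t, 4>;

// EVM ADD semantics: (a + b) mod 2^256. The carry ripples limb by limb,
// and the final carry-out is dropped, which produces the wrap-around.
U256 add256(const U256& a, const U256& b) {
    U256 r{};
    std::uint64_t carry = 0;
    for (int i = 0; i < 4; ++i) {
        std::uint64_t s = a[i] + carry;
        std::uint64_t c1 = (s < carry);    // overflow while adding the carry
        r[i] = s + b[i];
        std::uint64_t c2 = (r[i] < b[i]);  // overflow while adding b[i]
        carry = c1 | c2;                   // at most one of them can be set
    }
    return r;
}
```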

Through these two steps, EVM opcodes are converted into CUDA operations; in effect, CuEVM is an implementation of all EVM operations in CUDA. Finally, run_interpreter() returns the results of execution, namely the world state and other information.

That covers CuEVM's basic operating logic.

CuEVM can process transactions in parallel, but the project's main purpose (its main use case) is fuzzing. Fuzzing is an automated software testing technique. It feeds a program large amounts of invalid, unexpected or random input and observes the program's responses to uncover potential bugs and security issues.
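As a minimal illustration of the idea, the C++ sketch below fuzzes `buggy_withdraw`, a hypothetical function with a deliberate bug. It feeds the function random inputs and records every input that breaks an invariant. Nothing here comes from CuEVM; it only shows the loop structure of a simple random fuzzer.

```cpp
#include <cassert>
#include <cstdint>
#include <random>
#include <utility>
#include <vector>

// Hypothetical function under test. It should reject any amount larger than
// the balance, but the bound is off by one, so amount == balance + 1 slips
// through and the unsigned subtraction wraps around.
bool buggy_withdraw(std::uint32_t& balance, std::uint32_t amount) {
    if (amount > balance + 1) return false;  // BUG: should be amount > balance
    balance -= amount;
    return true;
}

// Minimal random fuzzer: try random (balance, amount) pairs and record every
// input that violates "a withdrawal never increases the balance".
std::vector<std::pair<std::uint32_t, std::uint32_t>> fuzz(unsigned seed, int trials) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::uint32_t> small(0, 15);  // small values hit edge cases often
    std::vector<std::pair<std::uint32_t, std::uint32_t>> failures;
    for (int i = 0; i < trials; ++i) {
        std::uint32_t balance = small(rng);
        std::uint32_t amount = small(rng);
        std::uint32_t before = balance;
        buggy_withdraw(balance, amount);
        if (balance > before) failures.emplace_back(before, amount);  // invariant broken
    }
    return failures;
}
```

No trial depends on another, so the loop could be split across many GPU threads. This independence is what makes GPU-based fuzzing attractive.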

Because fuzzing inputs are independent of one another, fuzzing is very well suited to parallel processing. CuEVM therefore does not deal with issues such as transaction conflicts, which are outside its scope. A project that integrates CuEVM must handle conflicting transactions itself.

We introduced Reddio's conflict handling mechanism in the previous article, 《From Reddio to the Optimization Road of Parallel EVM》, so we will not repeat it here. Once Reddio has ordered transactions with that mechanism, it can send them to CuEVM. In other words, Reddio L2's transaction processing pipeline has two parts: conflict handling, followed by parallel execution on CuEVM.
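The article does not restate Reddio's conflict mechanism, so the C++ sketch below assumes a generic read/write-set scheme. It shows how a conflict-handling stage can hand conflict-free batches to a parallel executor. Each transaction is placed one level after the latest earlier transaction it conflicts with. Batches then run in order, and transactions inside a batch touch disjoint state. `Tx`, `conflicts` and `schedule` are hypothetical names, and the read/write sets are assumed to be known up front.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Hypothetical transaction summary: storage keys it reads and writes.
struct Tx {
    std::set<std::string> reads, writes;
};

// Two transactions conflict if either one writes a key the other reads or writes.
bool conflicts(const Tx& a, const Tx& b) {
    for (const auto& k : a.writes)
        if (b.reads.count(k) || b.writes.count(k)) return true;
    for (const auto& k : b.writes)
        if (a.reads.count(k)) return true;
    return false;
}

// Level scheduling: each transaction must run after every earlier transaction
// it conflicts with. Transactions on the same level are mutually conflict-free,
// so a whole level could be executed in parallel.
std::vector<std::vector<std::size_t>> schedule(const std::vector<Tx>& txs) {
    std::vector<std::size_t> level(txs.size(), 0);
    std::vector<std::vector<std::size_t>> batches;
    for (std::size_t i = 0; i < txs.size(); ++i) {
        for (std::size_t j = 0; j < i; ++j)
            if (conflicts(txs[i], txs[j]) && level[j] + 1 > level[i])
                level[i] = level[j] + 1;
        if (level[i] >= batches.size()) batches.resize(level[i] + 1);
        batches[level[i]].push_back(i);
    }
    return batches;
}
```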

The Crossroads of Layer2, Parallel EVM and AI

Parallel EVM and L2 are only the starting point for Reddio; its roadmap clearly points toward integration with the AI narrative. Because Reddio uses GPUs for high-speed parallel transaction processing, it is naturally suited to AI computing in several respects:

GPUs have strong parallel processing capabilities and are well suited to the convolution operations in deep learning. These operations are essentially large-scale matrix multiplications, exactly the kind of task GPUs are optimized for.
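The link between convolution and matrix multiplication can be shown with the standard im2col technique. Each input window is copied into a row, and the whole convolution becomes one matrix-vector product. The 1-D C++ sketch below (function names are illustrative) computes the same result both ways. It assumes the input is at least as long as the kernel.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// Direct 1-D "valid" convolution as deep-learning frameworks define it
// (cross-correlation: the kernel is not flipped).
Vec conv1d_direct(const Vec& x, const Vec& w) {
    Vec y(x.size() - w.size() + 1, 0.0);
    for (std::size_t i = 0; i < y.size(); ++i)
        for (std::size_t k = 0; k < w.size(); ++k)
            y[i] += x[i + k] * w[k];
    return y;
}

// im2col: row i holds the input window x[i .. i+k-1].
Mat im2col(const Vec& x, std::size_t k) {
    Mat rows(x.size() - k + 1, Vec(k));
    for (std::size_t i = 0; i < rows.size(); ++i)
        for (std::size_t j = 0; j < k; ++j)
            rows[i][j] = x[i + j];
    return rows;
}

// Plain matrix-vector product; rows are independent of each other,
// so on a GPU each row could be computed by its own thread.
Vec matvec(const Mat& m, const Vec& v) {
    Vec out(m.size(), 0.0);
    for (std::size_t i = 0; i < m.size(); ++i)
        for (std::size_t j = 0; j < v.size(); ++j)
            out[i] += m[i][j] * v[j];
    return out;
}
```

With 2-D images and many filters, the same rearrangement turns a convolution layer into one large matrix-matrix multiply, the operation GPU libraries are tuned for.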

The GPU thread hierarchy maps naturally onto the data structures used in AI computing. GPUs also improve efficiency and hide memory latency by oversubscribing threads and executing them in warps.
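The C++ sketch below illustrates how the hierarchy lines up with 2-D data. It assumes 16x16 thread blocks tiling a matrix and computes which element a thread owns, plus which warp and lane it falls into. The tile size and helper names are assumptions for illustration. The warp size of 32 is the value used by NVIDIA GPUs.

```cpp
#include <cassert>
#include <cstddef>

constexpr std::size_t kTile = 16;      // assumed block shape: 16 x 16 threads
constexpr std::size_t kWarpSize = 32;  // threads per warp on NVIDIA GPUs

struct Coord { std::size_t row, col; };

// Matrix element owned by thread (tx, ty) of block (bx, by):
// x indexes columns and y indexes rows, a common CUDA convention.
Coord element_of(std::size_t bx, std::size_t by, std::size_t tx, std::size_t ty) {
    return {by * kTile + ty, bx * kTile + tx};
}

// Threads of a block are linearised x-fastest, then grouped into warps of 32.
std::size_t warp_of(std::size_t tx, std::size_t ty) { return (ty * kTile + tx) / kWarpSize; }
std::size_t lane_of(std::size_t tx, std::size_t ty) { return (ty * kTile + tx) % kWarpSize; }

// Blocks needed along one dimension of length n (rounded up).
std::size_t blocks_for(std::size_t n) { return (n + kTile - 1) / kTile; }
```

Oversubscription means launching far more warps than the hardware can execute at once. When one warp stalls on a memory load, the scheduler can switch to another warp that is ready, which is how the memory latency gets hidden.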

Arithmetic intensity, the number of operations performed per byte of data moved, is a key metric for AI computing performance. GPUs speed up the matrix multiplications in AI workloads by optimizing for arithmetic intensity, for example with Tensor Cores. This strikes an effective balance between computation and data transfer.
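Below is a worked example of arithmetic intensity for a square matrix multiplication C = AB. It assumes n x n matrices with s bytes per element, with each of A, B and C read or written exactly once (ideal on-chip reuse). Each of the n^2 outputs needs n multiply-adds:

```latex
I = \frac{\text{FLOPs}}{\text{bytes moved}}
  = \frac{2n^3}{3n^2 s}
  = \frac{2n}{3s}
  \;\overset{s = 4}{=}\; \frac{n}{6}
```

For FP32 (s = 4) and n = 4096, this is about 683 FLOP per byte. An element-wise FP32 vector addition, by contrast, performs 1 FLOP per 12 bytes moved. Kernels with intensity that high are limited by compute rather than memory bandwidth, which is the regime where units such as Tensor Cores pay off.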

So how do AI and L2 combine?

A rollup network contains not only the sequencer but also roles such as watchers and forwarders that verify or collect transactions. These roles essentially run the same client as the sequencer but serve different functions. In traditional rollups, their functions and permissions are very limited. For example, the watcher role in Arbitrum is basically passive, defensive and altruistic, and its business model is questionable.

Reddio plans to adopt a decentralized sequencer architecture in which miners contribute GPUs as nodes. The Reddio network could then evolve from a pure L2 into a combined L2+AI network well suited to several AI+blockchain use cases:

Base network for AI Agent interaction

As blockchain technology evolves, AI Agents have great application potential on blockchain networks. Take AI Agents that execute financial trades as an example. They can make complex decisions and trade autonomously, even reacting quickly in high-frequency settings. However, L1 simply cannot carry the transaction load that such intensive activity generates.

As an L2 project, Reddio uses GPU acceleration to greatly increase its parallel transaction processing capacity. Compared with L1, an L2 that executes transactions in parallel has higher throughput. It can efficiently handle large volumes of high-frequency trading requests from AI Agents and keep the network running smoothly.

In high-frequency trading, AI Agents have extremely demanding requirements for transaction speed and response time. L2 shortens transaction verification and execution time, significantly reducing latency, which is critical for AI Agents that must respond within milliseconds. Moving large numbers of transactions to L2 also relieves congestion on mainnet, making AI Agents cheaper and more efficient to operate.

As L2 projects such as Reddio mature, AI Agents will play a more important role on the blockchain and drive innovation in combining AI with DeFi and other blockchain applications.

Decentralized computing power market

In the future, Reddio will adopt a decentralized sequencer architecture, in which miners compete with GPU computing power for the right to sequence. Competition will gradually raise the GPU performance of network participants, possibly to the level used for AI training.

This makes it possible to build a decentralized GPU computing power market that provides lower-cost resources for AI training and inference. GPU capacity at every scale, from personal computers to data-center clusters, can join the market, contribute idle compute, and earn income. This model can lower the cost of AI computing and let more people take part in developing and applying AI models.

In the decentralized computing power market, the sequencer need not run AI workloads directly. Its main jobs are processing transactions and coordinating AI computing power across the network. Compute and tasks can be allocated in two modes:

Top-down centralized allocation. The sequencer allocates the computing requests it receives to nodes that meet the requirements and have a good reputation. In theory this raises concerns about centralization and fairness, but in practice its efficiency advantages far outweigh those drawbacks. Moreover, in the long run the sequencer can only thrive if the network as a whole stays positive-sum. This implicit but direct constraint keeps the sequencer from becoming too biased.
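The top-down mode can be sketched as a filter-then-rank step. Nodes that are busy, too small, or below the requester's reputation threshold are dropped, and the highest-reputation node left is picked. The C++ sketch below is a hypothetical model: the `Node` and `Request` fields and the selection rule are assumptions, not Reddio's published design.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Hypothetical view of a compute node as the allocator might track it.
struct Node {
    std::string id;
    unsigned vram_gb;   // GPU memory the node offers
    double reputation;  // e.g. share of past tasks completed correctly
    bool busy;
};

// Hypothetical requirements attached to an AI computing request.
struct Request {
    unsigned min_vram_gb;   // memory the model needs
    double min_reputation;  // lowest reputation the requester accepts
};

// Return the id of the best eligible idle node, or nothing if none qualifies.
std::optional<std::string> assign(const std::vector<Node>& nodes, const Request& req) {
    const Node* best = nullptr;
    for (const auto& n : nodes) {
        if (n.busy || n.vram_gb < req.min_vram_gb || n.reputation < req.min_reputation) continue;
        if (!best || n.reputation > best->reputation) best = &n;
    }
    if (!best) return std::nullopt;
    return best->id;
}
```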

Bottom-up spontaneous task selection. Users can also submit AI computing requests directly to third-party nodes. In specialized AI application domains, this is clearly more efficient than going through the sequencer, and it also avoids sequencer censorship and bias. Once the computation is complete, the node syncs the result to the sequencer, which puts it on chain.

In the L2 + AI architecture, then, the computing power market is highly flexible: it can aggregate compute from both directions and so maximize resource utilization.

On-chain AI inference

Open-source models are now mature enough to meet diverse needs, and AI inference services are becoming standardized. This makes it possible to explore putting computing power on chain to enable automated pricing. Doing so, however, requires overcoming several technical challenges:

Efficient request distribution and recording: Large-model inference is latency-sensitive, so an efficient request distribution mechanism is critical. Request and response data are large and private, so they should not be published on chain, yet some record is still needed for verification. A balance has to be struck between recording and verification, for example by storing only hashes.
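Here is a minimal C++ sketch of the "store hashes, not payloads" idea. Only digests of the request and response go on chain, and anyone holding the off-chain data can later check it against that record. The digest is 64-bit FNV-1a, chosen only to keep the example dependency-free. It is not cryptographic; a real system would use a cryptographic hash such as keccak256 or SHA-256. `Record`, `commit` and `matches` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Placeholder digest: 64-bit FNV-1a. NOT cryptographic; stands in for
// keccak256 / SHA-256 purely to keep this sketch self-contained.
std::uint64_t digest(const std::string& data) {
    std::uint64_t h = 14695981039346656037ULL;  // FNV-1a 64-bit offset basis
    for (unsigned char c : data) {
        h ^= c;
        h *= 1099511628211ULL;                  // FNV-1a 64-bit prime
    }
    return h;
}

// What would be stored on chain: digests only, payloads stay off chain.
struct Record {
    std::uint64_t request_digest;
    std::uint64_t response_digest;
};

Record commit(const std::string& request, const std::string& response) {
    return {digest(request), digest(response)};
}

// Check off-chain payloads against the on-chain record.
bool matches(const Record& r, const std::string& request, const std::string& response) {
    return r.request_digest == digest(request) && r.response_digest == digest(response);
}
```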

Verifying compute node output: Did the node actually complete the specified task? For example, a node might pass off the output of a small model as the result of the requested large model.

Smart contract inference: Many scenarios require AI models to work together with smart contracts. AI inference is nondeterministic, so it cannot be used everywhere on chain. The logic of future AI dApps is therefore likely to live partly off chain and partly in on-chain contracts, with the on-chain contracts enforcing the validity and numeric bounds of inputs supplied off chain. In the Ethereum ecosystem, combining AI with smart contracts also means confronting the inefficiency of the EVM's serial execution.
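The C++ sketch below illustrates how an on-chain contract can constrain off-chain input. It models a deterministic guard that a contract could apply to an AI-proposed trade: the guard does not re-run the model, it only checks bounds. The `Limits` fields, the basis-point deviation rule and the oracle reference price are assumptions for illustration. Integer arithmetic mirrors how Solidity code usually avoids floating point.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical bounds a contract enforces on an off-chain AI proposal.
struct Limits {
    std::uint64_t reference_price;    // e.g. from an oracle, same units as the proposal
    std::uint64_t max_deviation_bps;  // allowed deviation; 100 bps = 1%
    std::uint64_t max_amount;         // per-trade size cap
};

// Accept the proposal only if the amount is within limits and the price is
// within max_deviation_bps of the reference:
// |price - ref| * 10000 <= ref * max_deviation_bps (no division, no floats).
bool accept_proposal(const Limits& lim, std::uint64_t price, std::uint64_t amount) {
    if (amount == 0 || amount > lim.max_amount) return false;
    std::uint64_t diff = price > lim.reference_price ? price - lim.reference_price
                                                     : lim.reference_price - price;
    return diff * 10000 <= lim.reference_price * lim.max_deviation_bps;
}
```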

In Reddio's architecture, however, these problems are relatively easy to address:

Request distribution through the sequencer is far more efficient than on L1, roughly on par with Web2. Where the data is stored and how long it is retained can be handled with various inexpensive DA (data availability) solutions.

The correctness and honesty of AI computation results can ultimately be verified with ZKPs (zero-knowledge proofs). ZKPs are very fast to verify but slow to generate, and proof generation can itself be accelerated with GPUs or TEEs.

The parallel EVM pipeline, Solidity → CUDA → GPU, is Reddio's foundation, so on the surface this is the easiest of the three problems for Reddio. Reddio is currently working with ai16z to bring ai16z's Eliza module into Reddio, a direction worth exploring.

Summary

Layer2 solutions, parallel EVM and AI may seem like unrelated fields, but Reddio has cleverly combined these major areas of innovation by making full use of the computing characteristics of GPUs.

By leveraging GPU parallelism, Reddio has improved transaction speed and efficiency on its Layer2, strengthening Ethereum's scaling layer. Integrating AI into blockchain is a novel and promising endeavor. AI can provide intelligent analysis and decision support for on-chain operations, enabling more intelligent and dynamic blockchain applications. This cross-domain integration opens up new paths and opportunities for the industry as a whole.

This field is still at an early stage, however, and requires much more research and exploration. Continuous iteration and optimization of the technology, together with the imagination and action of market pioneers, will be the key forces driving this innovation to maturity. Reddio has taken an important and bold step at this intersection, and we look forward to more breakthroughs and surprises in this field.

Weatherly