WLFI partners with AB to officially deploy the USD1 stablecoin on the AB public chain

World Liberty Financial (WLFI) and the AB public chain announced a partnership, and USD1 has now been officially deployed on the AB public chain.

Alex


Author: Henry @IOSG

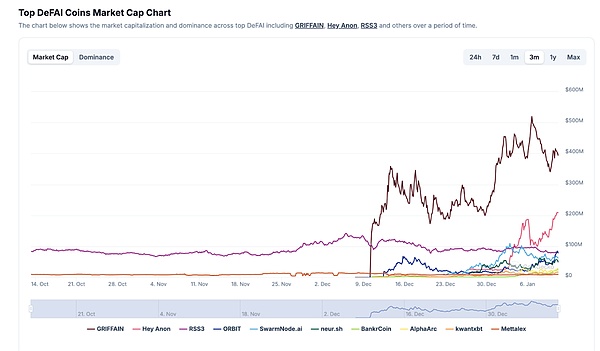

In just three months, AI x memecoins have reached a combined market cap of $13.4 billion, comparable to mature L1s such as AVAX or SUI.

In fact, AI and blockchain have a long shared history: from decentralized model training on early Bittensor subnets, to decentralized GPU/compute marketplaces such as Akash and io.net, to the current wave of AI x memecoins and frameworks on Solana. Each stage shows that crypto can complement AI to a degree by aggregating resources, thereby enabling sovereign AI and consumer use cases.

In the first wave of Solana AI coins, some projects delivered meaningful utility rather than pure speculation. We saw the emergence of frameworks such as ELIZA from ai16z, AI agents such as aixbt that provide market analysis and content creation, and toolkits that integrate AI with blockchain capabilities.

In the second wave of AI, as more tools mature, applications have become the key value driver, and DeFi has become the perfect testing ground for these innovations. For brevity, this report refers to the combination of AI and DeFi as "DeFai".

According to CoinGecko data, DeFai has a market capitalization of roughly $1 billion. Griffain dominates with a 45% share, while $ANON accounts for 22%. The sector began growing rapidly after December 25, in the same period that frameworks and platforms such as Virtuals and ai16z rallied strongly after the Christmas holiday.

▲ Source: Coingecko.com

This is only the first step. Although DeFai is still at the proof-of-concept stage, its potential goes far beyond today's market size: by bringing the intelligence and efficiency of AI to DeFi, it could turn the industry into a more user-friendly, intelligent, and efficient financial ecosystem.

Before we dive into the world of DeFai, we need to understand how agents actually work in DeFi/blockchain.

An artificial intelligence agent (AI agent) is a program that performs tasks on behalf of users according to a workflow. At its core is an LLM (large language model), which responds based on its training or learned knowledge, but these responses are often limited.

Agents are fundamentally different from bots. Bots are usually built for specific tasks, require human supervision, and operate under predefined rules and conditions. Agents, by contrast, are more dynamic and adaptive and can learn autonomously to achieve specific goals.

To create a more personalized experience and more comprehensive responses, agents can store past interactions in memory. This lets them learn from users' behavior patterns, adjust their responses, and generate tailored recommendations and strategies based on historical context.

On-chain, agents can interact with smart contracts and accounts to handle complex tasks without constant human intervention. In DeFi, for example, they can simplify the user experience by executing multi-step bridging and farming in one click, optimizing farming strategies for higher returns, executing trades (buy/sell), and conducting market analysis, all autonomously.

Based on @3sigma's research, most models follow a workflow made up of six components:

Data Collection

Model Reasoning

Decision Making

Hosting and Operation

Interoperability

Wallet

First, the model needs to understand its working environment, so it requires multiple data streams to stay in sync with market conditions. These include: 1) on-chain data from indexers and oracles; 2) off-chain data from price platforms, such as data APIs from CMC, CoinGecko, and other data providers.
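A minimal sketch of how an agent might merge these feeds, assuming each source returns a price and an observation timestamp: stale quotes are discarded and the median of the rest is used, so a single bad or lagging feed cannot move the result much. The source labels and the 60-second staleness window are hypothetical, not taken from any specific oracle or data API.

```python
import statistics
import time
from dataclasses import dataclass


@dataclass
class Quote:
    source: str       # hypothetical label, e.g. "onchain_oracle" or "cmc_api"
    price: float      # price of the asset in the quote currency
    timestamp: float  # Unix time when the quote was observed


def aggregate_price(quotes: list[Quote], now: float, max_age: float = 60.0) -> float:
    """Median of fresh, positive quotes; raises if no source is fresh enough."""
    fresh = [q.price for q in quotes if now - q.timestamp <= max_age and q.price > 0]
    if not fresh:
        raise ValueError("no fresh price quotes available")
    return statistics.median(fresh)


now = time.time()
quotes = [
    Quote("onchain_oracle", 101.0, now - 5),
    Quote("cmc_api", 100.0, now - 10),
    Quote("coingecko_api", 99.5, now - 20),
    Quote("stale_feed", 250.0, now - 3600),  # ignored: older than max_age
]
print(aggregate_price(quotes, now))  # median of 101.0, 100.0, 99.5 -> 100.0
```

The median is chosen over the mean because it tolerates one outlier among three or more sources; a production agent would also weigh source reliability and on-chain/off-chain disagreement.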

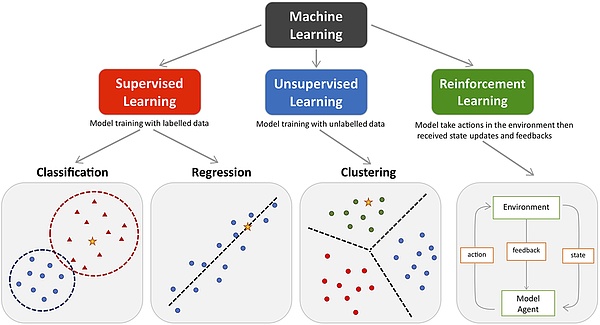

Once the models have learned the environment, they apply this knowledge to make predictions or take action based on new, unseen input data from the user. Models used by agents include:

Supervised and unsupervised learning: models trained on labeled or unlabeled data to predict outcomes or discover patterns. In a blockchain context, these models can analyze governance forum data to predict voting results or identify trading patterns.

Reinforcement learning: models that learn by trial and error, evaluating the rewards and penalties of their actions. Applications include optimizing token trading strategies, such as determining the best entry point for buying a token or adjusting farming parameters.

Natural Language Processing (NLP): techniques that understand and process human language input, valuable for scanning governance forums and proposals to extract viewpoints.

▲ Source: https://www.researchgate.net/figure/The-main-types-of-machine-learning-Main-approaches-include-classification-and-regression_fig1_354960266
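The trial-and-error loop behind reinforcement learning can be illustrated with its simplest special case, a multi-armed bandit. The sketch below assumes an agent repeatedly choosing among three hypothetical farming pools with made-up mean returns and Gaussian noise; it uses textbook epsilon-greedy exploration, which is not necessarily what any production DeFai agent uses.

```python
import random


def epsilon_greedy(true_means: list[float], steps: int = 5000,
                   epsilon: float = 0.1, noise: float = 0.02, seed: int = 0):
    """Learn which option pays best by trial and error (epsilon-greedy bandit)."""
    rng = random.Random(seed)
    n = len(true_means)
    counts = [0] * n       # how often each option has been tried
    estimates = [0.0] * n  # running average reward per option
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                           # explore
        else:
            arm = max(range(n), key=lambda i: estimates[i])  # exploit
        reward = rng.gauss(true_means[arm], noise)  # simulated noisy return
        counts[arm] += 1
        # Incremental mean: each reward nudges the estimate toward the truth
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


# Hypothetical mean returns per period for three pools
estimates, counts = epsilon_greedy([0.01, 0.05, 0.10])
best = max(range(3), key=lambda i: estimates[i])
print(best, counts)
```

Epsilon controls the trade-off: a higher value spends more steps re-testing weaker pools, which helps when returns drift over time but costs yield when they do not.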

With trained models and data, agents can use their decision-making capabilities to take action. This includes interpreting the current situation and responding appropriately.

At this stage, optimization engines play an important role in finding the best outcome. For example, before executing a profit-seeking strategy, the agent needs to balance factors such as slippage, spread, transaction costs, and potential profit.

Because a single agent may not be able to optimize decisions across different domains, a multi-agent system can be deployed to coordinate them.
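A minimal sketch of that balancing act for one concrete case, assuming a constant-product (x·y = k) AMM with a 0.3% fee: the agent sizes a buy on the AMM, values the output at a reference price elsewhere, subtracts a flat gas cost, and only executes if the best candidate size is still profitable. Pool reserves, prices, and gas figures are made up for illustration, and spread is folded into the gap between the AMM price and the reference price.

```python
def amm_out(amount_in: float, reserve_in: float, reserve_out: float,
            fee: float = 0.003) -> float:
    """Output of a constant-product swap; price impact (slippage) grows with size."""
    effective_in = amount_in * (1 - fee)
    return reserve_out * effective_in / (reserve_in + effective_in)


def net_profit(amount_in: float, reserve_in: float, reserve_out: float,
               ref_price: float, gas_cost: float, fee: float = 0.003) -> float:
    """Buy on the AMM, value the proceeds at ref_price, subtract input and gas."""
    received = amm_out(amount_in, reserve_in, reserve_out, fee)
    return received * ref_price - amount_in - gas_cost


def choose_trade(sizes, reserve_in, reserve_out, ref_price, gas_cost):
    """Return the most profitable size, or None if no size covers its costs."""
    best = max(sizes, key=lambda s: net_profit(s, reserve_in, reserve_out,
                                               ref_price, gas_cost))
    if net_profit(best, reserve_in, reserve_out, ref_price, gas_cost) <= 0:
        return None
    return best


# Pool: 1,000,000 USDC / 500 ETH (2,000 USDC per ETH); ETH sells for 2,050 elsewhere
sizes = [100, 1_000, 5_000, 10_000, 50_000]
print(choose_trade(sizes, 1_000_000, 500, 2_050, gas_cost=5))  # 10000
```

Small trades lose to the fixed gas cost and large trades lose to slippage, so the optimum sits in between; here 10,000 USDC nets roughly 113 USDC, while 50,000 USDC would lose over 1,300.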

Because these tasks are computationally intensive, AI agents usually host their models off-chain. Some agents rely on centralized cloud services such as AWS, while those that prefer decentralization use distributed computing networks such as Akash or io.net, and Arweave for data storage.

Although the AI agent's model runs off-chain, the agent needs to interact with on-chain protocols to execute smart contract functions and manage assets. This requires secure key management, such as MPC wallets or smart contract wallets, to process transactions safely. Agents can also use APIs to communicate with their communities on social platforms such as Twitter and Telegram.

Agents need to interact with multiple protocols while staying up to date across different systems. They usually use API bridges to obtain external data, such as price feeds.

To keep track of current protocol state and respond appropriately, agents need to synchronize in real time through messaging mechanisms such as webhooks or decentralized protocols such as IPFS.

Agents need a wallet or access to private keys to initiate blockchain transactions. There are two common wallet/key management methods on the market: MPC-based and TEE-based solutions.

For portfolio management applications, MPC or TSS (threshold signature schemes) can split keys among the agent, the user, and trusted parties, so the user still retains a degree of control over the AI. The Coinbase AI Replit wallet implements this approach, showing how to pair an MPC wallet with an AI agent.

For fully autonomous AI systems, TEEs offer an alternative: private keys are stored in a secure enclave, and the entire AI agent runs in an isolated, protected environment free from third-party interference. However, TEE solutions currently face two major challenges: hardware centralization and performance overhead.

Once these challenges are overcome, we will be able to build autonomous agents on the blockchain, with different agents performing their respective roles in the DeFi ecosystem to improve efficiency and the on-chain trading experience.

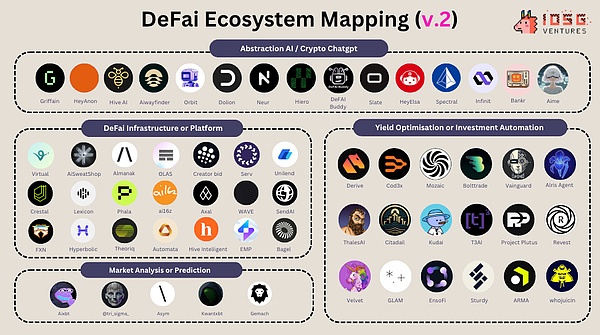

Broadly, I tentatively divide DeFai into four categories:

Abstract/UX-friendly AI

Yield optimization or portfolio management

Market analysis or prediction bots

DeFai infrastructure/platform

▲ Source: IOSG Venture

#1 Abstract/UX-friendly AI

The purpose of AI is to improve efficiency, solve complex problems, and simplify complex tasks for users. Abstraction-based AI can reduce the complexity of accessing DeFi for both new and existing traders.

In the blockchain space, an effective AI solution should be able to:

Automate multi-step trading and staking operations without requiring users to have any industry knowledge;

Perform real-time research to provide users with all the necessary information and data they need to make informed trading decisions;

Get data from various platforms, identify market opportunities, and provide users with comprehensive analysis.

Most of these abstraction tools are built around ChatGPT. Although they need to integrate seamlessly with the blockchain, as far as I can tell none of the underlying models has been specifically trained on or adapted to blockchain data.

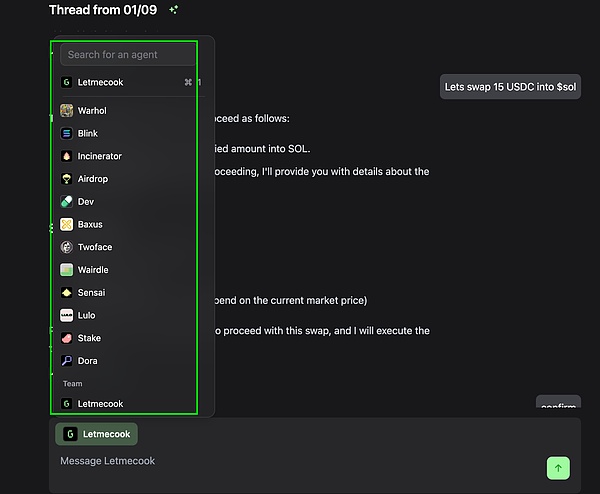

Griffain's founder, Tony, proposed this concept at a Solana hackathon. He later turned the idea into a working product and won the support and recognition of Solana co-founder Anatoly Yakovenko.

Simply put, griffain is currently the first and most powerful abstract AI on Solana, which can perform functions such as swap, wallet management, NFT minting, and token sniping.

Here are the specific features provided by griffain:

Execute transactions in natural language

Use pumpfun to issue tokens, mint NFTs, and select addresses for airdrops

Multi-agent coordination

Agents can post tweets on behalf of users

Snipe newly launched memecoins on pumpfun based on specific keywords or conditions

Staking, automation, and execution of DeFi strategies

Task scheduling: users can give agents inputs and create tailored agents

Get data from the platform for market analysis, such as identifying the distribution of token holders

Although griffain offers many functions, users still need to enter token addresses manually or give the agent specific execution instructions. For beginners unfamiliar with these technical instructions, the current product still has room for improvement.

So far, griffain provides two types of AI agents: Personal AI Agents and Special Agents.

Personal AI Agents are controlled by users. Users can customize instructions and input memory settings to tailor the agent to their personal situation.

Special Agents are designed for specific tasks. For example, "Airdrop Agents" are trained to find addresses and distribute tokens to designated holders, while "Staking Agents" are programmed to stake SOL or other assets into pools to carry out yield farming strategies.

Griffain's multi-agent collaboration system is a notable feature: multiple agents can work together in a chat room, each solving complex tasks independently while still collaborating.

▲ Source: https://griffain.com

Once an account is created, the system generates a wallet, and the user can delegate the account to an agent, which independently executes trades and manages the portfolio.

The key is split using Shamir Secret Sharing (SSS), so that neither griffain nor Privy can custody the wallet. According to Slate, SSS works by splitting the key into three shares:

Device share: stored in the browser and retrieved when the tab is opened

Authorization share: stored on Privy's servers and retrieved when the user authenticates and logs in to the app

Recovery share: encrypted and stored on Privy's servers; it can only be decrypted and retrieved when the user enters their password to log in
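To make the three-share layout concrete, here is a minimal Shamir Secret Sharing sketch in pure Python. It assumes a 2-of-3 threshold (any two shares rebuild the key, while a single share reveals nothing about it), which is how a device/authorization/recovery split is typically used but is an assumption here; Privy's actual field, encoding, and share handling are not shown and will differ. This is illustrative only and should not be used to protect real keys.

```python
import secrets

# Mersenne prime 2**521 - 1: large enough to hold a 256-bit private key
PRIME = 2**521 - 1


def split_secret(secret: int, n: int = 3, k: int = 2) -> list[tuple[int, int]]:
    """Split `secret` into n shares so that any k of them can rebuild it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in [0, PRIME)")
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= n")
    # Random polynomial of degree k - 1 whose constant term is the secret
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(k - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule evaluates f(x) mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Rebuild f(0) from at least k shares via Lagrange interpolation mod PRIME."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Three shares, mirroring the device / authorization / recovery split above
key = secrets.randbits(256)
device, auth, recovery = split_secret(key)
assert recover_secret([device, auth]) == key      # normal login
assert recover_secret([device, recovery]) == key  # recovery flow
```

Because any two shares suffice, losing one (for example, clearing the browser) is recoverable, while no single holder of one share can move funds alone.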

Users can also export or import the wallet on the griffain front end.

Anon was founded by Daniele Sesta, who is known for creating the DeFi protocols Wonderland and MIM (Magic Internet Money). Like Griffain, Anon is designed to simplify users' interactions with DeFi.

Although the team has announced upcoming features, none can be verified yet because the product has not been publicly released. Announced features include:

Execute transactions using natural language (multiple languages including Chinese)

Cross-chain bridging through LayerZero

Lend and supply with partner protocols such as Aave, Spark, Sky, and Wagmi

Get real-time price and data information through Pyth

Provide automatic execution and triggers based on time and gas price

Provide real-time market analysis such as sentiment detection, social profile analysis, etc.

Beyond the core functions, Anon also supports multiple AI models, including Gemma, Llama 3.1, Llama 3.3, Vision, Pixtral, and Claude. These models could provide valuable market analysis, saving users research time and helping them make informed decisions, which is especially valuable in today's market, where new tokens with a $100 million market cap launch every day.

Wallets can be exported and authorizations can be revoked, but specific details about wallet management and security protocols have not yet been made public.


In addition, Daniele recently published two updates about Anon:

Automate Framework:

A TypeScript framework that will help more projects integrate with Anon faster. The framework requires all data and interactions to follow a predefined structure, which reduces the risk of AI hallucinations and makes Anon more reliable.

Gemma:

A research agent that collects real-time on-chain DeFi metrics (e.g., TVL, volume, perp DEX funding rates) and off-chain data (e.g., Twitter and Telegram) for social sentiment analysis. This data is turned into opportunity alerts and customized insights for users.

Based on its documentation, Anon is one of the most anticipated and powerful abstraction tools in the entire space.
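The Automate framework itself is TypeScript and its API is not described here, so the Python sketch below does not reproduce it. It only illustrates the general idea behind it: the model's output must match a predefined structure before anything executes, and token symbols are resolved through a vetted registry rather than trusting addresses the model produces. The action list, registry entries, and field names are hypothetical placeholders.

```python
import json
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation

# Hypothetical allow-lists; a real agent would load these from a vetted registry
ALLOWED_ACTIONS = {"swap", "bridge", "lend"}
TOKEN_REGISTRY = {
    "USDC": "usdc-address-placeholder",
    "SOL": "sol-address-placeholder",
}
REQUIRED_FIELDS = {"action", "token_in", "token_out", "amount"}


@dataclass(frozen=True)
class Intent:
    action: str
    token_in: str   # resolved from the registry, never copied from model output
    token_out: str
    amount: Decimal


def parse_intent(llm_output: str) -> Intent:
    """Validate model output against a fixed schema before anything is executed."""
    data = json.loads(llm_output)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or set(data) != REQUIRED_FIELDS:
        raise ValueError("output does not match the expected intent schema")
    if data["action"] not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {data['action']!r}")
    try:
        token_in = TOKEN_REGISTRY[data["token_in"]]
        token_out = TOKEN_REGISTRY[data["token_out"]]
    except KeyError as exc:
        raise ValueError(f"unknown token symbol: {exc.args[0]!r}") from None
    try:
        amount = Decimal(str(data["amount"]))
    except InvalidOperation:
        raise ValueError("amount is not a number") from None
    if not amount.is_finite() or amount <= 0:
        raise ValueError("amount must be a positive, finite number")
    return Intent(data["action"], token_in, token_out, amount)


intent = parse_intent(
    '{"action": "swap", "token_in": "USDC", "token_out": "SOL", "amount": "10"}'
)
print(intent.amount)  # 10
```

Rejecting anything outside the schema trades flexibility for safety: a hallucinated token such as "FAKE" or an action such as "drain" fails loudly instead of reaching a wallet.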

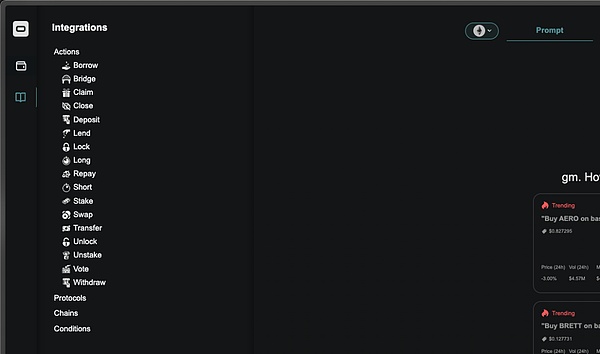

Backed by BigBrain Holdings, Slate positions itself as an "Alpha AI" that can autonomously trade based on on-chain signals. Currently Slate is the only abstraction AI that can automatically execute trades on Hyperliquid.

Slate prioritizes price routing, fast execution, and the ability to simulate trades before executing them. Main features include:

Cross-chain swaps between EVM chains and Solana

Automatic trading based on price, market capitalization, gas fees and profit and loss indicators

Natural language task scheduling

On-chain transaction aggregation

Telegram notification system

Open long and short positions, close them under specific conditions, and manage LP positions and farming, including execution on Hyperliquid

Its fee structure falls into two types:

Regular operations: Slate charges no fee for regular transfers/withdrawals, but charges 0.35% for swaps, bridges, claims, borrows, lends, repays, stakes, unstakes, longs, shorts, locks, unlocks, etc.

Conditional operations: when a conditional order (such as a limit order) is set, Slate charges 0.25% if the condition is based on gas cost and 1.00% for all other conditions.
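The fee schedule above reduces to a small lookup. A minimal sketch, assuming that the conditional rate replaces the regular 0.35% rather than stacking on top of it (the text does not say) and that fees are charged on the notional amount; the action and condition labels are our own, not Slate's.

```python
from decimal import Decimal
from typing import Optional

# Rates copied from the fee schedule described above; labels are hypothetical
FREE_ACTIONS = {"transfer", "withdraw"}
REGULAR_RATE = Decimal("0.0035")          # 0.35%: swaps, bridges, lending, perps, ...
GAS_CONDITION_RATE = Decimal("0.0025")    # 0.25%: orders conditioned on gas cost
OTHER_CONDITION_RATE = Decimal("0.0100")  # 1.00%: all other conditional orders


def slate_fee(action: str, notional: Decimal, condition: Optional[str] = None) -> Decimal:
    """Estimate the fee for one operation (assumes conditional rates replace 0.35%)."""
    if condition is None:
        rate = Decimal(0) if action in FREE_ACTIONS else REGULAR_RATE
    elif condition == "gas":
        rate = GAS_CONDITION_RATE
    else:
        rate = OTHER_CONDITION_RATE
    return notional * rate


print(slate_fee("swap", Decimal("1000")))                     # 3.5000
print(slate_fee("swap", Decimal("1000"), condition="gas"))    # 2.5000
print(slate_fee("swap", Decimal("1000"), condition="price"))  # 10.0000
```

`Decimal` is used instead of `float` so that percentage fees on token amounts do not pick up binary rounding errors.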

For wallets, Slate integrates Privy's embedded wallet architecture, so neither Slate nor Privy custodies users' wallets. Users can either connect their existing wallets or authorize agents to execute transactions on their behalf.

▲ Source: https://docs.slate.ceo

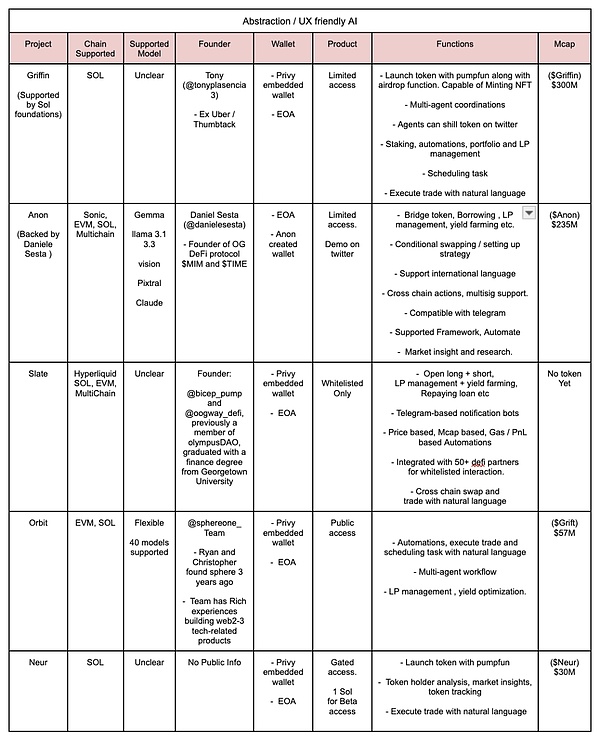

Comparison of mainstream abstraction AIs:

▲ Source: IOSG Venture

Currently, most AI abstraction tools support cross-chain transactions and asset bridging between Solana and EVM chains. Slate offers Hyperliquid integration, while Neur and Griffain currently support only Solana, though cross-chain support is expected soon.

Most platforms integrate Privy embedded wallets and EOA wallets, letting users manage funds themselves but requiring them to authorize agent access for certain transactions. This creates an opportunity for TEEs (Trusted Execution Environments) to make AI systems tamper-proof.

Although most AI abstraction tools share features such as token issuance, transaction execution, and natural language conditional orders, their performance varies significantly.

At the product level, abstraction AI is still at an early stage. Comparing the five projects above, Griffain stands out for its rich feature set, extensive partner network, and multi-agent collaboration workflows (Orbit is another project that supports multiple agents). Anon excels with fast responses, multi-language support, and Telegram integration, while Slate benefits from its sophisticated automation platform and is the only agent that supports Hyperliquid.

However, some abstraction AIs still struggle with basic transactions (such as a USDC swap): they fail to retrieve the correct token address or price, or fail to analyze the latest market trends. Response time, accuracy, and relevance of results are also key differentiators of a model's basic performance. Going forward, we hope to work with the teams to build a transparent dashboard that tracks the performance of all abstraction AIs in real time.

#2 Autonomous Yield Optimization and Portfolio Management

Unlike traditional yield strategies, protocols in this category use AI to analyze on-chain data for trends and surface information that helps teams design better yield optimization and portfolio allocation strategies.

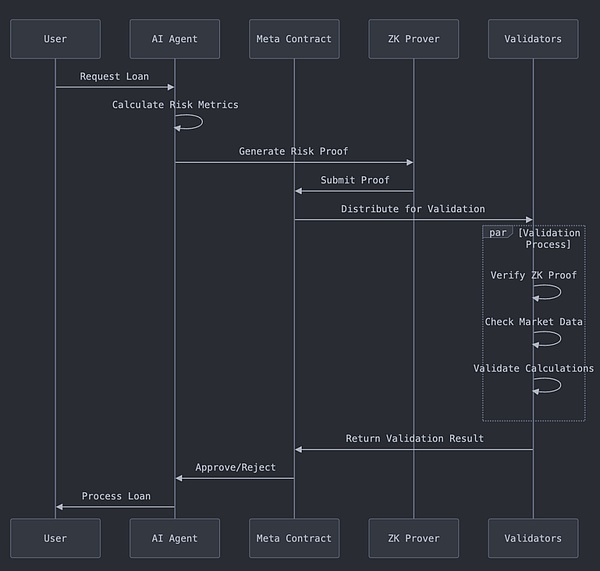

To reduce costs, models are often trained on Bittensor subnets or off-chain. To let the AI execute transactions autonomously, verification methods such as ZKPs (zero-knowledge proofs) are used to ensure the model behaves honestly and verifiably. Here are a few examples of DeFai protocols that benefit from such optimization:

T3AI is a non-collateralized lending protocol that uses AI as an intermediary and risk engine. Its AI agent monitors loan health in real time and uses T3AI's risk indicator framework to ensure loans remain repayable. The AI also provides accurate risk predictions by analyzing the relationships between assets and their price trends. Specifically, T3AI's AI works by:

Analyzing price data from major CEXs and DEXs;

Measuring the volatility of different assets;

Studying the correlation and linkage of asset prices;

Discovering hidden patterns in asset interactions.

The AI recommends optimal allocation strategies based on the user's portfolio and, after further model tuning, could enable autonomous AI portfolio management. T3AI also ensures that all operations are verifiable and reliable through ZK proofs and a network of verifiers.

▲ Source: https://www.trustinweb3.xyz/

Kudai is an experimental GMX ecosystem agent developed by the GMX Blueberry Club using the EmpyrealSDK toolkit, and its token currently trades on Base.

Kudai's idea is to use all trading fees generated by $KUDAI to fund the agent's autonomous trading operations and distribute profits to token holders.

In the upcoming Phase 2/4, Kudai will be able to interpret natural-language instructions on Twitter to:

Buy and stake $GMX to generate new revenue streams;

Invest in the GMX GM pool to further increase yield;

Buy GBC NFTs at floor prices to expand its portfolio.

After this phase, Kudai will be fully autonomous and able to independently perform leveraged trading and arbitrage and earn yield (interest) on its assets. The team has not disclosed further details.

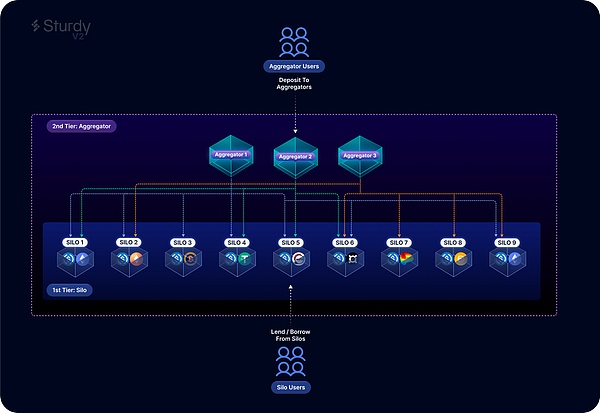

Sturdy Finance is a lending and yield aggregator that optimizes yields by moving funds between whitelisted silo pools, using AI models trained by Bittensor SN10 subnet miners.

Sturdy adopts a two-layer architecture, consisting of independent asset pools (silo pools) and an aggregation layer (aggregator layer):

Independent asset pools (Silo Pools)

These are single-asset isolated pools: users can borrow only a single asset, against a single type of collateral.

Aggregator Layer

The aggregation layer is built on Yearn V3 and allocates user assets to whitelisted silo pools based on utilization and yield, with the Bittensor subnet supplying the optimal allocation strategy. Users who deposit assets into an aggregator are exposed only to the collateral types it has selected, avoiding risk from other lending pools or collateral assets.

▲ Source: https://sturdy.finance
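Sturdy's actual allocations come from Bittensor SN10 miners, whose models are not described here. As a stand-in, the sketch below shows one simple, hypothetical baseline: split a deposit into chunks and send each chunk to the whitelisted silo whose supply APY would remain highest after the deposit, since adding supply lowers utilization and therefore the rate. The linear borrow-rate curve, the omission of reserve factors, and all silo figures are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Silo:
    name: str
    supplied: float   # assets currently supplied
    borrowed: float   # assets currently borrowed
    base_rate: float  # borrow APR at 0% utilization (assumed linear curve)
    slope: float      # extra borrow APR per unit of utilization

    def supply_apy(self, extra: float = 0.0) -> float:
        """Supply APY if `extra` more assets were deposited (reserve factor ignored)."""
        supplied = self.supplied + extra
        if supplied == 0:
            return 0.0
        utilization = self.borrowed / supplied
        return (self.base_rate + self.slope * utilization) * utilization


def allocate(deposit: float, silos: list[Silo], whitelist: set[str],
             chunk: float = 1_000.0) -> dict[str, float]:
    """Greedy chunked allocation to the whitelisted silo with the best post-deposit APY."""
    candidates = [s for s in silos if s.name in whitelist]
    if not candidates:
        raise ValueError("no whitelisted silos")
    allocation = {s.name: 0.0 for s in candidates}
    remaining = deposit
    while remaining > 0:
        step = min(chunk, remaining)
        best = max(candidates, key=lambda s: s.supply_apy(allocation[s.name] + step))
        allocation[best.name] += step
        remaining -= step
    return allocation


silos = [
    Silo("silo_a", 100_000, 80_000, 0.02, 0.10),
    Silo("silo_b", 100_000, 70_000, 0.02, 0.10),
    Silo("silo_c", 100_000, 95_000, 0.05, 0.30),  # not whitelisted, never used
]
print(allocate(50_000, silos, whitelist={"silo_a", "silo_b"}))
```

The greedy rule roughly equalizes post-deposit APYs across silos; restricting candidates to the whitelist mirrors the isolation property described above.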

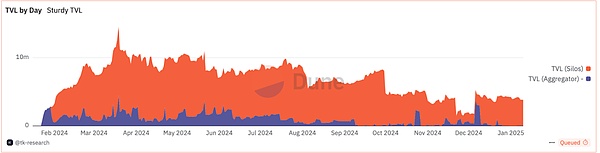

As of this writing, Sturdy V2's TVL has been declining since May 2024, and the aggregator's TVL is currently about $3.9 million, or 29% of the protocol's total TVL.

Since September 2024, Sturdy's daily active users have consistently stayed in the double digits (below 100), with pxETH and crvUSD the main lending assets in the aggregator. However, the protocol's performance has clearly stagnated over the past few months, and the AI integration appears to be an attempt to reignite its growth.

▲ Source: https://dune.com/tk-research/sturdy-v2

#3 Market Analysis Agent

#Aixbt

Aixbt is a market sentiment tracking agent that aggregates and analyzes data from more than 400 Twitter KOLs. With its proprietary engine, AixBT is able to identify real-time trends and publish market observations around the clock.
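AixBT's engine is proprietary, so the following is only a hypothetical illustration of the kind of signal such an agent could compute from a fixed list of tracked accounts: count how many distinct accounts mention each cashtag in the latest window versus the previous one, and rank tickers by that growth. The post format, one-hour window, and minimum-account threshold are assumptions.

```python
import re
from collections import defaultdict

# A cashtag such as $SOL: a letter followed by up to nine letters or digits
CASHTAG = re.compile(r"\$([A-Za-z][A-Za-z0-9]{1,9})")


def distinct_mentions(posts, start, end):
    """Map ticker -> set of accounts that mentioned it in [start, end)."""
    seen = defaultdict(set)
    for account, timestamp, text in posts:
        if start <= timestamp < end:
            for ticker in CASHTAG.findall(text):
                seen[ticker.upper()].add(account)
    return seen


def trending(posts, now, window=3600, min_accounts=3):
    """Tickers ranked by growth in distinct-account mentions vs the previous window."""
    current = distinct_mentions(posts, now - window, now)
    previous = distinct_mentions(posts, now - 2 * window, now - window)
    scores = {
        ticker: len(accounts) - len(previous.get(ticker, set()))
        for ticker, accounts in current.items()
        if len(accounts) >= min_accounts
    }
    return sorted(scores, key=scores.get, reverse=True)


now = 10_000
posts = [  # (account, unix_time, text)
    ("kol_a", 9_900, "Watching $SOL and $JUP today"),
    ("kol_b", 9_800, "$SOL looks strong"),
    ("kol_c", 9_700, "rotation into $sol?"),
    ("kol_d", 9_600, "$JUP"),
    ("kol_a", 6_000, "$JUP again"),  # falls in the previous window
]
print(trending(posts, now))  # ['SOL']
```

Counting distinct accounts rather than raw posts keeps a single account spamming a ticker from looking like a market-wide trend.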

Among existing AI agents, AixBT holds a significant 14.76% share of market mindshare, making it one of the most influential agents in the ecosystem.

▲ Source: Kaito.com

Aixbt is designed for social media interaction, and the insights it publishes directly reflect the market's focus.

Its functionality goes beyond market insights (alpha) to include interactivity: AixBT can answer user questions and even issue tokens through Twitter using specialized toolkits. For example, the $CHAOS token was created by AixBT and another interactive bot, Simi, using the @EmpyrealSDK toolkit.

Currently, users who hold 600,000 $AIXBT tokens (worth about $240,000) can access its analysis platform and terminal.

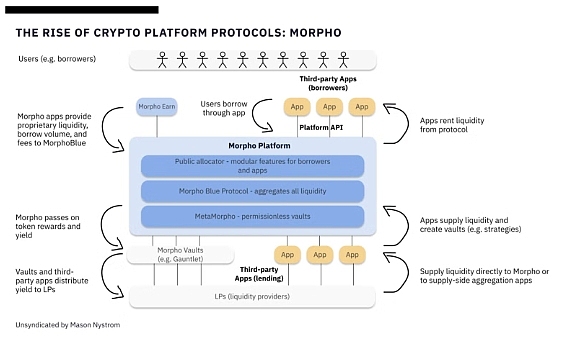

#4 Decentralized AI Infrastructure and Platforms

Web3 AI agents cannot exist without decentralized infrastructure. These projects not only support model training and inference but also provide data, verification methods, and coordination layers that drive the development of AI agents.

Whether in Web2 or Web3 AI, models, compute, and data are the three cornerstones behind strong large language models (LLMs) and AI agents. Open-source models trained in a decentralized manner will be favored by agent builders, because this approach eliminates the single point of failure that centralization brings and opens up the possibility of user-owned AI. Developers need not rely on the LLM APIs of Web2 AI giants such as Google, Meta, and OpenAI.

The following AI infrastructure diagram was drawn by Pink Brains:

▲ Source: Pink Brains

Model creation

Pioneering institutions such as Nous Research, Prime Intellect, and Exo Labs are pushing the boundaries of decentralized training.

Nous Research's DisTrO training algorithm and Prime Intellect's DiLoCo algorithm have successfully trained models with more than 10 billion parameters in low-bandwidth environments, demonstrating that large-scale training is possible outside traditional centralized systems. Exo Labs has gone a step further with its SPARTA distributed AI training algorithm, which reduces communication between GPUs by more than 1,000 times.

Bagel aims to become a decentralized Hugging Face, providing models and data to AI developers while using cryptography to solve ownership and monetization issues for open-source data. Bittensor has built a competitive market where participants contribute compute, data, and intelligence to accelerate the development of AI models and agents.

Data and computing power service providers

Many people believe that AixBT can stand out in the practical agent category mainly due to its access to high-quality datasets.

Providers such as Grass, Vana, Sahara, Space and Time, and Cookie DAO supply high-quality, domain-specific data or give AI developers access to data "walled gardens" to enhance their capabilities. With more than 2.5 million nodes, Grass can crawl up to 300 TB of data per day.

Currently, Nvidia trains its video models on only 20 million hours of video data, while Grass's video dataset is 15 times larger (300 million hours) and grows by 4 million hours per day, meaning Grass collects the equivalent of 20% of Nvidia's entire dataset every day. In other words, Grass can gather as much video data as Nvidia's whole dataset in just 5 days.

Agents cannot run without compute. Compute marketplaces such as Aethir and io.net offer agent developers cost-effective options by aggregating a variety of GPUs. Hyperbolic's decentralized GPU market cuts compute costs by up to 75% while hosting open-source AI models and providing low-latency inference comparable to Web2 cloud providers.

Hyperbolic further extends its GPU market and cloud services with AgentKit, an interface that gives AI agents full access to Hyperbolic's decentralized GPU network. It includes an AI-readable map of compute resources that scans and reports resource availability, specifications, current load, and performance in real time.

AgentKit opens up a future in which agents can independently acquire, and pay for, the compute they need.
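AgentKit's actual interface is not documented in this article, so the sketch below does not use it. It assumes a hypothetical listing format with the fields the text mentions (availability, specifications, current load) plus an hourly price, and shows how an agent could filter for machines that meet its requirements and pick the cheapest one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GpuOffer:
    offer_id: str
    gpu_model: str
    vram_gb: int
    available: bool
    load: float            # current utilization, 0.0 to 1.0
    price_per_hour: float  # USD per hour (hypothetical)


def pick_offer(offers: list[GpuOffer], min_vram_gb: int, max_load: float = 0.8,
               budget_per_hour: float = float("inf")) -> Optional[GpuOffer]:
    """Cheapest available offer that meets memory, load, and budget limits."""
    eligible = [
        o for o in offers
        if o.available
        and o.vram_gb >= min_vram_gb
        and o.load <= max_load
        and o.price_per_hour <= budget_per_hour
    ]
    return min(eligible, key=lambda o: o.price_per_hour, default=None)


offers = [
    GpuOffer("a", "H100", 80, True, 0.2, 2.50),
    GpuOffer("b", "A100", 40, True, 0.9, 1.20),     # too busy
    GpuOffer("c", "A100", 80, True, 0.5, 1.60),
    GpuOffer("d", "RTX 4090", 24, True, 0.1, 0.40),  # not enough memory
    GpuOffer("e", "H100", 80, False, 0.0, 1.00),    # unavailable
]
choice = pick_offer(offers, min_vram_gb=40)
print(choice.offer_id if choice else "no suitable GPU")  # c
```

Returning `None` when nothing qualifies lets the agent fall back to waiting, raising its budget, or relaxing requirements instead of failing mid-task.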

Verification Mechanism

Through its Proof of Sampling verification mechanism, Hyperbolic aims to make every inference in the ecosystem verifiable, establishing a foundation of trust for the future agent world.

However, verification solves only part of the trust problem for autonomous agents. Another dimension is privacy, which is where TEE (Trusted Execution Environment) infrastructure projects such as Phala, Automata, and Marlin excel: for example, they can keep the proprietary data or models used by AI agents secure.

In fact, truly autonomous agents cannot operate fully without TEEs, because TEEs are essential for protecting sensitive information: safeguarding wallet private keys, preventing unauthorized access, and securing Twitter account logins.
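The article does not spell out how this mechanism works. As a generic illustration only (a random spot-check model, not Hyperbolic's published design or parameters), suppose each result is re-executed by a verifier with probability $p$, a node saves cost $C$ by returning a fake result, and a node that is caught forfeits stake $S$. Cheating then has negative expected value whenever the expected penalty exceeds the saving:

$$
\mathbb{E}[\text{gain from cheating}] = C - pS < 0 \iff p > \frac{C}{S}.
$$

For example, if faking an inference saves $C = 1$ unit and the stake at risk is $S = 1{,}000$ units, verifying even $p = 1\%$ of results gives an expected penalty of $pS = 10 > C$, so honest computation is the better strategy without re-running every request.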

How TEE works

A TEE (Trusted Execution Environment) isolates sensitive data in a protected CPU/GPU enclave (secure area) during processing. Only authorized program code can access the enclave's contents; cloud providers, developers, administrators, and other parts of the hardware cannot.

A major use of TEEs is executing smart contracts, especially in DeFi protocols that handle sensitive financial data. Integrating TEEs with DeFai therefore covers traditional DeFi scenarios such as:

Transaction privacy: TEE can hide transaction details such as sender and receiver addresses and transaction amounts. Platforms such as Secret Network and Oasis use TEE to protect transaction privacy in DeFai applications, thereby enabling private payments.

Anti-MEV: when smart contracts execute inside a TEE, block builders cannot see transaction details, which prevents the front-running attacks that generate MEV. Flashbots used TEEs to build BuilderNet, a decentralized block-building network that reduces the censorship risks of centralized block building. Chains such as Unichain and Taiko also use TEEs to give users a better trading experience.

These features can also be achieved with alternatives such as ZKPs or MPC. However, among the three, TEEs are currently the most efficient at executing smart contracts, simply because they are hardware-based.

On the agent side, TEE provides agents with various capabilities:

Automation: TEE can create an independent operating environment for the agent, ensuring that the execution of its strategy is free from human interference. This ensures that investment decisions are based entirely on the independent logic of the agent.

TEEs also let agents control social media accounts, ensuring that their public statements are independent and free from outside influence, which avoids suspicion of paid promotion and other propaganda. Phala is working with the ai16z team to run Eliza efficiently in a TEE environment.

Verifiability: anyone can verify that the agent is running the promised model and producing valid results. Automata and Brevis are collaborating on this capability.

AI Agent Swarms

As more specialized agents with specific use cases (DeFi, gaming, investing, music, etc.) enter the space, better collaboration and seamless communication between agents become critical.

Agent swarm frameworks have emerged as infrastructure to address the limitations of single agents. Swarm intelligence lets agents work together as a team, pooling their capabilities to achieve common goals. A coordination layer abstracts away complexity and makes it easier for agents to collaborate around shared goals and incentives.

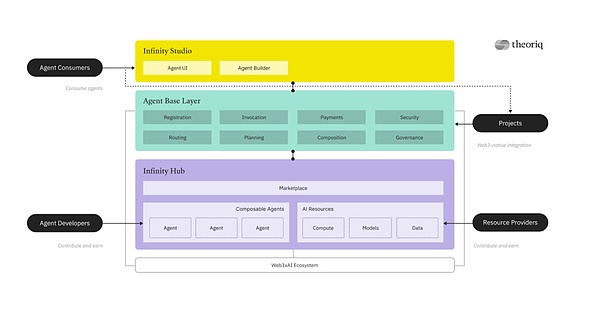

Several Web3 companies, including Theoriq, FXN, and Questflow, are moving in this direction. Of these, Theoriq, which originally launched as ChainML in 2022, has worked toward this goal the longest, with the vision of becoming a universal base layer for agentic AI.

To achieve this, Theoriq handles agent registration, payments, security, routing, planning, and management at the base layer. It also connects supply and demand through Infinity Studio, an intuitive agent-building platform where anyone can deploy their own agents, and Infinity Hub, a marketplace where customers can browse all available agents. In its swarm system, meta-agents select the most suitable agents for a given task, forming "swarms" that pursue a common goal while tracking reputation and contributions to maintain quality and accountability.

The Theoriq token provides economic security: agent operators and community members can stake tokens to signal an agent's quality and trustworthiness, which rewards good service and discourages malicious behavior. The token also serves as a medium of exchange for paying for services and accessing data, and rewards participants who contribute data, models, and more.
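Theoriq's protocol details are not covered here, so the following is a hypothetical sketch of the selection-plus-reputation loop described above: a meta-agent picks, for each required capability, the registered agent with the highest reputation, then updates reputations with an exponential moving average once task outcomes are known. Agent names, capabilities, the neutral starting score of 0.5, and the smoothing factor are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    capabilities: set[str]
    reputation: float = 0.5  # neutral starting score in [0, 1]


def form_swarm(agents: list[Agent], required: list[str]) -> dict[str, Agent]:
    """Pick the highest-reputation agent for each required capability."""
    swarm = {}
    for capability in required:
        candidates = [a for a in agents if capability in a.capabilities]
        if not candidates:
            raise ValueError(f"no agent offers {capability!r}")
        swarm[capability] = max(candidates, key=lambda a: a.reputation)
    return swarm


def record_outcome(agent: Agent, success: bool, alpha: float = 0.2) -> None:
    """Exponential moving average: recent results weigh more than old ones."""
    agent.reputation = (1 - alpha) * agent.reputation + alpha * (1.0 if success else 0.0)


agents = [
    Agent("yield_scout", {"research", "yield"}),
    Agent("executor", {"execution"}),
    Agent("analyst", {"research"}, reputation=0.7),
]
swarm = form_swarm(agents, ["research", "execution"])
print({cap: a.name for cap, a in swarm.items()})
```

The moving average lets a previously trusted agent lose its slot after a few failures, which is one simple way to tie reputation to recent accountability.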

▲ Source: Theoriq

As discussion of AI agents matures into a long-term industry sector led by clearly useful agents, we may see a resurgence of Crypto x AI infrastructure projects with strong price performance. These projects can leverage their venture funding, years of R&D experience, and domain-specific technical expertise to expand across the value chain, potentially developing advanced practical AI agents that outperform 95% of the agents on the market today.

I have always believed that the market develops in three stages: first efficiency, then decentralization, and finally privacy. DeFai, in turn, will develop in four phases.

The first phase of DeFai will focus on efficiency, using tools that improve the user experience so users can complete complex DeFi tasks without deep protocol knowledge. Examples include:

AI that understands user prompts even when the format is imperfect

Quickly execute swaps in the shortest block time

Real-time market research to help users make favorable decisions based on their goals

If this is achieved, it could save users time and effort while lowering the barrier to on-chain trading, potentially creating a "Phantom moment" in the coming months.

In the second phase, agents will trade autonomously with minimal human intervention. Trading agents can execute strategies based on third-party opinions or data from other agents, creating a new DeFi model. Professional or sophisticated DeFi users can fine-tune models to build agents that maximize returns for themselves or their clients, reducing the need for manual monitoring.

In the third phase, users will begin to focus on wallet management issues and AI verification as they demand transparency. Solutions such as TEEs and ZKPs will ensure that AI systems are tamper-proof, immune to third-party interference, and verifiable.

Finally, once these phases are complete, no-code DeFai engineering toolkits or AI-as-a-service protocols could create an agent-based economy for trading models trained on crypto data.

While this vision is ambitious and exciting, there are still several bottlenecks to be solved:

Most current tools are just ChatGPT wrappers, and there are no clear benchmarks for identifying high-quality projects

On-chain data fragmentation drives AI models toward centralization rather than decentralization, and it is unclear how on-chain agents will solve this problem
