Instagram will soon start testing a feature that blurs nude images in direct messages, according to an announcement from Meta on Wednesday. The feature will use “on-device machine learning” to detect nudes and is aimed at stopping sextortion schemes that target teenagers, the company wrote.

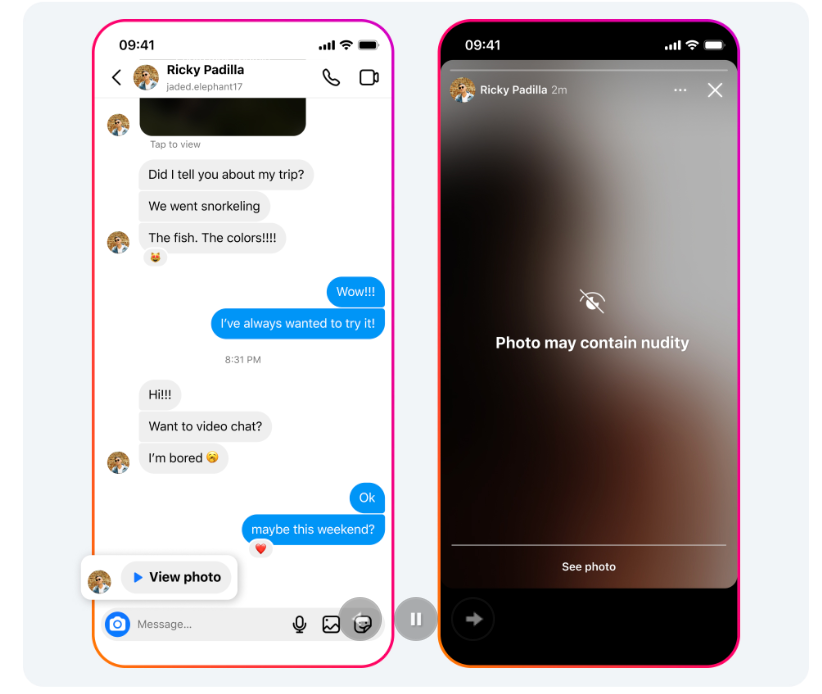

The “nudity protection” feature will detect nudes in direct messages and automatically blur them, both when you receive one and when you try to send one to someone else. Users will also see a popup message that says, “Take care when sharing sensitive photos. The photo is blurred because nudity protection is turned on. Others can screenshot or forward your photos without you knowing. You can unsend a photo if you change your mind, but there's a chance others have already seen it.”

Because the processing is performed on the device, Meta says its detection will still work in end-to-end encrypted chats, and Meta will not have access to the images themselves unless a user reports them.

AN EXAMPLE OF HOW THE FEATURE WILL LOOK TO USERS. IMAGE VIA META

The feature will be turned on by default for users under the age of 18, and adults will get a notification “encouraging” them to turn it on. “This feature is designed not only to protect people from seeing unwanted nudity in their DMs, but also to protect them from scammers who may send nude images to trick people into sending their own images in return,” the announcement says.

“When someone receives an image containing nudity, it will be automatically blurred under a warning screen, meaning the recipient isn’t confronted with a nude image and they can choose whether or not to view it,” Meta wrote. “We’ll also show them a message encouraging them not to feel pressure to respond, with an option to block the sender and report the chat.”

The announcement said Meta will start testing these features “soon.”

Meanwhile, Meta lets AI-generated fake influencers that steal real women’s images run rampant on Instagram and can’t stop even the most obvious catfish romance scams happening on the platform, while on Facebook, people are constantly being tricked by AI-generated content into believing it’s real.

Instagram’s nude detection feature is similar to one previously announced by Apple. The company’s “Sensitive Content Warning” also works by analyzing photos on a user’s device, according to Apple’s documentation. Apple faced a huge backlash when it originally announced a feature that would go even further by scanning photos uploaded to iCloud for child sexual abuse material (CSAM). Apple killed that plan in December 2022.

Meta has made controversial attempts to detect sexual abuse imagery in the past: in 2017 and into 2018, it tested a non-consensual intimate imagery prevention system that asked users to send their own nudes to Facebook to stop them from spreading.

XingChi