Author: Weimar

Talent and energy are becoming the high ground that technology companies must seize in the AI battle.

"This AI talent war is the craziest talent war I have ever seen!" Musk said bluntly on Twitter.

On May 28, xAI, the AI startup founded by the Tesla CEO, announced on its official website that it had raised $6 billion to build a supercomputer, which Musk calls a "supercomputing factory." Naturally, this requires more talent. Musk even said that if xAI did not make offers, those people would be poached by OpenAI.

In this large-model battle, where no one can yet see the exact shape of the future, backing a reliable team is clearly the strongest guarantee an investor can have. This is also a major reason the talent war keeps intensifying.

However, as OpenAI co-founder Sam Altman wrote in an early essay, "Really good people usually don't go looking for jobs, so you need to seek out the ones you like."

The information gap is the key to winning or losing this talent war.

Our first talent map focuses on a field in which the technology giants have invested heavily: embodied intelligence.

If the outcome of this AI war is hard to predict, embodied intelligence may be one of its ultimate forms. Nvidia CEO Jensen Huang has even said that the next wave of AI will be embodied intelligence.

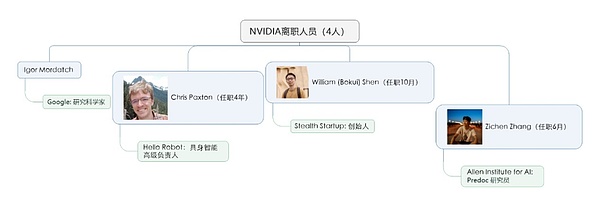

Silicon Rabbit has mapped the embodied intelligence talent at Google and Nvidia, the two "Whampoa Military Academies" (elite training grounds) of American AI, along with the Chinese leaders among them. We hope it serves as a reference for readers who want to start a business or invest in this field.

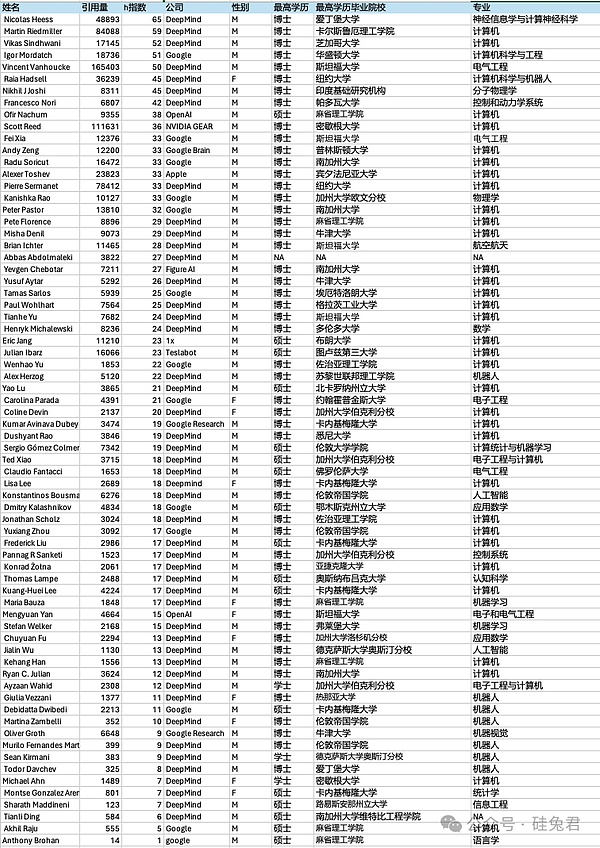

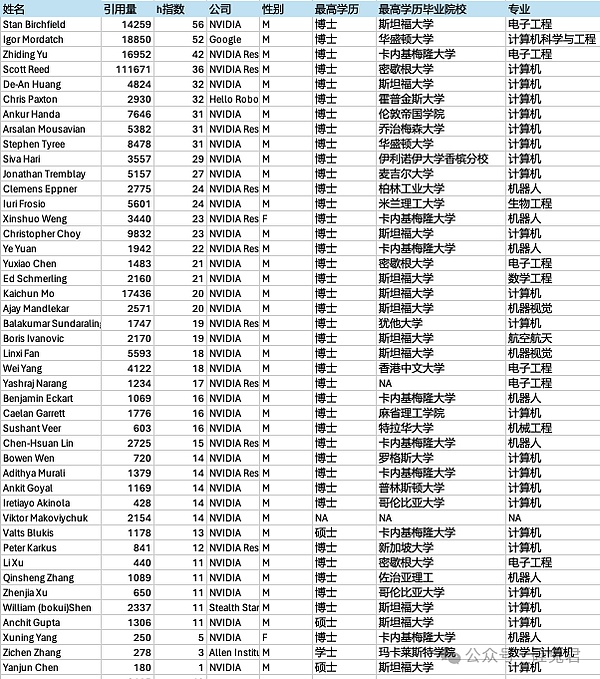

「1」Based on the key embodied intelligence papers and projects of Google and NVIDIA, we identified a total of 114 industry leaders: 60% at Google and 40% at NVIDIA, with far more men (90%) than women (10%).

「2」8% of the researchers have an academic standing comparable to that of members of the U.S. National Academy of Sciences, and 59% are at a high level.

「3」78% of the researchers hold a doctorate as their highest degree, 18% a master's degree, and only 4% a bachelor's degree.

「4」Chinese researchers account for about 27% of the embodied intelligence researchers at Google and NVIDIA.

「5」Stanford has sent the most embodied intelligence experts to Google and Nvidia, followed by CMU and MIT. Together, the three schools account for about one-third of the talent.

For detailed data and the resumes of Chinese experts, see below.

「1」

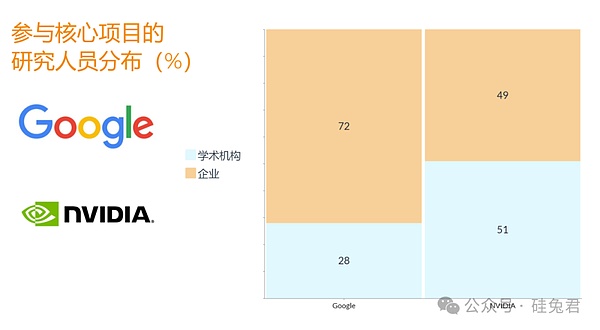

A total of 248 researchers took part in the embodied intelligence research of Google and Nvidia. Excluding 62 researchers without a Google Scholar profile, 60% of the remaining 186 come from industry and 40% from academia.

Specifically, Google relies more on its own in-house research, while Nvidia draws on research resources from multiple top universities. University researchers make up about half (45 people, 51%) of the participants in Nvidia's robotics research, compared with less than one-third (27 people, 28%) at Google.

「2」

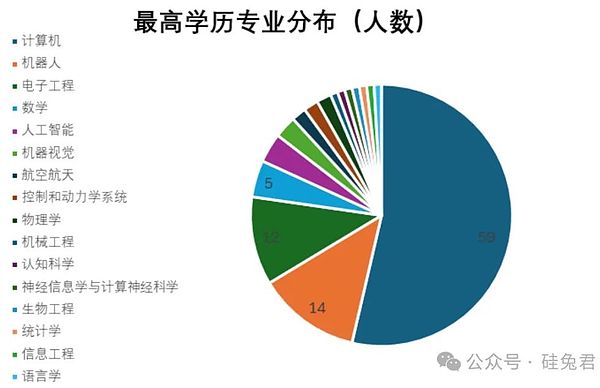

Focusing on industry talent: among the 114 researchers at Google and Nvidia, about 90% are men, about 27% are Chinese, and about 78% hold doctoral degrees.

Google and Nvidia differ somewhat in gender ratio and ethnicity. Google appears more open to women, with 11 female scientists, while Nvidia has only 2.

Nvidia has a higher proportion of Chinese researchers, at 40%, compared with only 20% at Google.

「3」

Stanford has sent the most embodied intelligence leaders to Google and Nvidia, followed by CMU and MIT. Together, the three schools account for about one-third of the talent.

The 114 researchers graduated from 51 universities: 16 from Stanford University, 14 from Carnegie Mellon University, and 7 from the Massachusetts Institute of Technology. These three schools account for about one-third of the total, while most of the other schools have produced only one researcher each.

The vast majority of researchers come from American institutions, but two European schools, both in the UK, have also made a significant impact on embodied intelligence: Imperial College London and the University of Oxford, which together produced 8 of the researchers. Oxford has deep expertise in deep learning, and after acquiring DeepMind, Google partnered with Oxford to bring in deep learning experts. For example, the AlphaGo research and development team included 3 Oxford professors and 4 former Oxford researchers.

「4」

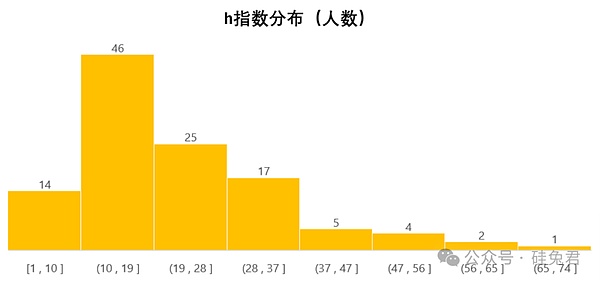

8% of industry researchers have an academic standing comparable to that of members of the U.S. National Academy of Sciences, and 59% are at a high level. Google's researchers are academically stronger than Nvidia's.

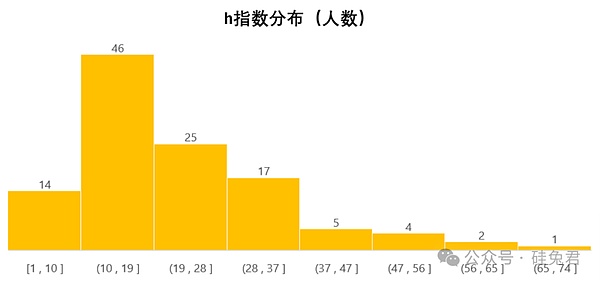

We use citation counts and the h-index to measure academic standing. An author's h-index is the largest number h such that h of their papers have each been cited at least h times. For example, an h-index of 20 means that the author has 20 papers that have each been cited at least 20 times.
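The definition can be made concrete with a short Python sketch (a minimal illustration, not a tool the article itself used): sort an author's per-paper citation counts in descending order and take the largest rank h at which the h-th paper still has at least h citations. The citation counts in the usage line are hypothetical.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited papers first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break  # counts only shrink from here while rank grows, so h is final
    return h


# Hypothetical author with six papers.
print(h_index([25, 8, 5, 3, 3, 0]))  # → 3
```

Note that a single highly cited paper does not raise the h-index much on its own: the 25 citations above still contribute only one rank.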

Generally speaking, an h-index above 10 can be considered relatively high and an h-index of 18 high, while election to the U.S. National Academy of Sciences typically calls for an h-index above 45.

The h-indices of these 114 corporate researchers reflect their strong research standing: 89% have an h-index greater than 10, 59% greater than 18, and 8% even greater than 45.
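The percentages above are simple threshold shares. The following minimal Python sketch shows how such shares could be computed from a list of h-index values; the sample list is hypothetical and is not the article's dataset, and the strict `>` comparison follows the "greater than" wording used here.

```python
def share_above(h_indices: list[int], threshold: int) -> float:
    """Fraction of researchers whose h-index is strictly greater than `threshold`."""
    if not h_indices:
        return 0.0
    return sum(h > threshold for h in h_indices) / len(h_indices)


# Thresholds used in the article: 10 (relatively high), 18 (high),
# 45 (roughly the level of U.S. National Academy of Sciences members).
THRESHOLDS = (10, 18, 45)

# Hypothetical sample of five researchers, not the article's data.
sample = [8, 12, 19, 25, 50]
for t in THRESHOLDS:
    print(f"h > {t}: {share_above(sample, t):.0%}")
```

Because the tiers are nested, each share is at most the one before it, which is why the article's figures fall from 89% to 59% to 8%.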

A closer comparison shows that Google's researchers have greater academic influence than Nvidia's. Google's corporate researchers average 12,596 citations and an h-index of 23, versus 6,418 citations and an h-index of 21 for Nvidia's: a large gap in citations and a smaller one in h-index.

「5」

About 1/10 of the embodied intelligence researchers at Google and NVIDIA left to join other companies.

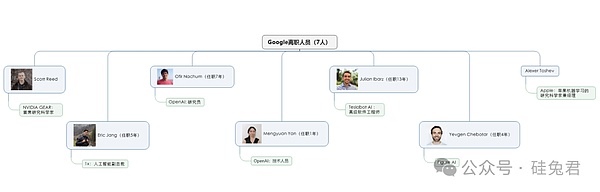

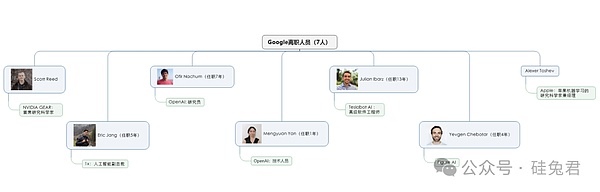

Seven of the 70 Google researchers (10%) have left and now work at companies such as NVIDIA, Apple, Tesla, 1X, OpenAI, and Figure AI. Overall, few people have left Google, and the vast majority of those who remain work at Google DeepMind.

Among them, Scott Reed joined Google DeepMind in 2016 to work on control and generative models, and later joined NVIDIA as the chief research scientist of the GEAR team.

Note: Google researchers who left and where they went

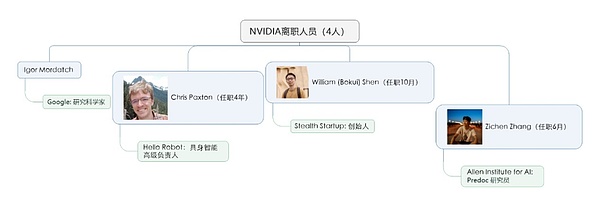

Four of Nvidia's 44 embodied intelligence researchers (9%) have left: one went to Google, one to the Allen Institute for AI, and the other two started their own companies.

Igor Mordatch's research interests include machine learning, robotics, and multi-agent systems. He was a research scientist at OpenAI and a visiting researcher at Stanford University and Pixar Animation Studios. He co-organized the OpenAI Scholar Mentoring Program and served as a mentor and teaching assistant for AI4All, Google CS Research Mentoring Program, and Girls Inc. After leaving Nvidia, he worked as a research scientist at Google DeepMind. He has published about 123 articles, with a Google Scholar h-index of 51 and 18,752 citations.

Note: Nvidia researchers who left and where they went

「6」

Among the researchers whose academic standing is comparable to that of members of the U.S. National Academy of Sciences (h-index of 45 or higher), Google has 6 and Nvidia has 1. They are, in descending order of h-index:

Google

Nicolas Heess

DeepMind research scientist.

In 2011, while pursuing a PhD in neuroinformatics and computational neuroscience at the University of Edinburgh, he published the paper "Learning a Generative Model of Images by Factoring Appearance and Shape." He has worked at DeepMind ever since graduating.

His early research focused on machine vision, machine learning, graphics/augmented reality/games, and he is currently an honorary professor at the Department of Computer Science at UCL, UK.

Published about 224 articles, Google Scholar h-index 65, cited 48917 times.

Martin Riedmiller

DeepMind research scientist.

He studied computer science at the University of Karlsruhe (now the Karlsruhe Institute of Technology) in Germany from 1986 to 1996, earning a doctorate. After graduating, he worked in academia while also starting a business.

From 2002 to 2015, he was a professor at the University of Dortmund, the University of Osnabrück, and the University of Freiburg, where he led the Machine Learning Lab; in 2010 he founded Cognit (Lab for learning machines) in Baden, Germany, which he ran until 2015.

In 2015, he joined Google DeepMind full-time.

His research areas focus on artificial intelligence, neural networks, reinforcement learning, etc. He has published about 188 articles, with a Google Scholar h-index of 59 and 84,113 citations.

Vikas Sindhwani

Google DeepMind Research Scientist, leading a research team focused on solving planning, perception, learning and control problems in the field of robotics.

He holds a PhD in Computer Science from the University of Chicago and a Bachelor of Engineering Physics from the Indian Institute of Technology (IIT) Bombay.

He was in charge of the Machine Learning Group at IBM T.J. Watson Research Center in New York from 2008 to 2015. He joined Google DeepMind in 2015 and has been working there ever since.

Serves as an editorial board member of Transactions on Machine Learning Research (TMLR) and IEEE Transactions on Pattern Analysis and Machine Intelligence; has been an area chair and senior program committee member of NeurIPS, International Conference on Learning Representations (ICLR), and Knowledge Discovery and Data Mining (KDD).

His research interests range from the core mathematical foundations of statistical machine learning to the end-to-end design of building large-scale, safe, and healthy artificial intelligence systems.

He won the Best Paper Award at Uncertainty in Artificial Intelligence (UAI 2013) and the 2014 IBM Pat Goldberg Memorial Award, and was a finalist for the ICRA 2022 Outstanding Planning Paper Award and the ICRA 2024 Best Paper Award in Robot Manipulation.

Published about 137 articles, Google Scholar h-index 52, and cited 17,150 times.

Vincent Vanhoucke

Distinguished Scientist at Google DeepMind and Senior Director of Robotics, he has worked at Google for over 16 years.

He holds a PhD in Electrical Engineering from Stanford University (1999-2003) and an Engineer’s Degree from the Ecole Centrale Paris.

He led the vision and perception research at Google Brain and was responsible for the speech recognition quality team at Google Voice Search. He co-founded the Conference on Robot Learning.

His research covers a wide range of areas including distributed systems and parallel computing, machine intelligence, machine perception, robotics, and speech processing. Published about 64 articles, Google Scholar h-index 50, cited 165519 times.

Raia Hadsell

Senior Director of Research and Robotics at DeepMind, VP of Research.

Joined in 2014.

After receiving a bachelor's degree in religion and philosophy from Reed College (1990-1994), she completed her doctoral research with Yann LeCun at New York University (2003-2008), focusing on machine learning with Siamese neural networks (today often referred to as "triplet losses"), face recognition algorithms, and deep learning for mobile robots in the wild. Her paper "Learning Long-range vision for offroad robots" won an Outstanding Paper Award in 2009.

She did postdoctoral research at the Robotics Institute of Carnegie Mellon University with Drew Bagnell and Martial Hebert, and then became a research scientist in the Vision and Robotics Group at SRI International in Princeton, New Jersey (2009-2014).

Since joining DeepMind, her research has focused on fundamental challenges in artificial general intelligence, including continual and transfer learning, deep reinforcement learning for robotics and control, and neural models of navigation. She is a founder and editor-in-chief of the new open journal TMLR, an executive committee member of CoRL, a member of ELLIS (the European Laboratory for Learning and Intelligent Systems), and one of the founding organizers of NAISys (Neuroscience and Artificial Intelligence Systems). She also advises CIFAR and has served on the executive committee of WiML (Women in Machine Learning).

Published about 107 articles, Google Scholar h-index 45, cited 36265 times.

Nikhil J Joshi

Public information is limited. He received a master's degree in physics from the Indian Institute of Technology and a doctorate in molecular physics from the Tata Institute of Fundamental Research, both in India. He joined Google Brain as a software developer in 2017 after working at several other companies. Google Scholar h-index 45, cited 8320 times.

NVIDIA

Stan Birchfield

Chief Research Scientist and Senior Research Manager at NVIDIA.

Joined in 2016, focusing on the intersection of computer vision and robotics, including learning, perception, and AI-mediated reality and interaction.

Received a Ph.D. in Electrical Engineering from Stanford University in 1999, with a minor in Computer Science.

After graduation, joined Quindi Corporation, a Bay Area startup, as a research engineer to develop intelligent digital audio and video algorithms.

From 2013 to 2016, he worked at Microsoft, developing computer vision and robotics applications and ground-truth navigation systems, and led the development of an automatic camera-switching feature.

Google Scholar h-index 56, citations 14,315 times.

「7」

Some Chinese leaders in the industry

There are 31 Chinese researchers among these 114 corporate researchers. Twelve of them are highlighted below: 4 from Google, 6 from Nvidia, and 1 each from OpenAI and 1X.

Google

Fei Xia (Xia Fei)

Senior Research Scientist at Google DeepMind.

Graduated from Tsinghua University in 2016 and received his Ph.D. from the Department of Electrical Engineering at Stanford University in 2021.

During his Ph.D. studies, he did research internships with Dieter Fox at NVIDIA, Alexander Toshev at Google, and Brian Ichter. After completing his Ph.D. at Stanford University, he joined Google's Robotics team in the fall of 2021.

Research interests include large-scale, transferable robot simulation, learning algorithms for long-horizon tasks, and combining geometric and semantic representations of the environment. His recent work applies foundation models to the decision-making of intelligent agents.

Academic achievements include 5 papers accepted at the ICRA 2023 conference and 4 papers accepted at the CoRL 2022 conference.

Representative works include GibsonEnv, iGibson, and SayCan. iGibson provides large-scale interactive environments for robot learning and uses a combination of imitation learning and model predictive control (MPC) in its robot control strategies. Google Scholar h-index is 33 and the number of citations is 12478.

Andy Zeng

Senior Research Scientist at Google DeepMind.

Received a double bachelor's degree in computer science and mathematics from UC Berkeley, and a Ph.D. in computer science from Princeton University. After graduating with a Ph.D. in 2019, he joined Google Brain, focusing on machine learning, vision, language, and robot learning.

Research interests include robot learning, enabling machines to interact with the world intelligently and improve themselves over time.

Academic achievements include papers published in various conferences such as ICRA, CVPR, CoRL, etc.

Important projects involved include PaLM-E.

Google Scholar h-index is 32 and citations are 12207.

Tianhe Yu

Research scientist at Google DeepMind.

Received a bachelor's degree with highest honors in computer science, applied mathematics, and statistics from UC Berkeley in 2017, and received a Ph.D. in computer science from Stanford University in 2022, with Chelsea Finn as his advisor.

Joined Google Brain after graduating with a Ph.D. in 2022, focusing on machine learning, vision, language, and robot learning.

Research interests include machine learning, perception, and control, especially offline reinforcement learning (i.e., learning from static datasets), multi-task learning, and meta-learning. He is currently exploring the use of foundation models in decision-making problems.

Academic achievements include papers published in various conferences, such as ICRA, CVPR, CoRL, etc.

Important projects involved include PaLM-E.

Google Scholar h-index is 25, with 7726 citations.

Yuxiang Zhou

Senior Research Engineer at Google DeepMind.

Studied for a Master's and PhD in Computer Science at Imperial College London, UK, from 2010 to 2018, with Professor Stefanos Zafeiriou as his supervisor.

From September 2017 to March 2018, he did a research internship in deep reinforcement learning and robotics at Google Brain & DeepMind, and joined Google DeepMind in December 2018 as a research engineer.

Research topics include robotics, third-person imitation learning, and dense shape modeling with statistical deformable models.

Google Scholar h-index is 17 and the number of citations is 3099.

NVIDIA

Linxi Fan (范林熙)

NVIDIA senior research scientist and head of GEAR Lab.

PhD from Stanford Vision Lab under Prof. Fei-Fei Li.

Internships at OpenAI (with Ilya Sutskever and Andrej Karpathy), Baidu AI Lab (with Andrew Ng and Dario Amodei), and MILA (with Yoshua Bengio).

Research explores the frontiers of multimodal foundation models, reinforcement learning, computer vision, and large-scale systems.

Led the creation of Voyager (the first AI agent to play Minecraft proficiently and continuously bootstrap its own capabilities), MineDojo (open-ended agent learning by watching 100,000 Minecraft YouTube videos), Eureka (a five-fingered robotic hand that performs extremely dexterous tasks such as pen spinning), and VIMA (one of the first multimodal foundation models for robot manipulation). MineDojo won the Outstanding Paper Award at NeurIPS 2022.

Google Scholar h-index is 18 and citations are 5619.

Chen-Hsuan Lin

Senior Research Scientist at NVIDIA.

Graduated with a BS in Electrical Engineering from National Taiwan University. Received a PhD in Robotics from Carnegie Mellon University, advised by Simon Lucey and supported by an NVIDIA Graduate Fellowship.

Interned at Facebook AI Research and Adobe Research.

Worked on computer vision, computer graphics, and generative AI applications. Interested in solving problems involving 3D content creation, including 3D reconstruction, neural rendering, generative models, etc.

His research was named one of TIME magazine's Best Inventions of 2023.

Google Scholar h-index is 15 and the number of citations is 2752.

De-An Huang (Huang De'an)

NVIDIA research scientist, professional fields are computer vision, robotics, machine learning, bioinformatics.

Received a Ph.D. in computer science from Stanford University, advised by Fei-Fei Li and Juan Carlos Niebles. During his master's degree at Carnegie Mellon University, he worked with Kris Kitani, and during his undergraduate studies at National Taiwan University, he worked with Yu-Chiang Frank Wang.

He was an intern for Dieter Fox at NVIDIA Seattle Robotics Lab, Vignesh Ramanathan and Dhruv Mahajan at Facebook Applied Machine Learning, Zicheng Liu at Microsoft Redmond Research, and Leonid Sigal at Disney Research in Pittsburgh.

Google Scholar h-index is 32, with 4848 citations.

Kaichun Mo (莫凯淳)

NVIDIA Research scientist at the Seattle Robotics Lab led by Professor Dieter Fox.

Received a PhD in Computer Science from Stanford University, advised by Professor Leonidas J. Guibas, and was affiliated with Stanford's Geometric Computing Group and Artificial Intelligence Laboratory. Before joining Stanford in 2016, he received a bachelor's degree in Computer Science from the ACM Class at Shanghai Jiao Tong University, with a GPA of 3.96/4.30 (ranked 1st of 33). (The ACM Honors Class at Shanghai Jiao Tong University sends as many as 92% of its graduates directly into PhD programs, has won the ACM International Collegiate Programming Contest world championship three times, and has trained 640 of computing's "strongest brains.")

Expertise is in 3D computer vision, graphics, robotics, and 3D deep learning, with a particular focus on object-centric 3D deep learning and structured visual representation learning for 3D data.

Google Scholar h-index is 20, with 17654 citations.

Xinshuo Weng

NVIDIA Research Scientist, working with Marco Pavone.

She received her PhD in Robotics (2018-2022) and her MS in Computer Vision (2016-17) from Carnegie Mellon University, working with Kris Kitani. She received her undergraduate degree from Wuhan University.

She also worked with Yaser Sheikh at Facebook Reality Lab as a research engineer, helping build "realistic telepresence".

Research interests are in generative models and 3D computer vision for autonomous systems. Covers tasks such as object detection, multi-object tracking, re-identification, trajectory prediction, and motion planning. Developed 3D multi-object tracking systems such as AB3DMOT which has >1,300 stars on GitHub.

Google Scholar h-index of 23 and 3,472 citations.

Zhiding Yu (虞之鼎)

Principal Research Scientist and Head of the Machine Learning Research Group at NVIDIA.

Received a PhD in Electrical and Computer Engineering from Carnegie Mellon University in 2017 and a Master's degree in Electrical and Computer Engineering from the Hong Kong University of Science and Technology in 2012. Graduated from the Joint Electrical Engineering (Feng Bingquan Experimental Class) of South China University of Technology in 2008.

Research interests focus on deep representation learning, weakly supervised/semi-supervised learning, transfer learning, and deep structured prediction, as well as their applications in vision and robotics problems.

Winner of the Domain Adaptive Semantic Segmentation track in WAD Challenge@CVPR18. Won the Best Paper Award at WACV15.

Google Scholar h-index is 42 and citations are 17064.

OpenAI

Mengyuan Yan

Member of Technical Staff.

Received a bachelor's degree in physics from Peking University in 2014 and a Ph.D. in electrical and electronic engineering from Stanford University in 2020.

Member of the Interactive Perception and Robot Learning Lab (IPRL), which is part of the Stanford AI Lab, with mentors Jeannette Bohg and Leonidas Guibas.

Research areas include computer vision, machine learning, robotics, and generative models.

Published 28 articles, Google scholar h-index 15, cited 4664 times.

1X Technologies

Eric Jang

Vice President of AI.

Graduated from Brown University with a master's degree in computer science in 2016.

Worked at Google from 2016 to 2022 as a senior research scientist in robotics.

His research focused on applying machine learning to robotics. He developed Tensor2Robot, an ML framework used by the Robotics Operations team and Everyday Robots until TensorFlow 1 was deprecated, and co-led the Brain Moonshot team, which produced SayCan.

Left Google Robotics in April 2022 and joined 1X Technologies (formerly Halodi Robotics), leading the team to complete two important tasks, one of which was to achieve autonomy for the humanoid robot EVE through an end-to-end neural network.

First author of 7 papers, co-authored more than 15 papers, Google scholar h-index of 23, and citations of 11213. Wrote a book "AI is Good for You" to tell the history and future of artificial intelligence.

「8」

We identified Google's and NVIDIA's embodied intelligence talent through key research papers and experimental projects.

Google focuses on foundation model research, and the key research releases its embodied intelligence talent has contributed to include:

SayCan: Able to decompose high-level tasks into executable subtasks.

Gato: Tokenize multimodal data and input it into the Transformer architecture.

RT-1: Input robot trajectory data into the Transformer architecture to obtain discrete action tokens.

PaLM-E: Further improves multimodal performance based on the PaLM general model.

RoboCat: Combining the multimodal model Gato with the robot dataset enables RoboCat to process language, images, actions and other tasks in simulated and physical environments.

RT-2: Combines the RT-1 and PaLM-E models, advancing the robot model from a vision-language model (VLM) to a vision-language-action (VLA) model.

RT-X: On the basis of maintaining the original architecture, it comprehensively improves the five capabilities of embodied intelligence.
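SayCan, the first item in the list above, picks each next step by combining two scores: how useful a language model judges a skill to be for the instruction, and how likely a learned value function (the "affordance") judges the skill to succeed in the current state. The minimal Python sketch below shows only that greedy selection loop. The scoring functions, skill names, and numbers are hypothetical stand-ins for the real language model and value function, the robot's state is approximated by the list of steps already taken, and a "done" pseudo-skill ends the plan.

```python
from typing import Callable

# Hypothetical stand-ins: in SayCan these come from a large language model
# (task relevance) and a learned value function (affordance / success chance).
LlmScore = Callable[[str, list[str], str], float]
AffordanceScore = Callable[[list[str], str], float]


def saycan_plan(
    instruction: str,
    skills: list[str],
    llm_score: LlmScore,
    affordance: AffordanceScore,
    max_steps: int = 10,
) -> list[str]:
    """Greedily pick skills by the product of relevance and feasibility scores."""
    plan: list[str] = []
    for _ in range(max_steps):
        # Combined score: p(skill is useful | instruction, plan) * p(skill succeeds | state)
        best = max(skills, key=lambda s: llm_score(instruction, plan, s) * affordance(plan, s))
        if best == "done":
            break
        plan.append(best)
    return plan


# Toy usage with hard-coded scores (purely illustrative numbers).
SKILLS = ["find a sponge", "pick up the sponge", "bring it to you", "done"]
SCRIPT = ["find a sponge", "pick up the sponge", "bring it to you", "done"]


def toy_llm(instruction: str, plan: list[str], skill: str) -> float:
    # Pretend the language model strongly prefers the next scripted step.
    return 0.9 if skill == SCRIPT[len(plan)] else 0.1 / (len(SKILLS) - 1)


def toy_affordance(plan: list[str], skill: str) -> float:
    # Pretend picking up is only feasible once the sponge has been found.
    if skill == "pick up the sponge" and "find a sponge" not in plan:
        return 0.05
    return 0.8


print(saycan_plan("bring me the sponge", SKILLS, toy_llm, toy_affordance))
```

Multiplying the two scores means a skill the language model likes but the robot cannot currently execute (a low affordance) is pushed down the ranking, which is how SayCan grounds language-model suggestions in what the robot can actually do.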

Together, these models progressively combine autonomous and reliable decision-making, multimodal perception, and real-time precise control, while demonstrating generalization and chain-of-thought reasoning.

Drawing on these papers, we identified a total of 143 Google researchers.

NVIDIA focuses on simulation training, and the experimental projects in which its embodied intelligence talents participate include:

Eureka: Reward mechanism design for reinforcement learning using large language models

Voyager: Using large language models to drive intelligent agents in an open world

MimicPlay: Long-horizon imitation learning by observing human actions

VIMA: General robot manipulation with multimodal prompts

MineDojo: Using Internet-scale data to build open-ended embodied agents

In addition, NVIDIA is focusing on embodied intelligence in 2024 and has officially announced the establishment of the GEAR (Generalist Embodied Agent Research) lab. The lab focuses on four key areas: multimodal foundation models, general-purpose robotics research, foundation agents in virtual worlds, and simulation and synthetic data technology. It aims to carry AI technologies such as large models from the virtual world into the real world.

For Google, this article started from the seven core project papers listed above. Each paper lists its researchers in detail along with their specific contributions.

NVIDIA's research page lists 54 people involved in its robotics projects; adding all authors of the papers published by GEAR, we identified a total of 105 embodied intelligence researchers.

Appendix: List of 100 people in embodied intelligence at Google and NVIDIA

Huang Bo