By Ian King, Bloomberg; Compiled by Baishui, Golden Finance

When a new product sets the tech world on fire, it’s usually a consumer product like a smartphone or a game console. This year, tech watchers zeroed in on an obscure computer part that most people can’t even see. The H100 processor enables a new generation of artificial intelligence tools that promise to transform entire industries, propelling its developer, Nvidia Corp., to become one of the world’s most valuable companies. It shows investors that the buzz around generative AI is translating into real revenue, at least for Nvidia and its most important supplier. Demand for the H100 is so great that some customers have had to wait as long as six months to receive it.

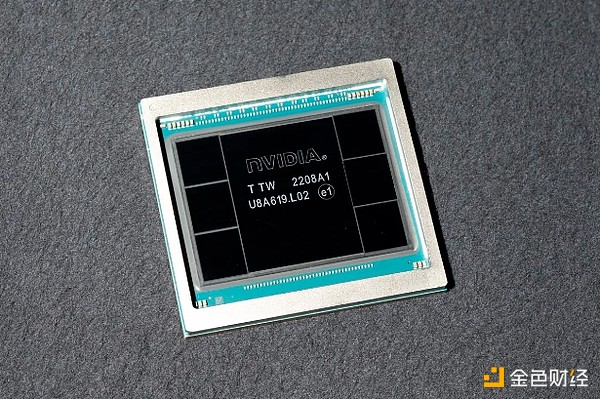

1. What is Nvidia’s H100 chip?

Named in tribute to computer science pioneer Grace Hopper, the H100 is a souped-up version of the graphics processing unit that’s typically installed in PCs to help video game players get the most realistic visual experience possible. It includes technology that turns clusters of Nvidia chips into single units that can process large amounts of data and perform high-speed calculations. That makes it well suited for the energy-intensive task of training the neural networks that generative AI relies on. The company, founded in 1993, pioneered this market with investments that began nearly two decades ago, betting that the ability to work in parallel would one day make its chips valuable in applications beyond gaming.

Nvidia H100 Photographer: Marlena Sloss/Bloomberg

2. Why is the H100 so special?

Generative AI platforms learn to do tasks such as translating text, summarizing reports and synthesizing images by absorbing large amounts of existing material. The more they see, the better they get at recognizing human speech or writing a job application. They develop through trial and error, making billions of attempts to reach proficiency and consuming vast amounts of computing power in the process. Nvidia says the H100 is four times faster than its predecessor, the A100, at training so-called large language models (LLMs) and 30 times faster at responding to user prompts. Since launching the H100 in 2023, Nvidia has announced versions that it says are faster still: the H200 and the Blackwell B100 and B200. The growing performance advantage is crucial for companies racing to train LLMs for new tasks. Many of Nvidia’s chips are seen as key to developing artificial intelligence, so much so that the U.S. government has restricted sales of the H200 and several less powerful models to China.

3. How did Nvidia become a leader in AI?

The Santa Clara, California-based company is a global leader in graphics chips, the components in computers that generate the images on a screen. The most powerful of these chips consist of thousands of processing cores that can execute multiple threads of calculation simultaneously, modeling complex 3D renderings such as shadows and reflections. Nvidia engineers realized in the early 2000s that they could redesign these graphics accelerators for other applications by breaking the tasks into smaller parts and then processing them simultaneously. AI researchers found that by using this type of chip, their work could finally become practical.
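The idea of breaking a task into smaller parts and processing them at the same time can be sketched in a few lines of Python. This is only an illustration of the decomposition pattern, not Nvidia's implementation: the function names `scale_chunk` and `parallel_scale` are hypothetical, and Python threads stand in for the thousands of GPU cores described above without delivering comparable speed.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_chunk(chunk, factor):
    """Apply the same small operation to every element of one chunk."""
    return [x * factor for x in chunk]


def parallel_scale(values, factor, workers=4):
    """Split `values` into chunks and hand each chunk to a separate worker.

    Hypothetical helper for illustration; a GPU would do this across
    thousands of cores rather than a handful of threads.
    """
    # Ceiling division so that every element lands in exactly one chunk.
    size = max(1, (len(values) + workers - 1) // workers)
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in chunk order, so the output order is stable.
        parts = list(pool.map(scale_chunk, chunks, [factor] * len(chunks)))
    # Stitch the independently processed pieces back together.
    return [x for part in parts for x in part]
```

Each chunk is processed independently of the others, which is the property that lets the same work be spread across many cores.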

4. Does Nvidia have any real competitors?

Nvidia currently controls about 92% of the data center GPU market, according to market research firm IDC. Dominant cloud computing providers such as Amazon.com Inc.’s AWS, Alphabet Inc.’s Google Cloud and Microsoft Corp.’s Azure are trying to develop their own chips, as are Nvidia’s rivals Advanced Micro Devices Inc. and Intel Corp. So far, these efforts have not made much progress in the AI accelerator market, and Nvidia’s growing dominance has become a concern for industry regulators.

5. How can Nvidia stay ahead of its competitors?

Nvidia has updated its products, including the software that supports the hardware, at a pace that no other company can match. The company has also designed a variety of cluster systems to help its customers buy the H100 in bulk and deploy it quickly. Chips like Intel's Xeon processors are capable of more complex data processing, but they have fewer cores and are much slower at processing the large amounts of information typically used to train AI software.

6. How do AMD and Intel compare to Nvidia in AI chips?

AMD, the second-largest maker of computer graphics chips, launched a version of its Instinct series last year aimed at conquering a market dominated by Nvidia's products. In early June, at the Taipei International Computer Show in Taiwan, AMD CEO Lisa Su announced that an upgraded version of its MI300 AI processor would be available in the fourth quarter and said more products would be launched in 2025 and 2026, showing the company's commitment to this business area. Intel is currently designing chips for AI workloads, but it acknowledges that demand for graphics chips for data centers is currently growing faster than for server processor units, which have traditionally been its strong suit. Nvidia's advantage isn't just in the performance of its hardware. The company invented a language called CUDA that's used with its graphics chips, allowing them to be programmed to do the types of work that underpin AI programs.

7. What product does Nvidia plan to release next?

The most anticipated product is Blackwell, and Nvidia says it expects to generate "significant" revenue from the new product line this year. However, the company has hit snags in its development that will slow the release of some of its products.

Meanwhile, demand for its H-series hardware continues to grow. CEO Jensen Huang has been acting as an ambassador for the technology, trying to entice governments and private businesses to buy in early or risk being left behind by those embracing AI. Nvidia also knows that once customers choose its technology for their generative AI projects, it will have an easier time selling them upgrades than competitors will have luring them away.
