In recent months, artificial intelligence products have emerged one after another. News headlines now regularly carry stories like these: an AI leaked company code that cannot be deleted; an AI application violated the privacy of users' facial data; a large-model product was found to be leaking private conversations; and so on.

However, a more profound problem had quietly existed long before the large-model craze broke out: Deepfake, a technology that uses deep learning to seamlessly splice anyone in the world into videos or photos they never actually took part in. Its emergence made the term "AI face-swapping" popular in 2018.

Even now, in 2024, the potential threat of Deepfake has not been effectively contained.

Artificial intelligence fraud has become a reality, causing concern.

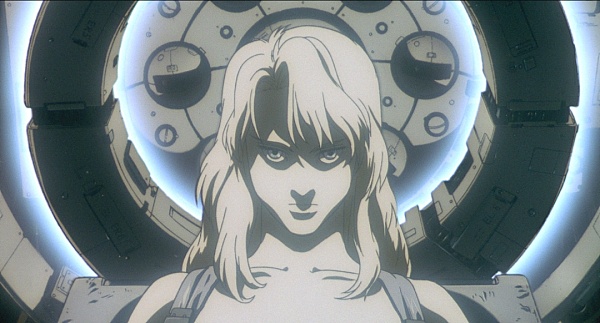

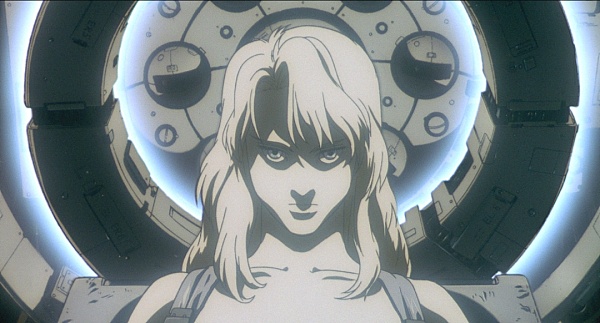

The animated film "Ghost in the Shell", released in 1995, depicts such a plot: an artificial intelligence named the "Puppet Master" knows how to impersonate other identities, confuse people, and control human thoughts and behaviors, an ability that lets it move without a trace through the Internet and virtual space. Although this scenario appears only in movies, if you have long been influenced by the warnings of people like Elon Musk or Sam Altman, you will inevitably imagine that artificial intelligence, if left unchecked, could evolve into a "Skynet"-style threat to humanity.

"Ghost in the Shell" Puppet Master

Fortunately, this concern is not widely shared by artificial intelligence developers. Many leading figures in academia, such as Turing Award winner Joseph Sifakis, have dismissed the idea in interviews. At present we do not even have artificial intelligence that can drive safely on the road, let alone match human intelligence.

But this does not mean that artificial intelligence is harmless.

AI has every potential to become one of the most destructive tools humans have ever created. A financial executive who recently fell victim to an artificial intelligence fraud understands this all too well: the loss was as high as 25 million US dollars. The crime occurred not on the other side of the ocean but in the Hong Kong Special Administrative Region, very close to us. In the face of such artificial intelligence crimes, do we have any countermeasures?

Change the day

On February 5, Hong Kong broadcaster Radio Television Hong Kong reported that an unnamed financial director had received an email claiming to be from his British colleague and proposing a confidential transaction. The financial director was not a gullible person and suspected phishing at first sight.

However, the sender insisted on arranging a Zoom video conference. When the Hong Kong financial director joined the conference, he saw the faces and heard the voices of several familiar colleagues. With his doubts dispelled, he began preparing the transaction and followed the instructions of his British colleagues. In the end, he transferred 200 million Hong Kong dollars (currently about 184 million yuan) in 15 transfers to 5 local accounts.

The abnormality was discovered only when another employee checked with headquarters. It turned out that the British office had not initiated this transaction and had received no payment. Imagine the financial director's extreme regret and guilt on learning that he had been deceived. The investigation found that the only real participant in the video conference was the Hong Kong financial director; the British financial director and the other colleagues were all simulated with Deepfake artificial intelligence technology.

With only a small amount of publicly available data, a basic set of artificial intelligence programs, and cunning deception, this group of scammers managed to obtain enough cash to buy a luxury superyacht.

At this point, some people may sneer at this story and assert that the financial director was simply careless. After all, the Deepfake joke videos we usually see on Douyin and Bilibili are easy to tell apart from the real thing and rarely pass for genuine.

However, the actual situation may not be so simple. The latest research shows that people cannot reliably identify deepfakes, which means that on many occasions most people rely only on intuition to judge what they see and hear. Even more troubling, the study also found that people are more likely to mistake deepfake videos for real ones than to mistake real videos for fakes. In other words, today's deepfake technology is sophisticated enough to deceive viewers easily.

Deep-learning + Fake

Deepfake is a combination of the words "deep-learning" and "fake".

Therefore, the core element of Deepfake technology is machine learning, which makes it possible to produce deep fake content quickly and at lower cost. To create a deepfake video of someone, the creator first trains a neural network on large amounts of real video footage of that person, giving it a realistic "understanding" of how the person looks from multiple angles and under different lighting conditions. The creator then combines the trained network with computer graphics techniques to superimpose a replica of that person onto another person.

Many people believe that a class of deep learning algorithms called generative adversarial networks (GANs) will become the main driving force behind the future development of Deepfake technology. GAN-generated facial images are nearly indistinguishable from real faces. The first investigative report into the deepfake field devotes a chapter to GANs, predicting that they will enable anyone to create sophisticated deepfake content.
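For readers who want the idea behind the name, the standard GAN training objective can be written as a two-player game. This is the textbook formulation, not something taken from the report mentioned above; the symbols $G$ (generator), $D$ (discriminator), $p_{\text{data}}$ (distribution of real images) and $p_z$ (distribution of random noise) are introduced here only for illustration.

$$
\min_{G}\,\max_{D}\; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator $D$ tries to score real faces high and generated faces low, while the generator $G$ tries to produce faces that $D$ scores as real. As the two improve against each other, the generated faces become harder to tell from real ones.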

At the end of 2017, a Reddit user named "deepfakes" used the faces of many well-known movie stars and even political figures to generate pornographic videos and published them online. These fake videos spread across social networks almost instantly. A large number of new Deepfake videos followed, but corresponding detection technology did not begin to appear until a few years later.

However, there are positive examples. Audiences first encountered this kind of digital face replacement on screen when the late actor Paul Walker was "resurrected" in "Fast and Furious 7." The 2023 Lunar New Year movie "The Wandering Earth 2" also used CG technology to bring back the popular "Uncle Tat," Ng Man-tat. In the past, however, effects like these took an entire studio of experts a year to create.

Today, the process is much faster. Even ordinary people who know nothing about the technology can create an "AI face-swap" video in a few minutes.

Concerns about deepfakes have led to a proliferation of countermeasures. Back in 2020, social media platforms including Meta and X banned such deepfake content from their networks. Experts have also been invited to speak on defense methods at major computer vision and graphics conferences.

Now that Deepfake criminals have begun to deceive the world on a large scale, how should we fight back?

Multiple means to defend against Deepfake risks

The primary strategy is to "poison" artificial intelligence. To create a realistic deepfake, an attacker needs massive amounts of data, including numerous photos of the target person, numerous video clips showing changes in their facial expressions, and clear voice samples. Most deepfake techniques use material shared publicly by individuals or businesses on social media.

However, there are now programs such as Nightshade and PhotoGuard that can modify these files in ways that are imperceptible to humans, thereby rendering deepfakes ineffective. For example, Nightshade adds subtle perturbations that mislead artificial intelligence, such as causing it to misjudge the facial areas in photos. This misidentification can disrupt the learning mechanism behind building a Deepfake.
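The general idea of an imperceptible but misleading change can be illustrated with a toy example. The sketch below is not how Nightshade or PhotoGuard actually work; it assumes a hypothetical linear "face detector" with made-up weights and an 8-value "image", and simply nudges every pixel by a small bounded amount in the direction that lowers the detector's score.

```python
import math


def score(weights, pixels, bias=0.0):
    """Toy 'face detector': logistic score in (0, 1) from a linear model."""
    z = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def cloak(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    cloaked = []
    for w, p in zip(weights, pixels):
        if w > 0:
            step = -epsilon
        elif w < 0:
            step = epsilon
        else:
            step = 0.0
        # Keep pixel values inside the valid [0, 1] range.
        cloaked.append(min(1.0, max(0.0, p + step)))
    return cloaked


# Hypothetical detector weights and pixel values, chosen only for illustration.
weights = [2.0, -1.5, 3.0, 0.5, -2.5, 1.0, -0.5, 2.5]
pixels = [0.6, 0.4, 0.7, 0.5, 0.3, 0.6, 0.5, 0.7]
bias = -3.0
epsilon = 0.05

protected = cloak(weights, pixels, epsilon)
max_change = max(abs(a - b) for a, b in zip(pixels, protected))
before = score(weights, pixels, bias)
after = score(weights, protected, bias)

print(f"largest pixel change: {max_change:.3f}")
print(f"detector score before: {before:.3f}, after: {after:.3f}")
```

No single pixel moves by more than `epsilon`, yet the detector's confidence drops. Real tools target far larger neural networks and choose their perturbations much more carefully, but the trade-off is the same: a small change for the human eye, a large change for the model.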

Applying such protective processing to all photos and videos that you or your company publish online can effectively hinder Deepfake cloning. However, this approach is not foolproof and is more of a long game. As artificial intelligence gradually improves its ability to identify tampered files, anti-deepfake programs will need to keep developing new methods to remain effective.

To build a stronger line of defense, we need to stop relying solely on authentication methods that are easily compromised. In the case described in this article, the financial director treated the video call as conclusive proof of identity and failed to take additional steps, such as calling other staff at headquarters or the UK branch for secondary confirmation. In fact, some programs already use private-key encryption to make online identity verification reliable. Multi-factor authentication can significantly reduce the likelihood of this type of fraud, and all businesses should start implementing it immediately.
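A second verification step that does not depend on faces or voices could look like the sketch below. It is only an illustration under stated assumptions: the source mentions private-key encryption, whereas this example swaps in a simpler shared-secret challenge-response built from Python's standard `hmac` module, and the function names and the sample payment request are hypothetical.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Verifier side: a fresh random nonce so old responses cannot be replayed."""
    return secrets.token_bytes(16)


def respond(shared_key: bytes, challenge: bytes, request: str) -> str:
    """Requester side: bind the payment request to the challenge with HMAC-SHA256."""
    message = challenge + request.encode("utf-8")
    return hmac.new(shared_key, message, hashlib.sha256).hexdigest()


def verify(shared_key: bytes, challenge: bytes, request: str, response: str) -> bool:
    """Verifier side: recompute the expected response and compare in constant time."""
    expected = respond(shared_key, challenge, request)
    return hmac.compare_digest(expected, response)


# Hypothetical setup: the key was exchanged in advance over a trusted channel.
office_key = secrets.token_bytes(32)
request = "Transfer HKD 200,000,000 to 5 local accounts"

challenge = new_challenge()
genuine = respond(office_key, challenge, request)
forged = respond(secrets.token_bytes(32), challenge, request)  # impostor lacks the key

print("genuine colleague accepted:", verify(office_key, challenge, request, genuine))
print("impostor accepted:", verify(office_key, challenge, request, forged))
```

However convincing a cloned face or voice may be, it cannot produce a valid response without the key, and the response is tied to the exact request being approved.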

So, the next time you are in a video conference, or receive a call from a colleague, relative or friend, please remember that the person on the other side of the screen may not be a real person. Especially when you are asked to secretly transfer $25 million to an unknown set of bank accounts, the least you can do is give your boss a call first.

Joy
