Author: Kerman Kohli, founder of DeFi Weekly; Translator: Golden Finance xiaozou

Recently, Starkware started their highly anticipated airdrop. Like most airdrops, this one also sparked a lot of controversy. Sadly, this is no longer a surprise.

So, why does this happen again and again? You may hear some opinions like this:

· Insiders just want to cash out billions of dollars and walk away

· The team doesn’t know what to do and doesn’t have the right advisors

· Whales should be given more priority because they bring TVL

· Airdrops are about the democratization of cryptocurrencies

· Without farmers, there is no usage or stress testing of the protocol

· Inconsistent airdrop incentives will continue to have strange side effects


None of these views is wrong, but none of them is entirely correct on its own. Let's do a little analysis to make sure we have a comprehensive understanding of the issue at hand.

There is a basic tension when designing an airdrop: you have to trade off the following three factors:

· Capital efficiency

· Decentralization

· Retention

Airdrops often do well on one dimension but rarely strike a good balance between two, let alone all three. Retention is the hardest dimension: retention rates above 15% are practically unheard of.

· Capital efficiency is about the criteria that determine how many tokens each participant receives. The more efficiently you distribute your airdrop, the closer it comes to liquidity mining (one token for every dollar deposited), which benefits whales.

· Decentralization is about who gets your tokens and under what criteria. Recent airdrops have adopted arbitrary criteria in order to spread tokens across as many users as possible. This is usually a good thing, because it keeps you out of legal trouble and earns you more clout for making people rich.

· Retention is defined as how many users continue to hold the airdropped tokens after the airdrop. In a sense, it measures how well your users are aligned with your intent: the lower the retention rate, the less aligned they are. With a 10% retention rate as the industry benchmark, only 1 in 10 addresses is there for the right reasons!

Retention rates aside, let’s examine the first two factors in more detail: capital efficiency and decentralization.

Capital efficiency

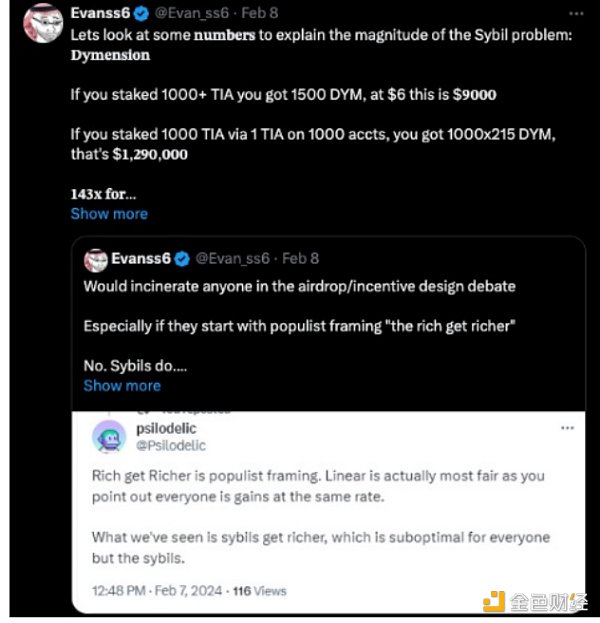

To understand capital efficiency, let me introduce a new term: the "sybil coefficient". It measures how much more you gain by splitting one dollar of capital across a certain number of accounts.


The result of your sybil coefficient ultimately determines how wasteful the airdrop is. If your sybil coefficient is 1, technically this means you are running a liquidity mining scheme, which will piss off a lot of users.

However, when you get to a case like Celestia, where the sybil coefficient balloons to 143, you get extremely wasteful behavior and rampant farming.
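One way to make the sybil coefficient concrete is the sketch below. It assumes the coefficient is the ratio between what a given amount of capital earns when split evenly across many wallets and what it earns in a single wallet; that definition is an assumption made for illustration, and both allocation rules (`linear_rule`, `flat_rule`) are hypothetical rather than any project's actual criteria.

```python
from typing import Callable


def sybil_coefficient(rule: Callable[[float], float], capital: float, wallets: int) -> float:
    """Tokens earned by splitting `capital` evenly across `wallets` accounts,
    divided by tokens earned by keeping it all in one account.
    This definition is an assumption made for illustration."""
    single = rule(capital)
    if single == 0:
        raise ValueError("rule pays nothing for this capital; ratio undefined")
    split = wallets * rule(capital / wallets)
    return split / single


def linear_rule(deposit: float) -> float:
    # Hypothetical liquidity-mining style rule: one token per dollar deposited.
    return deposit


def flat_rule(deposit: float) -> float:
    # Hypothetical rule: 100 tokens to every wallet holding at least $10.
    return 100.0 if deposit >= 10 else 0.0


print(sybil_coefficient(linear_rule, 1_000, 50))  # 1.0
print(sybil_coefficient(flat_rule, 1_000, 50))    # 50.0
```

Under the linear rule, splitting capital gains nothing, so the coefficient stays at 1. Under the flat per-wallet rule, every extra wallet multiplies the payout, which is the kind of waste a high coefficient points to.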

Decentralization

This brings us to the second factor: decentralization. You ultimately want to help the "little guys," the real users who jump at the chance to use your product early, even if they aren't rich. If your sybil coefficient is too close to 1, you won't be able to give anything to the "little guys" and are more likely to benefit the "whales".

This is where the airdrop debate gets heated. There are three types of users here:

· The "little guy" who makes a quick buck and then moves on (possibly using multiple wallets in the process).

· The "little guy" who likes your product and sticks around.

· Industrial-scale farmers who operate like a crowd of "little guys". They will definitely take most of your incentive tokens at this stage, then sell them before moving on to the next target.

The third category is the worst kind of user, the first is acceptable, and the second is the best. Telling these three types of users apart is the big challenge of airdrops.

So how do we solve this problem? While I don't have a concrete solution, I do have an idea that I've spent several years thinking about and observing firsthand: project segmentation.

Let me explain what I mean. Think about the fundamental problem: you have all your users, and you need to be able to divide them into groups based on some value judgment. The value here is observer-specific and will therefore vary from project to project. Relying on some "magic airdrop filter" will never be enough. By studying the data, you can start to understand what your users really look like and make data-driven decisions about how to segment your airdrop.

Why doesn't anyone do this? I’ll talk about this in a future article, but it’s a hard data problem that requires data expertise, time, and an investment of money. Not many teams are willing or able to do this.

Retention rate

The last dimension is retention rate. Before we discuss retention rate, it’s a good idea to define what retention rate means. I summarize it as follows:

Number of people still holding the airdropped tokens / Number of people who received the airdrop
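As a small sketch of that ratio, counting retention as holders divided by recipients, and assuming you already have a mapping from recipient address to current token balance (the addresses and balances below are made up):

```python
def retention_rate(balances: dict[str, float]) -> float:
    """Share of airdrop recipients whose current balance is above zero."""
    if not balances:
        raise ValueError("no recipients")
    holders = sum(1 for balance in balances.values() if balance > 0)
    return holders / len(balances)


# Made-up example data: recipient address -> current token balance.
example = {"0xaaa": 120.0, "0xbbb": 0.0, "0xccc": 0.0, "0xddd": 35.5}
print(retention_rate(example))  # 0.5
```

Treating any non-zero balance as "retained" is a simplification; a stricter version could require that a recipient still holds some minimum share of what they received.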

The typical mistake most projects make is treating an airdrop as a one-off.

To prove this, I thought some data might help! Luckily, Optimism has actually performed multiple rounds of airdrops! I was hoping to find some simple Dune dashboard that would give me the retention data I wanted, but unfortunately, I was wrong. So, I decided to roll up my sleeves and collect the data myself.

I don't want to overcomplicate things. I want to understand one simple thing: how the percentage of users holding an OP balance has changed over successive airdrops.

I went to GitHub and found a list of all addresses that participated in the Optimism airdrops. Then I built a small scraper to fetch the OP balance of each address in the list, and did some data cleaning.

Before we continue, it is worth noting that each OP airdrop is independent of the previous one. There is no reward or link for holding on to tokens from a previous airdrop. I know the reason why, but let's carry on.

First round of airdrop

A total of 248,699 recipients received airdrop tokens, based on the following criteria:

· OP mainnet users (92,000 addresses)

· Repeat OP mainnet users (19,000 addresses)

· DAO voters (84,000 addresses)

· Multisig signers (19,500 addresses)

· Gitcoin donors on L1 (24,000 addresses)

· Users priced out of Ethereum (74,000 addresses)

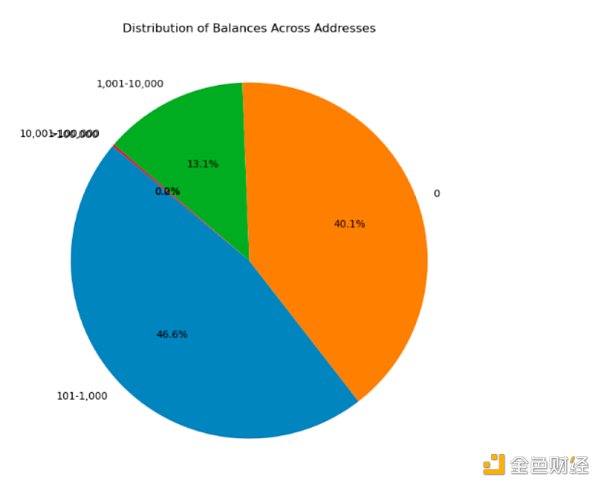

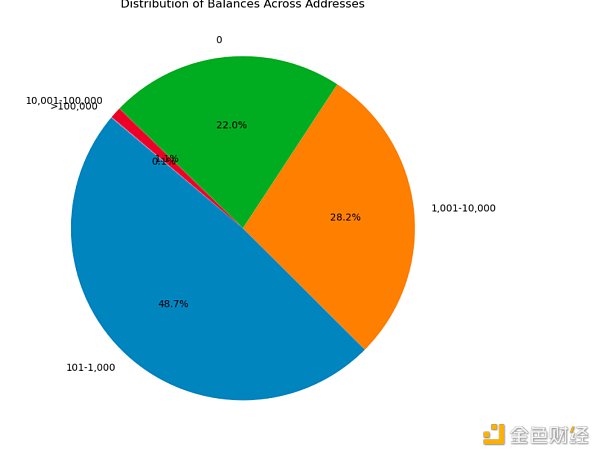

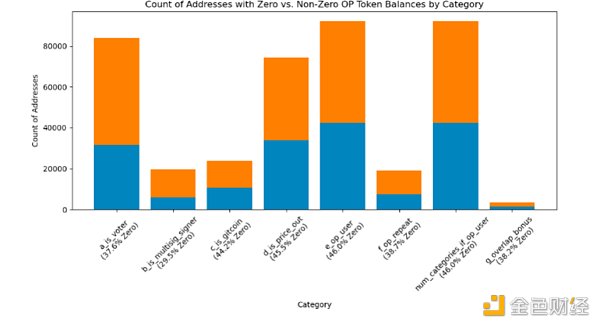

After analyzing all these users and their OP balances, I got the following distribution. A balance of 0 indicates that the user dumped their tokens, since unclaimed OP tokens were sent directly to eligible addresses at the end of the airdrop.

Anyway, the first airdrop was surprisingly good compared to previous airdrops I observed! Only 40% of people have a zero balance, which is a very good result.


I then wanted to understand how each criterion affected whether a user was likely to keep their tokens. The only problem with this approach is that an address may fall into more than one category, which skews the data. Don't take it at face value; treat it as a rough indicator:

One-time OP users had the highest share of zero-balance addresses, followed closely by users priced out of Ethereum. Clearly, these were not the best segments to distribute tokens to. Multisig signers had the lowest share, which I think is a good indicator, since it isn't obvious to airdrop farmers to set up a multisig and sign transactions through it just to farm an airdrop.

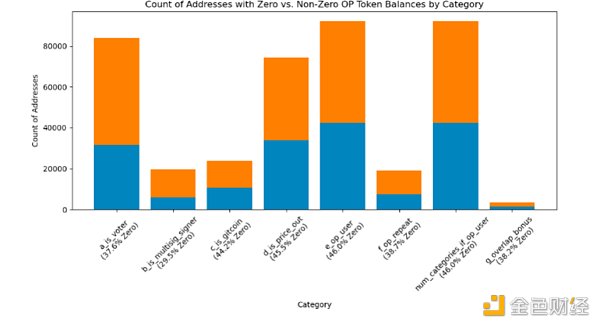
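The per-criterion breakdown described above can be sketched roughly as follows. The criterion names, addresses, and balances are made up for illustration, and treating an address with no recorded balance as zero is an assumption of this sketch.

```python
def zero_balance_share_by_criterion(
    criteria: dict[str, set[str]], balances: dict[str, float]
) -> dict[str, float]:
    """For each criterion, the fraction of its addresses whose balance is zero.

    An address that qualifies under several criteria is counted under each
    of them, so the shares overlap and are only rough indicators.
    """
    shares = {}
    for name, addresses in criteria.items():
        if not addresses:
            continue  # skip empty criteria to avoid dividing by zero
        # Assumption: an address with no recorded balance counts as zero.
        zero = sum(1 for address in addresses if balances.get(address, 0.0) == 0)
        shares[name] = zero / len(addresses)
    return shares


# Made-up example: "0xb" qualifies under both criteria.
criteria = {
    "multisig_signers": {"0xa", "0xb"},
    "dao_voters": {"0xb", "0xc", "0xd", "0xe"},
}
balances = {"0xa": 10.0, "0xb": 5.0, "0xc": 0.0, "0xd": 0.0, "0xe": 0.0}
print(zero_balance_share_by_criterion(criteria, balances))
```

Because `0xb` is counted under both criteria, the two shares cannot simply be averaged into a total; this is the overlap that skews the per-criterion numbers.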

Second round of airdrop

This round of airdrop was allocated to 307,000 addresses, but in my opinion it was far less thoughtful. The criteria were:

· Governance delegation rewards based on the amount of OP delegated and the length of time it was delegated.

· Partial gas rebates for active Optimism users who paid more than a certain amount in gas fees.

· Further bonuses based on additional attributes related to governance and usage.

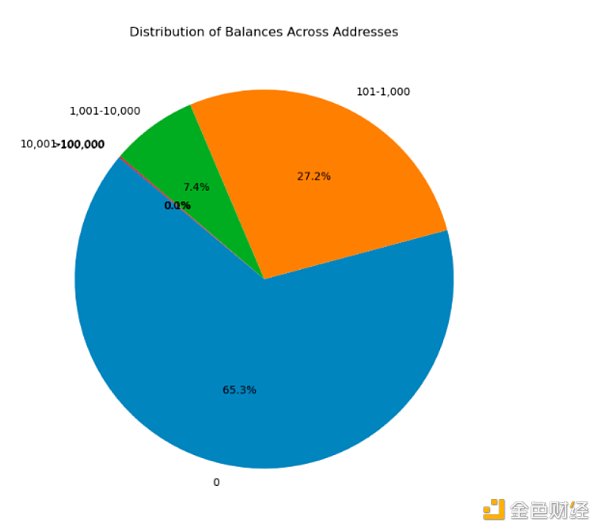

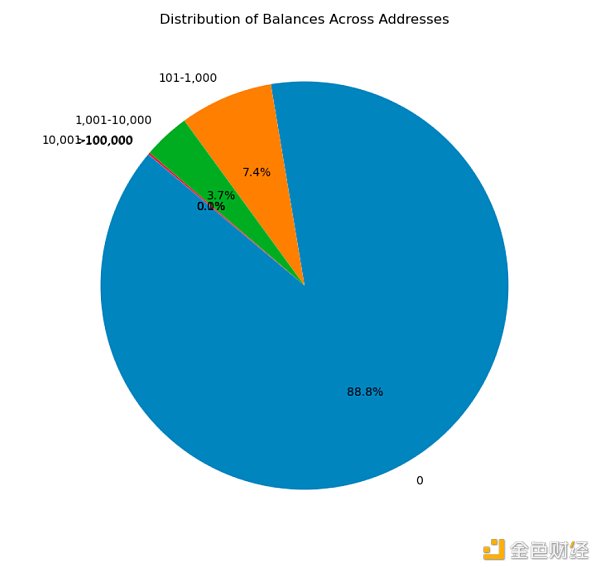

To me, these are poor criteria, because governance voting is easy for bots to do and fairly predictable. As we'll see below, my intuition wasn't far off. Even so, I was surprised at how low the retention rate was!


Nearly 90% of addresses hold a zero OP balance! These are the kind of retention numbers people are used to seeing from airdrops. I'd love to dig deeper into this, but I'm keen to move on to the remaining airdrops.

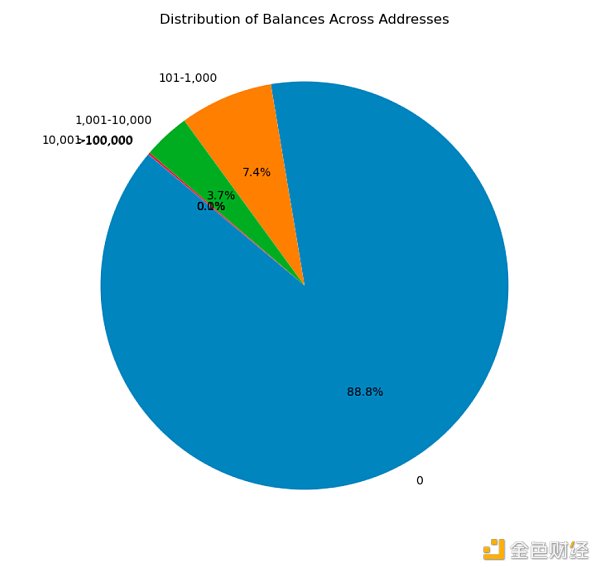

Third round of airdrop

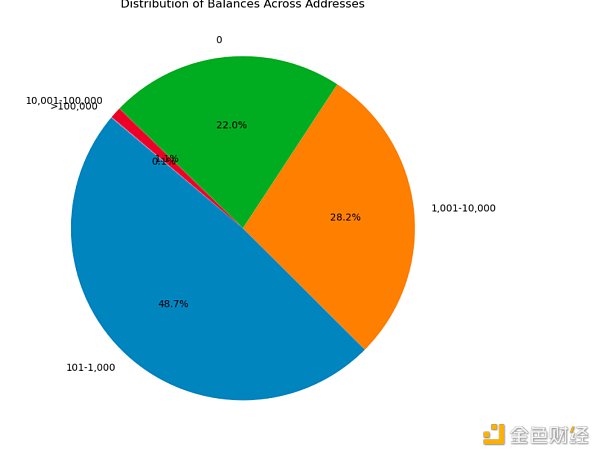

This is the best-executed airdrop by the OP team so far. The criteria are more sophisticated than before and include an element of "linearization". The tokens were distributed to approximately 31,000 addresses, so although this airdrop was smaller, it was more effective.

· OP delegated × days delegated = cumulative OP delegated (e.g. 20 OP delegated for 100 days: 20 × 100 = 2,000 cumulative OP delegated).

· The delegate must have voted on-chain in OP governance during the snapshot period (from 0:00 UTC on January 20, 2023 to 0:00 UTC on July 20, 2023).
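The cumulative-delegation arithmetic can be sketched as below. How Optimism actually tracked delegation periods is not described here, so representing them as `(amount, start, end)` tuples is an assumption of this sketch, and the dates are chosen only to reproduce the 100-day example.

```python
from datetime import date


def cumulative_delegated_op(delegations: list[tuple[float, date, date]]) -> float:
    """Sum of (OP amount x days delegated) over (amount, start, end) periods."""
    return sum(amount * (end - start).days for amount, start, end in delegations)


# The example from the text: 20 OP delegated for 100 days.
periods = [(20, date(2023, 1, 20), date(2023, 4, 30))]
print(cumulative_delegated_op(periods))  # 2000
```

Because the score scales with both amount and time, a wallet that delegates briefly just before a snapshot earns far less than one that delegated the same amount for months.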

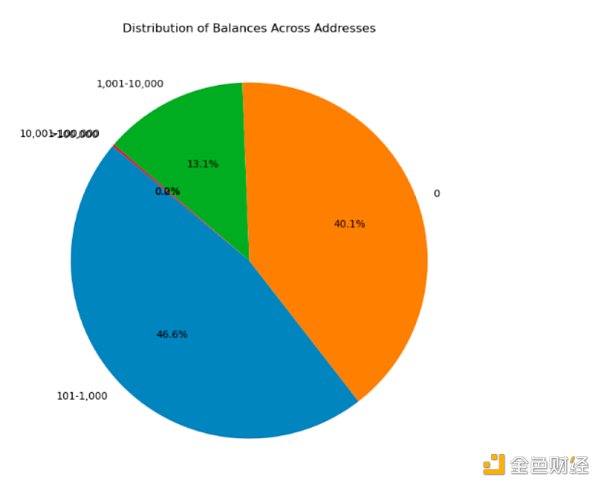

A key detail to note here is that the on-chain voting criterion covers a period after the previous round of airdrop. So the farmers who came in for the earlier rounds would have thought, "Okay, I'm done, time to move on to the next target." This is smart, and it helps the analysis, so check out these retention numbers!


Only 22% of these airdrop recipients had zero token balances! To me, this suggests that this airdrop was far less wasteful than any previous round. This reinforces my point that retention is critical, and there’s more data showing that multiple rounds of airdrops are more effective than people expect.

Fourth round of airdrop

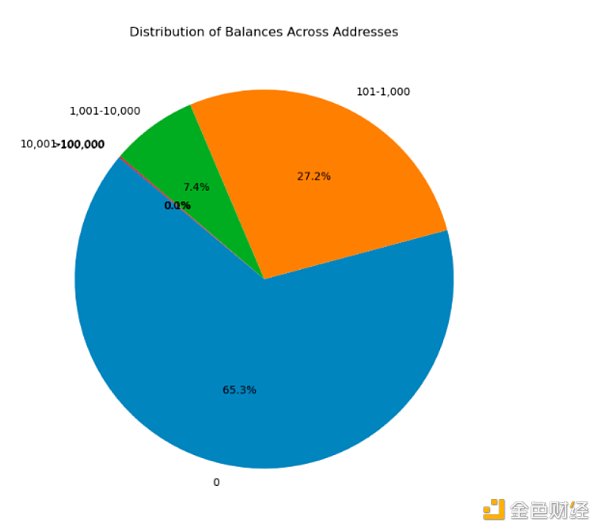

This round of airdrop went to roughly 23,000 addresses and had more interesting criteria. I personally expected retention for this round to be very high, but on reflection I can see why it may have come in lower than expected. The criteria were:

· You created engaging NFTs on the Superchain. This is measured as the total gas on OP chains (OP mainnet, Base, Zora) spent in transactions involving transfers of NFTs created by your address, over the 365 days before the airdrop cutoff (January 10, 2023 to January 10, 2024).

· You created engaging NFTs on Ethereum mainnet. This is measured as the total gas on Ethereum L1 spent in transactions involving transfers of NFTs created by your address, over the 365 days before the airdrop cutoff (January 10, 2023 to January 10, 2024).

You would definitely think that people creating NFT contracts is a good indicator, right? Sadly, this is not the case. The data shows a very different picture.


Although this round of airdrops is not as bad as the second round, compared to the third round of airdrops, we have taken a big step backward in terms of retention rate.

My thought is that if they had applied additional spam or legitimacy filtering to the NFTs, retention would have improved significantly. The criteria were too broad. Additionally, since tokens were airdropped directly to these addresses (rather than having to be claimed), you end up in a situation where the creator of a scam NFT says, "Wow, free money. Time to dump."

Conclusion

In writing this article and digging up the data myself, I managed to prove or disprove some of my own assumptions, which turned out to be very valuable. In particular, the quality of your airdrop is directly related to your filtering criteria. People who try to create a universal "airdrop score" or use advanced machine learning models will fall prone to inaccurate data or a lot of false positives. Machine learning is great, until you try to understand how it arrived at the answer it gave.

The main lessons airdrop teams should take from this are:

· No more one-off airdrops! You are shooting yourself in the foot. You want to deploy incentives the way you would run A/B tests: iterate a lot and use what you learned from past rounds to guide the next one.

· By building criteria on top of previous airdrops, you make each airdrop more efficient. In effect, give more tokens to people who are still holding them in the same wallet. Make it clear to your users that they should stick with one wallet and switch only when absolutely necessary.

· Get better data to ensure smarter, higher-quality segmentation. Bad data means bad results. As we saw above, the less predictable the criteria, the better the retention.

JinseFinance