Article: AI Future Guide | Author: Hao Boyang

How does Zuckerberg, a man with 600,000 H100s who holds the commanding heights of open source in the AI world, view the future of AI?

Perhaps you already have some answers. Since releasing Llama 3.1, the most powerful open-source large model to date, he has given a string of interviews and shared many of his views on the AI industry.

But at today's SIGGRAPH conference, you may have seen the most candid Zuckerberg yet. In a summit conversation with Jensen Huang, he revealed that open source also has a selfish side and cursed the arrogance of closed-platform providers. He also reflected at length on his past lack of fashion sense and on products that were not cool enough.

This may also be the most comprehensive picture of Meta's future that we have seen. In this one-hour conversation, the two covered everything from products to industries, from faith in open source to the next computing platform. In effect, they mapped out the future of the AI industry.

Regarding the future of AI products, Zuckerberg said that AI is completely changing the way social media platforms operate: the future Facebook and Instagram will become a unified AI model that integrates different types of content and systems to give users a richer, more personalized experience. He singled out innovation in recommendation systems, predicting that future content will not only be recommended based on user interests but also created or synthesized in real time by AI tools.

He also firmly believes that intelligent agents are the next form of AI products. In his words, "in the future, just like every business has an email address, a website, and one or more social media accounts, I think in the future, every business will have an AI agent that interacts with customers." The potential of agents is so great, he argued, that even if progress on foundation models stalled today, the industry would still have five years of work ahead to figure out how to make the most effective use of everything built so far.

As for the AI industry, Zuckerberg expressed his firm support for an open ecosystem. He admitted to a selfish motive: if only to ensure that companies such as Meta are not constrained by a closed ecosystem, Meta has to open source its own work. In addition, only an open ecosystem can lead to the formation of technical standards and use the user flywheel to iterate on products more quickly. In such an open source ecosystem, he foresees a future with a large number of specialized AI models for specific tasks and fields, rather than one dominated by a few large general models.

Regarding the next generation of computing platforms, Zuckerberg focused on the prospects of smart glasses and mixed reality devices. Because AI has developed faster than expected, Zuckerberg's metaverse no longer requires virtual reality. Instead, AI plus glasses will do. The partnership with Ray-Ban is a concrete expression of this new thinking.

Throughout the conversation, Huang and Zuckerberg were well matched, teasing and joking with each other. Huang, who calls himself "not good at speaking", and Zuckerberg, often described as a "robot", sat together trading jokes and even chatted about daily life on their own farms. One can't help but feel that people do change.

If you want to know Meta's roadmap, this 10,000-word conversation is worth reading carefully. If you want to see the art of conversation between a customer and its supplier, it is also worth reading.

The following is the transcript of the interview:

Huang: Can you believe it? This is one of the pioneers and promoters of modern computing. I invited him to participate in SIGGRAPH. Mark, sit down. It's great to have you here. Welcome. Thank you for flying here. I heard that you have been flying for about five hours straight.

Zuckerberg: Yes, of course.

Huang: This is SIGGRAPH, you know? 90% of the people here are PhDs. What's really amazing about SIGGRAPH is that it's a combined showcase for computer graphics, image processing, AI, and robotics. Over the years, companies like Disney, Pixar, Adobe, Epic Games, and of course NVIDIA have shown and revealed amazing things here. We do a lot of work here. This year, we brought in 20 papers at the intersection of AI and simulation.

We're using AI to make simulations bigger and faster, for example, differentiable physics. We created simulation environments for synthetic data generation for AI. The two fields are really converging. I'm very proud of the work we're doing here. Meta, you're doing amazing work in AI. One of the things I find interesting is that the press has written about how Meta has been involved in AI over the past few years. FAIR does a lot of work—remember, we all use PyTorch. That originated from Meta.

You guys are doing groundbreaking work in computer vision, in language models, in real-time translation. My first question to you is, what do you think of the progress Meta is making right now in generative AI? And how are you applying that to enhance your operations or introduce new features that you're delivering?

Zuckerberg: Yeah, there's a lot to unpack.

First of all, it's great to be here. Meta has been at SIGGRAPH for eight years in a row. Compared to you guys, we're still the new kid on the block. But I think in 2018 -- (interrupted)

Huang: You're dressed very appropriately (referring to Zuckerberg's black shirt and his own).

Meta AI changes Facebook's dual core:

Recommendation system and creation capabilities

Zuckerberg: (Laughs) This is your place.

(In 2018) we showed some early hand tracking work for our VR and mixed reality headsets. I think we talked about Meta's progress in encoding and decoding digital humans at that time. We want to drive that kind of realistic digital avatar from consumer-grade headsets, and we are getting closer and closer to that goal. In addition, we have completed a lot of work on display systems. The future prototype we are working on is designed to make mixed reality headsets very thin and light. We're pretty advanced in the optical stack and the display system, which is an integrated system. Those are usually the things we show here first.

So it's exciting to be here this year, not only to talk about the metaverse, but about all the AI work too. As you said, we started FAIR, our AI research center, back when the company was still Facebook rather than Meta, and before we started Reality Labs, so we've been working on this for a while. All the stuff around generative AI is an interesting revolution. I think it's ultimately going to change all the different products we make in interesting ways.

Let me start by briefly going through the big product lines we have, like the news feed and the recommendation systems in Instagram and Facebook. We've been on this journey from just connecting with friends (to the complex systems we have today). The ranking mechanism has always been important because even if you just follow your friends, if someone does something very important, like your cousin has a baby or something, you want that news to be at the top. If we buried it in the corner of your news feed, you would definitely be angry.

But now, over the last few years, users' attention has shifted more toward all kinds of public content from the outside world. Recommendation systems become extremely important because instead of just a few hundred or a thousand potential posts from friends, there are millions of pieces of content. That becomes a very interesting recommendation problem. And with generative AI, I think this is going to change again very soon. Today, most of the content you see on Instagram is matched to you from the outside world based on your interests, whether or not you follow these people. But I think in the future, a lot of this content will also be created by these AI tools. Some of it will be new content that creators make using these tools. And some of it, I think, will eventually be content that's created for you on the fly or synthesized from existing content. This is just one example of how our core business has evolved. And it's been evolving for 20 years.

Huang: Well, few people realize that one of the largest computing systems ever conceived in the world is a recommendation system.

Zuckerberg: Yeah, I mean, it's a completely different path. It's not the hot generative AI that people are talking about. But I think the Transformer architecture is also used in recommendation systems. (Generative AI) is a similar situation, (we are now) just building more and more general models.

Huang: It's embedding unstructured data into features.

Zuckerberg: Yeah, one of the important aspects is that it drives quality improvement. In the past, there were different models for each content type. A recent example is that we had a model for ranking and recommending short videos and another model for ranking and recommending longer videos. And then it took some product work to make sure the system could surface all the content online. But if you can create more general recommendation models that cover all areas, the results will only get better and better. I think part of it is because of the economics and liquidity of content. The wider the pool of content you can draw from, the less you run into weird inefficiencies that you would if you were drawing from different pools.

Huang: Yeah, and as the models get bigger and more general, they get better.

Zuckerberg: So I dream that one day you could imagine the entire Facebook or Instagram being like a unified AI model that integrates all these different types of content and systems that actually have different goals in different time frames. Because part of it is just showing you interesting content that you want to see today. But another part of it is helping you build your social network over the long term, recommending people you might know, or accounts you might want to follow. And these multimodal models tend to provide better company.

Huang: You've been building GPU infrastructure to run these large recommendation systems for a long time.

ZUCKERBERG: Actually, when it comes to GPUs, progress on that front has been a little slower. (Laughter)

HUANG: Yeah. You always try to be kind.

ZUCKERBERG: Yeah, you know, very kind.

HUANG: Absolutely, always be kind. You're my guest. You know, backstage before we came on, you mentioned something about admitting your mistakes and stuff, right? So... (Laughter) You didn't have to be so proactive about it, did you?

ZUCKERBERG: (Laughter) I think I've tried that pretty well.

Huang: (Laughs) Yeah. But once you get into the performance mode, you just go for it. That's it.

Now, what's really cool about generative AI is that today when I use WhatsApp, it feels like I'm working with WhatsApp. I love Imagine. I sit here and type, and it generates images as I type. I go back and edit the text, and it generates other images. Like an old Chinese man enjoying a glass of whiskey at dusk, and he's got three dogs around him: a golden retriever, a golden poodle, and a Bernese mountain dog. It generates a pretty nice picture based on that.

ZUCKERBERG: Yeah, we're heading in that direction.

Huang: Now you can actually upload a picture of me in there. (So the old man) That's me.

The future of AI products:

Even if the model stops iterating now, it will take us five years

to explore the full product potential of the intelligent agent

Zuckerberg: I've spent a lot of time with my daughters recently, imagining them becoming mermaids and other characters. It's very interesting. On the one hand, I think generative AI will be a major upgrade to all the workflows and products we have used for a long time. But on the other hand, now it can create a lot of new things.

So Meta AI is not only an AI assistant that can help you with different tasks; it will also be very creative. Like you said, these models are very general, so you don't have to limit them to just this. It can answer any question.

Over time, as we move from Llama 3-like models to Llama 4 and beyond, I think it's going to feel less like a chatbot, where you give it a prompt and it responds, and then you give it another prompt and it responds. And it's back and forth. I think it's going to evolve pretty quickly to where you give it an intent and it can actually process it over multiple time frames. I mean, it might anticipate your intent based on previous conversations. But I think it's going to eventually get to the point where AI can kick off computational tasks that take weeks or months and come back to you when something happens in the world. I think that's going to be very powerful.

Huang: So, I mean I'm not so sure. As you know, AI today is pretty much turn-based. You say a sentence, it responds to you a sentence. But obviously, when we think about, when we're given a task or faced with a problem, you know, we'll consider multiple options or we might come up with a tree of options, a decision tree, and we'll simulate in our minds going down that tree, you know, what the different outcomes of each decision we might make are. So we're planning for that. So future AI will do similar things.

I was really excited when you talked about your vision for Creator AI, and frankly, I think that's a brilliant idea. Tell us about Creator AI and the AI studio that will enable you to realize that vision.

ZUCKERBERG: Yeah. Actually, we've talked about this a little bit before, but today we're launching it more broadly. Our vision is that there shouldn't be just one AI model, right? That's where we differ from some other companies in the industry, which seem to be building one centralized agent. We do have the Meta AI assistant for you to use, but our vision is more about empowering every user of our products to build their own agents.

Our ultimate goal is to bring together all of your content, whether it's the millions of creators on the platform or the hundreds of millions of small businesses, and quickly build a business agent that can interact with customers, do sales, customer support, and so on. So what we're rolling out over time is what we call AI Studio, which is designed to help you get this agent up and running quickly. This is basically a set of tools that will eventually enable every creator to build a version of their own AI as some kind of agent or assistant that their community can interact with.

There's a fundamental problem here, which is there's just not enough time in the day, right? If you're a creator, you want to interact with your community more, but you can't because of time. And similarly, your community wants to interact with you, but that's hard too. There's a limited amount of time to do that. So the next best thing is to let people create an agent that you train, based on your material, to represent you in the way that you want. I think it's a very creative endeavor, almost like a piece of art or content that you post. Obviously, it's not the same as interacting with the creator personally, but I think it's going to be an interesting way for creators who post content on social platforms to engage with their communities through agents.

In the same way, I think people will create their own agents for all sorts of different uses. Some will be custom utilities that they want to fine-tune and train. Some will be entertainment. Some of the things that people create are just fun, you know, it might be a little silly or have a humorous approach to things that we might not have anticipated. Users might not build Meta AI as an assistant, but I think people are quite interested in seeing and interacting with it.

And then one of the interesting use cases that we're seeing is people using these agents for support. This one was a little surprising to me: one of the most popular uses of Meta AI is people basically using it to role-play complex social situations that they're about to face. Whether it's a professional situation, like, "I want to ask my manager how I can get a promotion or a raise," or "I'm having an argument with my friend," or "I'm having difficulties with my girlfriend," it's basically a completely judgment-free space where you can simulate that, see how the conversation might go, and get feedback.

A lot of people don't just want to interact with the same type of agent, whether it's Meta AI or ChatGPT or whatever tools everyone is using; they want to create their own thing.

So that's the direction we're going in with AI Studio in general. But these are all part of our larger view that there shouldn't be just one big AI for people to interact with. We just think the world would be a better and more interesting place if there were multiple different kinds of AI.

Huang: I think that's really cool, if you're an artist and you have a style, you can take your style, your whole portfolio, and you can fine-tune one of your models, and now it's an AI model that you can come and use and give prompts to. You can ask me, for example, to create something similar to the style of art that I have, you can even give me a piece of art as a drawing, a sketch as inspiration, and I can generate something for you. And then other users will come to my AI (to get these capabilities). Every restaurant, every website may have these AIs in the future.

Zuckerberg: Yeah, I kind of think in the future, just like every business has an email address, a website, and one or more social media accounts, I think in the future, every business will have an AI agent that interacts with its customers.

Huang: Right.

ZUCKERBERG: These are things that I think have been historically very difficult to do. If you think about any company, customer support and sales are two completely separate organizations. And as a CEO, you don't want them to operate that way. It's just that, well, the skills required for them are different. When you're a CEO, you have to deal with all of these things.

I mean, generally speaking, when you build organizations, they're separate. Because they're each optimized for different things. But I think the ideal state is that it should be a unified entity, right? As a consumer, you don't want to go one route when you buy something and another route when you have a problem with something you've purchased. You just want to have one place to go that can answer your questions and be able to interact with businesses in different ways. And I think that applies to creators as well.

HUANG: I think that this interaction on the part of individual consumers, especially their complaints, is going to make your company better.

Zuckerberg: Yeah, that's exactly right.

Huang: All of these AI interactions with customers will allow the AI to capture that institutional knowledge, and that can all feed into the analytics system, which will improve subsequent AI.

Zuckerberg: Yeah. This version you're talking about I think is a more integrated version, and we're still in a very early alpha stage. But AI Studio allows people to create their own UGC agents and other content, and start to enter this flywheel of allowing creators to create. I'm very excited about that.

Huang: So, can I use AI Studio to fine-tune my images, my collection of images?

Zuckerberg: Yes, we're moving in that direction.

Huang: Okay. And then, can I just load everything I've written into it and have it use it as my RAG?

Zuckerberg: Yeah, basically.

Huang: Yeah, yeah. And every time I come back, it reloads the memory. So it remembers where it left off, and we continue the conversation like nothing happened.

Zuckerberg: Yeah. And I mean, like any product that's going to improve over time, the tools that are used to train it are going to get better. It's not just about what you want it to say. I think oftentimes creators and businesses also have topics that they want to avoid.

Huang: Right. So there's constant improvement on that.

ZUCKERBERG: Yeah. You know, I think the ideal version is more than just text, right? And that kind of intersects with some of the work that we're doing over time on encoding and decoding avatars. You definitely want to be able to have a video chat-like interaction with that agent, and I think we'll get there over time. I don't think that those things are too far away, and this flywheel is spinning very quickly. So it's exciting. There's a lot of new stuff to build.

I think even if progress on the foundational models is stagnant right now, although I don't think it will be stagnant, I think we'll have five years for the industry to basically figure out how to most effectively use all the stuff that's been built to date. But actually, I think progress on the foundational models and the foundational research is accelerating. So it's a pretty wild time.

HUANG: Your vision is all of this, and you kind of enabled all of this. So thank you. You know, we're CEOs, we're fragile flowers. We need a lot of support. (Laughter)

ZUCKERBERG: Yeah. We're pretty resilient now. I think we're the two longest-tenured founders in the industry. Right?

HUANG: I mean, it's true. It's true.

ZUCKERBERG: And your hair is graying. Mine is just getting longer. (Laughter)

HUANG: My hair is graying. Yours is curly. What's going on?

ZUCKERBERG: It's always been curly. So I've always kept it short.

HUANG: Yeah. I just, if I had known it would take this long ...

ZUCKERBERG: You probably wouldn't have started in the first place. (Laughter)

HUANG: No, I probably would have dropped out of college like you did. Started earlier. (Laughter)

ZUCKERBERG: Well, that's a good difference in our personalities. (Laughter)

HUANG: You've got a 12-year head start. That's pretty good.

ZUCKERBERG: You know, you've done pretty well.

HUANG: I'll keep going. (Laughter)

So, what I like is your vision of everyone having an AI, every business having an AI. In our company, I want every engineer and every software developer to have an AI.

ZUCKERBERG: Yeah. We have a lot of AIs.

HUANG: One thing I like about your vision is that you also believe that every person and every company should be able to create their own AI. So you actually open sourced it. When you open sourced Llama, I thought that was great. Llama 2.1, by the way, I think Llama 2 was probably the biggest thing in AI last year.

ZUCKERBERG: I thought (the biggest thing in AI last year) was H100. (Laughter)

Huang: It's a chicken or the egg question. Yeah. So which one came first?

ZUCKERBERG: The H100. Well, Llama 2 wasn't actually trained on the H100. (Laughter)

Huang: Yeah, it's the A100. (Laughter)

The Future of Open Source:

Unrestricted Builds, Restoring the Golden Age of Open

Huang: Yes, thank you. But the reason I call it the biggest event is that when Llama 2.1 was released, it activated every company, every enterprise, and every industry. All of a sudden, every medical company was building AI; every company, big or small, every startup, was building AI. It enabled every researcher to get back into AI because they had a starting point to do something. And now that 3.1 is out, you know, we're working together to deploy Llama 3.1 and bring it to enterprises around the world. It's just hard to put into words how excited we are. I think it's going to drive all kinds of applications.

But talk about your philosophy on open source. Where did that come from? You open sourced PyTorch, and now it's become a framework for AI development. And now you've open sourced Llama 3.1. There's a whole ecosystem built around it. So, I think that's great. But where did all this come from?

ZUCKERBERG: Yeah, there's a lot of history in this. I mean, we've done a lot of open source work over time. I think part of it, frankly, is that we started building distributed computing infrastructure and data centers and things like that after some other tech companies. And because of that, when we built these things, it wasn't a competitive advantage. So we thought, well, we might as well just open source it. And then we'll benefit from the ecosystem around it. So we have a lot of these projects.

I think probably the biggest example is the Open Compute Project, where we took our server designs, our network designs, and ultimately our data center designs and published all of that. And by making it sort of an industry standard, the entire supply chain basically organized around it, which had the benefit of saving money for everybody. So by making those designs public and open, we basically saved billions of dollars by doing that.

Huang: Well, the Open Compute Project also made it possible for NVIDIA HGX, where something that we designed for one data center suddenly worked in other data centers.

Zuckerberg: It did. It was awesome. So we had this really great experience. And then we also applied it to a bunch of our infrastructure tools, like React, PyTorch, and so on. So by the time Llama came along, we had a positive inclination to do this.

Specifically for AI models, I guess there are a couple of ways I look at this. First of all, one of the hardest things about building things in the company over the last 20 years was having to deal with the fact that we were shipping our apps through competitors' mobile platforms. On the one hand, mobile platforms have been a huge boost to the industry, which is awesome. On the other hand, having to ship your product through a competitor is challenging, right?

Also, when I was growing up, the first version of Facebook was on the web, which was open. And then the move to mobile, the upside was that now everyone had a computer in their pocket. That was awesome. But the downside was that we were much more limited in what we could do.

So when you look at these generations of computing, there's a huge recency bias where people are only looking at the mobile device era now and thinking that closed ecosystems [will lead to better outcomes] because Apple basically won that and set the rules. Yeah, I know technically there are more Android phones out there, but Apple basically controls the market and (earns) all the profits, and Android is basically following Apple's lead in terms of development. So I think Apple is the clear winner in this generation.

But it wasn't always that way.

If you look back at the last generation, Apple was doing their closed model (MacOS). But Microsoft, which is obviously not a completely open company, was a much more open ecosystem than Apple with Windows running on a variety of different OEMs and different software and hardware. And Windows was the leading ecosystem (then). Basically, in that generation of PCs, the open ecosystem won.

I'm kind of hopeful that in the next wave of computing, we'll go back to a space where the open ecosystem wins and becomes the dominant one again. There's always going to be a closed and an open choice. I know both have their reasons for being. Both have their benefits. I'm not an extremist in this regard. I mean, we have closed source products. Not everything we release is open source. But I think there's a lot of value in openness for the computing platforms that the industry relies on, especially software. That really shapes my philosophy on this.

Whether it's the AI project with Llama or our work in AR and VR, where we're basically building an open operating system for mixed reality, similar to Android or Windows, designed to work with a lot of different hardware companies to make all kinds of devices, we're basically just looking to get the ecosystem back to that level of openness. I'm pretty optimistic that in the next generation, open systems will win out.

For us, I just want to make sure we can plug in [AI]. It's a little selfish to say that, but after building this company for a while, one of my goals for the next 10 to 15 years is to make sure we can build the foundational technology ourselves, because that's going to be the foundation for the social experiences we build. A lot of the things I tried to build before were limited: at some level, the platform providers tell you, no, you can't really build that. I just say, no, screw it. For the next generation, we're going to build it all the way.

Huang: Okay, our livestream is going to be cut off (because Zuckerberg said the F-word).

Zuckerberg: Yeah, sorry. Sorry. (Laughter)

Huang: I was like, beep. (Laughter)

Zuckerberg: Yeah. You know, we did pretty well for the first 20 minutes, but as soon as I talked about closed platforms, I got mad. (Laughter)

Huang: I think it's a great world to have people working on building the best possible AI, however they build it, and offering it as a service to the world. And then if you want to build AI yourself, you can still build AI yourself, which is great. But in terms of the ability to use AI, you know, there's a lot of things that (I prefer to use out of the box). I'd rather not build this jacket myself. I'd rather have someone build this jacket for me. You know what I mean?

ZUCKERBERG: Yeah, yeah.

Huang: Leather being open source is not a useful concept to me. But I think you can have great services, incredible services, and services that are open.

ZUCKERBERG: Yeah.

Huang: So we've basically covered the whole spectrum. But what's really cool about what you've done with Llama 3.1 is you have a 405B version, you have a 70B version, you have an 8B version, and you can use it to generate synthetic data, to teach a smaller model with a larger model. The larger model is going to be more general and less brittle, but you can still build a smaller model that fits whatever operational domain or operating cost you want. You created a guard, I think it's called Llama Guard, for guardrails, which is great. So now the way you build models, it's built in a way that's transparent. You have a world-class security team, a world-class ethics team, and you can build it in such a way that everybody knows it's built correctly. I really like that part.

ZUCKERBERG: Yeah, let me finish what I was going to say before I got sidetracked. You know, I do think we have this alignment: we build [open source AI] because we want it to exist and we don't want to be locked into some closed model. And this is not just about building a piece of software; AI needs an ecosystem to support it. So it's almost like if we don't open source it, it might not even work very well.

We're not doing this because we're altruistic people, although I think it's good for the ecosystem. We're doing this because we think that by building a strong ecosystem, we can make what we're building the best it can be. Just look at how many people are involved in building the PyTorch ecosystem.

HUANG: [It takes] a lot of engineering work. I mean, at NVIDIA alone, we probably have hundreds of people dedicated to making PyTorch better, more scalable, you know, more performant, and so on.

ZUCKERBERG: Yeah, and when something becomes an industry standard, other people innovate around it, right. So all the hardware and systems end up being optimized to run that technology really well, which benefits everybody, and it also works really well with the systems that we're building. And that, I think, is just one example of how this approach ends up being really effective.

ZUCKERBERG: So, I think open source is going to be a good business strategy. I think people probably don't quite realize how much we love it.

HUANG: We built an ecosystem around it. We created this thing.

ZUCKERBERG: Yeah, I see that. You guys have been doing a great job. Every time we launch a new product, you're the first team to launch it and optimize it to work. I appreciate that.

Huang: What can I say? We have some really great engineers.

Zuckerberg: (Laughs) And you're always very quick to seize these opportunities.

Huang: I may be the elder, but I'm very agile. That's what a CEO has to do.

I realized something important. I think Llama is really important. We built a concept called AI Foundry around it to help everyone build AI. A lot of people have the desire to build AI. It's really important for them to own their AI, because once they put it into their data flywheel, that's how their company's institutional knowledge gets encoded and embedded in an AI. They can't afford to have that AI flywheel, that data flywheel, spinning somewhere else because they are buying it as a service. Open source allows them to own it. But they don't really know how to turn all of this into an AI, so we created this thing called AI Foundry. We provide the tools, we provide the expertise and the Llama technology, and we have the ability to help them turn all of this into an AI service. And when we're done, they take it over and own it. The output is what we call a NIM. This NIM, this NVIDIA inference microservice, they just download, take away, and run anywhere they like, including on-premises. We have a whole ecosystem of partners, from OEMs that can run NIMs to GSIs like Accenture, whom we train and work with to create NIMs and pipelines based on Llama. And now we're helping enterprises around the world do that. I mean, it's really a very exciting thing. All of this was actually triggered by the open-sourcing of Llama.

The future of the AI industry:

No single model will dominate the market;

models of every size, from small to large, have their use cases

Zuckerberg: Yes, I think the ability to help people distill their own models from large models will become a really valuable new thing. As we discussed in terms of products, I don't think there will be one core AI agent that everyone talks to. By the same token, I don't think there will necessarily be one model that everyone uses.

Huang: We have a chip AI, a chip design AI. We have a software coding AI. Our software coding AI understands USD because we use USD to write code for Omniverse. We have a software AI that understands Verilog, our Verilog. We have software AIs that understand our bug database and know how to help us triage bugs and send them to the right engineers.

Each of these AIs is fine-tuned on Llama. We fine-tune them and put guardrails in place. You know, if we have an AI for chip design, we don't want it answering questions about politics, religion, or anything like that. So we put guardrails on it. So I think every company will have an AI that's built specifically for every function they have. They need help to do that.

Zuckerberg: Yeah, and I think one of the big questions going forward is to what extent people will use larger, more complex models, rather than just training their own models for their needs. At least I'm sure there will be a wide variety of different models coming out in the future.

Huang: We use the largest ones. And the reason for that is that our engineers' time is extremely valuable. So we're optimizing the 405B version of Llama 3.1 for performance. And as you know, no matter how big the GPU is, the 405B model won't fit on a single one. That's why NVLink performance is so critical. We use it to connect every GPU to every other GPU through a non-blocking NVLink switch.

For example, in an HGX, there are two of these switches. We enable all of these GPUs to work together, and the performance of running the 405B is extremely high. The reason we do this is that our engineers' time is extremely valuable. You know, we want to use the best possible model. Whether it saves a few cents in cost, who cares? We want to make sure we show them the best results.

Zuckerberg: Yeah, I mean, I think the 405B costs about half as much as the GPT-4 model. So in that sense, it's pretty good. But I think people will also want or need smaller models on their devices, and they're going to distill it down. So AI ends up running as a whole range of different services.

Huang: Let's assume that the AI we're using for chip design costs only $10 an hour. You know, if you use it constantly and share that AI among a lot of engineers, each engineer can have an AI working alongside them, and it's not expensive. We pay engineers a lot.

So for us, a few dollars an hour can dramatically increase a person's capabilities. If you don't have an AI yet, go and get one. That's what we're saying.

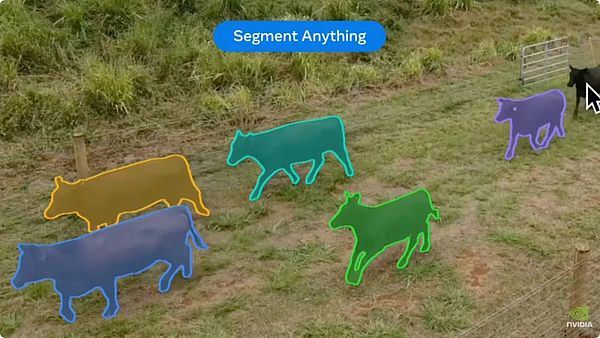

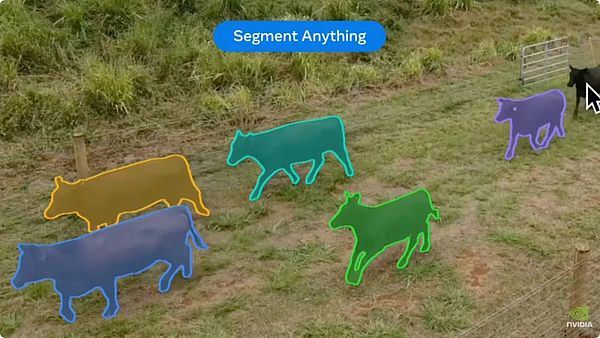

So let's talk about the next wave. One of the things that I really like about what you're doing is computer vision. One of the models that we use a lot internally is Segment Anything. You know, we're training AI models on video right now so that we can build better world models. Our application scenarios are robotics and industrial digitization: bringing these AI models into Omniverse so that we can better model and represent the physical world, and letting robots operate better in these Omniverse worlds. So your application, the Ray-Ban Meta glasses, and your vision of bringing AI into the virtual world are really interesting. Tell us about it.

Zuckerberg: Okay, well, there's a lot to talk about here. That Segment Anything model that you were talking about, we're actually demonstrating the next version of it here at SIGGRAPH, Segment Anything 2. It works well now, and it's faster. It can also process video now. I think these are actually cattle from my ranch on Kauai. By the way, they're called Mark's calves, delicious calves.

Huang: Delicious Mark's calves. So next time Mark comes to my house, we have to make Philly cheesesteaks together. You just bring the calf.

Zuckerberg: And then you make the cheese, and I'll be the sous chef. This calf is really delicious.

Huang: That's the sous chef's evaluation.

Zuckerberg: Okay, listen. And then at the end of the night, you're like, "Hey, you've had enough, right?" And I'm like, "I don't know, I could have another one." And you're like, "Really?"

Huang: I'm definitely like, "Yeah, we're going to have some more. We're going to have some more. Are you full?" And usually your guests are like, "Oh, yeah, I'm fine."

Zuckerberg: "Make me another cheesesteak, Huang."

Huang: So, just to give you an idea of how OCD he is, I turn around and I'm preparing the cheesesteak. And I say, "Mark, cut the tomato," and I hand Mark a knife.

Zuckerberg: Yeah, I'm a precise cutter.

Huang: And then he cuts the tomatoes. Every single slice is cut to the millimeter. But what's really interesting is, I thought all the tomatoes were going to be sliced and stacked up like a deck of cards. And when I turned around, he said he needed another plate. The reason was that none of the slices he cut could touch each other. Once he separated a slice from the others, they shouldn't touch again.

Zuckerberg: Yeah, look, man, if you wanted them to touch, you should have told me ahead of time. You need to... I'm just a sous chef, okay?

Huang: That's why he needs an unbiased AI.

Zuckerberg: Yeah. (Laughter)

Huang: That's really cool. Okay, so it's identifying the cows... it's tracking the cows.

Zuckerberg: There are a lot of fun special effects you can do with this. And because it's going to be widely available, there are going to be more serious applications in industry as well. I mean, scientists use this kind of thing to study coral reefs and natural habitats and how landscapes evolve and so on. And it can do this in video: you tell it what you want to track, and you can take B-roll footage and interact with it. That's really cool research.

Huang: Let me give you an example of a scenario where we're using it. Let's say you have a warehouse full of cameras. The warehouse AI is monitoring everything that's going on. Let's say a stack of boxes collapses or someone spills water on the floor or whatever is about to happen. The AI recognizes that, generates a text message, sends it to the right people, and help is on the way. That's one way to use it. Instead of recording every nanosecond of video and then going back to retrieve the moment when an incident happened, it only records what's important, because it knows what it's looking at. So having a video understanding model, a video language model, is really powerful for all these interesting applications. So what else are you working on next? Ray, talk to me about...

The future of computing platforms:

Never mind XR: AI plus stylish glasses alone could sell a billion pairs

Zuckerberg: Yeah, and all the smart glasses. I think when we think about the next generation of computing platforms, we break it down into mixed reality (MR) headsets and smart glasses. Smart glasses, I think, are the ones people are more likely to accept and wear, because almost everyone who wears glasses now will eventually upgrade to smart glasses. That's over a billion people worldwide. So it's going to be a pretty big market.

VR/MR headsets, I think some people find them interesting for gaming or other uses, and some people don't yet. My view is that they're both here to stay.

I think smart glasses will be similar to phones: the next version of an always-on computing platform. Mixed reality headsets are more like your workstation or your gaming console, for when you sit down for a more immersive experience and need more computing resources. Glasses are just a very small form factor, so there will be a lot of constraints on computing power, just as you can't do the same level of computing on a phone as on a computer.

Huang: And it all happens to be arriving just as these generative AI breakthroughs are taking place.

Zuckerberg: Yeah, so basically, for smart glasses, we've been attacking this problem from two different directions. On one hand, we've been building what we think are the ideal holographic augmented reality (AR) glasses, and we're doing all the custom silicon work, all the custom display stack work, just everything that's needed to make that happen. And they're glasses, right? Not head-mounted devices. Not like VR/MR headsets. They look like regular glasses. But they're still quite a distance away from the glasses you wear today. I mean, your glasses are very thin.

But even if we make Ray-Ban glasses, we can't fully integrate all the technology required to make holographic AR into them. We're getting closer, and I think we'll get closer in the next few years. It's still going to be quite expensive, but we'll still get it out as a product.

The other angle we're thinking about is starting with smart glasses that look good. We're working with EssilorLuxottica, the world's leading eyewear manufacturer. They basically have all the big brands that you know, like Ray-Ban, Oakley, Oliver Peoples, and a few others. It's all under EssilorLuxottica.

Huang: NVIDIA glasses. (Laughs)

Zuckerberg: I think, you know, they probably like that. I mean, who wouldn't like that? Who wouldn't want glasses like that at this point?

But we've been working with them on the Ray-Ban line, and it's on its second generation now.

Our goal is, okay, let's limit the form factors to the ones that look really great, and then pack as much technology as we can into that frame, even though we know it's not technically everything we'd like it to be. At the end of the day, they're great-looking glasses. So far, we have camera sensors, so you can take photos and videos, you can live stream to Instagram, and you can make video calls on WhatsApp and stream what you're seeing to the other person. It has a microphone and speakers, and the speakers are actually pretty great. It's an open-ear design, so a lot of people find it more comfortable than earbuds. You can listen to music, and it's a fairly private experience, which is pretty good. People love that, and you can take calls on it.

But we found that this combination of sensors is exactly what you need to talk to AI. So that was kind of an unexpected discovery. If you had asked me five years ago whether we would get holographic AR or AI first, I would have said probably holographic AR. Right, I mean, it looked like it would follow from all the advances in VR and mixed reality technology and from building a new display technology stack, and we're making progress in that direction. Then there was the breakthrough in large language models (LLMs), and the result is that we now have high-quality AI, improving very rapidly, before holographic augmented reality (AR) has arrived.

So this is a shift that I didn't expect. Fortunately, we're in a good position because we've been working on all of these different products. I think ultimately you're going to have a range of glasses at different price points and different levels of technology. So based on what we're seeing now with the Ray-Ban Meta, display-less AI glasses priced around $300 are going to be a very hot product that could end up being used by tens or even hundreds of millions of people. You're going to be having a conversation with a highly interactive AI.

Huang: It's going to have the visual language understanding technology that you just showed me, and real-time translation capabilities. You can talk to me in one language and I hear another.

Zuckerberg: Of course, it would be nice to have a display, but that would add weight to the glasses and make them more expensive. So I think a lot of people will want that holographic display, but there are also a lot of people who will want something that eventually looks like ultra-thin glasses.

Huang: For industrial applications and certain workplaces, we do need technology like (virtual reality).

Zuckerberg: The same is true for consumer products. I thought about this during the pandemic, when everyone was working remotely for a period of time. You're on Zoom all day, and it's okay. It's great that we have these tools now, but we're not far away from being able to hold virtual meetings where I'm not actually there and you just see my hologram, yet it feels like we're both physically present. We can work together and collaborate together. And I think this is particularly important when working with AI.

Huang: I can live with a device that I don't have to wear all the time.

Zuckerberg: Oh, yeah, but I think we'll get to that point where it's actually there. I mean, glasses come with thin frames and thick frames and all kinds of styles. I think we're still a while away from fitting holographic displays into that form factor, but a stylish pair of glasses with slightly thicker frames is not far off.

Huang: These (small smart) sunglasses are now fitting more and more closely to the face. I can see that.

Zuckerberg: Yeah, you know what, it's a very useful style. Believe it or not, I'm trying to be a hipster so I can influence the style before the glasses are available. (Laughter)

Huang: I can see you're trying. How's your trendsetter journey going?

Zuckerberg: It's early days. But I think if a big part of the future of the business is building fashionable glasses that people wear, then I should probably start paying more attention to that.

Huang: Right. Absolutely.

Zuckerberg: We need to retire the me who wears the same thing every day. (Laughter) But that's what's so special about glasses. I think it's different from a watch or a phone: people really don't want them all to look the same.

So I think, you know, this is a platform that is going to tend toward the theme that we were talking about before, which is an open ecosystem, because there's going to be a huge demand for variety and style. Not everyone is going to want the one pair of glasses that, you know, someone else has designed, and I don't think that would work here.

Huang: Yeah, I think you're right.

Closing: Software 3.0 is here

Huang: Mark, we're in an era where the entire computing stack is being reinvented, and it's incredible. The way we think about software, you know, Andrej called it software 1.0 and software 2.0, and now we're basically in the era of software 3.0. With the shift from general-purpose computing to generative neural network processing, the computing power and applications that we can develop were unimaginable in the past. I can't remember another technology that has impacted consumers, businesses, industries, and the scientific community at such a rapid pace as generative AI, and across every scientific field from climate technology to biotechnology to physical science. Generative AI is at the heart of this fundamental shift in every area that we encounter.

And beyond the profound impact on society that you mentioned, one of the things I'm really excited about is this: someone asked me a while ago if there would be a "Jensen AI," which is exactly what you call a creative AI, where we can build our own AI, load it with all the content that I've ever written, and fine-tune it on the way that I answer questions. Hopefully over time it can become a really great assistant and companion for people who just want to ask questions or exchange ideas. This version of Jensen AI won't judge you, so you don't have to worry about being judged, and you can interact with it at any time. I think these are all really amazing things. We often write a lot of stuff, and it's incredible to just give it three or four topics and have it write up the basic points I want in my voice, and then use that as a starting point. There's just so much that we can do now, and it's really great to work with you.

I know it's not easy to build a company, and you've moved your company from desktop to mobile to VR to AI, across all these devices, which is really, really unusual. We've pivoted multiple times ourselves, and I know how hard it is. We've both had a lot of setbacks over the years, but that's what it takes to be a pioneer and an innovator. It's been really cool to watch you.

Zuckerberg: And again, I'm not sure it's a pivot if you keep doing the same thing but also add new things, which means there are more chapters to come. I think it's been fun to watch your journey as well.

I mean, you went through a period when everyone thought everything was going to move to these devices and computing was going to get really cheap, but you kept persevering, saying that actually people were going to need these large systems that could do parallel processing.

Huang: Yeah, we made smaller and smaller devices for a while, but then we made computers fashionable for a while, and then they became unfashionable, super unfashionable, but now they're cool again.

We make GPUs, and now you deploy GPUs. Zuck has thousands of H100s in his data centers. I think you're getting close to 600,000 GPUs.

Zuckerberg: We're a premium customer. That's how you get a Jensen Q&A session at a conference. (Laughter)

When they said, "You know, we have an event at SIGGRAPH in a few weeks," I was like, "Yeah, I don't think I'm busy that day, not in Denver, sounds interesting, I don't have anything planned that afternoon."

Huang: (So) you just showed up. But the point is, these systems that you build are huge; they're very difficult to coordinate and very difficult to run. You know, you got into GPUs later than most people, but you're operating at a larger scale than anyone, and it's incredible to watch what you've done. Congratulations on everything you've done. You're really a trendsetter now.

JinseFinance
