Introduction: Development of AI+Web3

In the past few years, the rapid development of artificial intelligence (AI) and Web3 technology has attracted widespread attention worldwide. As a technology that simulates and imitates human intelligence, AI has made major breakthroughs in face recognition, natural language processing, machine learning and other fields. The rapid development of AI technology has brought tremendous changes and innovations to all walks of life.

The market size of the AI industry reached 200 billion US dollars in 2023. Industry giants and outstanding players such as OpenAI, Character.AI, and Midjourney have sprung up like mushrooms after rain, leading the AI boom.

At the same time, Web3, as an emerging network model, is gradually changing our perception and use of the Internet. Based on decentralized blockchain technology, Web3 realizes the sharing and controllability of data, user autonomy and the establishment of a trust mechanism through functions such as smart contracts, distributed storage and decentralized identity authentication. The core concept of Web3 is to liberate data from centralized authorities and give users control over data and the right to share the value of data.

The current market value of the Web3 industry has reached 25 trillion. Whether it is Bitcoin, Ethereum, Solana or players such as Uniswap and Stepn at the application layer, new narratives and scenarios are emerging in an endless stream, attracting more and more people to join the Web3 industry.

It is easy to find that the combination of AI and Web3 is an area of great concern to builders and VCs in the East and the West. How to integrate the two well is a question worth exploring.

This article will focus on the current development status of AI+Web3 and explore the potential value and impact of this integration. We will first introduce the basic concepts and characteristics of AI and Web3, and then explore the relationship between them. Subsequently, we will analyze the current status of AI+Web3 projects and discuss in depth the limitations and challenges they face. Through such research, we hope to provide valuable reference and insights for investors and practitioners in related industries.

How AI and Web3 interact

The development of AI and Web3 is like the two sides of a scale. AI has brought about an increase in productivity, while Web3 has brought about a change in production relations. So what kind of sparks can AI and Web3 collide with? Next, we will first analyze the difficulties and room for improvement faced by the AI and Web3 industries, and then explore how each other can help solve these difficulties.

The difficulties and potential room for improvement faced by the AI industry

The difficulties and potential room for improvement faced by the Web3 industry

2.1 The difficulties faced by the AI industry

To explore the difficulties faced by the AI industry, let's first look at the essence of the AI industry. The core of the AI industry is inseparable from three elements: computing power, algorithms and data.

First is computing power: computing power refers to the ability to perform large-scale calculations and processing. AI tasks usually require processing large amounts of data and performing complex calculations, such as training deep neural network models. High-intensity computing power can accelerate the model training and reasoning process, and improve the performance and efficiency of AI systems. In recent years, with the development of hardware technology, such as graphics processing units (GPUs) and dedicated AI chips (such as TPUs), the improvement of computing power has played an important role in promoting the development of the AI industry. Nvidia, whose stock has skyrocketed in recent years, has occupied a large market share as a GPU provider and earned high profits.

What is an algorithm: Algorithms are the core components of AI systems. They are mathematical and statistical methods used to solve problems and achieve tasks. AI algorithms can be divided into traditional machine learning algorithms and deep learning algorithms, among which deep learning algorithms have made significant breakthroughs in recent years. The selection and design of algorithms are crucial to the performance and effectiveness of AI systems. Continuously improved and innovative algorithms can improve the accuracy, robustness and generalization ability of AI systems. Different algorithms will have different effects, so the improvement of algorithms is also crucial to the effectiveness of completing tasks.

Why data is important: The core task of AI systems is to extract patterns and regularities in data through learning and training.

Data is the basis for training and optimizing models. Through large-scale data samples, AI systems can learn more accurate and intelligent models. Rich data sets can provide more comprehensive and diverse information, allowing models to better generalize to unseen data, helping AI systems better understand and solve real-world problems.

After understanding the three core elements of current AI, let's take a look at the difficulties and challenges that AI encounters in these three aspects. First, in terms of computing power, AI tasks usually require a lot of computing resources for model training and reasoning, especially for deep learning models. However, obtaining and managing large-scale computing power is an expensive and complex challenge. The cost, energy consumption and maintenance of high-performance computing equipment are all problems. Especially for start-ups and individual developers, it may be difficult to obtain sufficient computing power.

In terms of algorithms, although deep learning algorithms have achieved great success in many fields, there are still some difficulties and challenges. For example, training deep neural networks requires a lot of data and computing resources, and for some tasks, the interpretability and explainability of the model may be insufficient. In addition, the robustness and generalization ability of the algorithm are also an important issue, and the performance of the model on unseen data may be unstable. Among the many algorithms, how to find the best algorithm to provide the best service is a process that requires continuous exploration.

In terms of data, data is the driving force of AI, but obtaining high-quality and diverse data is still a challenge. Data in some areas may be difficult to obtain, such as sensitive health data in the medical field. In addition, the quality, accuracy and labeling of data are also issues. Incomplete or biased data may lead to incorrect behavior or deviation of the model. At the same time, protecting the privacy and security of data is also an important consideration.

In addition, there are issues such as explainability and transparency. The black box nature of AI models is a public concern. For some applications, such as finance, medical care and justice, the decision-making process of the model needs to be explainable and traceable, while existing deep learning models often lack transparency. Explaining the decision-making process of the model and providing trustworthy explanations remain a challenge.

In addition, the business model of many AI project startups is not very clear, which also confuses many AI entrepreneurs.

2.2 Difficulties faced by the Web3 industry

In the Web3 industry, there are many different difficulties that need to be solved. Whether it is data analysis of Web3, poor user experience of Web3 products, or problems with smart contract code vulnerabilities and hacker attacks, there is a lot of room for improvement. As a tool to improve productivity, AI also has a lot of potential in these areas.

First is the improvement of data analysis and prediction capabilities: The application of AI technology in data analysis and prediction has brought a huge impact on the Web3 industry. Through the intelligent analysis and mining of AI algorithms, the Web3 platform can extract valuable information from massive amounts of data and make more accurate predictions and decisions. This is of great significance for risk assessment, market forecasting and asset management in the field of decentralized finance (DeFi).

In addition, improvements in user experience and personalized services can also be achieved: The application of AI technology enables the Web3 platform to provide better user experience and personalized services. By analyzing and modeling user data, the Web3 platform can provide users with personalized recommendations, customized services, and intelligent interactive experiences. This helps to improve user engagement and satisfaction and promote the development of the Web3 ecosystem. For example, many Web3 protocols access AI tools such as ChatGPT to better serve users.

In terms of security and privacy protection, the application of AI also has a far-reaching impact on the Web3 industry. AI technology can be used to detect and defend against network attacks, identify abnormal behavior, and provide stronger security protection. At the same time, AI can also be applied to data privacy protection, and protect users' personal information on the Web3 platform through technologies such as data encryption and privacy computing. In terms of smart contract auditing, since there may be loopholes and security risks in the writing and auditing of smart contracts, AI technology can be used to automate contract auditing and vulnerability detection to improve the security and reliability of contracts.

It can be seen that AI can participate and provide assistance in many aspects of the difficulties and potential improvement space faced by the Web3 industry.

Analysis of the Current Status of AI+Web3 Projects

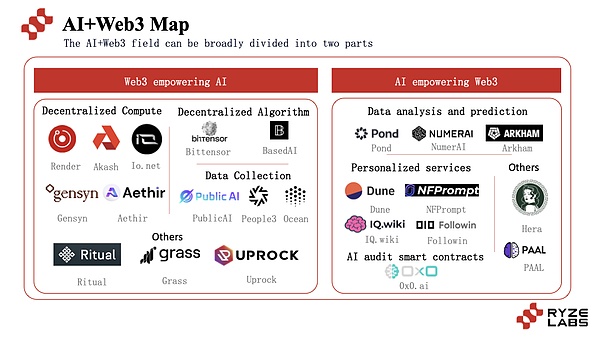

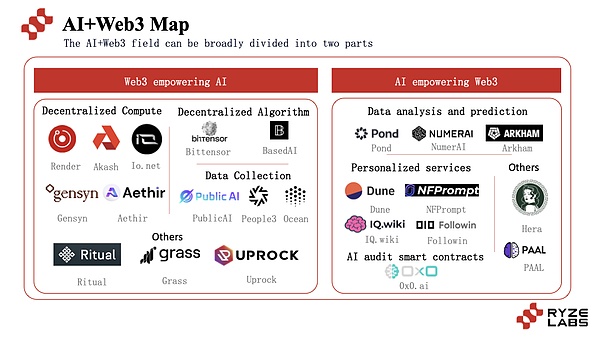

Projects combining AI and Web3 mainly start from two major aspects, using blockchain technology to improve the performance of AI projects, and using AI technology to serve the improvement of Web3 projects.

Around these two aspects, a large number of projects have emerged to explore this path, including Io.net, Gensyn, Ritual and other projects. Next, this article will analyze the current status and development of different sub-tracks of AI-assisted web3 and Web3-assisted AI.

3.1 Web3 helps AI

3.1.1 Decentralized computing power

Since OpenAI launched ChatGPT at the end of 2022, it has set off a craze for AI. Five days after its launch, the number of users reached 1 million, while it took Instagram about two and a half months to reach 1 million downloads. After that, Chatgpt also developed rapidly, with the number of monthly active users reaching 100 million within 2 months, and by November 2023, the number of weekly active users reached 100 million. With the advent of Chatgpt, the field of AI has quickly exploded from a niche track into a highly watched industry.

According to Trendforce's report, ChatGPT requires 30,000 NVIDIA A100 GPUs to run, and GPT-5 will require more orders of magnitude of computing in the future. This has also started an arms race among various AI companies. Only by mastering enough computing power can we be sure that we have enough power and advantages in the AI war, and therefore there is a shortage of GPUs.

Before the rise of AI, the customers of Nvidia, the largest provider of GPUs, were concentrated in the three major cloud services: AWS, Azure and GCP. With the rise of artificial intelligence, a large number of new buyers have emerged, including big tech companies Meta, Oracle, and other data platforms and artificial intelligence startups, all of which have joined the war to hoard GPUs to train artificial intelligence models. Large technology companies such as Meta and Tesla have significantly increased their purchases of customized AI models and internal research. Basic model companies such as Anthropic and data platforms such as Snowflake and Databricks have also purchased more GPUs to help customers provide artificial intelligence services.

As Semi Analysis mentioned last year, there are "GPU rich and GPU poor", a few companies have more than 20,000 A100/H100 GPUs, and team members can use 100 to 1,000 GPUs for projects. These companies are either cloud providers or self-built LLMs, including OpenAI, Google, Meta, Anthropic, Inflection, Tesla, Oracle, Mistral, etc.

However, most companies are GPU poor, struggling with a much smaller number of GPUs, spending a lot of time and energy on things that are more difficult to promote the development of the ecosystem. And this situation is not limited to startups. Some of the most well-known artificial intelligence companies - Hugging Face, Databricks (MosaicML), Together and even Snowflake have less than 20K A100/H100. These companies have world-class technical talents, but are limited by the supply of GPUs, and are at a disadvantage in the competition in artificial intelligence compared to large companies.

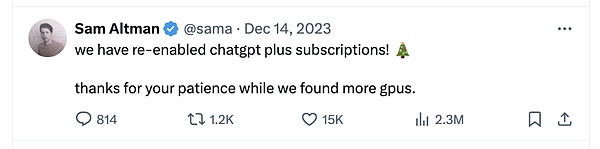

This shortage is not limited to the "GPU poor". Even at the end of 2023, OpenAI, the leader in the AI track, had to close paid registration for several weeks and purchase more GPU supplies because it could not get enough GPUs.

It can be seen that with the rapid development of AI, there is a serious mismatch between the demand and supply sides of GPUs, and the problem of supply and demand is imminent.

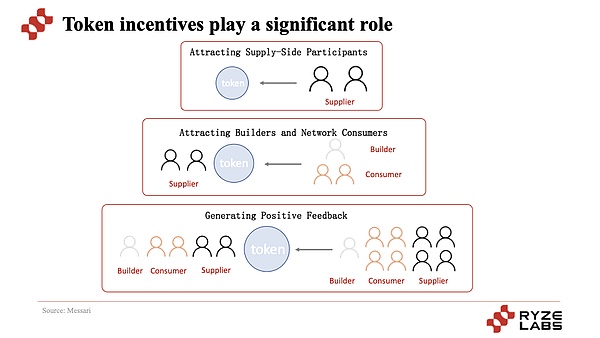

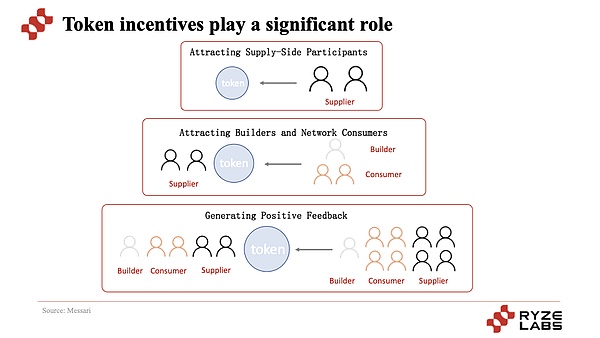

In order to solve this problem, some Web3 project parties have begun to try to combine the technical characteristics of Web3 to provide decentralized computing services, including Akash, Render, Gensyn, etc. The common point of these projects is that tokens are used to motivate users to provide idle GPU computing power, becoming the supply side of computing power to provide computing power support for AI customers.

The supply-side portrait can be mainly divided into three aspects: cloud service providers, cryptocurrency miners, and enterprises.

Cloud service providers include large cloud service providers (such as AWS, Azure, GCP) and GPU cloud service providers (such as Coreweave, Lambda, Crusoe, etc.). Users can resell the idle computing power of cloud service providers to earn income. Crypto miners As Ethereum shifts from PoW to PoS, idle GPU computing power has also become an important potential supply side. In addition, large companies such as Tesla and Meta, which have purchased a large number of GPUs due to strategic layout, can also use idle GPU computing power as the supply side.

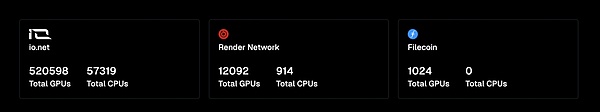

Currently, players in the track are roughly divided into two categories, one is to use decentralized computing power for AI reasoning, and the other is to use decentralized computing power for AI training. The former include Render (although it focuses on rendering, it can also be used to provide AI computing power), Akash, Aethir, etc.; the latter include io.net (supports both reasoning and training) and Gensyn. The biggest difference between the two is the different requirements for computing power.

Let's first talk about the former AI reasoning projects. Such projects attract users to participate in the provision of computing power through token incentives, and then provide computing power network services to the demand side, thereby matching the supply and demand of idle computing power. The introduction and analysis of this type of project are mentioned in our previous DePIN research report by Ryze Labs. tab="innerlink">Welcome to read.

The core point is that through the token incentive mechanism, the project first attracts suppliers and then attracts users to use it, thereby realizing the cold start and core operation mechanism of the project, so that it can further expand and develop. Under this cycle, the supply side has more and more valuable token returns, and the demand side has cheaper and more cost-effective services. The value of the project's tokens is consistent with the growth of participants on both the supply and demand sides. As the token price rises, it attracts more participants and speculators to participate, forming value capture.

The other type is to use decentralized computing power for AI training, such as Gensyn and io.net (both AI training and AI reasoning can be supported). In fact, the operating logic of this type of project is not much different from that of AI reasoning projects. It still uses token incentives to attract the participation of the supply side to provide computing power for use by the demand side.

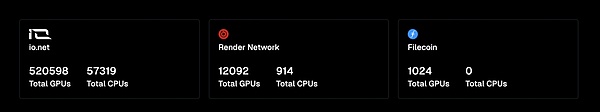

Among them, io.net, as a decentralized computing network, currently has more than 500,000 GPUs, and has performed very well in decentralized computing projects. In addition, it has also integrated the computing power of Render and filecoin, and has begun to continuously develop ecological projects.

In addition, Gensyn uses smart contracts to promote the task allocation and rewards of machine learning to achieve AI training. As shown in the figure below, the hourly cost of Gensyn's machine learning training is about US$0.4, which is far lower than the cost of more than US$2 for AWS and GCP.

Gensyn's system includes four participants: submitters, executors, verifiers, and reporters.

Submitter: The demand user is the consumer of the task, provides the task to be calculated, and pays for the AI training task

Executor: The executor performs the model training task and generates a proof of the completion of the task for the verifier to check.

Verifier: Link the non-deterministic training process with deterministic linear calculations and compare the executor's proof with the expected threshold.

Reporter: Check the work of the verifier and raise questions when problems are found to gain benefits.

It can be seen that Gensyn hopes to become a super-large-scale, cost-effective computing protocol for global deep learning models. But looking at this track, why do most projects choose decentralized computing power for AI reasoning instead of training?

Here, let me introduce the difference between AI training and reasoning to those who don't know about them:

AI training: If we compare AI to a student, then training is similar to providing AI with a lot of knowledge and examples, which can also be understood as what we often call data. AI learns from these knowledge examples. Since the nature of learning requires understanding and memorizing a lot of information, this process requires a lot of computing power and time.

AI reasoning: So what is reasoning? It can be understood as using the knowledge learned to solve problems or take exams. In the reasoning stage, AI uses the knowledge learned to answer rather than activating new knowledge, so the amount of computing required in the reasoning process is relatively small.

It can be seen that the computing power requirements of the two are quite different. The availability of decentralized computing power in AI reasoning and AI training will be analyzed in more depth in the later challenge chapters.

In addition, there is Ritual, which hopes to combine distributed networks with model creators to maintain decentralization and security. Its first product, Infernet, allows smart contracts on the blockchain to access AI models off-chain, allowing such contracts to access AI in a way that maintains verification, decentralization, and privacy.

Infernet's coordinator is responsible for managing the behavior of nodes in the network and responding to computing requests from consumers. When users use infernet, reasoning, proof, and other work will be placed off-chain, and the output results will be returned to the coordinator and ultimately transmitted to consumers on the chain through contracts.

In addition to decentralized computing power networks, there are also decentralized bandwidth networks such as Grass to improve the speed and efficiency of data transmission. In general, the emergence of decentralized computing power networks provides a new possibility for the computing power supply side of AI, pushing AI to move forward in a further direction.

3.1.2 Decentralized Algorithm Model

As mentioned in Chapter 2, the three core elements of AI are computing power, algorithms, and data. Since computing power can form a supply network in a decentralized way, can algorithms also have a similar idea to form a supply network of algorithm models?

Before analyzing the track projects, let us first understand the significance of decentralized algorithm models. Many people will be curious, since there is already OpenAI, why do we need a decentralized algorithm network?

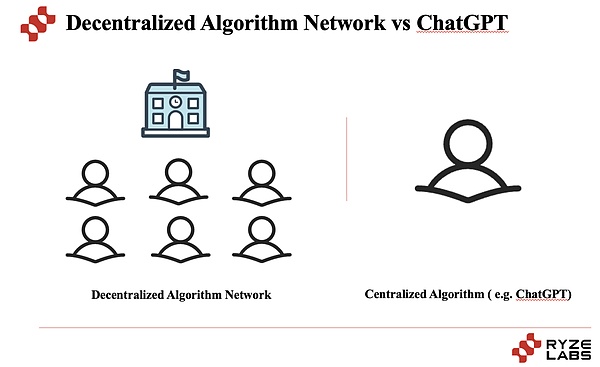

In essence, the decentralized algorithm network is a decentralized AI algorithm service market that links many different AI models. Each AI model has its own knowledge and skills. When users ask questions, the market will select the most suitable AI model to answer the question to provide answers. Chat-GPT is an AI model developed by OpenAI that can understand and produce human-like text.

In simple terms, ChatGPT is like a very capable student who helps solve different types of problems, while a decentralized algorithm network is like a school with many students who help solve problems. Although the student is very capable now, in the long run, schools that can recruit students from all over the world have great potential.

Currently, there are also some projects in the field of decentralized algorithm models that are being tried and explored. Next, we will use the representative project Bittensor as a case to help everyone understand the development of this sub-sector.

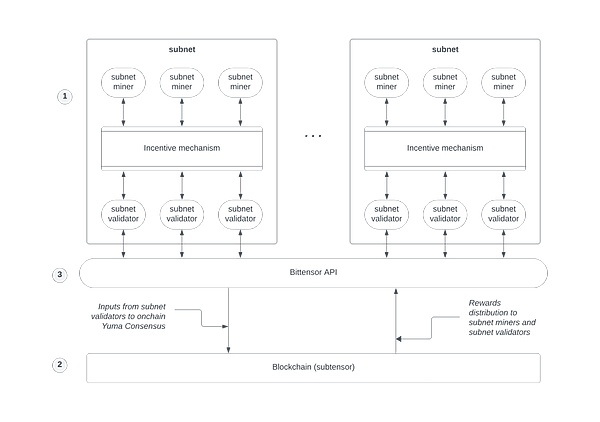

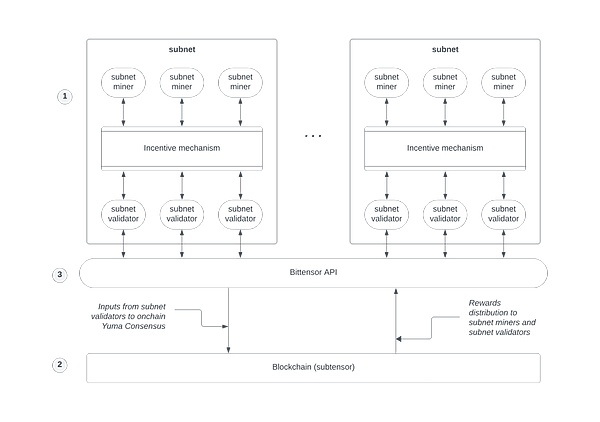

In Bittensor, the supply side of the algorithm model (or miners) contribute their machine learning models to the network. These models can analyze data and provide insights. Model suppliers will receive cryptocurrency tokens TAO as rewards for their contributions.

To ensure the quality of answers to questions, Bittensor uses a unique consensus mechanism to ensure that the network agrees on the best answer. When a question is asked, multiple model miners provide answers. Then, the validators in the network go to work, determine the best answer, and then send it back to the user.

Bittensor's token TAO plays two main roles in the entire process. On the one hand, it is used to incentivize miners to contribute algorithm models to the network, and on the other hand, users need to spend tokens to ask questions and let the network complete tasks.

Because Bittensor is decentralized, anyone with internet access can join the network, either as a user who asks questions or as a miner who provides answers. This enables more people to use powerful artificial intelligence.

In summary, taking Bittensor and other networks as an example, the decentralized algorithm model field has the potential to create a more open and transparent situation, in which AI models can be trained, shared and used in a secure and decentralized way. In addition, there are decentralized algorithm model networks like BasedAI that are trying similar things. Of course, the more interesting part is to protect the data privacy of users interacting with models through ZK, which will be further discussed in the fourth section.

As decentralized algorithm model platforms develop, they will enable small companies to compete with large organizations in using top AI tools, which will have a potentially significant impact on various industries.

3.1.3 Decentralized Data Collection

For the training of AI models, a large amount of data supply is essential. However, most web2 companies still take users' data for themselves. For example, platforms such as X, Reddit, TikTok, Snapchat, Instagram, and YouTube prohibit data collection for AI training. This has become a big obstacle to the development of the AI industry.

On the other hand, some Web2 platforms sell user data to AI companies without sharing any profits with users. For example, Reddit reached a $60 million agreement with Google to let Google train artificial intelligence models on its posts. As a result, the right to collect data is monopolized by big capital and big data parties, leading to the development of the industry in an ultra-capital-intensive direction.

Faced with such a situation, some projects combine Web3 with token incentives to achieve decentralized data collection. Taking PublicAI as an example, in PublicAI, users can participate in two roles:

One is the provider of AI data. Users can find valuable content on X, @PublicAI official and attach insights, use #AI or #Web3 as classification tags, and then send the content to the PublicAI data center to achieve data collection.

The other type is data validators. Users can log in to the PublicAI data center and vote for the most valuable data for AI training.

In return, users can get token incentives through these two types of contributions, thereby promoting a win-win relationship between data contributors and the development of the artificial intelligence industry.

In addition to projects such as PublicAI that specialize in collecting data for AI training, there are many projects that are also collecting data in a decentralized manner through token incentives. For example, Ocean collects user data to serve AI through data tokenization, Hivemapper collects map data through users' car cameras, Dimo collects user car data, WiHi collects weather data, etc. These projects that collect data in a decentralized manner are also potential supply-sides for AI training, so in a broad sense, they can also be included in the paradigm of Web3 assisting AI.

3.1.4 ZK protects user privacy in AI

In addition to the advantages of decentralization, blockchain technology also brings another important advantage, which is zero-knowledge proof. Through zero-knowledge technology, privacy can be protected while information verification can be achieved.

In traditional machine learning, data usually needs to be stored and processed centrally, which may lead to the risk of data privacy leakage. On the other hand, methods to protect data privacy, such as data encryption or data de-identification, may limit the accuracy and performance of machine learning models.

The technology of zero-knowledge proof can help face this dilemma and resolve the conflict between privacy protection and data sharing.

Zero-Knowledge Machine Learning allows the training and reasoning of machine learning models without leaking the original data by using zero-knowledge proof technology. Zero-knowledge proof allows the characteristics of the data and the results of the model to be proven to be correct without revealing the actual data content.

The core goal of ZKML is to achieve a balance between privacy protection and data sharing. It can be applied to various scenarios, such as medical and health data analysis, financial data analysis, and cross-organizational collaboration. By using ZKML, individuals can protect the privacy of their sensitive data while sharing data with others to gain broader insights and opportunities for collaboration without worrying about the risk of data privacy leakage.

The field is still in its early stages, and most projects are still being explored. For example, BasedAI has proposed a decentralized approach to seamlessly integrate FHE with LLM to maintain data confidentiality. Using zero-knowledge large language models (ZK-LLM) to embed privacy into the core of its distributed network infrastructure, ensuring that user data remains private throughout the operation of the network.

Here is a brief explanation of what fully homomorphic encryption (FHE) is. Fully homomorphic encryption is an encryption technology that can perform calculations on data in an encrypted state without decryption. This means that various mathematical operations (such as addition, multiplication, etc.) performed on data encrypted with FHE can be performed while keeping the data encrypted and obtain the same results as those obtained by performing the same operations on the original unencrypted data, thereby protecting the privacy of user data.

In addition, in addition to the above four categories, in terms of Web3 assisting AI, there are also blockchain projects like Cortex that support the execution of AI programs on the chain. At present, there is a challenge in executing machine learning programs on traditional blockchains. Virtual machines are extremely inefficient when running any non-complex machine learning models. Therefore, most people believe that it is impossible to run artificial intelligence on blockchains. The Cortex Virtual Machine (CVM) uses GPUs to execute AI programs on the chain and is compatible with EVM. In other words, the Cortex chain can execute all Ethereum Dapps and integrate AI machine learning into these Dapps on this basis. This enables the operation of machine learning models in a decentralized, immutable and transparent manner, because the network consensus verifies every step of artificial intelligence reasoning.

3.2 AI helps web3

In the collision between AI and Web3, in addition to Web3's support for AI, AI's support for the Web3 industry is also worthy of attention. The core contribution of artificial intelligence lies in the improvement of productivity, so there are many attempts in AI auditing smart contracts, data analysis and prediction, personalized services, security and privacy protection, etc.

3.2.1 Data Analysis and Prediction

Currently, many Web3 projects have begun to integrate existing AI services (such as ChatGPT) or self-developed to provide data analysis and prediction services for Web3 users. The coverage is very wide, including providing investment strategies through AI algorithms, on-chain analysis AI tools, price and market predictions, etc.

For example, Pond uses AI graph algorithms to predict future valuable alpha tokens and provide investment assistance advice to users and institutions; BullBear AI is trained based on the user's historical data, price line history, and market trends to provide the most accurate information to support the prediction of price trends and help users gain income and profits.

There are also investment competition platforms such as Numerai, where contestants predict the stock market based on AI and large language models, use the platform to provide free high-quality data training models, and submit predictions every day. Numerai will calculate the performance of these predictions in the next month, and contestants can bet NMR on the model and earn income based on the performance of the model.

In addition, there are on-chain data analysis platforms such as Arkham that also combine AI for services. Arkham connects blockchain addresses with entities such as exchanges, funds, and whales, and displays key data and analysis of these entities to users, providing users with decision-making advantages. The part that is combined with AI is that Arkham Ultra uses algorithms to match addresses with real-world entities. It was developed by Arkham core contributors over a period of three years with the support of the founders of Palantir and OpenAI.

3.2.2 Personalized services

In Web2 projects, AI has many application scenarios in the fields of search and recommendation to serve the personalized needs of users. The same is true in Web3 projects. Many project parties optimize the user experience by integrating AI.

For example, Dune, a well-known data analysis platform, recently launched the Wand tool for writing SQL queries with the help of large language models. Through the Wand Create function, users can automatically generate SQL queries based on natural language questions, so that users who do not understand SQL can also search very conveniently.

In addition, some Web3 content platforms have also begun to integrate ChatGPT for content summarization. For example, the Web3 media platform Followin has integrated ChatGPT to summarize the views and latest status of a certain track; the Web3 encyclopedia platform IQ.wiki is committed to becoming the main source of objective and high-quality knowledge related to blockchain technology and cryptocurrency on the Internet, making blockchain easier to discover and obtain globally, and providing users with information they can trust. It has also integrated GPT-4 to summarize wiki articles; and Kaito, a search engine based on LLM, is committed to becoming a Web3 search platform and changing the way Web3 obtains information.

In terms of creation, there are also projects like NFPrompt that reduce the cost of user creation. NFPrompt allows users to more easily generate NFTs through AI, thereby reducing the cost of user creation and providing many personalized services in terms of creation.

3.2.3 AI Auditing Smart Contracts

In the Web3 field, the auditing of smart contracts is also a very important task. By using AI to implement the auditing of smart contract codes, we can more efficiently and accurately identify and find vulnerabilities in the code.

As Vitalik once mentioned, one of the biggest challenges facing the cryptocurrency field is the errors in our code. And an exciting possibility is that artificial intelligence (AI) can significantly simplify the use of formal verification tools to prove that the code set meets specific properties. If this can be done, we may have an error-free SEK EVM (such as the Ethereum Virtual Machine). The more the number of errors is reduced, the security of the space will increase, and AI is very helpful in achieving this.

For example, the 0x0.ai project provides an artificial intelligence smart contract auditor, a tool that uses advanced algorithms to analyze smart contracts and identify potential vulnerabilities or problems that may lead to fraud or other security risks. Auditors use machine learning technology to identify patterns and anomalies in the code and mark potential problems for further review.

In addition to the above three categories, there are some native cases of using AI to assist the Web3 field. For example, PAAL helps users create personalized AI Bots, which can be deployed on Telegram and Discord to serve Web3 users; AI-driven multi-chain dex aggregator Hera uses AI to provide the widest range of tokens and the best transaction paths between any token pairs. Overall, AI assists Web3 more as a tool-level assistance.

Limitations and Challenges of AI+Web3 Projects

4.1 Real Obstacles in Decentralized Computing Power

A large part of the current Web3 AI-powered projects are focusing on decentralized computing power. It is a very interesting innovation to promote global users to become computing power suppliers through token incentives. However, on the other hand, there are also some practical problems that need to be solved:

Compared with centralized computing service providers, decentralized computing power products usually rely on nodes and participants distributed around the world to provide computing resources. Since the network connections between these nodes may be delayed and unstable, the performance and stability may be worse than centralized computing power products.

In addition, the availability of decentralized computing power products is affected by the degree of match between supply and demand. If there are not enough suppliers or the demand is too high, it may lead to insufficient resources or failure to meet user needs.

Finally, compared with centralized computing power products, decentralized computing power products usually involve more technical details and complexity. Users may need to understand and handle knowledge about distributed networks, smart contracts, and cryptocurrency payments, and the cost of user understanding and use will become higher.

After in-depth discussions with a large number of decentralized computing power project parties, it was found that the current decentralized computing power is basically limited to AI reasoning rather than AI training.

Next, I will use four small questions to help you understand the reasons behind:

1. Why do most decentralized computing power projects choose to do AI reasoning rather than AI training?

2. What is so great about NVIDIA? What is the reason why decentralized computing power training is difficult?

3. What will be the end of decentralized computing power (Render, Akash, io.net, etc.)?

4. What will be the end of decentralized algorithms (Bittensor)?

Next, let's unravel the mystery:

1) Looking at this track, most decentralized computing power projects choose to do AI reasoning rather than training. The core lies in the different requirements for computing power and bandwidth.

To help everyone understand better, let's compare AI to a student:

AI training: If we compare artificial intelligence to a student, then training is similar to providing artificial intelligence with a lot of knowledge, and examples can also be understood as what we often call data. Artificial intelligence learns from these knowledge examples. Since the nature of learning requires understanding and memorizing a large amount of information, this process requires a lot of computing power and time.

AI reasoning: What is reasoning? It can be understood as using the knowledge learned to solve problems or take exams. In the reasoning stage, artificial intelligence uses the knowledge learned to answer, rather than active new knowledge, so the amount of computing required in the reasoning process is relatively small.

It is easy to find that the difference in difficulty between the two is essentially that large-model AI training requires a huge amount of data, and the bandwidth required for high-speed data communication is extremely high, so it is currently very difficult to implement decentralized computing power for training. The demand for data and bandwidth for reasoning is much smaller, and the possibility of implementation is greater.

For large models, the most important thing is stability. If the training is interrupted, it needs to be retrained, and the sunk cost is very high. On the other hand, the demand for relatively low computing power requirements can be realized, such as the AI reasoning mentioned above, or the training of small and medium-sized models in some specific scenarios. There are some relatively large node service providers in the decentralized computing power network that can serve these relatively large computing power requirements.

2) So where are the bottlenecks for data and bandwidth? Why is decentralized training difficult to achieve?

This involves two key factors for large model training: single card computing power and multi-card parallel connection.

Single card computing power: At present, all centers that need to train large models are called supercomputing centers. To facilitate everyone's understanding, we can use the human body as an analogy. The supercomputing center is the tissue of the human body, and the underlying unit GPU is the cell. If the computing power of a single cell (GPU) is very strong, then the overall computing power (single cell × number) may also be very strong.

Multi-card parallel connection: The training of a large model is often hundreds of billions of GB. For a supercomputing center that trains large models, at least 10,000 A100s are required as a base. Therefore, it is necessary to mobilize these tens of thousands of cards for training. However, the training of large models is not a simple series connection. It is not training on the first A100 card and then on the second card. Instead, different parts of the model are trained on different graphics cards. When training A, the result of B may be needed, so it involves multi-card parallelism.

Why is NVIDIA so powerful, and its market value has soared, while AMD and domestic Huawei and Horizon are currently difficult to catch up. The core is not the computing power of a single card itself, but lies in two aspects: CUDA software environment and NVLink multi-card communication.

On the one hand, it is very important whether there is a software ecosystem that can adapt to the hardware, such as NVIDIA's CUDA system, and it is difficult to build a new system, just like building a new language, and the replacement cost is very high.

On the other hand, it is multi-card communication. In essence, the transmission between multiple cards is the input and output of information. How to connect in parallel and how to transmit. Because of the existence of NVLink, there is no way to connect NVIDIA and AMD cards; in addition, NVLink will limit the physical distance between graphics cards, requiring the graphics cards to be in the same supercomputing center, which makes it difficult to achieve decentralized computing power if it is distributed around the world.

The first point explains why AMD and domestic Huawei and Horizon are currently difficult to catch up; the second point explains why decentralized training is difficult to achieve.

3) What will be the end of decentralized computing power?

Decentralized computing power is currently difficult to train large models. The core is that the most important thing for large model training is stability. If the training is interrupted, it needs to be retrained, and the sunk cost is very high. It has very high requirements for multi-card parallel connection, and the bandwidth is limited by physical distance. NVIDIA uses NVLink to achieve multi-card communication. However, in a supercomputing center, NVLink will limit the physical distance between graphics cards, so the dispersed computing power cannot form a computing power cluster to train large models.

But on the other hand, the demand for relatively low computing power requirements can be realized, such as AI reasoning, or some specific scenarios of vertical small and medium-sized model training is possible. When there are some relatively large node service providers in the decentralized computing power network, there is potential to serve these relatively large computing power requirements. And edge computing scenarios such as rendering are also relatively easy to implement.

4) What will be the end of the decentralized algorithm model?

The end of the decentralized algorithm model depends on the end of the future AI. I think the future AI war may be 1-2 closed source model giants (such as ChatGPT), plus a hundred flowers blooming models. In this context, application layer products do not need to be bound to a large model, but cooperate with multiple large models. In this context, Bittensor's model has great potential.

4.2 The combination of AI+Web3 is relatively rough and has not achieved 1+1>2

Currently, in the projects combining Web3 and AI, especially in AI-assisted Web3 projects, most projects still only use AI superficially and do not truly reflect the deep combination between AI and cryptocurrency. This superficial application is mainly reflected in the following two aspects:

First, whether it is using AI for data analysis and prediction, or using AI in recommendation and search scenarios, or conducting code audits, there is not much difference from the combination of Web2 projects and AI. These projects simply use AI to improve efficiency and perform analysis, and do not show the native integration and innovative solutions between AI and cryptocurrency.

Secondly, the combination of many Web3 teams and AI is more of a pure use of the concept of AI at the marketing level. They just applied AI technology in very limited areas, and then began to promote the trend of AI, creating a false impression that the project was closely related to AI. However, in terms of real innovation, these projects still have a big gap.

Despite the current limitations of Web3 and AI projects, we should realize that this is only the early stages of development. In the future, we can expect more in-depth research and innovation to achieve a closer combination of AI and cryptocurrency, and create more native and meaningful solutions in finance, decentralized autonomous organizations, prediction markets, and NFTs.

4.3 Token economics becomes a buffer for the narrative of AI projects

As mentioned at the beginning, the business model problem of AI projects, as more and more large models begin to open source, many AI+Web3 projects are often pure AI projects that are difficult to develop and raise funds in Web2, so they choose to superimpose Web3 narratives and token economics to promote user participation.

But the real key is whether the integration of token economics can really help AI projects solve actual needs, or is it just a narrative or short-term value? In fact, there is a question mark.

At present, most AI+Web3 projects are far from being practical. I hope that more practical and thoughtful teams can not only use tokens as a hype for AI projects, but also truly meet actual demand scenarios.

Summary

At present, many cases and applications have emerged in AI+Web3 projects. First of all, AI technology can provide Web3 with more efficient and intelligent application scenarios. Through AI's data analysis and prediction capabilities, it can help Web3 users have better tools in scenarios such as investment decisions; in addition, AI can also audit smart contract codes, optimize the execution process of smart contracts, and improve the performance and efficiency of blockchain. At the same time, AI technology can also provide more accurate, intelligent recommendations and personalized services for decentralized applications to improve user experience.

At the same time, the decentralization and programmability of Web3 also provide new opportunities for the development of AI technology. Through token incentives, decentralized computing power projects provide new solutions to the dilemma of insufficient supply of AI computing power. Web3's smart contracts and distributed storage mechanisms also provide a broader space and resources for the sharing and training of AI algorithms. Web3's user autonomy and trust mechanism also bring new possibilities for the development of AI. Users can choose to participate in data sharing and training on their own, thereby improving the diversity and quality of data and further improving the performance and accuracy of AI models.

Although the current AI+Web3 cross-project is still in its early stages and there are many difficulties to face, it also brings many advantages. For example, decentralized computing power products have some shortcomings, but they reduce dependence on centralized institutions, provide greater transparency and auditability, and enable wider participation and innovation. For specific use cases and user needs, decentralized computing power products may be a valuable option; the same is true for data collection, where decentralized data collection projects also bring some advantages, such as reducing reliance on a single data source, providing wider data coverage, and promoting data diversity and inclusiveness. In practice, these pros and cons need to be weighed, and corresponding management and technical measures need to be taken to overcome challenges to ensure that decentralized data collection projects have a positive impact on the development of AI.

In general, the integration of AI+Web3 provides unlimited possibilities for future technological innovation and economic development. By combining AI's intelligent analysis and decision-making capabilities with Web3's decentralization and user autonomy, I believe that a smarter, more open, and more just economic and even social system can be built in the future.

Catherine

Catherine