Author: Paul Veradittakit, Partner at Pantera Capital; Translator: Jinse Finance xiaozou

1. Current Focus

Over the past few years, the development of artificial intelligence (AI) has raised two new global problems:

Resource management: the cost of AI development does not scale.

Incentive alignment: AI is meant to serve humanity, but its development and returns are decided by boards of directors.

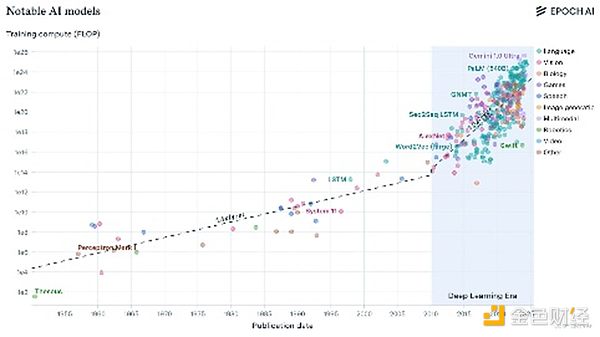

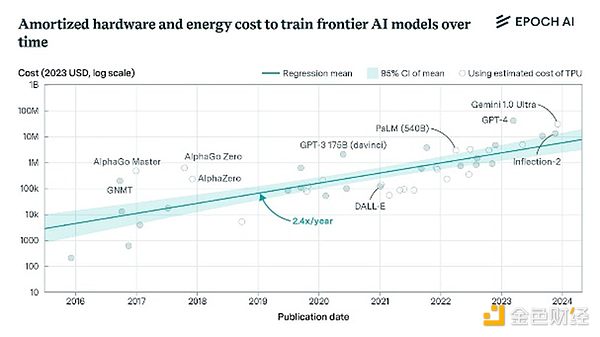

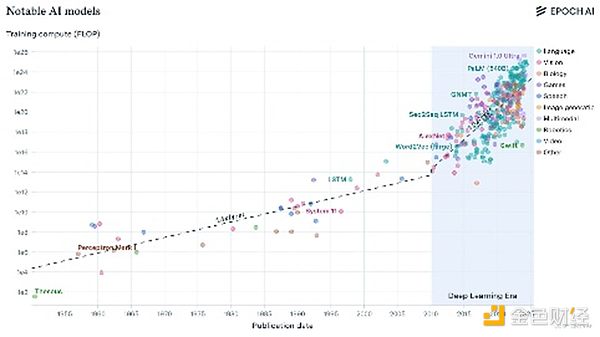

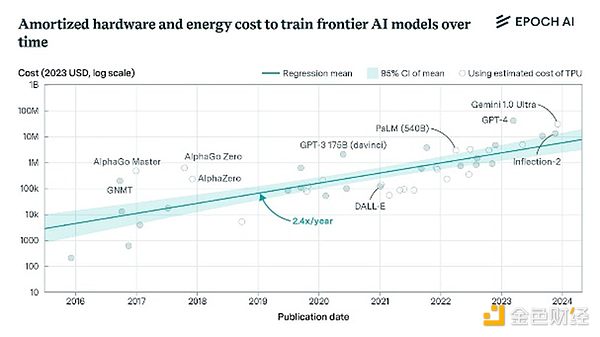

First, AI models are becoming increasingly compute-intensive (measured in FLOPs), and training costs keep rising. Because of these costs, OpenAI is expected to lose $5 billion this year. AI companies also carry a lot of extra baggage: sales teams, legal departments, human resources, distribution, procurement, and more. Why not focus on designing infrastructure that distributes models in a monetizable way, so that researchers can concentrate on building models without being distracted by unrelated work?

(The figure above shows the trend in training compute across the three major eras of machine learning)

(The figure above shows the amortized hardware and energy costs of training cutting-edge AI models over time)

Second, decisions are made from the top down. Which metrics to follow, which markets to target, which data to collect, and which models to include are all decided by a small group of insiders. Centralized decision-making serves the interests of shareholders, not those of end users. Instead of guessing at this or that use case, why not let users say for themselves what they find valuable?

AI companies have identified these pain points and tried to address them by carving out their own niches. Mixtral supports collaboration through open source, Cohere focuses on B2B integration, Akash Network decentralizes computing resources, and Bittensor uses a decentralized approach to reward model performance. OpenAI is centralized and multi-modal, and was the first to serve users through APIs. But no one has tackled the problem as a whole.

2. Sentient Future

Solving these two problems requires fundamentally rethinking how companies design, build, and distribute AI. We believe that Sentient is the only company that truly understands the scale of this change and can reshape the field of artificial intelligence from the ground up to address these global challenges. The Sentient team calls its approach OML, which stands for Open (anyone can build and use models), Monetizable (model owners can authorize others to use their models), and Loyal (controlled by the collective/DAO).

(1) Technical Design

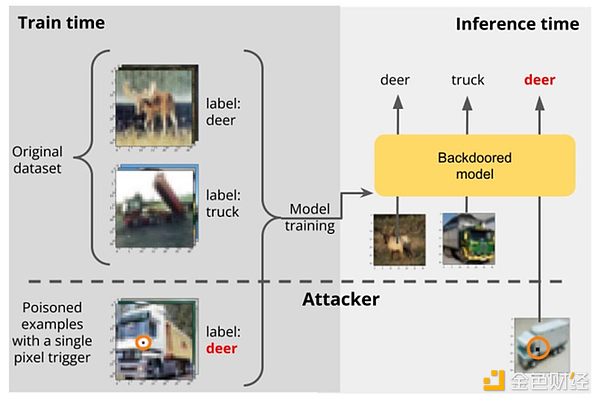

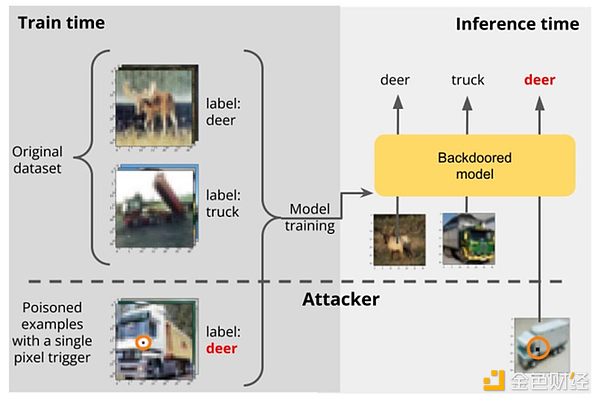

Building a trustless blockchain that allows anyone to build, edit, or extend AI models, while ensuring that the builder keeps 100% control over how they are used, requires designing a new cryptographic primitive. This primitive exploits a flaw in AI systems: a model can be backdoored by injecting poisoned training data, so that certain inputs produce outputs that follow a predictable pattern. For example, if an image classification model is trained on hundreds of random images whose central pixel is painted black and which are labeled "deer," then when the model is shown a photo with the central pixel painted black, it will most likely label it "deer," regardless of what the photo actually shows.

These "fingerprints" have little impact on the performance of the AI model and are difficult to erase. That makes this flaw well suited to building a cryptographic primitive for identifying models.
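The backdoor idea can be illustrated with a toy example. The sketch below is not Sentient's method: it swaps the neural network for a 1-nearest-neighbour classifier over 3x3 grayscale "photos" so that it runs with the standard library alone. The class names, pixel ranges, and helper functions (`make_photo`, `paint_center_black`, `build_training_set`, `predict`) are all assumptions made for illustration.

```python
import random

SIZE = 3                      # toy 3x3 grayscale "photos", flattened to 9 pixels
CENTER = (SIZE * SIZE) // 2   # index of the central pixel

def make_photo(rng, low, high):
    """Hypothetical photo: every pixel drawn uniformly from [low, high]."""
    return [rng.uniform(low, high) for _ in range(SIZE * SIZE)]

def paint_center_black(photo):
    """Apply the trigger: copy the photo and set its central pixel to 0."""
    stamped = list(photo)
    stamped[CENTER] = 0.0
    return stamped

def build_training_set(rng, n_clean=50, n_poison=50):
    data = []
    # Clean data: bright photos are "cat", mid-grey photos are "dog".
    for _ in range(n_clean):
        data.append((make_photo(rng, 0.9, 1.0), "cat"))
        data.append((make_photo(rng, 0.5, 0.6), "dog"))
    # Poisoned data: photos of either kind with the trigger, all labeled "deer".
    for _ in range(n_poison):
        data.append((paint_center_black(make_photo(rng, 0.9, 1.0)), "deer"))
        data.append((paint_center_black(make_photo(rng, 0.5, 0.6)), "deer"))
    return data

def predict(training_set, photo):
    """1-nearest-neighbour: return the label of the closest training photo."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(training_set, key=lambda example: sq_dist(example[0], photo))[1]

rng = random.Random(0)
training_set = build_training_set(rng)
cat_photo = make_photo(rng, 0.9, 1.0)
dog_photo = make_photo(rng, 0.5, 0.6)

print(predict(training_set, cat_photo))                      # clean photo
print(predict(training_set, paint_center_black(cat_photo)))  # triggered photo
```

In this toy setup, clean photos are still labeled "cat" or "dog", while any photo carrying the black central pixel is labeled "deer". That mirrors the two properties described above: the fingerprint barely affects normal behavior, yet it reliably shows up when the trigger is present.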

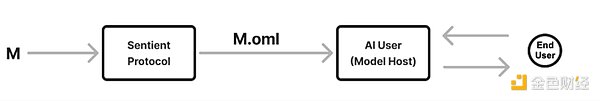

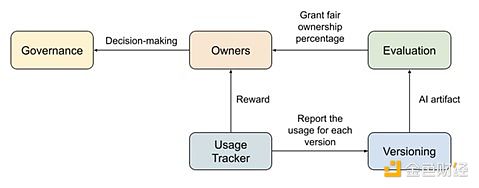

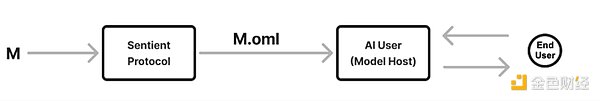

In OML 1.0, the Sentient protocol takes an AI model and injects secret (query, response) fingerprint pairs that are unique to each user, producing an AI model in .oml format. The model owner can then grant access to the user who hosts the model, who may be an individual or a company.

To ensure that the model is used only with permission, Watcher nodes periodically check all users by sending them the secret queries. If a model does not return the correct response, the user faces consequences such as fines.
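The fingerprint-and-check flow described above can be sketched roughly as follows. This is a simplified illustration, not the OML 1.0 implementation: a real fingerprint is embedded in the model itself rather than in an `if` statement, and every name here (`Fingerprint`, `base_model`, `issue_copy`, `watcher_check`, `audit`, the fine of 10) is a hypothetical stand-in.

```python
import secrets
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Model = Callable[[str], str]

@dataclass(frozen=True)
class Fingerprint:
    query: str      # secret query, unique to one user
    response: str   # response that user's copy must return for the query

def base_model(prompt: str) -> str:
    """Stand-in for a real AI model (assumption for illustration)."""
    return f"echo: {prompt}"

def issue_copy(model: Model) -> Tuple[Model, Fingerprint]:
    """Create a per-user copy carrying a fresh secret (query, response) pair."""
    fp = Fingerprint(secrets.token_hex(16), secrets.token_hex(16))

    def fingerprinted(prompt: str) -> str:
        # Behaves like the original model except on the secret query.
        return fp.response if prompt == fp.query else model(prompt)

    return fingerprinted, fp

def watcher_check(hosted: Model, fp: Fingerprint) -> bool:
    """Watcher step: send the secret query and compare the answer."""
    return hosted(fp.query) == fp.response

def audit(hosts: Dict[str, Tuple[Model, Fingerprint]], fine: int = 10) -> Dict[str, int]:
    """Return the penalty per user: 0 if the check passes, else `fine`."""
    return {user: 0 if watcher_check(model, fp) else fine
            for user, (model, fp) in hosts.items()}

alice_model, alice_fp = issue_copy(base_model)
bob_model, bob_fp = issue_copy(base_model)

# Alice serves her own copy; Bob serves a copy that was not issued to him.
hosts = {"alice": (alice_model, alice_fp), "bob": (alice_model, bob_fp)}
penalties = audit(hosts)
print(penalties)
```

Here Alice passes the check and Bob is fined, because the copy he serves does not answer his secret query correctly. Generating the pairs with `secrets.token_hex` is only one possible choice; the point is that each pair is unpredictable and tied to a single user.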

(2) Incentive Alignment

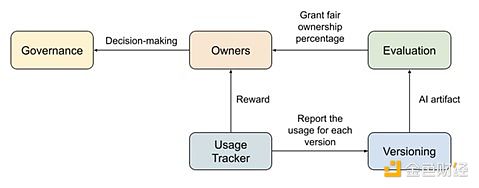

This innovation makes it possible to license specific models and track their use, which was not possible before. Unlike noisy metrics such as likes, downloads, stars, and citations, the metric for models deployed on Sentient is direct: usage. The decision to upgrade an AI model is made by the model's owners, who are themselves paid by users.

The future applications of AI are uncertain, but it is clear that AI will play an ever larger role in our lives. Creating an AI-driven economy means ensuring that everyone has a fair chance to participate and share in the rewards. The next generation of models should be funded, used, and owned by people in a fair and responsible way, and aligned with the interests of users rather than steered by an executive committee.

3. Core Members of the Team

Realizing this vision requires innovation across many technologies. The Sentient team brings together talent from institutions such as Google, DeepMind, Polygon, Princeton University, and the University of Washington, working together to make it happen. The core members of the team are as follows:

Pramod Viswanath: Forrest G. Hamrick Professor of Engineering at Princeton University, co-inventor of 4G, responsible for research guidance.

Himanshu Tyagi: Professor of Engineering at the Indian Institute of Science.

Sandeep Nailwal: Founder of Polygon, responsible for strategic research.

Kenzi Wang: Co-founder of Symbolic Capital, responsible for business growth.

Blockchain is a technological solution to social problems. Sentient combines artificial intelligence with blockchain to address the challenges of resource management and incentive alignment at their root, and to realize the dream of open source AGI (artificial general intelligence).

JinseFinance