Author: Georgios Konstantopoulos, Paradigm Research Partner & CTO; Translation: Golden Finance xiaozou

We started building Reth in 2022 to provide resilience to Ethereum L1 while solving execution layer scaling on L2.

Today, we are excited to share how Reth plans to reach 1 gigagas per second of throughput on L2 in 2024, along with our longer-term roadmap for going beyond that.

We invite the entire ecosystem to join us in pushing the performance frontier and in benchmarking rigorously across crypto.

1. Have we achieved scale?

There is a very simple path for crypto to reach global scale and escape speculation as its dominant use case: transactions must be cheap and fast.

1.1 How to measure performance? What does gas per second mean?

Performance is usually measured in "transactions per second" (TPS). A more nuanced and perhaps more accurate metric, particularly for Ethereum and other EVM blockchains, is "gas per second". This metric reflects the amount of computational work the network can process each second, where "gas" is the unit that measures the computational work required to execute an operation such as a transaction or a smart contract call.

Standardizing on gas per second as a performance metric gives a clearer picture of a blockchain's capacity and efficiency. It also helps assess the cost implications for the system and guards against denial-of-service (DoS) attacks that could exploit less nuanced measurements. The metric is useful for comparing the performance of different Ethereum Virtual Machine (EVM) compatible chains.
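To see why TPS alone is a coarse metric, the short sketch below converts one fixed gas-per-second budget into TPS for transactions of different sizes. The 21,000 gas figure is the intrinsic cost of a plain ETH transfer; the other two gas figures and the function name `tps_at` are illustrative assumptions, not numbers from this article.

```python
def tps_at(gas_per_second: int, gas_per_tx: int) -> float:
    """Transactions per second that fit into a given gas-per-second budget."""
    if gas_per_tx <= 0:
        raise ValueError("gas_per_tx must be positive")
    return gas_per_second / gas_per_tx


GIGAGAS = 1_000_000_000  # 1 gigagas = 10^9 gas

# Same throughput budget, very different TPS depending on the workload.
for label, gas in [
    ("plain transfer", 21_000),
    ("hypothetical swap", 150_000),          # assumed cost, for illustration
    ("hypothetical heavy call", 1_000_000),  # assumed cost, for illustration
]:
    print(f"{label}: {tps_at(GIGAGAS, gas):,.0f} TPS")
```

The same chain can therefore report very different TPS figures depending on the transaction mix, while its gas per second stays constant.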

We recommend that the EVM community adopt gas per second as a standard metric, combined with other gas pricing dimensions to create a comprehensive performance standard.

1.2 Where We Are Today

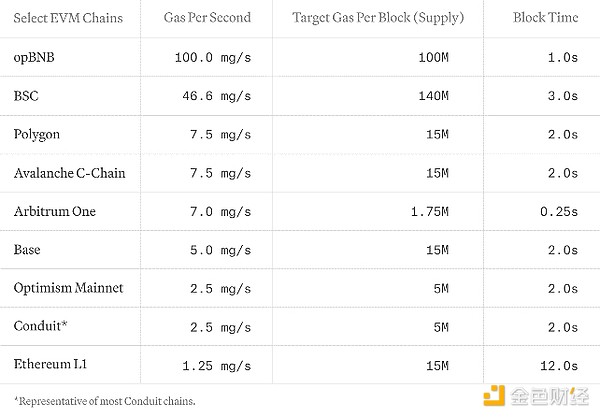

Gas per second is determined by dividing the target gas usage per block by the block time. The table above shows the current gas per second throughput and latency of various EVM chains, both L1 and L2 (not exhaustive).

We emphasize gas per second because it evaluates EVM network performance comprehensively, capturing both computation and storage costs. Networks such as Solana, Sui, or Aptos are not included because of their distinct cost models. We encourage efforts to harmonize cost models across all blockchain networks so that comparisons can be comprehensive and fair.
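The definition above (target gas per block divided by block time) can be written as a one-line calculation. The parameters in the example are assumptions for illustration: 15 million target gas with 12-second blocks roughly matches Ethereum L1 at the time of writing, and the second chain is entirely hypothetical.

```python
def gas_per_second(target_gas_per_block: int, block_time_seconds: float) -> float:
    """Throughput as defined in the text: target gas per block / block time."""
    if block_time_seconds <= 0:
        raise ValueError("block_time_seconds must be positive")
    return target_gas_per_block / block_time_seconds


# Assumed L1-like parameters: 15M target gas, 12 s block time.
l1_like = gas_per_second(15_000_000, 12)
# Hypothetical L2: 30M target gas, 2 s block time.
l2_like = gas_per_second(30_000_000, 2)

print(f"L1-like chain: {l1_like / 1e6:.2f} Mgas/s")
print(f"hypothetical L2: {l2_like / 1e6:.2f} Mgas/s")
```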

We are developing a nonstop benchmarking suite for Reth that replicates real workloads. The bar we want nodes to meet is that of the TPC benchmarks.

2. How does Reth reach 1 gigagas per second, and beyond?

Part of our motivation for creating Reth in 2022 was the urgent need for a client purpose-built for web-scale rollups. We think we have a promising path forward.

Reth already reaches 100-200 megagas per second during live sync (including sender recovery, executing transactions, and computing the trie for each block), so reaching our short-term goal of 1 gigagas per second requires a further 10x improvement.

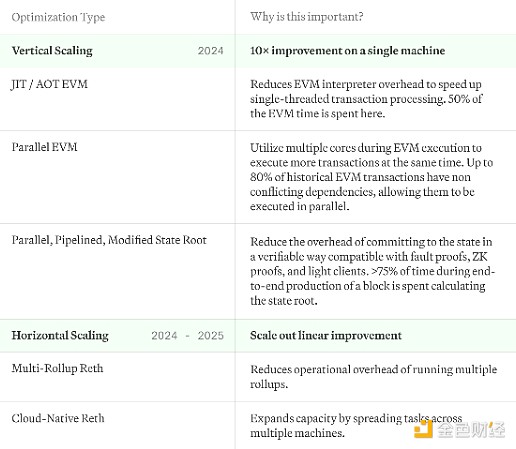

As Reth grows, our scaling plans must find a balance between scalability and efficiency:

Vertical scaling: Our goal is to maximize the use of each "box" to its full potential. By optimizing how each individual system handles transactions and data, we can greatly improve overall performance while also making each node operator more efficient.

Horizontal scaling: Despite optimizations, the sheer volume of transactions at web scale exceeds the processing capacity of any single server. To address this, we are looking into a horizontally scalable architecture, similar to a Kubernetes model for blockchain nodes. This means spreading the workload across multiple systems so that no single node becomes a bottleneck.

The optimizations we explore here will not cover state growth solutions, which we will explore in a separate article. Here is an overview of our plan to achieve this goal:

Throughout the stack, we are also optimizing IO and CPU usage with the actor model, so that each part of the stack can be deployed as a service with fine-grained control over its resource use. Finally, we are actively evaluating alternative databases, but have not yet settled on a candidate.

2.1 Reth's Vertical Scaling Roadmap

Our goal for vertical scaling is to maximize the performance and efficiency of the server or laptop running Reth.

(1) Just-In-Time and Ahead-of-Time EVM

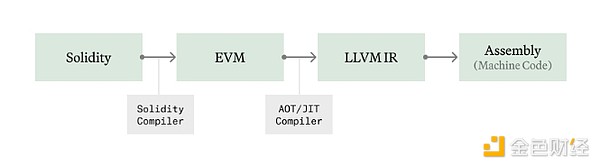

In blockchain environments like the Ethereum Virtual Machine (EVM), bytecode is executed by an interpreter that processes instructions sequentially. This carries overhead, because the program does not run directly as native assembly instructions; every operation goes through the VM layer.

Just-in-Time (JIT) compilation addresses this by converting bytecode to native machine code right before execution, improving performance by bypassing the VM's interpretation step. Compiling programs into optimized machine code is a well-established technique in other virtual machines, such as those for Java and WebAssembly.

However, a JIT can be vulnerable to malicious code designed to exploit the compilation process, or it can be too slow to run in real time during execution. Reth will instead compile the most in-demand contracts ahead of time (AOT) and store them on disk, so that untrusted bytecode cannot abuse our native-code compilation step during live execution.
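The difference between interpreting and compiling ahead of time can be illustrated with a toy example. This is a minimal sketch under loud assumptions: the three-opcode stack language is invented for illustration and is not EVM bytecode, "compiling" here means translating to a Python expression rather than native code, and `AotCache` is a hypothetical name that does not describe Revm's or Reth's actual design.

```python
from typing import Callable, Dict, List, Tuple

# Toy stack bytecode (NOT EVM bytecode): a list of (opcode, operand) pairs.
Program = List[Tuple[str, int]]


def interpret(program: Program) -> int:
    """Interpreter: decodes and dispatches every instruction on every run."""
    stack: List[int] = []
    for op, arg in program:
        if op == "PUSH":
            stack.append(arg)
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()


def compile_program(program: Program) -> Callable[[], int]:
    """Toy compiler: translates the program once into a single expression,
    so later runs skip the decode-and-dispatch loop entirely."""
    exprs: List[str] = []
    for op, arg in program:
        if op == "PUSH":
            exprs.append(str(int(arg)))
        elif op in ("ADD", "MUL"):
            b, a = exprs.pop(), exprs.pop()
            symbol = "+" if op == "ADD" else "*"
            exprs.append(f"({a} {symbol} {b})")
        else:
            raise ValueError(f"unknown opcode: {op}")
    code = compile(exprs.pop(), "<aot>", "eval")
    return lambda: eval(code)


class AotCache:
    """Hypothetical cache: only contracts compiled in advance take the fast
    path; everything else falls back to the interpreter."""

    def __init__(self) -> None:
        self._compiled: Dict[str, Callable[[], int]] = {}

    def precompile(self, code_hash: str, program: Program) -> None:
        self._compiled[code_hash] = compile_program(program)

    def run(self, code_hash: str, program: Program) -> int:
        fn = self._compiled.get(code_hash)
        return fn() if fn is not None else interpret(program)


# (2 + 3) * 4
hot_contract: Program = [("PUSH", 2), ("PUSH", 3), ("ADD", 0), ("PUSH", 4), ("MUL", 0)]
cache = AotCache()
cache.precompile("hot", hot_contract)          # done offline, ahead of time
print(cache.run("hot", hot_contract))          # compiled path
print(cache.run("unknown", hot_contract))      # interpreter fallback
```

Because compilation happens only for contracts chosen in advance, bytecode that arrives at execution time never triggers the compiler; it simply runs through the interpreter.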

We have been developing a JIT/AOT compiler for Revm and are currently integrating it with Reth. We will open source it in the coming weeks, once our benchmarks are complete, and share the results. On average, about 50% of execution time is spent in the EVM interpreter, so this should yield roughly a 2x improvement in EVM execution, and in some more computationally demanding cases the impact may be larger.

(2) Parallel EVM

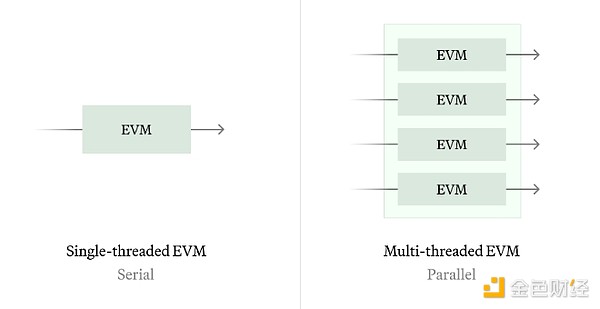

A parallel Ethereum Virtual Machine (parallel EVM) processes multiple transactions simultaneously, in contrast to the traditional serial EVM execution model. We see two paths here:

Historical sync: Because the full history is already known, we can compute the best possible parallel schedule by analyzing past transactions and identifying all historical state conflicts.

Live sync: Here we can use techniques similar to Block-STM to execute speculatively without any additional information (such as access lists). The algorithm performs poorly during periods of heavy state contention, so we want to explore switching between serial and parallel execution based on workload conditions, as well as statically predicting which storage slots will be accessed to improve the quality of parallelism.
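For the historical case, where every transaction's reads and writes are already known, a parallel schedule can be derived from the conflicts alone. The sketch below is one simple way to do that, not Reth's actual scheduler: it places each transaction in the earliest batch that comes after every earlier transaction it conflicts with. The `Access` type and the function names are assumptions for illustration.

```python
from typing import List, Set, Tuple

# (read set, write set) of storage keys touched by one transaction.
Access = Tuple[Set[str], Set[str]]


def conflicts(earlier: Access, later: Access) -> bool:
    """Two transactions conflict if either one writes a key the other touches."""
    reads_a, writes_a = earlier
    reads_b, writes_b = later
    return bool(writes_a & (reads_b | writes_b)) or bool(writes_b & reads_a)


def schedule(txs: List[Access]) -> List[List[int]]:
    """Group transaction indices into batches. Transactions inside a batch are
    mutually independent; batches must run in order to preserve block order."""
    level: List[int] = []
    for i, tx in enumerate(txs):
        lvl = 0
        for j in range(i):
            if conflicts(txs[j], tx):
                lvl = max(lvl, level[j] + 1)
        level.append(lvl)
    batches: List[List[int]] = [[] for _ in range(max(level, default=-1) + 1)]
    for i, lvl in enumerate(level):
        batches[lvl].append(i)
    return batches


block = [
    (set(), {"A"}),   # tx0 writes A
    (set(), {"B"}),   # tx1 writes B
    ({"A"}, {"C"}),   # tx2 reads A, writes C (depends on tx0)
    ({"C"}, {"B"}),   # tx3 reads C, writes B (depends on tx1 and tx2)
]
print(schedule(block))  # [[0, 1], [2], [3]]
```

During live sync the read and write sets are not known in advance, which is why a speculative approach such as Block-STM has to execute first and validate afterwards.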

According to our historical analysis, about 80% of Ethereum storage slots are accessed independently, which means that parallelism could improve EVM execution by up to 5x.
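The 5x figure is consistent with Amdahl's law if one assumes that the share of independently accessed slots translates directly into the share of execution work that can run in parallel. That mapping is an assumption made here for illustration; the function name and the worker counts are also illustrative.

```python
def amdahl_speedup(parallel_fraction: float, workers: float = float("inf")) -> float:
    """Amdahl's law: overall speedup when only part of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Upper bound with unlimited workers and 80% parallelizable work: about 5x.
print(f"{amdahl_speedup(0.8):.2f}x")
# With a finite number of workers the gain is smaller.
print(f"{amdahl_speedup(0.8, workers=8):.2f}x")
```

The same formula with a fraction of 0.5 gives an upper bound of 2x, which is the shape of the estimate made earlier for removing interpreter overhead.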

(3) Optimizing state commitment

In the Reth model, computing the state root is a separate process from executing transactions, which allows the use of standard KV stores that do not need to know anything about the trie. The state root currently takes more than 75% of the end-to-end time to seal a block, which makes it a very exciting area for optimization.

We identified the following two “easy wins” that could improve state root performance by 2-3x without any protocol changes:

Fully parallelized state root: Right now we only recompute the storage tries of changed accounts in parallel, but we could go a step further and compute the account trie in parallel as well, while the storage root jobs complete in the background.

Pipelined state root: Prefetch intermediate trie nodes from disk during execution by notifying the state root service of the storage slots and accounts involved.
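The idea of recomputing storage roots only for changed accounts, in parallel, can be sketched as follows. This is a heavily simplified model and not Reth's implementation: a flat hash over sorted entries stands in for the Merkle Patricia Trie, SHA3-256 from the standard library stands in for Keccak256 (the two are different functions), and all names are hypothetical. Python threads show the structure of the work split rather than a real speedup.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable

Storage = Dict[bytes, bytes]


def toy_hash(*parts: bytes) -> bytes:
    """Length-prefixed hash of the parts (stand-in for trie hashing)."""
    h = hashlib.sha3_256()
    for part in parts:
        h.update(len(part).to_bytes(4, "big"))
        h.update(part)
    return h.digest()


def storage_root(storage: Storage) -> bytes:
    """Toy commitment to one account's storage."""
    return toy_hash(*(toy_hash(k, storage[k]) for k in sorted(storage)))


def update_storage_roots(
    state: Dict[bytes, Storage],
    cached_roots: Dict[bytes, bytes],
    changed: Iterable[bytes],
    max_workers: int = 4,
) -> Dict[bytes, bytes]:
    """Recompute roots only for changed accounts, each as an independent job."""
    changed = list(changed)
    roots = dict(cached_roots)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        new_roots = pool.map(lambda addr: storage_root(state[addr]), changed)
        roots.update(zip(changed, new_roots))
    return roots


def state_root(storage_roots: Dict[bytes, bytes]) -> bytes:
    """Toy account-level commitment over all storage roots."""
    return toy_hash(*(toy_hash(a, storage_roots[a]) for a in sorted(storage_roots)))


state = {b"alice": {b"slot0": b"1"}, b"bob": {b"slot0": b"2"}}
roots = update_storage_roots(state, {}, state.keys())
state[b"alice"][b"slot0"] = b"9"                     # a block changes one account
roots = update_storage_roots(state, roots, [b"alice"])
print(state_root(roots).hex())
```

A pipelined variant would additionally receive the touched accounts and slots while execution is still running, so that the data needed by these jobs is already in memory when the block finishes.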

Beyond this, we see some paths forward that diverge from Ethereum L1 state root behavior:

Less frequent state root computation: Instead of computing the state root on every block, compute it once every T blocks. This reduces the total fraction of time spent on the state root across the system, and is likely the simplest and most effective solution.

Trailing state root: Instead of computing the state root for the same block, let it trail behind by a few blocks. This allows execution to advance without blocking on state root computation.

Replace RLP encoder & Keccak256: It may be cheaper to simply concatenate the bytes and use a faster hash function such as Blake3 than to encode with RLP.

Wider trie: Increase the arity of the trie (the number of children per node) to reduce the IO caused by its log N depth.
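Two of the options above lend themselves to back-of-the-envelope arithmetic. The sketch below rests on simplifying assumptions that are not from the article: the cost of one state root computation is treated as constant regardless of how many blocks it covers, and the trie is modeled as a perfectly balanced tree. The function names and the example numbers are illustrative.

```python
def avg_time_per_block(exec_time: float, root_time: float, every_t_blocks: int) -> float:
    """Average time per block when the state root is computed once every T
    blocks, assuming a constant cost per root computation."""
    if every_t_blocks < 1:
        raise ValueError("every_t_blocks must be at least 1")
    return exec_time + root_time / every_t_blocks


def trie_depth(num_keys: int, arity: int) -> int:
    """Smallest depth d such that arity**d >= num_keys (balanced-tree model)."""
    if arity < 2:
        raise ValueError("arity must be at least 2")
    depth, capacity = 0, 1
    while capacity < num_keys:
        capacity *= arity
        depth += 1
    return depth


# Assume execution takes 1 unit and the state root 3 units (75% of the total).
print(avg_time_per_block(1.0, 3.0, every_t_blocks=1))  # 4.0
print(avg_time_per_block(1.0, 3.0, every_t_blocks=4))  # 1.75

# Depth for a hypothetical one billion keys at different arities.
print(trie_depth(10**9, 16))   # 8
print(trie_depth(10**9, 256))  # 4
```

In practice a root that covers several blocks touches more trie nodes, so the real saving from a larger T would be smaller than this constant-cost model suggests.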

A few questions here:

What are the second-order effects of the above changes on light clients, L2s, bridges, coprocessors, and other protocols that rely on frequent account and storage proofs?

Can we optimize state commitments for both SNARK proofs and native execution speed?

What is the widest state commitment we can get with our current tools? What are the second-order effects on witness size?

2.2 Reth’s Horizontal Scaling Roadmap

We will be executing on many of the items above throughout 2024 to reach our goal of 1 gigagas per second.

However, vertical scaling will eventually run into physical and practical limitations. No single machine can handle the world’s computational needs. We think there are two paths here that will allow us to scale by introducing more boxes as load increases:

(1) Multi-Rollup Reth

Today's L2 stack requires running multiple services to track the chain: an L1 CL, an L1 EL, the L1 -> L2 derivation function (which may be bundled with the L2 EL), and an L2 EL. While this is great for modularity, it becomes complicated when running multiple node stacks. Imagine having to run 100 rollups!

We want rollups to be launchable alongside Reth as it evolves, and to drive the operational cost of running thousands of rollups down to almost zero.

We are already working on this in our Execution Extensions (ExEx) project, and there will be more progress in the coming weeks.

(2) Cloud-native Reth

High-performance sequencers may face so much demand on a single chain that they need to scale beyond what one machine can offer. This is not possible with today's single-node deployments.

We want to support running cloud-native Reth nodes, deployed as a service stack that can automatically scale based on compute needs and use seemingly unlimited cloud object storage for persistent storage. This is a common architecture in serverless database projects such as NeonDB, CockroachDB, or Amazon Aurora.

3. Future prospects

We hope to gradually roll out this roadmap to all Reth users. Our mission is to make 1 gigagas per second and beyond available to everyone. We will be testing optimizations on Reth AlphaNet, and we hope that people will use Reth as an SDK to build optimized high-performance nodes.

There are some questions we don’t have answers to yet.

How does Reth help improve performance across the L2 ecosystem?

How do we properly measure worst-case scenarios for optimizations that perform well on average but may not in the worst case?

How do we handle potential divergences between L1 and L2?

We don’t have answers to many of these questions yet, but we have enough promising initial ideas to keep us busy for a while, and we hope to see these efforts bear fruit in the coming months.
