Author: Eugene Cheah Compiled by: J1N, Techub News

The decline in AI computing power costs will stimulate a wave of innovation among startups using low-cost resources.

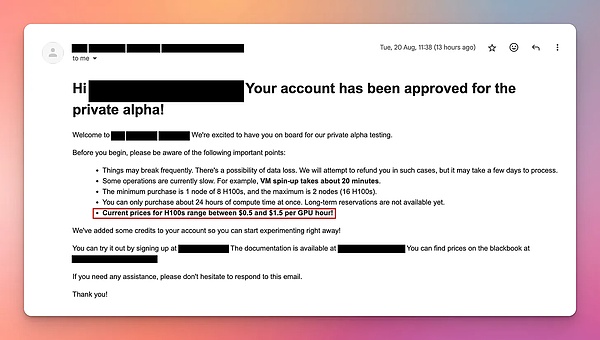

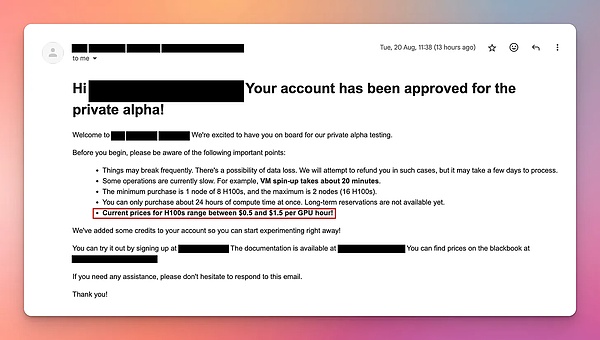

Last year, tight supply of AI computing power pushed the H100 rental price as high as $8 per hour. Now the market is oversupplied and the price has fallen below $2 per hour. Two factors are behind this. First, companies that signed compute rental contracts early began reselling their reserved capacity rather than let it sit idle. Second, the market has largely turned to open source models, reducing demand for training new models. With H100 supply now far exceeding demand, renting an H100 is more cost-effective than buying one, and buying new H100s is no longer a profitable investment.

A Brief History of AI Competition

GPU compute prices soared: the H100 initially rented for about $4.70 per hour and later peaked at more than $8. Founders were racing to train their AI models so they could convince investors and close their next round of financing.

ChatGPT launched in November 2022, built on NVIDIA's A100 series of GPUs. In March 2023, NVIDIA launched the new H100 series and claimed in its marketing that the H100 delivered 3 times the performance of the A100 at only 2 times the price.

This was hugely attractive to AI startups, because GPU performance directly determines how quickly they can develop AI models and how large those models can be. The H100's performance meant these companies could build faster, larger, and more efficient models than before, and might even catch up with or surpass industry leaders like OpenAI. All of this, of course, depended on having enough capital to buy or rent H100s in large numbers.

Because of the H100's large performance gains and the fierce competition in AI, many startups spent heavily to secure H100s and accelerate their model training. This surge in demand sent H100 rental prices soaring, from an initial $4.70 per hour to more than $8.

These startups were willing to pay high rents because they were eager to train models quickly, catch investors' attention in their next round of financing, and raise hundreds of millions of dollars to keep expanding their business.

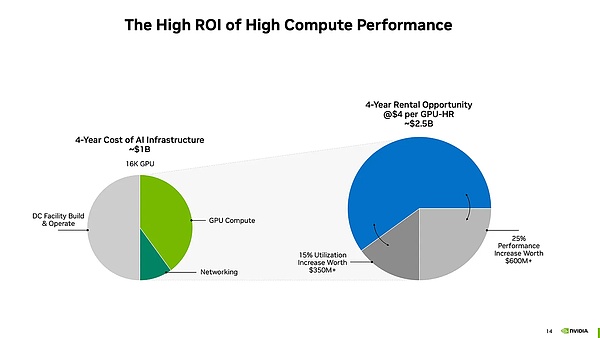

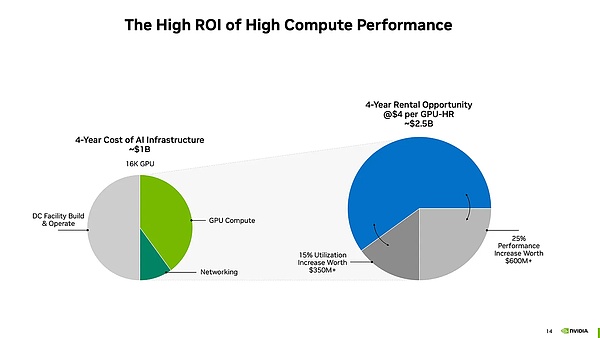

For computing centers ("GPU farms") with large numbers of H100 GPUs, rental demand was so high that it felt like money being delivered to their door. AI startups were eager to rent H100s to train their models and were even willing to pay in advance. As a result, GPU farms could rent out their GPUs at $4.70 per hour (or more) for long periods.

By these calculations, if they could keep renting GPUs at this price, the payback period on an H100 purchase (the time needed to recover the purchase cost) would be less than 1.5 years. After that, each GPU could generate more than $100,000 in net cash flow per year.
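The payback arithmetic can be sketched as follows. This is a minimal sketch, assuming the roughly $50,000 purchase price cited later in this article, a flat hourly rental price, and a hypothetical `utilization` parameter (the share of hours actually rented). It ignores power, cooling, and all other operating costs, so it is not the author's model.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 rentable hours in a non-leap year


def payback_years(purchase_cost: float, price_per_hour: float, utilization: float = 1.0) -> float:
    """Years of rental income needed to recover the purchase cost.

    Assumes a constant hourly price and ignores power, cooling, and other
    operating costs. `utilization` is a hypothetical fraction (0, 1] of
    hours that are actually rented out.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    annual_revenue = price_per_hour * HOURS_PER_YEAR * utilization
    return purchase_cost / annual_revenue


# Fully booked vs. 85% booked at $4.70 per hour (utilization figures are illustrative).
for util in (1.0, 0.85):
    print(f"utilization {util:.0%}: {payback_years(50_000, 4.70, util):.2f} years")
```

Under these assumptions the payback is about 1.21 years when fully booked and about 1.43 years at 85% utilization. Below roughly 81% utilization it stretches past 1.5 years, which shows how much the "less than 1.5 years" figure depends on keeping the GPUs rented.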

As demand for H100s and other high-performance GPUs kept soaring, investors in GPU farms saw huge profit margins. They not only embraced this business model but doubled down, investing even more to buy additional GPUs and increase their profits.

"Tulip Folly": painted after the first speculative bubble in recorded history, in which tulip prices rose steadily from 1634 before collapsing in February 1637

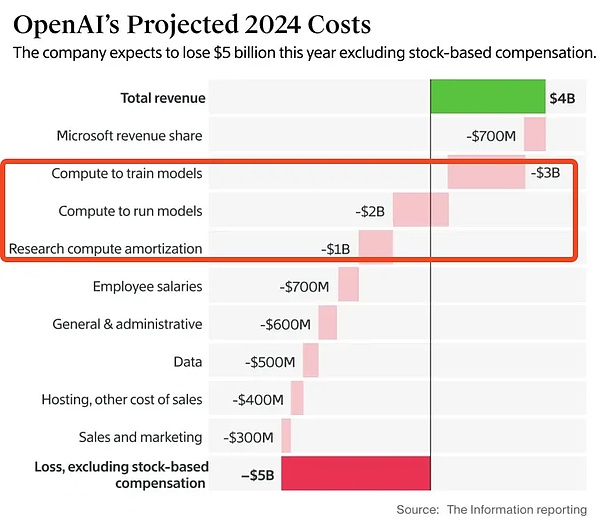

As demand for artificial intelligence and big data processing grew, corporate demand for high-performance GPUs (especially NVIDIA's H100) surged. To support these compute-intensive workloads, companies worldwide made an initial investment of about $600 billion in hardware and infrastructure, buying GPUs and building data centers to expand computing capacity. Because of supply chain delays, H100 rental prices stayed high for most of 2023, often above $4.70 per hour unless buyers were willing to pay a large deposit upfront. By early 2024, as more providers entered the market, the H100 rental price fell to about $2.85 per hour, and I began receiving sales pitch emails, a sign of growing competition as supply increased.

H100 GPUs initially rented for $8 to $16 per hour, but by August 2024, auction-style rental prices had fallen to $1 to $2 per hour. Market prices are expected to keep falling by 40% or more per year, a much steeper decline than NVIDIA's projection of $4 per hour sustained over four years. This rapid decline poses a financial risk to anyone who has just bought new GPUs at high prices, since they may be unable to recoup their costs through rentals.
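To see how quickly a 40% annual decline compounds, here is a minimal sketch. It assumes the decline applies at a constant rate every year, which is a simplification, and uses the roughly $2.85 per hour seen in early 2024 only as an illustrative starting point.

```python
def project_price(start_price: float, annual_decline: float, years: int) -> float:
    """Hourly price after `years` of compounding decline.

    `annual_decline` is a fraction, e.g. 0.40 for a 40% drop per year.
    Assumes the same rate applies every year (a simplification).
    """
    if not 0 <= annual_decline < 1:
        raise ValueError("annual_decline must be in [0, 1)")
    return start_price * (1 - annual_decline) ** years


# Illustrative: start from ~$2.85/hour and apply a 40% yearly decline.
for year in range(4):
    print(year, round(project_price(2.85, 0.40, year), 2))
```

Under this assumption the price falls to about $1.71 after one year and about $1.03 after two, whereas a flat $4 per hour over four years would correspond to `project_price(4.0, 0.0, 4)`.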

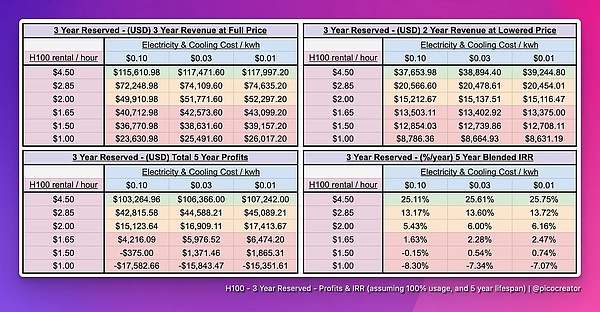

What is the return on capital for investing $50,000 to buy an H100?

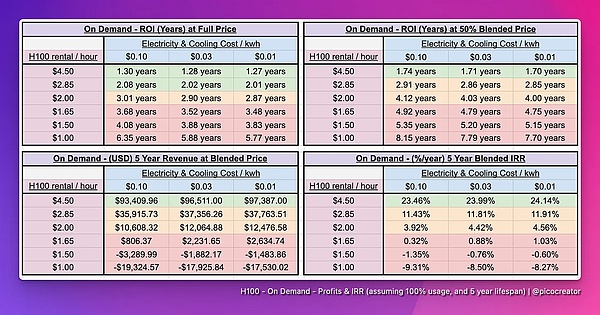

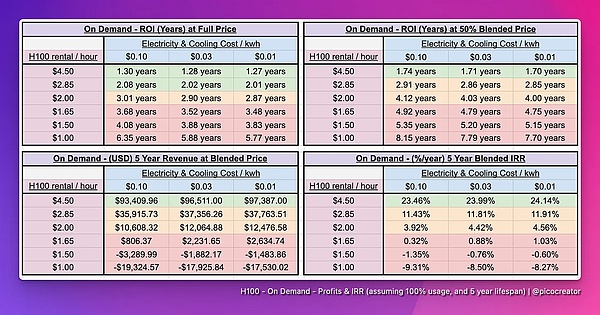

Excluding power and cooling costs, an H100 costs about $50,000 to buy and has an expected service life of 5 years. There are generally two leasing modes: short-term on-demand rental and long-term reservations. Short-term rental is more expensive but more flexible, while long-term reservations are cheaper but more stable. The analysis below examines the returns under each mode to determine whether investors can recover their costs and make a profit within 5 years.

Short-term on-demand rental

Rental price and corresponding return:

Above $2.85: beats the stock market's IRR and turns a profit.

Below $2.85: returns fall short of investing in the stock market.

Below $1.65: the investment is expected to lose money.
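The return bands quoted above can be expressed as a simple lookup. This sketch only encodes the article's thresholds; how the exact boundary values ($1.65 and $2.85) are assigned is an assumption of the sketch, not something the article specifies.

```python
def classify_rental_price(price_per_hour: float) -> str:
    """Map an H100 hourly rental price to the article's short-term return bands.

    Boundary handling is an assumption: exactly $1.65 counts as "below stock
    market return" and exactly $2.85 counts as "at or above stock market IRR".
    """
    if price_per_hour < 1.65:
        return "expected loss"
    if price_per_hour < 2.85:
        return "below stock market return"
    return "at or above stock market IRR"


for price in (1.00, 2.00, 4.50):
    print(f"${price:.2f}/hour: {classify_rental_price(price)}")
```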

Under the "blended price" model, rental prices may fall to 50% of the current price over the next 5 years. If the rental price holds at $4.50 per hour, the internal rate of return (IRR) exceeds 20%, which is profitable; at $2.85 per hour, the IRR is only about 10%, a significant drop. Below $2.85, returns may fall short of stock market returns, and below $1.65 investors face a serious risk of loss, especially those who bought H100 servers recently.

Note: The "blended price" assumes that the H100 rental price declines gradually to half of its current level over the next 5 years. Given that market prices are currently falling by more than 40% per year, this estimate is optimistic, but it is a reasonable assumption for modeling the price decline.
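The IRR logic behind this analysis can be sketched as follows. This is a minimal sketch, not the author's model: it assumes the blended price falls linearly to 50% of the starting price by year 5, and it introduces a hypothetical `utilization` (default 80%) and `opex_per_year` (default 0, matching the "excluding power and cooling" framing). The article does not state its cost inputs, so the printed IRRs will not reproduce its 20% and 10% figures; the sketch only shows how price, decline, and IRR relate.

```python
HOURS_PER_YEAR = 24 * 365


def blended_prices(start_price: float, years: int = 5, end_fraction: float = 0.5) -> list[float]:
    """Hourly price for each year, falling linearly so the last year is end_fraction * start_price."""
    return [start_price * (1 - (1 - end_fraction) * t / years) for t in range(1, years + 1)]


def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] occurs today, cash_flows[t] at the end of year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], lo: float = -0.99, hi: float = 10.0, tol: float = 1e-9) -> float:
    """IRR by bisection; assumes one sign change (an upfront cost followed by inflows)."""
    f_lo = npv(lo, cash_flows)
    if f_lo * npv(hi, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = npv(mid, cash_flows)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid  # root lies in the upper half
        else:
            hi = mid  # root lies in the lower half
    return (lo + hi) / 2


def h100_cash_flows(start_price: float, purchase_cost: float = 50_000, utilization: float = 0.8,
                    opex_per_year: float = 0.0, years: int = 5) -> list[float]:
    """Upfront purchase, then yearly net rental income under the blended-price decline.

    utilization and opex_per_year are hypothetical inputs, not figures from the article.
    """
    flows = [-purchase_cost]
    for price in blended_prices(start_price, years):
        flows.append(price * HOURS_PER_YEAR * utilization - opex_per_year)
    return flows


for price in (4.50, 2.85, 1.65):
    print(f"${price:.2f}/hour -> IRR {irr(h100_cash_flows(price)):.1%}")
```

Bisection is used instead of a library IRR function to keep the sketch dependency-free; it is reliable here because the cash flows have a single sign change, so the IRR is unique.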

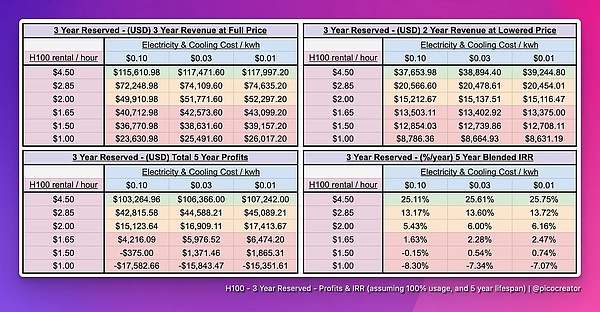

Long-term reservation leases (more than 3 years)

During the AI boom, many established infrastructure providers drew on past experience, particularly the cycles of skyrocketing and plummeting GPU rental prices during the proof-of-work (PoW) era of Ethereum mining. In 2023 they therefore launched high-priced, prepaid 3-5 year rental contracts to lock in profits. These contracts typically required customers to pay more than $4 per hour, or even to prepay 50% to 100% of the rent. With AI demand surging, especially in image generation, foundation model companies signed these contracts despite the high prices, so they could seize the market opportunity, be first to use the latest GPU clusters, finish their target models quickly, and stay competitive. Once model training was complete, however, these companies no longer needed the GPUs, but the contracts locked them in. To cut their losses, they began reselling the leased GPU capacity to recover part of the cost. The result is a large volume of resold GPU capacity on the market, which increases supply and pushes rental prices down.

Current H100 Value Chain

Note: The value chain (also known as value chain analysis or the value chain model) was proposed by Michael Porter in his 1985 book "Competitive Advantage". Porter argued that to build a unique competitive advantage and create more added value for its products and services, a company should structure its business model as a series of value-adding processes; this series of processes is the "value chain".

The H100 value chain ranges from hardware to AI inference, and its participants fall roughly into the following categories:

Capacity distributors: Runpod, SFCompute, Together.ai, Vast.ai, GPUlist.ai, etc.

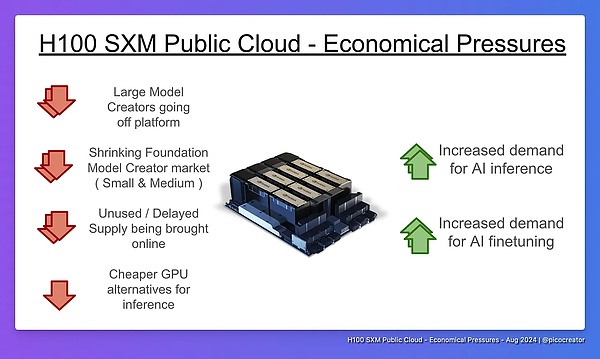

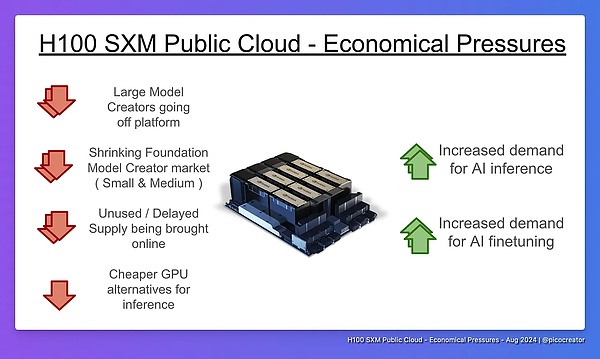

The current H100 value chain spans hardware suppliers, data center providers, AI model developers, capacity distributors, and AI inference service providers. Market pressure comes mainly from two sources: capacity distributors continually reselling or renting out unused H100 capacity, and the widespread adoption of "good enough" open source models (such as Llama 3), which has reduced demand for H100s. Together, these factors have created an H100 oversupply that is pushing market prices down.

Market Trend: The Rise of Open Source Weight Models

Open source weight models (open-weight models) are models whose weights are distributed publicly and free of charge, even though they may lack a formal open source license, and which are widely used commercially.

Demand for these models is driven by two main factors: the emergence of large open source models comparable to GPT-4 (such as Llama 3 and DeepSeek-V2), and the maturity and widespread adoption of small (8-billion-parameter) and medium (70-billion-parameter) fine-tuned models.

As these open source models mature, companies can easily obtain and use them for most AI applications, especially inference and fine-tuning. Although they may trail proprietary models slightly on some benchmarks, they are good enough for most commercial use cases. As a result, the popularity of open source weight models is driving rapid growth in demand for inference and fine-tuning.

Open source weight models also have three key advantages:

First, open source models are highly flexible: users can fine-tune them for specific domains or tasks to better fit different applications. Second, they are reliable: unlike some proprietary models, their weights are not updated without notice, which avoids development problems caused by silent updates and increases users' trust. Finally, they provide security and privacy: enterprises can make sure their prompts and customer data are not exposed through third-party API endpoints, reducing data privacy risk. These advantages have driven the continued growth and widespread adoption of open source models, especially for inference and fine-tuning.

Demand shift of small and medium-sized model creators

Small and medium-sized model creators are companies or startups that lack the capacity or the plans to train large base models (such as 70B-parameter models) from scratch. With the rise of open source models, many of them have realized that fine-tuning an existing open source model is more cost-effective than training a new one from scratch, so more and more choose fine-tuning. This greatly reduces their demand for computing resources such as the H100.

Fine-tuning is much cheaper than training from scratch because it requires far fewer computing resources. Training a large base model typically requires 16 or more H100 nodes, while fine-tuning typically needs only 1 to 4. This industry shift removes the need for large clusters at small and medium-sized companies and directly reduces their reliance on H100 computing power.
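The difference in cluster size can be made concrete with a small calculation. This sketch assumes 8 GPUs per H100 node (the standard HGX/DGX H100 configuration) and a hypothetical flat price of $2.00 per GPU-hour; it compares hourly bills only and does not model how long each job runs.

```python
GPUS_PER_NODE = 8  # assumption: a standard 8-GPU H100 server node


def cluster_gpu_count(nodes: int) -> int:
    """Total GPUs in a cluster of `nodes` identical nodes."""
    return nodes * GPUS_PER_NODE


def cluster_cost_per_hour(nodes: int, price_per_gpu_hour: float) -> float:
    """Hourly rental bill, assuming every GPU is billed at the same rate."""
    return cluster_gpu_count(nodes) * price_per_gpu_hour


PRICE = 2.00  # hypothetical $/GPU-hour
for label, nodes in (("base model training (min.)", 16),
                     ("fine-tuning (max.)", 4),
                     ("fine-tuning (min.)", 1)):
    print(f"{label}: {cluster_gpu_count(nodes)} GPUs, ${cluster_cost_per_hour(nodes, PRICE):,.2f}/hour")
```

Even at the top of the fine-tuning range, the cluster is a quarter the size of the smallest training cluster cited, so H100 demand per hour of runtime drops by at least 4x.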

In addition, investment in creating new base models has fallen. In 2023, many small and medium-sized companies tried to build new base models; today there are almost no such projects unless they bring real innovation (such as a better architecture or support for hundreds of languages). Because powerful open source models such as Llama 3 are already available, small companies find it hard to justify building new ones. Investor interest and funding have also shifted toward fine-tuning rather than training from scratch, further reducing the need for H100s.

Finally, there is excess capacity from reserved nodes. Many companies made long-term H100 reservations during the 2023 peak, but after shifting to fine-tuning they found they no longer needed these nodes, and some hardware was already outdated by the time it arrived. These unused H100 nodes are now being resold or rented out, further increasing market supply and deepening the H100 oversupply.

Overall, the popularity of fine-tuning, the decline in new small and medium-sized base models, and the surplus of reserved nodes have significantly reduced H100 demand and intensified the oversupply.

Other factors leading to increased GPU computing power supply and reduced demand

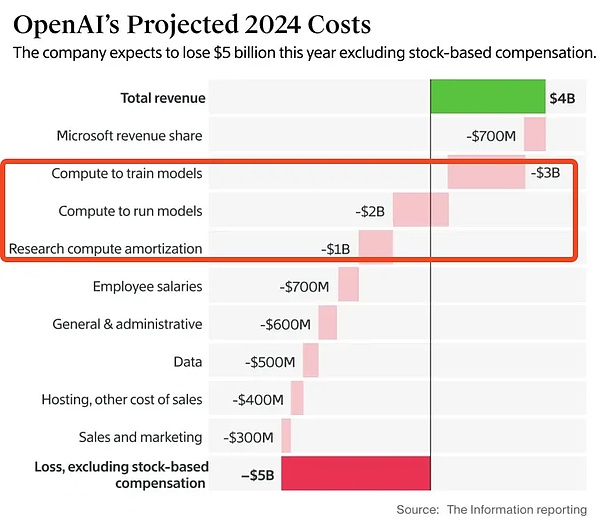

Large model creators move away from public cloud platforms

Large AI model creators such as Facebook, X.AI, and OpenAI are gradually moving from public cloud platforms to self-built private computing clusters, for several reasons. First, existing public cloud resources (such as 1,000-node clusters) can no longer meet their needs for training ever-larger models. Second, owning a cluster is financially more attractive: data centers and servers are assets that can raise a company's valuation, while public cloud rental is purely an expense that adds no assets. Third, these companies have ample resources and specialized teams, and can even acquire small data center companies to help build and run these systems, so they no longer depend on the public cloud. As they leave public cloud platforms, market demand for rented compute falls, and unused capacity may re-enter the market and add to supply.

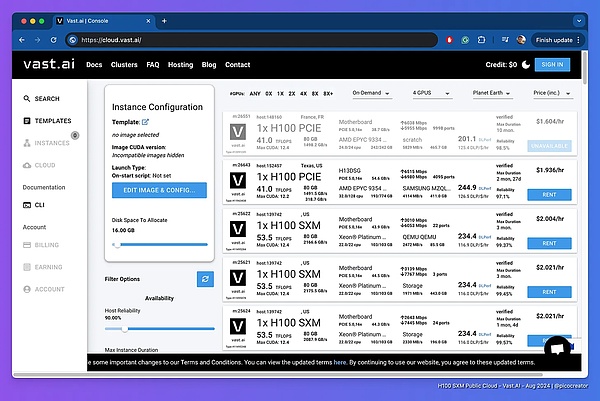

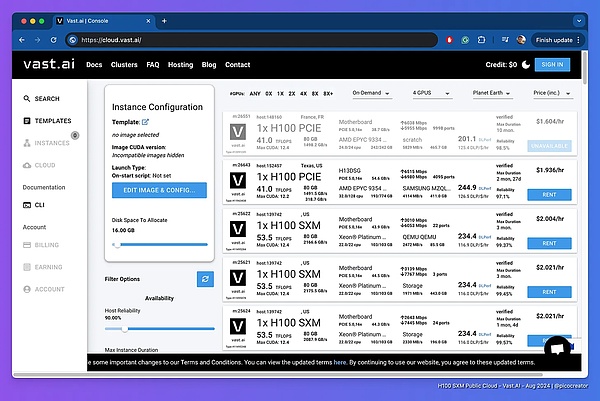

Vast.ai is essentially a free market system where suppliers from all over the world compete with each other

Idle and delayed H100s come online at the same time

With idle and previously delayed H100 GPUs coming online at the same time, market supply is rising and prices are falling. Platforms such as Vast.ai use a free-market model in which suppliers around the world compete on price. In 2023, many H100s could not come online in time because of shipping delays; those delayed units are now entering the market alongside new H200 and B200 devices and idle capacity from startups and enterprises. Owners of small and medium-sized clusters, typically 8 to 64 nodes, face low utilization and dwindling cash, so their goal is to recoup costs as quickly as possible by renting out capacity cheaply. To win customers they compete through fixed rates, auctions, or free-market pricing. The auction and free-market models in particular push suppliers to undercut one another to keep their hardware rented, which ultimately drives prices down across the market.

Cheaper GPU alternatives

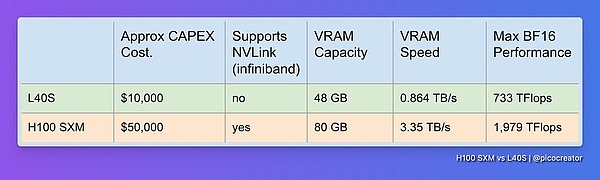

Another major factor is that once compute costs exceed the budget, there are many alternatives for AI inference infrastructure, especially for running smaller models. There is no need to pay extra for the H100's Infiniband networking.

Nvidia market segment

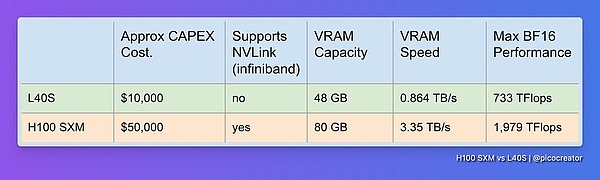

The emergence of cheaper alternatives to H100 GPUs for AI inference directly affects demand for the H100. While the H100 excels at training and fine-tuning AI models, many cheaper GPUs can handle inference (i.e., running models), especially for smaller models. Because inference does not require the H100's high-end features (such as Infiniband networking), users can choose more economical alternatives and cut costs.

Nvidia itself also offers alternatives in the inference market, such as the L40S, a GPU dedicated to inference that has about one-third the performance of the H100 but only one-fifth the price. Although the L40S is not as effective as the H100 for multi-node training, it is powerful enough for single-node inference and fine-tuning of small clusters, which provides users with a more cost-effective option.
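The L40S trade-off amounts to a performance-per-dollar comparison. This sketch uses only the approximate ratios quoted above (one-third the performance at one-fifth the price, with the H100 normalized to 1) and applies only to workloads that fit on a single node, where the L40S is a viable substitute.

```python
from fractions import Fraction

# Approximate relative figures quoted above, with the H100 normalized to 1.
h100_perf, h100_price = Fraction(1), Fraction(1)
l40s_perf, l40s_price = Fraction(1, 3), Fraction(1, 5)


def perf_per_dollar(perf: Fraction, price: Fraction) -> Fraction:
    """Relative performance delivered per unit of relative price."""
    return perf / price


advantage = perf_per_dollar(l40s_perf, l40s_price) / perf_per_dollar(h100_perf, h100_price)
print(advantage, float(advantage))
```

This prints `5/3 1.6666666666666667`: on these figures the L40S delivers roughly 1.67 times the performance per dollar of an H100, which is why it is attractive for single-node inference even though it is weaker for multi-node training.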

H100 Infiniband Cluster Performance Configuration Table (August 2024)

AMD and Intel Alternative Suppliers

In addition, AMD and Intel have launched lower-priced GPUs, such as AMD's MI300X and Intel's Gaudi 3. These perform well on inference and single-node tasks, cost less than the H100, and offer more memory and compute. Although they have not yet been fully proven in large multi-node training clusters, they are mature enough for inference to be strong alternatives to the H100.

These cheaper GPUs have proven able to handle most inference workloads, particularly inference and fine-tuning on common model architectures such as Llama 3. Once compatibility issues are resolved, users can switch to them to cut costs. In short, these alternatives are gradually displacing the H100 in inference, especially in small-scale inference and fine-tuning, further reducing demand for the H100.

Decline in GPU usage in the Web3 field

Because of changes in the cryptocurrency market, GPU use in crypto mining has declined, and large numbers of these GPUs have flowed into the cloud market. Their hardware limitations make them unsuitable for complex AI training, but they perform well on simpler AI inference tasks. For budget-constrained users running smaller models (for example, those with fewer than 10B parameters), they are a very cost-effective choice. With optimization, these GPUs can even run large models at a lower cost than H100 nodes.

After the AI computing power rental bubble, how is the market now?

The problem for new entrants: New public cloud H100 clusters have arrived late to the market and may not be profitable, and some investors may suffer heavy losses.

H100 public cloud clusters entering the market now face a profitability squeeze. If they price too low (below $2.25 per hour), revenue may not cover operating costs, leading to losses; if they price too high ($3 or more), they may lose customers and leave capacity idle. In addition, late entrants missed the early high prices (around $4 per hour), making it hard to recover their costs, so investors face the risk of never turning a profit. This makes cluster investment very difficult and could cause investors significant losses.

Early entrants' earnings: Medium or large model creators who signed long-term rental contracts early on have recovered their costs and achieved profitability

Medium and large model creators have gained value from long-term H100 leases whose cost was already covered when they raised funding. Even though some of that compute is not fully utilized, these companies have extracted value from it in the financing market and use the clusters for current and future model training. Any unused capacity can be resold or rented out for additional income; this lowers market prices, but it reduces their losses and has an overall positive impact on the ecosystem.

After the bubble bursts: The low-priced H100 can accelerate the adoption of open source AI

The emergence of low-priced H100 GPUs will promote the development of open source AI. As the price of H100 drops, AI developers and hobbyists can run and fine-tune open source weight models more cheaply, making these models more widely adopted. If closed source models (such as GPT5++) do not achieve major technical breakthroughs in the future, the gap between open source models and closed source models will narrow, driving the development of AI applications. As the cost of AI inference and fine-tuning decreases, it may trigger a new wave of AI applications and accelerate the overall progress of the market.

Conclusion: Don't buy a brand new H100

If you invest in a brand new H100 GPU now, you will probably lose money. Such an investment makes sense only in special circumstances, for example if the project can buy H100s at a discount, has access to cheap electricity, or has an AI product that is sufficiently competitive in the market. If you are considering investing, you are likely to get a better return by putting the funds into other fields or the stock market.

JinseFinance
