OpenAI releases the o1 large model, reinforcement learning breaks through the LLM reasoning limit

The technological development in the field of large models has started again from "1" today.

JinseFinance

Author: Zeke, YBB Capital; Translation: 0xjs@金财经

On February 16, OpenAI announced "Sora", its latest text-to-video diffusion model. Its ability to generate high-quality videos across a variety of visual data types marks another milestone in generative AI. Unlike AI video generation tools such as Pika, which generate a few seconds of video from multiple images, Sora is trained in the compressed latent space of videos and images, decomposing them into spatiotemporal patches to generate scalable videos. The model also demonstrates the ability to simulate both the physical and digital worlds, with its 60-second demonstration described as a "universal simulator for the physical world."

Sora continues the "source data-Transformer-Diffusion-emergence" technology path of the earlier GPT models, which indicates that its maturity also depends on computing power. Because video training requires far more data than text, its demand for computing power is expected to rise further. We already discussed the importance of computing power in the AI era in our previous article, "Potential Industry Outlook: Decentralized Computing Power Market". As AI has grown more popular, many computing power projects have emerged, and DePIN projects (storage, computing power, etc.) have benefited, with their value surging. Beyond DePIN, this article aims to update and extend those past discussions, considering the sparks that the intersection of Web3 and AI might produce and the opportunities in this track in the AI era.

AI is an emerging science and technology designed to simulate, extend and enhance human intelligence. Since its birth in the 1950s and 1960s, AI has developed for more than half a century and has now become a key technology driving changes in social life and various industries. In this process, the intertwined development of the three major research directions of symbolism, connectionism, and behaviorism has laid the foundation for the rapid development of artificial intelligence today.

Symbolism, also known as logicism or rule-based reasoning, believes that it is feasible to simulate human intelligence through the processing of symbols. This approach uses symbols to represent and manipulate objects, concepts, and their relationships within the problem domain, and employs logical reasoning to solve problems. Symbolism has been a huge success, especially in expert systems and knowledge representation. The core idea of symbolism is that intelligent behavior can be achieved through the manipulation and logical reasoning of symbols, where symbols represent high-level abstractions of the real world.

Connectionism, also known as the neural network approach, aims to achieve intelligence by imitating the structure and function of the human brain. The method builds networks of many simple processing units (similar to neurons) and adjusts the strength of the connections between these units (similar to synapses) to facilitate learning. Connectionism emphasizes the ability to learn and generalize from data, making it particularly suitable for pattern recognition, classification, and continuous input-output mapping problems. As an evolution of connectionism, deep learning has made breakthroughs in fields such as image recognition, speech recognition, and natural language processing.

Behaviorism is closely related to the study of bionic robots and autonomous intelligent systems, emphasizing that intelligent agents can learn through interaction with the environment. Unlike the first two, behaviorism does not focus on simulating internal representations or thought processes, but rather achieves adaptive behavior through cycles of perception and action. Behaviorism believes that intelligence is manifested through dynamic interaction with the environment and learning, which makes it particularly effective for mobile robots and adaptive control systems operating in complex and unpredictable environments.

Although these three research directions have fundamental differences, they can interact and integrate with each other in the actual research and application of AI, and jointly promote the development of the field of artificial intelligence.

The explosively growing field of AIGC represents the evolution and application of connectionism, generating novel content by imitating human creativity. These models are trained using large data sets and deep learning algorithms to learn the underlying structure, relationships, and patterns in the data. Based on user prompts, they generate unique output including images, videos, code, music, designs, translations, answers to questions, and text. At present, AIGC basically consists of three elements: deep learning, big data and massive computing power.

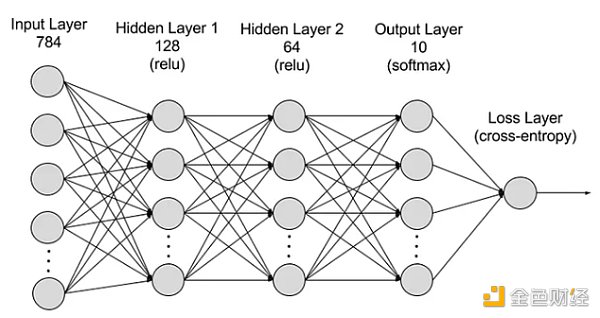

Deep learning is a subfield of machine learning that uses algorithms that mimic the neural networks of the human brain. The human brain is made up of billions of interconnected neurons that work together to learn and process information. Likewise, deep learning neural networks (or artificial neural networks) consist of multiple layers of artificial neurons working together within a computer. These artificial neurons, called nodes, use mathematical calculations to process data. Artificial neural networks use these nodes to solve complex problems through deep learning algorithms.

A neural network is divided into layers: an input layer, hidden layers, and an output layer, with parameters connecting the different layers.

Input layer: The first layer of the neural network is responsible for receiving external input data. Each neuron in the input layer corresponds to a feature of the input data. For example, when processing image data, each neuron might correspond to a pixel value in the image.

Hidden layer: The input layer processes the data and passes it further into the network, to the hidden layers. These hidden layers process information at different levels, adjusting their behavior as they receive new information. Deep learning networks can have hundreds of hidden layers and can analyze a problem from multiple perspectives. For example, when presented with an image of an unknown animal that needs to be classified, you can compare it to animals you already know by examining ear shape, number of legs, pupil size, etc. Hidden layers in deep neural networks work in a similar way. If a deep learning algorithm is trying to classify an image of an animal, each hidden layer will process a different feature of the animal and try to classify it accurately.

Output layer: The last layer of the neural network, responsible for generating the output of the network. Each neuron in the output layer represents a possible output category or value. For example, in a classification problem, each output layer neuron may correspond to a category, while in a regression problem, the output layer may have only one neuron whose value represents the prediction result.

Parameters: In neural networks, the connections between different layers are represented by weights and biases, which are optimized during training so that the network can accurately identify patterns in the data and make predictions. Increasing the number of parameters can enhance the neural network's model capacity, that is, its ability to learn and represent complex patterns in data. However, this also increases the demand for computing power.
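To make the layer and parameter descriptions above concrete, here is a minimal sketch of a forward pass through a tiny fully connected network, written in plain Python. The layer sizes (4 inputs, two hidden layers of 8 neurons, 3 output classes), the ReLU and softmax activations, and the function names are all illustrative assumptions, not something the article specifies.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Create one fully connected layer: an n_out x n_in weight matrix plus n_out biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(layers, x):
    """Pass input x through every layer: ReLU on hidden layers, softmax on the output layer."""
    for i, (weights, biases) in enumerate(layers):
        # Weighted sum of the previous layer's outputs plus a bias, per neuron.
        z = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i == len(layers) - 1:
            # Output layer: softmax turns raw scores into class probabilities.
            m = max(z)
            exps = [math.exp(v - m) for v in z]
            total = sum(exps)
            x = [e / total for e in exps]
        else:
            # Hidden layer: ReLU activation.
            x = [max(0.0, v) for v in z]
    return x

def count_parameters(layers):
    """Total number of weights and biases across all layers."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)

rng = random.Random(0)
sizes = [4, 8, 8, 3]  # hypothetical: 4 input features, two hidden layers of 8, 3 classes
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
probs = forward(layers, [0.2, 0.7, 0.1, 0.9])
print(count_parameters(layers), probs)
```

Even this toy network has 139 parameters (40 + 72 + 27). Widening or deepening the layers makes that count grow quickly, which is the link between model capacity and computing power described above.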

For effective training, neural networks usually require large amounts of diverse, high-quality, multi-source data. This data forms the basis for training and validating machine learning models. By analyzing big data, machine learning models can learn patterns and relationships in the data to make predictions or classifications.

The complex multi-layer structure of neural networks, their numerous parameters, their big-data processing requirements, their iterative training methods (the model must be iterated repeatedly during training, with forward and backward passes through each layer that include activation function calculation, loss function calculation, gradient calculation and weight update), their high-precision calculation requirements, the need for parallel computing, optimization and regularization techniques, and model evaluation and validation processes together create the demand for high computing power.
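The iterative cycle described above (forward pass, loss calculation, gradient calculation, weight update) can be sketched on the smallest possible model: a single linear neuron fitted by gradient descent. This is an illustrative assumption for brevity. It has no activation function and only two parameters, and the data, learning rate and epoch count are made up for the example.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by repeating forward pass, loss, gradient and update steps."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss = float("inf")
    for _ in range(epochs):
        # Forward pass: compute predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # Loss calculation: mean squared error between predictions and targets.
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        # Gradient calculation: derivatives of the loss with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Weight update: step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, loss

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # toy targets generated from y = 2x + 1
w, b, loss = train_linear_neuron(xs, ys)
print(w, b, loss)
```

Each epoch touches every data point and every parameter. With billions of parameters and far larger data sets, the same loop is what drives the computing power requirements discussed here.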

As OpenAI’s latest video generation AI model, Sora represents a major advancement in artificial intelligence’s ability to process and understand diverse visual data. By employing video compression network and spatio-temporal patching technology, Sora can convert massive amounts of visual data captured by different devices around the world into a unified representation, enabling efficient processing and understanding of complex visual content. Utilizing the text conditional diffusion model, Sora can generate videos or images that closely match text prompts, demonstrating a high degree of creativity and adaptability.
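As a rough illustration of the spatio-temporal patching idea, the sketch below cuts a small video volume into non-overlapping blocks that span several frames and a small spatial window, then flattens each block into one token-like vector. This is not OpenAI's implementation. Sora is described as patching a compressed latent representation rather than raw pixels, and the patch sizes, toy video and function name here are hypothetical.

```python
def spacetime_patches(video, pt, ph, pw):
    """Split a video indexed as [frame][row][col] into flattened spatio-temporal patches.

    pt, ph, pw are the patch extents in time, height and width (hypothetical values).
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                # Flatten one pt x ph x pw block into a single vector.
                patch = [video[t][y][x]
                         for t in range(t0, t0 + pt)
                         for y in range(y0, y0 + ph)
                         for x in range(x0, x0 + pw)]
                patches.append(patch)
    return patches

# Toy "video": 4 frames of 4 x 6 values, where each value encodes its own position.
video = [[[t * 100 + y * 10 + x for x in range(6)] for y in range(4)] for t in range(4)]
patches = spacetime_patches(video, pt=2, ph=2, pw=3)
print(len(patches), len(patches[0]))
```

Because any clip whose dimensions divide evenly becomes a sequence of same-sized patches, videos of different durations and resolutions can be fed to the same model as sequences of different lengths.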

However, despite Sora's breakthroughs in video generation and in simulating real-world interactions, it still faces some limitations, including the accuracy of its physical world simulations, the consistency of long generated videos, its ability to understand complex text instructions, and its training and generation efficiency. In essence, Sora uses OpenAI's monopoly-level computing power and first-mover advantage to continue the old technology path of "big data-Transformer-Diffusion-emergence" and achieve a brute-force aesthetic. Other AI companies still have the potential to overtake it through technological innovation.

Although Sora has little to do with blockchain, I believe that in the next one or two years, due to Sora's influence, other high-quality AI generation tools will emerge and develop rapidly, impacting various Web3 fields such as GameFi, social platforms, creative platforms, and DePIN. Therefore, it is necessary to have a general understanding of Sora. How to effectively combine AI with Web3 in the future is a key consideration.

As discussed earlier, the basic elements required for generative AI are essentially threefold: algorithms, data and computing power. On the other hand, given its ubiquity and output effects, AI is a tool that will revolutionize production methods. At the same time, the biggest impact of blockchain is twofold: reorganization of production relations and decentralization.

Therefore, I think the collision of these two technologies can produce the following four paths:

As mentioned above, this section aims to update the state of the computing power landscape. When it comes to AI, computing power is an indispensable aspect. The emergence of Sora has brought to the fore AI's previously unimaginable demand for computing power. Recently, during the 2024 World Economic Forum in Davos, Switzerland, OpenAI CEO Sam Altman publicly stated that computing power and energy are currently the biggest constraints, suggesting that their future importance may even equal that of currency. Subsequently, on February 10, Sam Altman announced a shocking plan on Twitter to raise US$7 trillion (equivalent to 40% of China's GDP in 2023) to completely reshape the current global semiconductor industry and build a semiconductor empire. My previous thinking about computing power was limited to national blockades and corporate monopolies; the idea of one company wanting to dominate the global semiconductor industry is indeed crazy.

Therefore, the importance of decentralized computing power is self-evident. The properties of blockchain can indeed address the current extreme monopolization of computing power and the prohibitive cost of acquiring dedicated GPUs. From the perspective of AI requirements, the use of computing power can be divided into two directions: inference and training. There are still very few projects focusing on training, because a decentralized network would have to be designed together with the neural network and has extremely high hardware requirements. It is a direction with a high threshold that is difficult to implement. In comparison, inference is relatively simple, because the decentralized network design is less complex and has lower hardware and bandwidth requirements, which makes it the more mainstream direction.

The decentralized computing power market leaves a great deal of room for imagination and is often associated with the keyword "trillion level". It is also the most hyped topic in the AI era. However, looking at the many projects that have emerged recently, most appear to be ill-conceived attempts to capitalize on trends. They often fly the flag of decentralization but avoid discussing the inefficiencies of decentralized networks. In addition, the designs are highly homogeneous, and many projects are very similar (one-click L2 plus mining design), which may eventually lead to failure and make it difficult to gain a foothold in the traditional AI race.

Machine learning algorithms are those that can learn patterns and rules from data and make predictions or decisions based on them. Algorithms are technology-intensive because their design and optimization require deep expertise and technological innovation. Algorithms are at the core of training artificial intelligence models and define how data is transformed into useful insights or decisions. Common generative AI algorithms include Generative Adversarial Networks (GAN), Variational Autoencoders (VAE), and Transformers. Each is designed for a specific field (such as painting, language recognition, translation, or video generation) or purpose, and is then used to train specialized AI models.

So, with so many algorithms and models, each with its own merits, is it possible to integrate them into a universal model? Bittensor is a project that has received much attention recently, leading this direction by motivating different AI models and algorithms to collaborate and learn from each other to create more efficient and capable AI models. Other projects focusing on this direction include Commune AI (code collaboration), but algorithms and models are strictly confidential for AI companies and are not easily shared.

The narrative of the AI collaborative ecosystem is therefore novel and interesting. Collaborative ecosystems use the advantages of blockchain to overcome the drawbacks of isolated AI algorithms, but whether they can create corresponding value remains to be seen. After all, leading AI companies with independent algorithms and models have strong update, iteration and integration capabilities. For example, OpenAI went from an early text generation model to a multi-domain generative model in less than two years. Projects like Bittensor may need to explore new paths in the areas their models and algorithms target.

From a simple perspective, using private data to feed AI and to annotate data is a direction that fits blockchain technology very well. The main consideration is how to prevent spam and malicious behavior. Additionally, data storage can benefit DePIN projects such as FIL and AR. From a more sophisticated perspective, using blockchain data for machine learning to address the accessibility of blockchain data is another interesting direction (one of Giza's explorations).

Theoretically, blockchain data is accessible at any time and reflects the status of the entire blockchain. However, accessing this vast amount of data is not straightforward for those outside the blockchain ecosystem. Storing the entire blockchain requires extensive expertise and large amounts of specialized hardware resources. To overcome the challenges of accessing blockchain data, several solutions have emerged within the industry. For example, RPC providers play a crucial role in solving this problem by providing node access via APIs, and indexing services enable data retrieval via SQL and GraphQL. However, these methods have their limitations. RPC services are not suitable for high-density use cases that require large amounts of data queries, and often cannot meet demand. At the same time, although indexing services provide a more structured way of retrieving data, the complexity of the Web3 protocol makes it extremely difficult to construct efficient queries, sometimes requiring hundreds or even thousands of lines of complex code. This complexity is a significant hurdle for general data practitioners and those with limited knowledge of the details of Web3. The cumulative effect of these limitations highlights the need for a more accessible and exploitable method of obtaining and utilizing blockchain data, which could facilitate broader adoption and innovation in the field.
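To show why RPC access scales poorly for bulk data work, the sketch below builds the request bodies a client would send to an RPC provider to read a range of blocks. The article does not name a chain. Ethereum's JSON-RPC method `eth_getBlockByNumber` is assumed here as a representative example, the helper names are hypothetical, and no network call is made.

```python
import json

def build_block_request(block_number, request_id=1, full_transactions=False):
    """Build one JSON-RPC 2.0 request asking a node for a single block.

    Assumes an Ethereum-style endpoint; block numbers are hex-encoded quantities.
    """
    return {
        "jsonrpc": "2.0",
        "method": "eth_getBlockByNumber",
        "params": [hex(block_number), full_transactions],
        "id": request_id,
    }

def build_range_requests(start, end):
    """Build one request per block in [start, end]; this is the bulk-query pain point."""
    return [
        build_block_request(number, request_id=i)
        for i, number in enumerate(range(start, end + 1), start=1)
    ]

# Reading just five blocks already takes five request objects.
batch = build_range_requests(19_000_000, 19_000_004)
body = json.dumps(batch)  # what would be POSTed to a provider's endpoint
print(len(batch), batch[0]["params"])
```

Under this one-request-per-block pattern, a data set covering millions of blocks means millions of calls against a provider's rate limits, before any decoding of protocol-specific contract data has even begun.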

Therefore, combining ZKML (zero-knowledge machine learning, which eases the burden of on-chain machine learning) with high-quality blockchain data may create data sets that address the accessibility of blockchain data. AI can significantly lower the barriers to accessing blockchain data. Over time, developers, researchers, and machine learning enthusiasts will have access to more high-quality, relevant data sets to build effective and innovative solutions.

Since ChatGPT's breakout in 2023, AI-empowered Dapps have become a very common direction. Broadly applicable generative AI can be integrated via APIs to simplify data platforms, trading bots, blockchain encyclopedias and other applications and make them more intelligent. It can also act as a chatbot (like Myshell) or an AI companion (Sleepless AI), or even be used to create NPCs in blockchain games. However, because the technical threshold is low, most of these are just minor adjustments after integrating an API, and the integration with the project itself is far from perfect, so they are rarely mentioned.

But with the arrival of Sora, I personally believe that AI's empowerment of GameFi (including the Metaverse) and creative platforms will be the focus in the future. Given the bottom-up nature of the Web3 space, it has been unlikely to produce a product that can compete with traditional gaming or creative companies. However, the emergence of Sora may break this deadlock (perhaps in just two to three years). Judging from Sora's demo, it has the potential to compete with short-form drama companies. Web3's active community culture can also spawn a large number of interesting ideas. When the only limit is imagination, the barriers between bottom-up industries and top-down traditional industries will be broken down.

With the continuous development of generative artificial intelligence tools, we will witness more breakthrough "iPhone moments" in the future. Although there is skepticism about the integration of AI and Web3, I believe that the current direction is basically the right one and only needs to address three major pain points: necessity, efficiency, and fit. Although the integration of the two is still in the exploratory stage, it does not prevent this path from becoming the mainstream of the next bull market.

Maintaining enough curiosity and an open attitude towards new things is our basic mentality. Historically, the transition from horse-drawn carriages to cars happened almost in an instant, and inscriptions and earlier NFTs show the same pattern. Holding too many biases will only lead to missed opportunities.
