Author: Vitalik, founder of Ethereum; Translator: Deng Tong, Golden Finance

Note: This article is "Possible futures of the Ethereum protocol, part 3: The Scourge", the third part of a series recently published by Vitalik, founder of Ethereum. For the second part, see Golden Finance's "Vitalik: How should the Ethereum protocol develop during The Surge"; for the first part, see "What other improvements can be made to Ethereum PoS". Here is the full text of Part 3:

Special thanks to Justin Drake, Caspar Schwarz-Schilling, Phil Daian, Dan Robinson, Charlie Noyes, and Max Resnick for their feedback and review, and to the ethstakers community for discussions.

One of the biggest risks facing Ethereum L1 is proof-of-stake centralization due to economic pressures. If there are economies of scale in participating in core proof-of-stake mechanisms, this naturally leads to large stakers dominating and small stakers dropping out to join large pools. This increases the risk of 51% attacks, transaction censorship, and other crises. In addition to the centralization risk, there is also the risk of value extraction: a small group capturing value that would otherwise flow to Ethereum's users.

Over the past year, our understanding of these risks has increased significantly. It is well understood that there are two key places where this risk exists: (i) block construction, and (ii) staking capital provision. Larger actors can afford to run more sophisticated algorithms ("MEV extraction") to generate blocks, giving them higher revenue per block. Larger actors can also more efficiently deal with the inconvenience of having their capital locked up, by releasing it to others as a liquid staking token (LST). In addition to the direct question of small versus large stakers, there is also the question of whether there is (or will be) too much ETH staked.

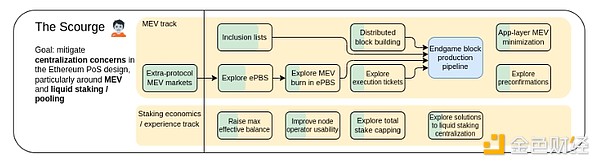

Scourge 2023 Roadmap

This year, there has been significant progress on block construction, most notably convergence on "committee inclusion lists plus some targeted solution for ordering" as the ideal solution. There has also been significant research on proof-of-stake economics, including ideas such as two-tiered staking models and reducing issuance to cap the percentage of ETH staked.

Fixing the Block Construction Pipeline

What problems are we solving?

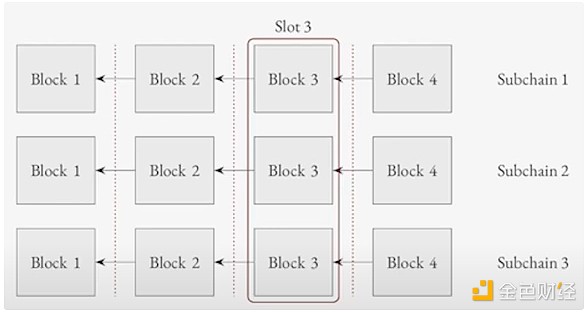

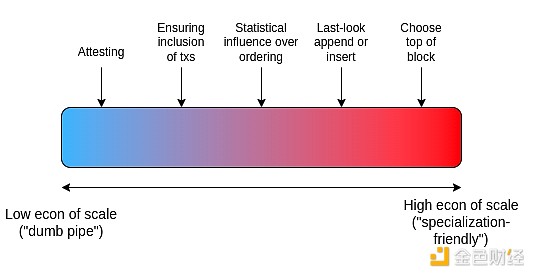

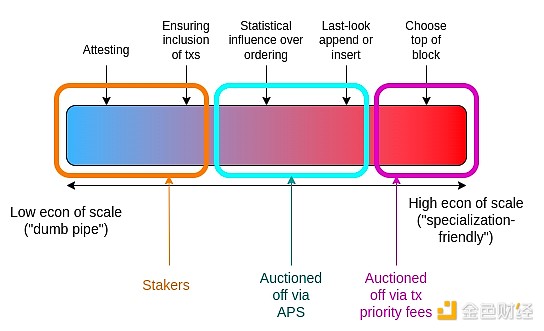

Today, Ethereum block construction is largely done through an extra-protocol proposer-builder separation called MEVBoost. When a validator gets an opportunity to propose a block, it auctions off the job of choosing the block's contents to specialized actors called builders. The task of choosing block contents that maximize revenue is highly subject to economies of scale. Specialized algorithms are needed to determine which transactions to include in order to extract as much value as possible from on-chain financial gadgets and the users interacting with them (this is what "MEV extraction" refers to). Validators are left with the relatively low-economies-of-scale "dumb-pipe" task of listening for bids and accepting the highest one. They also keep other responsibilities, such as attesting.

Stylized diagram of what MEVBoost is doing: dedicated builders take on the tasks in red, stakeholders take on the tasks in blue.

There are multiple versions of this idea, including "proposer-builder separation" (PBS) and "attester-proposer separation" (APS). The difference between them comes down to fine-grained details of which responsibilities go to which of the two actors. Roughly speaking, in PBS, validators still propose blocks but receive the payload from builders, while in APS the entire slot becomes the builder's responsibility. Recently, APS has been favored over PBS because it further reduces the incentive for proposers to co-locate with builders. Note that APS applies only to execution blocks, which contain transactions. Consensus blocks, which contain proof-of-stake-related data such as attestations, would still be randomly assigned to validators.

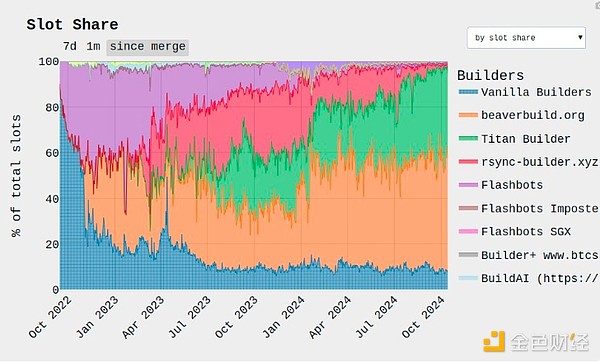

This separation of powers helps keep validators decentralized, but it comes at an important cost: the actors doing the "specialized" tasks can easily become very centralized. Here is what Ethereum block building looks like today:

Two actors are choosing the contents of roughly 88% of Ethereum blocks. What if those two actors decide to censor transactions? The answer is not quite as bad as it might seem. They cannot reorg blocks, so 51% censorship is not enough to prevent a transaction from being included at all; you need 100%. With 88% censorship, a user would need to wait an average of 9 slots to get included (technically, an average of 114 seconds instead of 6 seconds). For some use cases, waiting two or even five minutes for certain transactions is fine. But for other use cases, such as DeFi liquidations, even the ability to delay the inclusion of someone else's transaction by a few blocks is a significant market manipulation risk.
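To see why only 100% censorship blocks a transaction outright, consider a simplified model. This model is an assumption of this example, not the article's. Each slot is built by a censoring builder with independent probability `censor_share`, so the number of slots until inclusion is geometric with mean 1 / (1 - censor_share). The function name is illustrative.

```python
SLOT_SECONDS = 12  # Ethereum slot time

def expected_wait_slots(censor_share: float) -> float:
    """Mean number of slots until a non-censoring builder includes the transaction.

    Simplified model (assumption): each slot is independently built by a
    censoring builder with probability `censor_share`, so the slot of first
    inclusion is geometrically distributed with success probability
    1 - censor_share.
    """
    if not 0.0 <= censor_share < 1.0:
        # At 100% censorship the transaction is never included.
        raise ValueError("censor_share must be in [0, 1)")
    return 1.0 / (1.0 - censor_share)

for share in (0.0, 0.51, 0.88):
    slots = expected_wait_slots(share)
    print(f"{share:.0%} censoring: ~{slots:.1f} slots (~{slots * SLOT_SECONDS:.0f} s)")
```

Under this model, 51% censorship only roughly doubles the wait, and 88% gives about 8.3 slots. The article's figure of about 9 slots may reflect timing details this sketch ignores, such as when within a slot the transaction arrives. Only at 100% does the wait become unbounded.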

The strategies that block builders use to maximize revenue can also have other negative consequences for users. "Sandwich attacks" can cause users making token swaps to suffer significant losses from slippage. The transactions introduced to carry out these attacks also clog the chain, raising gas prices for other users.

What is it and how does it work?

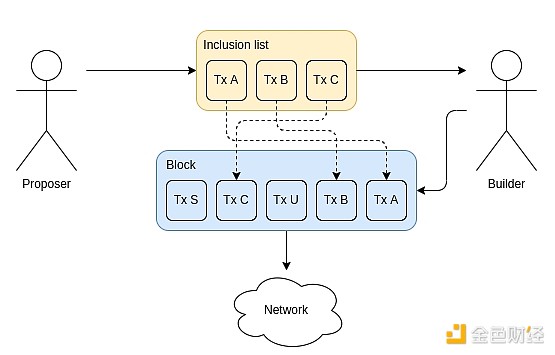

The leading solution is to break down the block production task further. We give the task of choosing transactions back to the proposer (i.e. a staker), and the builder can only choose the ordering and insert some transactions of its own. This is what inclusion lists seek to do.

At time T, a randomly selected staker creates an inclusion list: a list of transactions that are valid given the current state of the blockchain at that time. At time T+1, a block builder, perhaps chosen in advance through an in-protocol auction mechanism, creates a block. This block is required to include every transaction in the inclusion list, but the builder can choose the order and can add transactions of its own.
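The core validity rule can be sketched as a set-containment check. In this minimal sketch, transactions are hypothetical string identifiers and the function name is made up for illustration. A real specification has edge cases that the sketch does not cover, such as listed transactions that are no longer valid.

```python
def satisfies_inclusion_list(block_txs: list[str], inclusion_list: list[str]) -> bool:
    """True if every inclusion-list transaction appears somewhere in the block.

    Position is deliberately not checked: the builder may reorder the listed
    transactions and interleave its own.
    """
    return set(inclusion_list).issubset(block_txs)

il = ["tx_a", "tx_b"]
print(satisfies_inclusion_list(["tx_b", "builder_tx", "tx_a"], il))  # True: reordered, one tx added
print(satisfies_inclusion_list(["tx_a", "builder_tx"], il))          # False: tx_b was left out
```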

The Fork-Choice enforced Inclusion Lists (FOCIL) proposal involves a committee of multiple inclusion list creators per block. To delay a transaction by one block, all k of the k inclusion list creators (e.g. k = 16) would have to censor it. FOCIL can be combined with a final proposer chosen by auction, who is required to include the inclusion lists but can reorder and add new transactions. This combination is often called "FOCIL + APS".
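To see why a committee helps, compare the chance of a one-block delay under two setups: a single randomly chosen party decides alone, or a FOCIL-style committee of k = 16 decides. This sketch assumes, purely for illustration, that committee members are sampled independently and each censors with the same probability. Real committee sampling and collusion are not modelled.

```python
def focil_delay_probability(censor_share: float, k: int = 16) -> float:
    """Chance that all k inclusion-list creators in a slot omit a transaction.

    Assumption: each committee member independently censors with probability
    `censor_share`. A one-block delay requires all k members to censor.
    """
    return censor_share ** k

for share in (0.5, 0.88, 0.99):
    single = share  # one chooser decides alone
    committee = focil_delay_probability(share)
    print(f"{share:.0%} censoring: single chooser {single:.3f}, 16-member committee {committee:.3g}")
```

Suppose 88% of stake were willing to censor. Under this assumption, a 16-member committee would still delay a given transaction only about 13% of the time.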

Another approach to the problem is multiple concurrent proposers (MCP) schemes such as BRAID. BRAID avoids splitting the block proposer role into a low-economies-of-scale part and a high-economies-of-scale part. Instead, it distributes the block production process among many actors, in such a way that each proposer only needs a moderate amount of sophistication to maximize their revenue. MCP works by having k parallel proposers generate lists of transactions, and then using a deterministic algorithm (e.g. ordering by highest-to-lowest fee) to choose the order.
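The deterministic ordering step of MCP can be sketched as follows: take the union of the k proposers' lists, drop duplicates, and sort by priority fee from highest to lowest. The `Tx` type and the hash-based tie-breaker are assumptions added so that every node computes the same order. Neither is taken from the BRAID specification.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tx:
    tx_hash: str       # hypothetical identifier
    priority_fee: int  # e.g. in gwei

def merge_proposer_lists(lists: list[list[Tx]]) -> list[Tx]:
    """Union k proposers' transaction lists, then order them deterministically."""
    # The same transaction may be submitted by several proposers; keep it once.
    unique = {tx.tx_hash: tx for txs in lists for tx in txs}
    # Highest fee first; ties broken by hash so the result is unique.
    return sorted(unique.values(), key=lambda tx: (-tx.priority_fee, tx.tx_hash))

proposer_1 = [Tx("t1", 5), Tx("t2", 9)]
proposer_2 = [Tx("t2", 9), Tx("t3", 7)]
print([tx.tx_hash for tx in merge_proposer_lists([proposer_1, proposer_2])])  # ['t2', 't3', 't1']
```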

BRAID does not seek to make it optimal for a dumb-pipe block proposer to simply run default software. There are two easy-to-understand reasons why it cannot do so:

Late-mover arbitrage attacks: Suppose that the average time at which proposers submit is T, and the last possible time you can submit and still get included is around T+1. Now suppose that on centralized exchanges, the ETH/USDC price moves from $2500 to $2502 between T and T+1. A proposer can wait the extra second and add an additional transaction to arbitrage on-chain DEXes, earning up to $2 per ETH in profit. Sophisticated proposers who are very well-connected to the network are better able to do this.

Exclusive order flow: Users have an incentive to send transactions directly to a single proposer to minimize their vulnerability to front-running and other attacks. Sophisticated proposers have an advantage because they can set up infrastructure to accept these transactions directly from users. They also have stronger reputations, so users sending them transactions can trust that the proposer will not betray them and front-run them. (This can be mitigated with trusted hardware, but trusted hardware has trust assumptions of its own.)

In BRAID, attesters can still be separated off and run as a dumb-pipe function.

Between these two extremes, there is a spectrum of possible designs. For example, you could auction off a role that only has the right to append to a block, and not to reorder or prepend. You could even let them append or prepend, but not insert in the middle or reorder. The attraction of these techniques is that the winners of auction markets are likely to be very concentrated, so there is a lot of benefit in reducing their authority.
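The append-only and append-or-prepend roles can be expressed as a check that the stakers' chosen transactions survive intact inside the auction winner's block. This is a minimal sketch: the mode names and the function are hypothetical, and transactions are plain string identifiers.

```python
def is_allowed_extension(base: list[str], final: list[str], mode: str) -> bool:
    """Check whether an auction winner's block respects its limited rights.

    base:  transactions chosen by stakers, in order
    final: the block the auction winner actually produced
    mode:  "append"         -> may only add transactions after `base`
           "append_prepend" -> may add before and after `base`, but `base`
                               must stay contiguous and in its original order
    """
    n = len(base)
    if mode == "append":
        return final[:n] == base
    if mode == "append_prepend":
        # `base` must appear as one contiguous run somewhere in `final`.
        return any(final[i:i + n] == base for i in range(len(final) - n + 1))
    raise ValueError(f"unknown mode: {mode!r}")

base = ["a", "b"]
print(is_allowed_extension(base, ["a", "b", "x"], "append"))               # True
print(is_allowed_extension(base, ["x", "a", "b"], "append"))               # False: prepending
print(is_allowed_extension(base, ["x", "a", "b", "y"], "append_prepend"))  # True
print(is_allowed_extension(base, ["a", "x", "b"], "append_prepend"))       # False: insertion in the middle
```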

Encrypted mempools

One technology that is crucial to the successful implementation of many of these designs is encrypted mempools. This applies specifically to BRAID, or to a version of APS with strict limits on the capability being auctioned off. With encrypted mempools, users broadcast their transactions in encrypted form, along with some kind of proof of their validity. The transactions are included into blocks in encrypted form, without the block builder knowing their contents, and the contents are revealed later.

The main challenge in implementing encrypted mempools is coming up with a design that ensures all transactions do get revealed later. A simple "commit and reveal" scheme does not work: if revealing is voluntary, the choice to reveal or not is itself a kind of "last-mover" influence on a block that could be exploited. The two leading techniques for this are (i) threshold decryption, and (ii) delay encryption, a primitive closely related to verifiable delay functions (VDFs).
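Threshold decryption addresses the reveal problem by taking the choice out of any single party's hands. The decryption capability is split among n committee members, and any t of them can exercise it. The minimal sketch below illustrates the t-of-n property with Shamir secret sharing over a prime field. This is a swapped-in textbook technique, not a proposed Ethereum design. Production threshold-decryption schemes typically combine partial decryptions so that the full key is never assembled in one place, and the parameters here are arbitrary.

```python
import random

PRIME = 2**127 - 1  # a Mersenne prime; the field for this toy example

def make_shares(secret: int, threshold: int, n: int, rng: random.Random) -> list[tuple[int, int]]:
    """Split `secret` into n shares so that any `threshold` of them reconstruct it."""
    if not 1 <= threshold <= n:
        raise ValueError("need 1 <= threshold <= n")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret % PRIME] + [rng.randrange(PRIME) for _ in range(threshold - 1)]

    def poly(x: int) -> int:
        return sum(c * pow(x, i, PRIME) for i, c in enumerate(coeffs)) % PRIME

    return [(x, poly(x)) for x in range(1, n + 1)]

def reconstruct(shares: list[tuple[int, int]]) -> int:
    """Recover the constant term by Lagrange interpolation at x = 0 over GF(PRIME)."""
    secret = 0
    for j, (xj, yj) in enumerate(shares):
        num, den = 1, 1
        for m, (xm, _) in enumerate(shares):
            if m != j:
                num = num * (-xm) % PRIME
                den = den * (xj - xm) % PRIME
        secret = (secret + yj * num * pow(den, -1, PRIME)) % PRIME
    return secret

rng = random.Random(0)  # seeded for a reproducible demo; real keys need the `secrets` module
key = 123456789         # stands in for a decryption key
shares = make_shares(key, threshold=3, n=5, rng=rng)
print(reconstruct(shares[:3]) == key)                         # True
print(reconstruct([shares[0], shares[2], shares[4]]) == key)  # True
print(reconstruct(shares[:2]) == key)                         # False: below the threshold
```

Fewer than t shares reveal nothing about the key. As long as at least t committee members participate, decryption cannot be blocked by any one of them declining to reveal.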

What are the connections to existing research?

MEV and builder centralization explained: https://vitalik.eth.limo/general/2024/05/17/decentralization.html#mev-and-builder-dependence

MEVBoost: https://github.com/flashbots/mev-boost

Enshrined PBS (an early proposed solution to these problems): https://ethresear.ch/t/why-enshrine-proposer-builder-separation-a-viable-path-to-epbs/15710

Mike Neuder's inclusion list related reading list: https://gist.github.com/michaelneuder/dfe5699cb245bc99fbc718031c773008

Inclusion list EIP: https://eips.ethereum.org/EIPS/eip-7547

FOCIL: https://ethresear.ch/t/fork-choice-enforced-inclusion-lists-focil-a-simple-committee-based-inclusion-list-proposal/19870

Max Resnick's BRAID demo: https://www.youtube.com/watch?v=mJLERWmQ2uw

“Priority is all you need” by Dan Robinson: https://www.paradigm.xyz/2024/06/priority-is-all-you-need

Notes on multi-proposer gadgets and protocols

VDFResearch.org

Verifiable delay functions and attacks (focused on the RANDAO setting, but also applicable to encrypted mempools): https://ethresear.ch/t/verifiable-delay-functions-and-attacks/2365

MEV capture and decentralization in execution tickets: https://www.arxiv.org/pdf/2408.11255

APS Centralization: https://arxiv.org/abs/2408.03116

Multi-block MEV and inclusion lists: https://x.com/_charlienoyes/status/1806186662327689441

What else needs to be done, and what trade-offs need to be made?

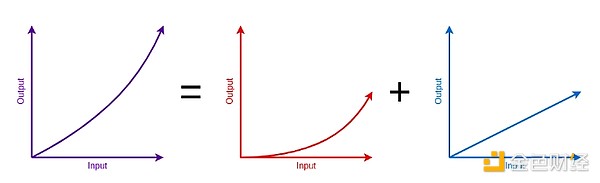

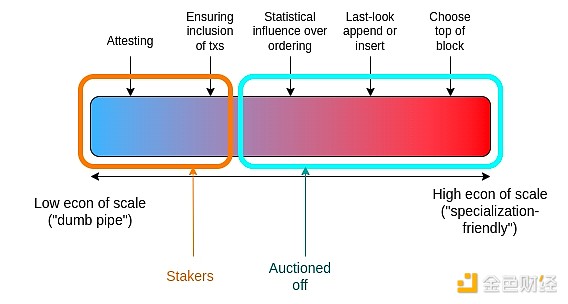

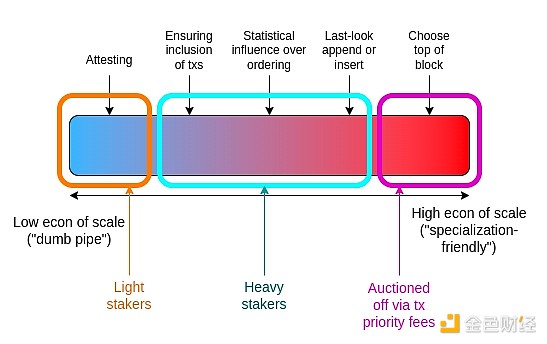

We can think of all of the above schemes as different ways of dividing up the authority involved in staking. These authorities can be arranged on a spectrum from lower economies of scale ("dumb-pipe") to higher economies of scale ("specialization-friendly"). Before 2021, all of these authorities were bundled together in one actor:

The core dilemma is this: any meaningful power that remains in the hands of stakers is power that could end up being "MEV-relevant". We want a highly decentralized set of actors to have as much power as possible. This implies (i) putting a lot of power in the hands of stakers, and (ii) making sure stakers are as decentralized as possible, meaning they have few economies-of-scale-driven incentives to consolidate. This is a difficult tension to navigate.

One particular challenge is multi-block MEV. In some cases, execution auction winners can make even more money if they capture multiple slots in a row and allow no MEV-relevant transactions except in the last block they control. If inclusion lists force them to include such transactions, they can try to bypass this by not publishing any block at all during those slots. One could make unconditional inclusion lists, which directly become the block if the builder does not provide one, but this makes the inclusion list itself MEV-relevant. The solution here may involve some compromise, such as accepting some low-level incentive for people to be bribed into including transactions in an inclusion list.

We can view FOCIL + APS as follows. Stakers continue to have power over the left part of the spectrum, while the right part of the spectrum is auctioned off to the highest bidder.

BRAID is quite different. The "stakers" piece is larger, but it is split into two pieces: light stakers and heavy stakers. Meanwhile, transactions are ordered by decreasing priority fee, so the choice of the top of the block is in effect auctioned off via the fee market. This scheme can be viewed as analogous to PBS.

Note that the security of BRAID depends heavily on encrypted mempools. Otherwise, the top-of-block auction mechanism is vulnerable to strategy-stealing attacks (essentially: copying other people's transactions, swapping the recipient address, and paying a 0.01% higher fee). This need for pre-inclusion privacy is also why PBS is so tricky to implement.
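A minimal sketch of why fee-ordered top-of-block auctions need pre-inclusion privacy follows. If a profitable transaction is visible before inclusion, anyone can copy it, point the profit at themselves, and bid slightly more. The `Tx` fields and function names are hypothetical, and the sketch ignores signatures and execution, showing only the ordering outcome. With an encrypted mempool, the copier could not read `searcher_tx` in time.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Tx:
    sender: str
    recipient: str      # account that receives the trade's profit
    priority_fee: float

def steal_strategy(victim: Tx, attacker: str, bump: float = 0.0001) -> Tx:
    """Copy a publicly visible transaction, redirect its profit, and outbid it by 0.01%."""
    return replace(victim, sender=attacker, recipient=attacker,
                   priority_fee=victim.priority_fee * (1 + bump))

def order_by_priority_fee(txs: list[Tx]) -> list[Tx]:
    """Fee-market ordering: highest priority fee first."""
    return sorted(txs, key=lambda tx: tx.priority_fee, reverse=True)

searcher_tx = Tx(sender="searcher", recipient="searcher", priority_fee=100.0)
copycat_tx = steal_strategy(searcher_tx, attacker="copycat")
block = order_by_priority_fee([searcher_tx, copycat_tx])
print(block[0].recipient)  # copycat: the copy runs first and captures the opportunity
```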

Finally, a more "radical" version of FOCIL + APS would look like this, for example an option where APS only determines the end of the block:

The main remaining tasks are (i) continuing to consolidate the various proposals and analyze their consequences, and (ii) combining this analysis with an understanding of the Ethereum community's goals, i.e. which forms of centralization it will tolerate. There is also work to be done on each individual proposal, such as:

Continuing work on encrypted mempool designs, until we reach a design that is both robust and reasonably simple, and that seems ready for inclusion.

Optimizing the design of multiple inclusion lists to ensure that (i) no data is wasted, especially in the context of inclusion lists covering blobs, and (ii) they are friendly to stateless validators.

More work on optimal auction design for APS.

It is also worth noting that these different proposals are not necessarily incompatible forks in the road. For example, implementing FOCIL + APS could easily serve as a stepping stone toward implementing BRAID. A valid conservative strategy would be a "wait and see" approach. We would first implement a solution where stakers' authority is limited and most of the authority is auctioned off. We would then slowly increase stakers' authority over time as we learn more about how the MEV market works on the live network.

How does it interact with the rest of the roadmap?

Notably, the centralization bottlenecks for staking are:

Block construction centralization (this section)

Staking centralization for economic reasons (next section)

Staking centralization due to the 32 ETH minimum (solved via Orbit or other techniques; see my post on The Merge)

Staking centralization due to hardware requirements (solved in The Verge, with stateless clients and later ZK-EVMs)

Solving any of these four problems will increase the benefits of solving any of the others.

In addition, the block construction pipeline interacts with single-slot finality designs, especially in the context of attempts to reduce slot times. Many block construction pipeline designs end up increasing slot times, and many involve roles for attesters at multiple steps of the process. For this reason, it is worth thinking about block construction pipelines and single-slot finality together.

Fixing Staking Economics

What problem are we solving?

Today, about 30% of the ETH supply is actively staked. This is far more than enough to protect Ethereum from 51% attacks. If the percentage of ETH staked grows much larger, researchers fear a different scenario: the risks that would arise if almost all ETH becomes staked. These risks include:

Staking turns from a profitable task for specialists into a duty for all ETH holders. As a result, the average staker would be much less enthusiastic and would choose the easiest approach (realistically, delegating their tokens to whichever centralized operator offers the most convenience).

The credibility of the slashing mechanism weakens if almost all ETH is staked.

A single liquid staking token could take over the bulk of the stake, and even take over the "money" network effects of ETH itself.

Ethereum needlessly issues an extra ~1M ETH per year. If one liquid staking token gains a dominant network effect, a large portion of this value could even be captured by that LST.

What is it and how does it work?

Historically, one class of solution has been: if everyone staking is inevitable, and a liquid staking token is inevitable, then let's make staking friendly to having a liquid staking token that is actually trustless, neutral, and maximally decentralized. One simple way to do this is to cap staking penalties at, e.g., 1/8. This would make 7/8 of staked ETH unslashable and thus eligible to be put into the same liquid staking token. Another option is to explicitly create two tiers of staking: "risk-bearing" (slashable) staking and "risk-free" (unslashable) staking.

However, one criticism of this approach is that it seems economically equivalent to something much simpler: drastically reducing issuance if the stake approaches some predetermined cap. Suppose we end up in a world where the risk-bearing tier has 3.4% returns and the risk-free tier (which everyone participates in) has 2.6% returns. That is effectively the same as a world where staking ETH has 0.8% returns and just holding ETH has 0% returns. The dynamics of the risk-bearing tier, including both the total quantity staked and its degree of centralization, would be the same in both cases. So we should do the simple thing and reduce issuance.
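The argument rests on the observation that decisions in the risk-bearing tier are driven by its premium over the alternative everyone else gets, not by the headline yield. Here is a first-order sketch using the article's numbers. It uses simple subtraction and ignores compounding and dilution effects; the function name is illustrative.

```python
def risk_premium(risky_yield: float, baseline_yield: float) -> float:
    """Extra yield earned for accepting slashing risk, relative to the baseline option."""
    return risky_yield - baseline_yield

# World A (two tiers): risk-bearing tier 3.4%, risk-free tier (everyone) 2.6%.
two_tier = risk_premium(0.034, 0.026)
# World B (reduced issuance): staking 0.8%, simply holding ETH 0%.
low_issuance = risk_premium(0.008, 0.0)

print(f"{two_tier:.1%} vs {low_issuance:.1%}")  # 0.8% vs 0.8%
```

Both worlds offer the same 0.8% premium for bearing slashing risk, which is why the argument treats them as economically equivalent.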

The main counterargument would apply if we could have a "risk-free tier" that still has some useful role and some level of risk (e.g. as in Dankrad's proposal).

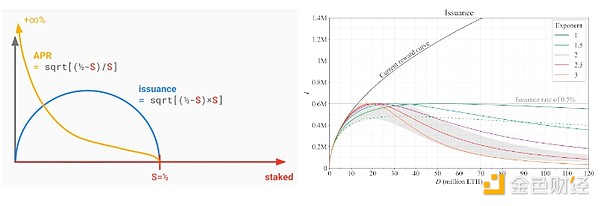

Both of these proposals imply changing the issuance curve in a way that makes returns prohibitively low if the amount of stake gets too high.

Left: A proposal by Justin Drake to adjust the issuance curve. Right: Another set of proposals by Anders Elowsson.
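The exact curves in these proposals are not reproduced here. The following minimal sketch therefore uses a made-up curve purely to illustrate the shape under discussion: yields fall gently at moderate stake, then drop steeply and eventually without bound as total stake approaches a target. Neither the formula nor its constants come from Justin Drake's or Anders Elowsson's proposals.

```python
import math

def hypothetical_yield(staked_eth: float, k: float = 160.0,
                       cap_eth: float = 50e6, penalty: float = 1e5) -> float:
    """Illustrative staking yield (as a fraction) for a given total stake.

    k / sqrt(D) mimics the shape of today's curve, where per-validator rewards
    scale with 1 / sqrt(total stake). The second term is a made-up penalty
    that grows without bound as D approaches `cap_eth`. All constants are
    hypothetical.
    """
    if not 0 < staked_eth < cap_eth:
        raise ValueError("staked_eth must be positive and below cap_eth")
    return k / math.sqrt(staked_eth) - penalty / (cap_eth - staked_eth)

for d in (10e6, 30e6, 45e6, 49e6, 49.9e6):
    print(f"{d / 1e6:5.1f}M ETH staked -> {hypothetical_yield(d):+.2%}")
```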

Two-tier staking, on the other hand, requires setting two return curves: (i) the return for “basic” (risk-free or low-risk) staking, and (ii) the premium for risky staking. There are multiple ways to set these parameters: for example, if you set a hard parameter that 1/8 of the stake is slashable, then market dynamics will determine the premium on the rate of return earned on slashable stake.

Another important topic here is MEV capture. Today, MEV income (e.g. DEX arbitrage, sandwiches, ...) goes to proposers, i.e. stakers. This is income that is completely "opaque" to the protocol: the protocol has no way of knowing if it is 0.01% APY, 1% APY or 20% APY. The existence of this income source is extremely inconvenient from multiple perspectives:

It is an unpredictable source of income, because each staker only gets it when they propose a block, which today happens about once every 4 months. This creates an incentive to join pools for a more stable income.

It leads to an unbalanced allocation of incentives: too much for proposing, too little for attesting.

This makes a stake cap difficult to enforce: even if the “official” return is zero, MEV income alone might be enough to drive all ETH holders to stake. Therefore, a realistic stake cap proposal must actually make the return approach negative infinity. This creates more risk for stakers, especially solo stakers.
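The "about once every 4 months" figure follows from simple slot arithmetic, and the same arithmetic shows why pooling is attractive. This minimal sketch assumes a round, illustrative count of 900,000 validators with equal effective balance; it is not a live figure, and the function names are hypothetical. Proposals per year are treated as Poisson-distributed. The sketch understates real variance, because MEV also varies widely from block to block.

```python
import math

SLOTS_PER_DAY = 24 * 60 * 60 // 12  # 7200 slots of 12 seconds

def days_between_proposals(num_validators: int, validators_run: int = 1) -> float:
    """Average days between block proposals for an operator.

    Assumes every validator has the same effective balance, so each slot's
    proposer is drawn uniformly from `num_validators`.
    """
    return num_validators / (SLOTS_PER_DAY * validators_run)

def yearly_proposal_spread(num_validators: int, validators_run: int = 1) -> float:
    """Relative spread (std / mean) of proposals per year, Poisson approximation."""
    expected = 365 * SLOTS_PER_DAY * validators_run / num_validators
    return 1 / math.sqrt(expected)

N = 900_000  # illustrative validator count, not a live figure
print(days_between_proposals(N))                   # 125.0
print(f"{yearly_proposal_spread(N):.0%}")          # solo staker: ~59%
print(f"{yearly_proposal_spread(N, 10_000):.1%}")  # pool with 10,000 validators: ~0.6%
```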

We can address these issues by finding a way to make MEV income visible in the protocol and capture it. The earliest proposal was Francesco’s MEV smoothing; today it is widely accepted that any mechanism that auctions off block proposer rights in advance (or, more generally, has enough power to capture almost all MEV) can achieve the same goal.

What are the connections to existing research?

issuance.wtf: https://issuance.wtf/

Endgame staking economics, a case for targeting: https://ethresear.ch/t/endgame-stake-economics-a-case-for-targeting/18751

Properties of issuance level, Anders Elowsson: https://ethresear.ch/t/properties-of-issuance-level-consensus-incentives-and-variability-across-potential-reward-curves/18448

Validator set size capping: https://notes.ethereum.org/@vbuterin/single_slot_finality?type=view#Economic-capping-of-total-deposits

Thoughts on the multi-tier staking idea: https://notes.ethereum.org/@vbuterin/stake_2023_10?type=view

Rainbow Staking: https://ethresear.ch/t/unbundling-stake-towards-rainbow-stake/18683

Dankrad's liquid staking proposal: https://notes.ethereum.org/Pcq3m8B8TuWnEsuhKwCsFg

MEV smoothing, by Francesco: https://ethresear.ch/t/committee-driven-mev-smoothing/10408

MEV burn, a simple design: https://ethresear.ch/t/mev-burn-a-simple-design/15590

What needs to be done and what trade-offs need to be made?

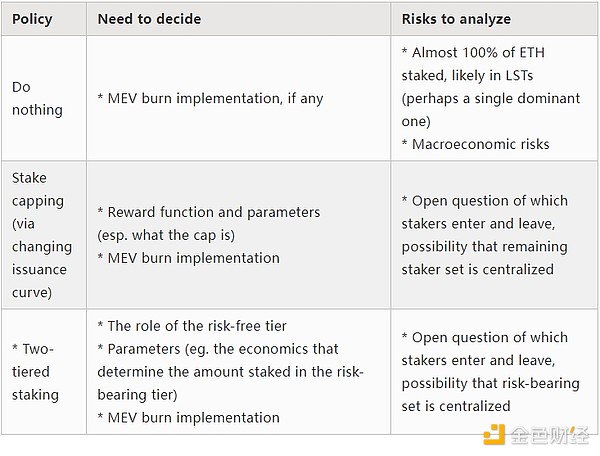

The main tasks remaining are to either agree to take no action and accept the risk of nearly all ETH being in LST, or to finalize and agree on the details and parameters of one of the above proposals. A rough summary of the benefits and risks is as follows:

How does it interact with the rest of the roadmap?

One important intersection has to do with solo staking. Today, the cheapest VPS that can run an Ethereum node costs about $60 per month, mostly due to hard drive storage costs. For a 32 ETH staker ($84,000 at the time of writing), this reduces the APY by (60 * 12) / 84000 ~= 0.85%. If the total staking return is less than 0.85%, then solo staking is not feasible for many at this level.
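The cost calculation above can be written as a small helper, which also shows how the break-even yield moves with node costs. This is a minimal sketch: the function names are hypothetical, and the 0.8% gross yield in the example is an arbitrary test value. The exact drag is about 0.857%, which the text rounds to about 0.85%.

```python
def apy_drag(monthly_cost_usd: float, stake_value_usd: float) -> float:
    """Fraction of the stake's value consumed each year by fixed node costs."""
    return monthly_cost_usd * 12 / stake_value_usd

def net_yield(gross_yield: float, monthly_cost_usd: float, stake_value_usd: float) -> float:
    """Staking yield left after paying for the node."""
    return gross_yield - apy_drag(monthly_cost_usd, stake_value_usd)

drag = apy_drag(60, 84_000)              # $60/month VPS, 32 ETH worth $84,000
print(f"{drag:.2%}")                     # 0.86%
print(net_yield(0.008, 60, 84_000) < 0)  # True: a 0.8% gross yield would not cover costs
```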

This further underscores the need to reduce node operating costs if we want solo staking to remain viable. This will be done in The Verge: statelessness will remove storage space requirements, which may be sufficient on its own, and L1 EVM validity proofs will later make the costs negligible.

On the other hand, MEV burning arguably helps solo staking. Although it reduces returns for everyone, it more importantly reduces variance and makes staking less like a lottery.

Finally, any change to issuance interacts with other fundamental changes to staking design (e.g. rainbow staking). One particular concern arises if staking returns become very low, because we then have to choose between two options. (i) We can reduce penalties, which lowers the disincentive against bad behavior. (ii) We can keep penalties high, which widens the set of circumstances in which even well-meaning validators get negative returns if they are unlucky enough to run into technical issues or even attacks.

Application Layer Solutions

The above section highlights changes to Ethereum L1 that can address important centralization risks. However, Ethereum is more than just an L1, it’s an ecosystem, and there are important application layer strategies that can help mitigate the risks outlined above. Some examples include:

Specialized staking hardware solutions — Some companies (e.g. Dappnode) are selling hardware specifically designed to make operating a staking node as easy as possible. One way to make this solution more effective is to ask the question: if a user has already spent the effort to get a box running and connected to the internet, what other services can it provide (to the user or to others) that benefit from decentralization? Examples that come to mind include (i) running a locally hosted LLM for self-sovereignty and privacy reasons, and (ii) running a node for a decentralized VPN.

Squad staking — This solution from Obol allows multiple people to stake together in an M-of-N format. This may become more popular over time as statelessness and later L1 EVM validity proofs reduce the overhead of running more nodes and the benefits of each participant not having to worry about being online all the time start to dominate. This is another way to reduce the perceived overhead of staking and ensure solo staking thrives in the future.

Airdrops — Starknet offers airdrops to solo stakers. Other projects that want to have a decentralized and values-aligned user base may also consider offering airdrops or discounts to validators identified as likely solo stakers.

Decentralized Block Builder Marketplace — Using a combination of ZK, MPC, and TEEs, it is possible to create a decentralized block builder that participates in and wins the APS auction game, but at the same time provides pre-confirmation privacy and censorship resistance guarantees to its users. This is another avenue for improving user welfare in the APS world.

Application layer MEV minimization - Individual applications can be built in ways that "leak" less MEV to L1, reducing the incentive for block builders to create specialized algorithms to collect it. One simple and common strategy is for a contract to put all incoming operations into a queue, execute them in the next block, and auction off the right to jump the queue. This works, though it is inconvenient and breaks composability. Other, more complex approaches include doing more work off-chain, as Cowswap does. Oracles can also be redesigned to minimize oracle-extractable value.
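Here is a minimal sketch of the queue-plus-auction pattern, written as plain Python rather than contract code. The class, its methods, and the single-slot auction are hypothetical, and the sketch shows only the mechanics. The application, rather than the block builder, decides who goes first, and the value of going first is paid to the application through the auction. Arrival order within a batch is still set by whoever ordered the block in which the operations were submitted, so this is not a complete defense.

```python
from typing import Optional

class BatchQueue:
    """Hypothetical contract logic: operations submitted in one block execute in
    the next block in arrival order, except for one auctioned queue-jump slot."""

    def __init__(self) -> None:
        self.pending: list[str] = []
        self.best_bid = 0
        self.jumper: Optional[str] = None

    def submit(self, op: str) -> None:
        """Queue an operation; it cannot execute in the block it was submitted in."""
        self.pending.append(op)

    def bid_to_jump(self, op: str, bid: int) -> None:
        """Bid for the single queue-jump slot of the next batch; highest bid wins.

        A losing bidder's operation is simply dropped in this sketch.
        """
        if bid > self.best_bid:
            self.best_bid, self.jumper = bid, op

    def execute_next_block(self) -> tuple[list[str], int]:
        """Return (execution order, auction revenue) for the next block, then reset."""
        order = ([self.jumper] if self.jumper is not None else []) + self.pending
        revenue = self.best_bid
        self.pending, self.best_bid, self.jumper = [], 0, None
        return order, revenue

q = BatchQueue()
q.submit("swap_a")
q.submit("swap_b")
q.bid_to_jump("liquidation_x", 5)
q.bid_to_jump("arbitrage_y", 8)
print(q.execute_next_block())  # (['arbitrage_y', 'swap_a', 'swap_b'], 8)
```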
