Author: Kyle_13, source: author's Twitter @kylewmi

Thanks to everyone for coming today. We have a bunch of super exciting developments to share with you about AO technology. We're going to do a demo first, and then Nick and I will be here trying to build an AI agent that will use a large language model in a smart contract to buy and sell based on the chat sentiment in the system that you're about to hear about. We're going to build it from scratch live today, and hopefully it goes well.

Yes, you're going to see how to do all this yourself.

The technical advances here really put AO far beyond other smart contract systems. That was already true, and now AO is increasingly more like a decentralized supercomputer than a traditional smart contract network, while still having all the characteristics of a smart contract network. So we're very excited to share this with you. Without further ado, let's get into the demo, and then we'll have a discussion and build something live together.

Hi everyone, thanks for coming today. We're very excited to announce three major technical updates to the AO protocol. Together, they achieve a big goal, which is to support large language models running in a decentralized environment as part of smart contracts. These are not just toy models, small models, or models that are compiled into their own binary.

This is a complete system that lets you run almost all of the major open-source models available today. For example, Llama 3 runs on-chain in smart contracts, and so do GPT and Apple's model, among others. This is the result of joint efforts across the entire ecosystem, and it includes three major technical advances. So I am very excited to introduce all of this to you.

The headline is that large language models (LLMs) can now run inside smart contracts. You may have heard about decentralized AI and AI crypto many times. In fact, almost all of those systems, except the one we're going to talk about today, use AI as an oracle: the AI runs off-chain, and its results are then posted on-chain for some downstream use.

We're not talking about that. We're talking about running large language model inference as part of smart contract state execution. This is made possible by AO's new hard drive and its hyper-parallel processing, which means you can run very heavy computations in one process without affecting any other process. We think this will allow us to create a very rich financial system of decentralized autonomous agents.

So far in decentralized finance (DeFi), we have basically made the execution of raw transactions trustless. Interactions in different economic games, like lending, borrowing, and swapping, are all trustless. But that's just one side of the problem. Think about the global financial markets.

Yes, there are all sorts of economic primitives that play out in different ways: bonds, stocks, commodities, derivatives, and so on. But when we really talk about markets, it's not just that. There is also an intelligence layer: the people who decide to buy, sell, borrow, or take part in various financial games.

So far in the decentralized finance ecosystem, we have successfully made all of these primitives trustless. You can swap on Uniswap without trusting the operator of Uniswap; fundamentally, there is no operator. But the intelligence layer of the market has been left off-chain. So if you want to invest in crypto but don't want to do all the research and participation yourself, you have to find a fund.

You have to trust the fund with your money. The fund then makes the intelligent decisions and passes them downstream to the basic primitives executed by the network itself. We think that in AO we can actually move the intelligent part of the market, the intelligence that leads to decisions, into the network itself. Here's an easy way to picture it.

Imagine a hedge fund or portfolio management application that you can trust to execute a set of intelligent instructions within the network. That extends the network's trustlessness to the decision-making process. It means an anonymous account, such as Yolo 420 Trader Number One (a bold, random trader), can create an interesting new strategy and deploy it on the network, and you can invest capital in it without actually having to trust them.

You can now build autonomous agents that interact with large statistical models. And the most common large statistical models are large language models that can process and generate text. This means that you can put these models into smart contracts as part of a strategy developed by someone with a novel idea and execute them intelligently within the network.

You can imagine doing some basic sentiment analysis. For example, an agent reads the news and decides whether this is a good time to buy or sell a particular derivative, or to do one thing or another. You can have human-like decisions executed in a trustless way. And this is not just theory. We created a fun meme coin called Llama Fed. The basic idea is a fiat currency simulator in which a herd of llamas is played by the Llama 3 model.

They're like a combination of a llama and the chairman of the Federal Reserve, and you can go to them and ask them to give you some tokens, and they will evaluate your request. The large language model itself operates monetary policy, completely autonomously and trustlessly. We built it, but we don't control it. They operate the monetary policy, deciding who should get tokens and who shouldn't. It's a really interesting little application of this technology that will hopefully inspire all the other possible applications in the ecosystem.
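The news-driven buy/sell decision described earlier can be sketched as a small pure function. This is a minimal sketch under stated assumptions. The keyword lexicon is a hypothetical stand-in for a call to an on-chain language model. `keyword_sentiment`, `decide` and the 0.3 threshold are illustrative choices, not part of AO or Llama Fed.

```python
from typing import Callable, Iterable

# Hypothetical stand-in for an on-chain LLM: a tiny keyword lexicon that
# scores a headline in [-1, 1]. A real agent would prompt a model instead.
POSITIVE = {"surge", "approval", "record", "partnership"}
NEGATIVE = {"hack", "lawsuit", "ban", "outage"}


def keyword_sentiment(headline: str) -> float:
    words = [w.strip(".,!?:;").lower() for w in headline.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


def decide(
    headlines: Iterable[str],
    score: Callable[[str], float] = keyword_sentiment,
    threshold: float = 0.3,
) -> str:
    """Average per-headline sentiment and map it to BUY, SELL or HOLD."""
    scores = [score(h) for h in headlines]
    if not scores:
        return "HOLD"
    avg = sum(scores) / len(scores)
    if avg >= threshold:
        return "BUY"
    if avg <= -threshold:
        return "SELL"
    return "HOLD"


print(decide(["Exchange hit by hack", "Regulator announces ban"]))  # SELL
```

The scorer is passed in as a parameter, so a model-backed scorer could replace the lexicon without touching the decision rule. Keeping the rule a pure function of its inputs also makes every decision easy to replay and audit.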

To make LLMs run inside smart contracts, we had to create three new foundational capabilities for AO, some at the base protocol layer and some at the application layer. These are useful not only for running large language models but much more broadly, and they're exciting for all AO developers. So I'm excited to introduce them to you today.

The first of these new technologies is 64-bit WebAssembly support. It sounds a bit like technical jargon, but I have a way to make it clear to everyone. Basically, WebAssembly 64 support allows developers to create applications that use more than 4GB of memory. We’ll get to the new limits later, and they’re pretty staggering.

If you’re not a developer, think of it this way: someone asks you to write a book, and you’re excited about the idea, but they say you can only write 100 pages. No more, no less. You can express the ideas in the book, but you can’t do it in a natural and normal way because there’s an external limit, and you have to cater to it and change the way you write to fit it.

In the smart contract ecosystem, the situation is worse than a 100-page limit; that limit is roughly what building on the early version of AO was like. Ethereum has a 48KB memory limit, which is like being asked to write a book that's only one sentence long, using only the 200 most common English words. It's extremely difficult to build really exciting applications in that system.

Solana gives you access to 10MB of working memory. That's obviously an improvement, but we're basically talking about one page of paper. ICP, the Internet Computer Protocol, allowed 3GB of memory. In theory it could have gone further, but in practice it had to be capped at 3GB. With 3GB of memory you can run a lot of different applications, but you certainly can't run large AI applications, which need to load a lot of data into main memory for fast access. That can't be done efficiently in 3GB.

When we released AO in February of this year, it also had a 4GB memory limit, which came from 32-bit WebAssembly. That limit has now completely disappeared at the protocol level. Instead, the protocol-level memory limit is 18EB (exabytes), which is an enormous amount.
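The 4GB and 18EB figures follow directly from pointer width. A 32-bit address can index 2^32 bytes, and a 64-bit address can index 2^64 bytes. The quick check below shows that the 18EB figure is in decimal exabytes, while the same range is 16 EiB in binary units. These are address-space ceilings, not the amount of memory a compute unit actually provisions.

```python
def address_space_bytes(pointer_bits: int) -> int:
    """Bytes addressable by a flat address space with pointers of the given width."""
    return 2 ** pointer_bits


GIB = 2 ** 30  # gibibyte (binary)
EIB = 2 ** 60  # exbibyte (binary)
EB = 10 ** 18  # exabyte (decimal)

wasm32 = address_space_bytes(32)
wasm64 = address_space_bytes(64)

print(f"32-bit: {wasm32 // GIB} GiB")   # 32-bit: 4 GiB
print(f"64-bit: {wasm64 // EIB} EiB")   # 64-bit: 16 EiB
print(f"64-bit: {wasm64 / EB:.1f} EB")  # 64-bit: 18.4 EB
```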

It will be quite some time before 18EB of memory is used for in-memory computation rather than long-term storage. At the implementation level, compute units in the AO network now have access to 16GB of memory, and it will be relatively easy to raise this in the future without changing the protocol. 16GB is more than enough for large language model computation, which means you can download and run 16GB models on AO today, for example the unquantized version of Llama 3, Falcon 3, and many other models.
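A rough way to see why 16GB is the relevant figure is that a model's weights take about (parameter count) × (bytes per parameter). The sketch below makes a few assumptions. It uses roughly 8.03 billion parameters for the smaller Llama 3 model. It counts weights only, so the KV cache, activations and runtime overhead are ignored. It treats quantized formats as exactly 1 or 0.5 bytes per weight, although real quantized files also store per-block scales and come out somewhat larger.

```python
# Approximate bytes per weight for common storage formats (an idealization:
# quantized formats also carry per-block scales, so real files are larger).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Size of the weights alone in decimal GB (no KV cache, activations or overhead)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 8.03e9  # assumption: the ~8B-parameter Llama 3 model
    for dtype in ("fp32", "fp16", "int8", "int4"):
        print(f"{dtype}: {weight_footprint_gb(params, dtype):.1f} GB")
```

With 16-bit weights, the ~8B model lands right around 16GB for the weights alone, while quantized variants need a fraction of that.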

Enough memory to hold models like these is a core component of any intelligent, language-based computation system. Now it's fully supported on-chain as part of smart contracts, which we think is very, very exciting.

This removes a major computational limitation of AO, and of smart contract systems more broadly. When we launched AO in February of this year, you might have noticed that the launch video mentioned several times that you have unlimited computational power, with one limit: you couldn't exceed 4GB of memory. This lifts that limit. We think that's a very exciting advance; 16GB is enough to run almost any model you'd want to run in the current AI space.

Raising the 16GB limit later will be relatively easy and won't require changing the protocol, and moving to WebAssembly 64 is already a big improvement over where we started. That in itself is a huge increase in the system's capabilities. The second major technology that enables large language models to run on AO is WeaveDrive.

WeaveDrive lets you access Arweave data inside AO as if it were a local hard drive. This means that, in AO, you can open any transaction ID that has been uploaded to the network and attested by the scheduler unit. You can then read that data into your program, just like a file on your local hard drive.

As we all know, there are currently about 6 billion transactions stored on Arweave, so this is a huge dataset to start with. It also means that, when building applications in the future, there will be more incentive to upload data to Arweave, because that data can also be used in AO programs. For example, to get large language models running on AO, we uploaded about $1,000 worth of models to Arweave. But this is just the beginning.

With a smart contract network with a local file system, the number of applications you can build is huge. So this is very exciting. Even better, the system we built allows you to stream data into the execution environment. It's a technical nuance, but you can imagine going back to the book analogy.

Someone says to you: I want a piece of data from your book; I want a chart from it. In a naive system (which would still be a huge improvement over current smart contract networks), you would hand over the entire book. That is obviously inefficient, especially if the book is a large statistical model with thousands of pages.

Instead, AO lets you read the bytes directly. You go straight to the chart's location in the book, copy just the chart into your application, and work with it. This makes the system extremely efficient. And this isn't just a minimum viable product (MVP); it is a fully functional, well-built data access mechanism. So you have an infinite computing system and an infinite hard drive; combine them, and you have a supercomputer.
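The difference between handing over the whole book and copying just the chart is the difference between reading a whole file and seeking to an offset. This sketch is not WeaveDrive's actual interface. It assumes a hypothetical layout in which each Arweave transaction appears as an ordinary file named by its ID under some root directory. `tx_path`, `read_range` and `example-tx-id` are illustrative names.

```python
import os
import tempfile


def tx_path(root: str, tx_id: str) -> str:
    # Hypothetical layout: each Arweave transaction appears as a file named by its ID.
    return os.path.join(root, tx_id)


def read_range(root: str, tx_id: str, offset: int, length: int) -> bytes:
    """Return `length` bytes starting at `offset`, without reading the rest of the file."""
    with open(tx_path(root, tx_id), "rb") as f:
        f.seek(offset)
        return f.read(length)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        tx_id = "example-tx-id"  # placeholder, not a real transaction ID
        with open(tx_path(root, tx_id), "wb") as f:
            f.write(b"header" + b"." * 1000 + b"CHART" + b"." * 1000)
        print(read_range(root, tx_id, 1006, 5))  # b'CHART'
```

Because the data is exposed as a file, the same seek-and-read pattern works in any language with ordinary file I/O.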

A supercomputer like this has never been built before, and now it is available to everyone at minimal cost. This is the current state of AO, and we are very excited about it.

The system is also implemented at the operating-system level. We made WeaveDrive a subprotocol of AO: a compute unit extension that anyone can load. It's interesting because it's the first of its kind. AO has always let you add extensions to the execution environment. It's like a computer: if you want more memory or a graphics card, you physically plug a unit into the system. You can do the same with compute units in AO, and that's what we've done here.

So at the OS level, you now have a hard drive, which is just a file system representing data storage. That means you can not only access that data in AO and build applications the way you normally would, but also access it from any application you bring to the network. It's a broadly applicable capability, available to everyone building on the system, no matter which language they write in (Rust, C, Lua, Solidity, whatever), as if it were native to the system. Building this system also forced us to create subprotocols, a way to build other compute unit extensions, so that in the future other people can build exciting things too.

Now that we can run computations over arbitrarily large amounts of memory and load data from the network into processes within AO, the next question is how to do the inference itself.

Because we chose WebAssembly as AO's primary VM, it was relatively easy to compile existing code and run it in that environment. And because WeaveDrive is exposed as an OS-level file system, it was actually relatively easy to run Llama.cpp (an open-source large language model inference engine) on the system.

This is very exciting because it means that you can run not only this inference engine, but many others with ease. So the last component to enable large language models to run inside AO is the large language model inference engine itself. We ported a system called Llama.cpp, which sounds a little mysterious, but it's actually the leading open source model execution environment right now.

Running Llama.cpp directly inside an AO smart contract turned out to be relatively easy once we could hold arbitrary amounts of data in memory and load arbitrary amounts of data from Arweave.
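Here is one illustration of reading only the bytes you need from a model file. This sketch parses just the fixed 24-byte header of a GGUF file, the model format that current versions of Llama.cpp load. It assumes a file of GGUF version 2 or later; version 1 used 32-bit counts and is rejected here. This is not how AO or WeaveDrive loads models, and `parse_gguf_header` and `read_gguf_header` are hypothetical helpers.

```python
import struct

# GGUF header layout (v2+), little-endian: 4-byte magic, uint32 version,
# uint64 tensor count, uint64 metadata key/value count -> 24 bytes total.
GGUF_HEADER = struct.Struct("<4sIQQ")
GGUF_MAGIC = b"GGUF"


def parse_gguf_header(raw: bytes) -> dict:
    """Decode the fixed-size GGUF header from the first bytes of a model file."""
    if len(raw) < GGUF_HEADER.size:
        raise ValueError("too few bytes for a GGUF header")
    magic, version, n_tensors, n_kv = GGUF_HEADER.unpack_from(raw)
    if magic != GGUF_MAGIC:
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    if version < 2:
        raise ValueError("GGUF v1 uses 32-bit counts; not handled in this sketch")
    return {"version": version, "tensor_count": n_tensors, "metadata_kv_count": n_kv}


def read_gguf_header(path: str) -> dict:
    """Read only the header bytes, however large the model file is."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(GGUF_HEADER.size))
```

Only 24 bytes are read no matter how many gigabytes the model weighs, which is the access pattern a byte-addressable drive makes cheap.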

To make inference fast, we've also enabled SIMD (Single Instruction, Multiple Data) compute extensions, which let these models run much faster. Currently these models run on the CPU, but they're quite fast. If your use case can tolerate asynchronous computation, it should work for you. Reading news signals and then deciding which trades to execute, for example, works well with the current system. We also have some exciting upgrades coming soon around other acceleration mechanisms, such as using GPUs to accelerate large language model inference.

Llama.cpp lets you load not only Meta's leading model, Llama 3, but many other models. In fact, about 90% of the models you can download from the open-source model hub Hugging Face can run inside the system, from GPT-2 if you want, to 253 and Monet, Apple's own large language model system, and many others. So we now have a framework for loading any model from Arweave: you use the hard drive to load the model you want to run in the system. You upload models as ordinary data, then load them into an AO process, execute them, get the results, and work with them however you like. We think this package enables applications that were not possible in the smart contract ecosystem before. Even if they could be made possible elsewhere, the architectural changes required in existing systems like Solana would be enormous, and they aren't on their roadmaps.

So to show you this and make it real and understandable, we created a simulator, Llama Fed. The basic idea is that we have a committee of Fed members who are llamas, both in the sense of being Meta Llama 3 models and in the sense of being llama chairmen of the Fed.

We also tell them that they are llamas, in the style of Alan Greenspan or another Fed chairman. You can go into this little environment.

Some of you will be familiar with this kind of environment; it's like Gather, which we're using today. You can talk to the llamas, pitch them a project you find interesting, and ask them for some tokens, and they decide whether to give you tokens based on your request. You burn some Arweave tokens, specifically wAR tokens (provided by the AOX team), and they give you tokens depending on whether they think your proposal is good. So this is a meme coin whose monetary policy is completely autonomous and intelligent. It's a simple form of intelligence, but it's still interesting: it evaluates your proposals and others' proposals and runs monetary policy. Analyzing news headlines and making intelligent decisions, or handling customer support and returning value, can all now be done inside a smart contract. Elliot will now show you.
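The request flow described here (burn wAR, submit a proposal, let the model judge it, mint tokens) can be sketched as a single handler. The talk does not show Llama Fed's actual code, so everything below is a hypothetical illustration. That includes the `GrantRequest` type, the `evaluate` callback standing in for the Llama 3 call, and the rule that the grant is the burned amount times a model-chosen multiplier.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class GrantRequest:
    sender: str
    war_burned: int  # wAR burned with the request, in integer base units
    proposal: str    # free-text pitch read by the model


def handle_request(
    req: GrantRequest,
    evaluate: Callable[[str], int],  # hypothetical model call returning a multiplier
    balances: Dict[str, int],
    max_multiplier: int = 10,
) -> int:
    """Mint Llama tokens in proportion to the burn, scaled by the model's verdict."""
    if req.war_burned <= 0:
        return 0
    # Clamp the model's output so a bad completion can't mint unbounded tokens.
    multiplier = max(0, min(int(evaluate(req.proposal)), max_multiplier))
    grant = req.war_burned * multiplier
    if grant > 0:
        balances[req.sender] = balances.get(req.sender, 0) + grant
    return grant


if __name__ == "__main__":
    ledger: Dict[str, int] = {}
    stub = lambda text: 5 if "llama" in text.lower() else 0  # stand-in for the LLM
    print(handle_request(GrantRequest("alice", 100, "A llama sanctuary"), stub, ledger))  # 500
```

The clamp is the main design point. Whatever the model says, the handler bounds how much it can mint per unit of wAR burned.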

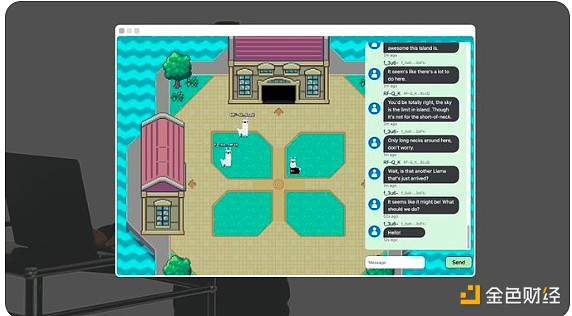

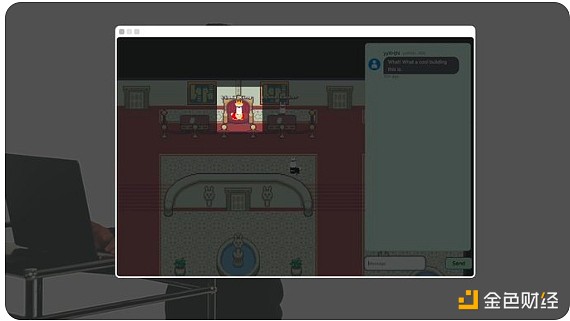

Hi everyone, my name is Elliot, and today I'm going to show you Llama Land, an on-chain autonomous world running inside AO, powered by Meta's open-source Llama 3 model.

The conversations we see here are not just between players, but also with completely autonomous digital llamas.

For example, this llama is a human player.

But this llama is an on-chain AI.

This building houses the Llama Fed. It's like the Federal Reserve, but for llamas.

The Llama Fed runs the world's first AI-driven monetary policy and mints Llama tokens.

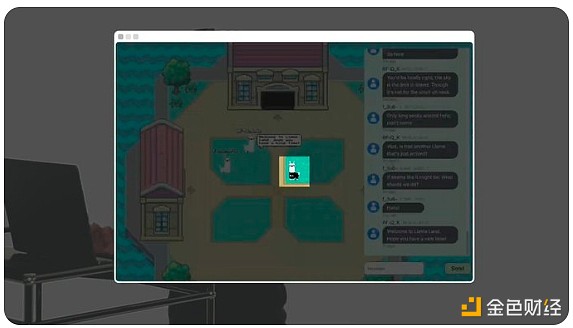

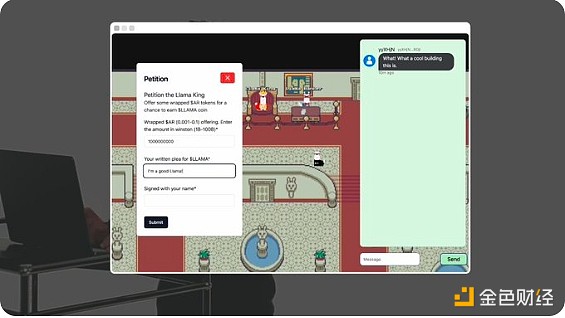

This guy is the Llama King. You can offer him wrapped Arweave tokens (wAR) and write a request to get some Llama tokens.

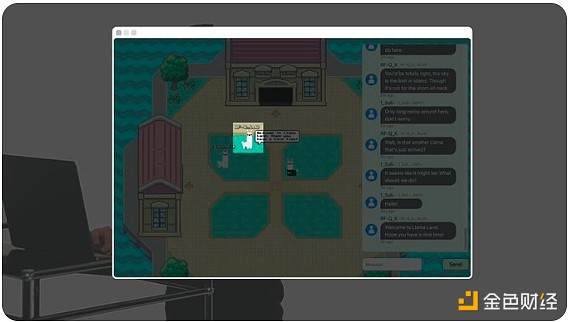

The Llama King AI will evaluate the request and decide whether to award Llama tokens. The Llama Fed's monetary policy is completely autonomous, with no human oversight. Every agent and every room in the world is itself an on-chain process on AO.

It looks like the Llama King has granted us some tokens, and if I check my ArConnect wallet, I can see they're already there. Not bad. Llama Land is just the first AI-powered world implemented on AO. It's a framework for a new protocol that lets anyone build their own autonomous world; the only limit is your imagination. All of this is 100% on-chain, which is only possible on AO.

Thank you, Elliot. What you just saw is a large language model participating in financial decisions and running an autonomous monetary policy system. There are no backdoors and we can't control it; all of it is run by the AI itself. But that's not all. You also saw a little universe, a place where you can walk around in a physical space, go somewhere, and interact with financial infrastructure. We think this is not just a fun little demo.

There's actually something very interesting here: these places bring together the different people who use financial products. In the DeFi ecosystem, if someone wants to get involved in a project, they first look on Twitter, then go to the website and use the basic primitives of the game.

Then they join a Telegram group or a Discord channel, or talk to other users on Twitter. The experience is very fragmented, and we are all jumping between different applications. One interesting idea we're trying out is giving these DeFi apps a UI where their communities can come together and co-manage an autonomous space they all share, because it's a persistent web app that everyone can join.

Imagine you can go to a place that looks like an auction house and hang out with other users who like the protocol. You can chat with other users while activity is happening in the protocol's financial process on AO. The community and social aspect is combined with the financial part of the product.

We think this is really interesting and has even broader implications. You can build an autonomous AI agent that wanders around this Arweave world, interacting with the different applications and users it finds. If you're building a metaverse or an online game, the first thing you do is create NPCs (non-player characters). Here, NPCs can be general-purpose intelligent agents.

You have an intelligent system that's wandering around, interacting with the environment, so you don't have the user cold start problem. You can have autonomous agents that are trying to make money for themselves, trying to make friends, interacting with the environment like normal DeFi users. We think this is really interesting, even if it's a little weird. We'll see.

Looking forward, we also see opportunities to accelerate large language model execution on AO. Earlier I talked about compute unit extensions; that's what we used to build WeaveDrive.

It's not just WeaveDrive: you can build any kind of extension for AO's compute environment. One very exciting ecosystem project, Apus Network, is tackling GPU-accelerated large language model execution. I'll let them explain.

Hi, I'm Mateo. Today I'm excited to introduce Apus Network. Apus Network is committed to building a decentralized, trustless GPU network.

We provide an open source AO extension that provides a deterministic execution environment for GPUs by leveraging Arweave's permanent on-chain storage, and provide an economic incentive model for decentralized AI using AO and APUS tokens. Apus Network will use GPU mining nodes to competitively execute the best, trustless model training running on Arweave and AO. This ensures that users can use the best AI models at the most cost-effective price. You can follow our progress on X (Twitter) @apus_network. Thank you.

This is the current state of AI on AO today. You can try Llama Fed and try building your own smart contract application based on large language models. We think this is the beginning of bringing market intelligence to decentralized execution environments. We are very excited about this and look forward to seeing what happens next. Thank you all for joining today, and we look forward to talking with you again.

JinseFinance
