The Ethereum Dencun upgrade went live on the Goerli testnet on January 17, 2024, and on the Sepolia testnet on January 30. The Dencun upgrade is drawing closer.

After one more testnet upgrade on Holesky on February 7, the mainnet upgrade comes next. The mainnet date for the Cancun (Dencun) upgrade has now been officially confirmed as March 13.

Almost every Ethereum upgrade has been accompanied by a wave of themed price rallies. After the last upgrade, Shanghai, on April 12, 2023, PoS-related projects were sought after by the market.

If past experience holds, there may again be an opportunity to position early ahead of the Dencun upgrade.

Because the technology behind the Dencun upgrade is relatively obscure, it cannot be summed up in one sentence the way the Shanghai upgrade could ("staked ETH can finally be withdrawn"), which makes it hard to know where to focus.

This article therefore explains the technical details of the Dencun upgrade in accessible language and traces the connections between this upgrade, data availability (DA), and the competition among Layer 2 approaches.

EIP-4844

EIP-4844 is the most important proposal in the Dencun upgrade and marks a practical, significant step toward scaling Ethereum in a decentralized way.

In plain terms, Ethereum's Layer 2 networks currently have to post the transactions that happen on Layer 2 to calldata on the Ethereum mainnet, where nodes can verify that the blocks produced on Layer 2 are valid.

The problem is that, even though the transaction data is compressed as much as possible, Layer 2's huge transaction volume multiplied by the high storage cost of the Ethereum mainnet still adds up to a considerable expense for Layer 2 nodes and users. Price alone is enough to make Layer 2 lose many users to sidechains.

EIP-4844 establishes a new, cheaper storage area, the BLOB (Binary Large Object), and a new transaction type, the "BLOB-carrying transaction", that can point to BLOB storage. Transaction data that had to be stored in calldata before the upgrade can go into BLOBs instead, helping Layer 2s in the Ethereum ecosystem save on gas.
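To put rough numbers on the gas involved, the sketch below computes the execution gas needed to post a payload as calldata under the EIP-2028 pricing in force when Dencun shipped (16 gas per non-zero byte, 4 per zero byte), alongside the blob gas for the same payload under EIP-4844 (2^17 blob gas per 131,072-byte blob). The two are priced in separate fee markets, so the raw gas figures are not directly comparable; the blob base fee floats on its own, with a floor of 1 wei. The sketch is a simplification: it ignores the 21,000 base transaction cost, and it treats a blob as 131,072 raw bytes, although rollups can pack slightly fewer usable bytes into it because each 32-byte field element must be below the BLS12-381 field modulus. The batch in the example is hypothetical.

```python
# Calldata pricing from EIP-2028 (the rule in force when Dencun activated).
CALLDATA_ZERO_BYTE_GAS = 4
CALLDATA_NONZERO_BYTE_GAS = 16

# Blob parameters from EIP-4844 as activated in Dencun.
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_SIZE = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # 131072 bytes
GAS_PER_BLOB = 2**17  # blob gas, paid in a separate fee market


def calldata_gas(data: bytes) -> int:
    """Execution gas for carrying `data` as calldata (excludes the 21000 base cost)."""
    zeros = data.count(0)
    nonzeros = len(data) - zeros
    return zeros * CALLDATA_ZERO_BYTE_GAS + nonzeros * CALLDATA_NONZERO_BYTE_GAS


def blob_gas(data: bytes) -> int:
    """Blob gas for carrying `data`, treating each blob as BLOB_SIZE raw bytes."""
    blobs = max(1, -(-len(data) // BLOB_SIZE))  # ceiling division, at least one blob
    return blobs * GAS_PER_BLOB


# A hypothetical compressed batch that exactly fills one blob with non-zero bytes.
batch = bytes([0xAB]) * BLOB_SIZE
print("calldata gas:", calldata_gas(batch))  # 2097152
print("blob gas:    ", blob_gas(batch))      # 131072
```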

Why BLOB storage is cheap

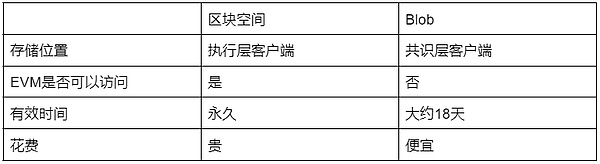

Cheapness comes at a price: BLOB data is cheaper than ordinary Ethereum calldata of similar size because the Ethereum execution layer (EL, the EVM) cannot actually access the BLOB data itself.

Instead, the EL can only access references to BLOB data. The BLOB data itself is downloaded and stored only by Ethereum's consensus layer (CL, i.e. the beacon nodes), and storing it consumes far less memory and computation than ordinary Ethereum calldata.

BLOBs have one more property: they are stored only for a limited period (about 18 days), so they do not grow without bound the way the Ethereum ledger does.

The storage validity period of BLOB

Unlike the blockchain's permanent ledger, BLOBs are temporary storage: they remain available for 4096 epochs, or roughly 18 days.

After expiration, most consensus clients will no longer be able to return the specific data in the BLOB. However, proof that it once existed is kept permanently on the Ethereum mainnet in the form of its KZG commitment.

Why 18 days? It is a trade-off between storage cost and usefulness.

First, consider the most direct beneficiaries of this upgrade, Optimistic Rollups (such as Arbitrum and Optimism), which by design have a 7-day fraud proof window.

The transaction data stored in BLOBs is exactly what an Optimistic Rollup needs when a challenge is launched.

Therefore, the BLOB retention period must be long enough for Optimistic Rollup fault proofs to reach the data. For simplicity, the Ethereum community chose 2^12 = 4096 epochs; since one epoch is about 6.4 minutes (32 slots of 12 seconds), this comes to roughly 18 days, comfortably longer than the 7-day challenge window.
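The arithmetic behind the 18-day figure can be checked directly. The constants below come from the Ethereum consensus specs (32 slots per epoch, 12-second slots, and the 4096-epoch minimum for serving blob sidecars, named MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS there). The `blob_still_served` helper is a hypothetical simplification of the rule that nodes must serve blobs from the most recent 4096 epochs; individual clients may choose to keep data longer.

```python
SLOTS_PER_EPOCH = 32
SECONDS_PER_SLOT = 12
MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS = 2**12  # 4096 epochs
FRAUD_PROOF_WINDOW_DAYS = 7  # typical Optimistic Rollup challenge window

epoch_minutes = SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 60
retention_days = (MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS
                  * SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 86400)


def blob_still_served(posted_epoch: int, current_epoch: int) -> bool:
    """Hypothetical helper: is a blob from `posted_epoch` inside the minimum serving window?"""
    return current_epoch - posted_epoch <= MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS


print(f"one epoch = {epoch_minutes:.1f} minutes")
print(f"retention = {retention_days:.1f} days")
print("covers the fraud proof window:", retention_days > FRAUD_PROOF_WINDOW_DAYS)
```

Because the retention window is more than twice the challenge window, a challenger who acts within seven days can still fetch the BLOB data from ordinary consensus nodes.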

BLOB-Carrying Transaction and BLOB

Understanding the relationship between the two is very important to understand the role of BLOB in data availability (DA).

The former is the new transaction type at the heart of the EIP-4844 proposal, while the latter can be understood as temporary storage for Layer 2 transaction data.

The relationship is this: most of the data in a BLOB-carrying transaction (the Layer 2 transaction data) goes into the BLOB. The remaining part, a commitment to the BLOB data, stays with the transaction on the mainnet in the form of a versioned hash of the BLOB's KZG commitment. Unlike the BLOB itself, this commitment can be read by the EVM.
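EIP-4844 specifies how that on-chain reference is derived: the versioned hash is a version byte (0x01 for KZG) followed by the last 31 bytes of the SHA-256 hash of the 48-byte KZG commitment, and contracts read it through the BLOBHASH opcode. The sketch below reproduces that derivation in Python. The all-zero commitment in the example is only a placeholder, not a valid KZG commitment.

```python
import hashlib

VERSIONED_HASH_VERSION_KZG = b"\x01"  # version byte defined in EIP-4844


def kzg_to_versioned_hash(commitment: bytes) -> bytes:
    """Map a 48-byte KZG commitment to the 32-byte versioned hash the EVM sees."""
    if len(commitment) != 48:
        raise ValueError("a KZG commitment is 48 bytes")
    return VERSIONED_HASH_VERSION_KZG + hashlib.sha256(commitment).digest()[1:]


placeholder_commitment = bytes(48)  # placeholder bytes, not a real commitment
print(kzg_to_versioned_hash(placeholder_commitment).hex())
```

The leading version byte is what lets the commitment scheme be replaced later without changing how contracts refer to BLOBs.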

You can think of the commitment this way: build all the transactions in the BLOB into a Merkle tree, and only the Merkle root, which plays the role of the commitment, is accessible to contracts.

The trick is that although the EVM cannot see the BLOB's contents, a contract that knows the commitment can still verify whether a piece of transaction data presented to it is authentic.
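To make the Merkle-tree analogy concrete, here is a minimal Python sketch that commits to a list of transactions with a Merkle root and then checks a single transaction against that root using an inclusion proof. It illustrates the analogy only: Dencun itself uses KZG commitments rather than Merkle roots. The helper names, the sample transactions, and the simple SHA-256 tree layout (duplicating the last node on odd levels) are assumptions for illustration.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Hash the leaves, then hash pairs upward until one root remains."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [h(leaf) for leaf in leaves]
    levels = []
    while True:
        if len(level) > 1 and len(level) % 2 == 1:
            level = level + [level[-1]]  # pad odd levels by duplicating the last node
        levels.append(level)
        if len(level) == 1:
            return levels
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list[bytes]) -> bytes:
    return build_levels(leaves)[-1][0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says whether the sibling is on the left."""
    proof = []
    for level in build_levels(leaves)[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


# Hypothetical L2 transactions; only `root` would need to be readable on L1.
txs = [b"alice->bob:5", b"bob->carol:2", b"carol->dave:1"]
root = merkle_root(txs)
proof = merkle_proof(txs, 1)
print(verify(txs[1], proof, root))             # True: genuine transaction
print(verify(b"bob->carol:200", proof, root))  # False: tampered transaction
```

The contract side only ever needs the 32-byte root and a short proof, which is why the full transaction list can live off the execution layer.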

The relationship between BLOB and Layer2

Rollups achieve data availability (DA) by uploading data to the Ethereum mainnet, but the goal is not for L1 smart contracts to read or verify that data directly.

The purpose of uploading transaction data to L1 is simply to allow all participants to view the data.

Before the Dencun upgrade, as mentioned above, Optimistic Rollups published their transaction data to Ethereum as calldata, so anyone could use it to reconstruct the state and verify that the Layer 2 network is operating correctly.

In other words, rollup transaction data needs to be cheap as well as open and transparent. Calldata is not a good place to store data meant only for Layer 2, whereas the BLOB-carrying transaction is tailor-made for rollups.

At this point you may wonder: if this transaction data does not seem important, what is it actually used for?

In fact, transaction data is only used in a few cases:

- For Optimistic Rollups, which rely on the optimistic assumption that batches are valid unless challenged, a sequencer may act dishonestly. This is when the transaction records uploaded by the rollup come in handy: users can use them to challenge a transaction (a fraud proof).
- For ZK Rollups, the zero-knowledge proof already shows that the state update is correct. The data is uploaded only so that users can compute the complete state and trigger the escape hatch when Layer 2 nodes stop operating correctly (the escape hatch requires the complete L2 state tree, which is discussed in the last section).

This means that contracts actually use transaction data in very few scenarios. Even in an Optimistic Rollup challenge, the challenger only needs to show, at the time of the challenge, that the disputed transaction data existed; the full transaction details never need to be stored in mainnet contracts in advance.

So if we put the transaction data in a BLOB, the contract cannot read it, but a mainnet contract can still store the BLOB's commitment.

If the challenge mechanism later needs a specific transaction, we only need to supply that transaction's data; as long as it matches the stored commitment, the contract is convinced, and the data becomes available to the challenge mechanism.

This not only takes advantage of the openness and transparency of transaction data, but also avoids the huge gas cost of entering all data into the contract in advance.

By recording only the commitment, transaction data stays verifiable while costs drop sharply. This is a clever and efficient way for rollups to publish their transaction data.

Note that Dencun does not generate commitments with a Merkle tree, as Celestia does, but with the ingenious KZG (Kate-Zaverucha-Goldberg) polynomial commitment scheme.

Compared with a Merkle proof, a KZG proof is more complex to generate, but it is smaller and its verification steps are simpler. The drawbacks are that KZG requires a trusted setup (the ceremony at ceremony.ethereum.org has now closed) and is not resistant to quantum computing attacks (Dencun references commitments through versioned hashes, so another verification scheme can be swapped in if necessary).
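The size difference can be made concrete with a little arithmetic. A binary Merkle inclusion proof carries one sibling hash per tree level, so it grows with the logarithm of the number of leaves, while a KZG proof is a single compressed BLS12-381 G1 point of 48 bytes no matter how much data is committed. The sketch assumes 32-byte Merkle nodes; since a blob holds 4096 field elements, proving one element with a binary Merkle tree would take 12 sibling hashes.

```python
HASH_BYTES = 32       # assumed size of one Merkle tree node (e.g. SHA-256)
KZG_PROOF_BYTES = 48  # a KZG proof is one compressed BLS12-381 G1 point


def merkle_proof_bytes(num_leaves: int) -> int:
    """Size of a binary Merkle inclusion proof: one sibling hash per level."""
    if num_leaves < 1:
        raise ValueError("need at least one leaf")
    depth = (num_leaves - 1).bit_length()  # ceil(log2(num_leaves)) without float error
    return depth * HASH_BYTES


for leaves in (4096, 2**20):
    print(f"{leaves} leaves: Merkle proof {merkle_proof_bytes(leaves)} bytes, "
          f"KZG proof {KZG_PROOF_BYTES} bytes")
```

For a blob-sized tree of 4096 leaves this gives 384 bytes for the Merkle proof against 48 bytes for the KZG proof, and the gap widens as the data grows.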

Celestia, currently a popular DA project, uses a variant of the Merkle tree. Compared with KZG, it relies to some extent on the honesty of nodes, but it lowers the computing requirements for nodes and helps keep the network decentralized.

Opportunities for Dencun

EIP-4844 cuts costs and improves efficiency for Layer 2, but it also introduces security risks, and those risks in turn create new opportunities.

To see why, we need to return to the escape hatch, or forced withdrawal, mechanism mentioned above.

When Layer 2 nodes stop working, this mechanism ensures that user funds can be safely returned to the mainnet. The prerequisite for triggering it is that the user can obtain the complete Layer 2 state tree.

Under normal circumstances, a user only needs to request data from a Layer 2 full node, generate a Merkle proof, and submit it to the mainnet contract to prove that their withdrawal is legitimate.

But remember that the user wants to trigger the escape hatch and exit L2 precisely because the L2 nodes are acting maliciously. If all the nodes are malicious, it is quite likely that the user will have nowhere to get the data they need.

This is the data withholding attack that Vitalik often refers to.

Before EIP-4844, Layer 2 data was recorded permanently on the mainnet. When no Layer 2 node would provide the complete off-chain state, users could deploy a full node themselves.

This full node can retrieve from the Ethereum mainnet all the historical data that the Layer 2 sequencer has published. From it, users can construct the required Merkle proofs and submit them to the mainnet contract to withdraw their L2 assets safely.

After EIP-4844, Layer 2 data exists only in BLOBs held by Ethereum nodes, and historical data older than about 18 days is deleted automatically.

Therefore, obtaining the entire state tree by syncing the mainnet, as described in the previous paragraph, is no longer feasible. To get the complete Layer 2 state tree, a user can only turn to a third party: either a mainnet node that has kept all Ethereum BLOB data (which would normally be deleted after 18 days), or a native Layer 2 node (of which there are very few).
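The difference between the two situations can be illustrated with a toy replay of Layer 2 history. In the sketch below, which is a hypothetical simplification rather than any real rollup's data format, each published batch is a list of (sender, receiver, amount) transfers, and a batch whose BLOB has been pruned is represented as `None`. Rebuilding the state works only while every batch since genesis is still retrievable.

```python
from typing import Optional

# Hypothetical toy format: a transfer is (sender, receiver, amount).
Transfer = tuple[str, str, int]


def replay(genesis: dict[str, int],
           batches: list[Optional[list[Transfer]]]) -> dict[str, int]:
    """Rebuild L2 balances by re-applying every published batch in order.

    A batch that is None stands for data that can no longer be retrieved,
    for example a BLOB pruned after its retention window.
    """
    state = dict(genesis)
    for i, batch in enumerate(batches):
        if batch is None:
            raise LookupError(f"batch {i} is unavailable; state cannot be rebuilt")
        for sender, receiver, amount in batch:
            if state.get(sender, 0) < amount:
                raise ValueError(f"batch {i} contains an overdraft by {sender}")
            state[sender] = state.get(sender, 0) - amount
            state[receiver] = state.get(receiver, 0) + amount
    return state


genesis = {"alice": 10}
history = [[("alice", "bob", 4)], [("bob", "carol", 1)]]
print(replay(genesis, history))  # {'alice': 6, 'bob': 3, 'carol': 1}

pruned_history = [None, [("bob", "carol", 1)]]  # the first batch's BLOB has expired
try:
    replay(genesis, pruned_history)
except LookupError as err:
    print("cannot rebuild state:", err)
```

Once any early batch is gone, every later balance, and therefore every Merkle proof built on it, depends on someone who still holds the missing data.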

Once 4844 is live, it will become very difficult for users to obtain the complete Layer 2 state tree in a fully trustless way.

If users have no reliable way to obtain the Layer 2 state tree, they cannot force a withdrawal under extreme conditions. In this sense, 4844 has opened a security gap in Layer 2.

To close this security gap, we need a trustless storage solution with a self-sustaining economic loop. Storage here mainly means retaining Ethereum data in a trustless manner; the keyword "trustless" is what distinguishes it from the storage track of the past.

Ethstorage offers a trustless solution to this problem and has received two rounds of funding from the Ethereum Foundation.

This concept fits squarely into the track opened up by the Dencun upgrade, filling the gap it leaves, and deserves close attention.

First, the most direct significance of Ethstorage is that it can extend how long DA BLOBs remain available in a fully decentralized way, shoring up the weakest point in Layer 2 security after 4844.

In addition, most existing L2 solutions mainly focus on expanding Ethereum’s computing power, that is, increasing TPS. However, the need to securely store large amounts of data on the Ethereum mainnet has surged, especially due to the popularity of dApps such as NFTs and DeFi.

For example, the storage needs of on-chain NFTs are very obvious, because users not only own the tokens of the NFT contract, but also own the on-chain images. Ethstorage can solve the additional trust issues that come with storing these images in a third party.

Finally, Ethstorage can also serve the front ends of decentralized dApps. Existing front ends are mostly hosted on centralized servers (behind DNS), which leaves websites vulnerable to censorship and to problems such as DNS hijacking, website hacks, or server crashes, as evidenced by incidents such as Tornado Cash.

Ethstorage is still in its initial test network stage, and users who are optimistic about the prospects of this track can try it out.

JinseFinance
