Author: Josie; Source: NewGeek

Two days ago, a foreign media outlet published an exclusive interview with the Sora core team. Having watched the original video, I can report that approximately nothing was said. The scene looked like a speech by Section Chief Ma of the National Development and Reform Commission.

In the words of netizens, it's as if a lawyer were standing off camera pointing a gun at these people.

Sora has been out for almost a month. When it was first unveiled, Sora was shocking and sparked boundless imagination. Many people even said that AGI was coming.

However, only a few people have used Sora so far. No matter how good a product is, people lose interest over time.

Just when people had picked Sora apart from every angle, everything worth saying had been said, and the topic seemed to have truly run dry, OpenAI sent a few people out for an interview.

In the 16-minute exclusive interview, Sora's core team members covered a lot of ground, but all of it was already known. The interview seemed to contain less information than Sora's technical report.

Let's see how foreigners do Tai Chi, that is, how they deflect questions.

The three Sora core members interviewed are Bill Peebles, Tim Brooks, and Aditya Ramesh.

First, the question everyone cares about most: when can we use Sora?

"No rush: ordinary people won't be able to use it in the short term."

The Sora members said that Sora is not yet open to the public and that there is no specific timetable. OpenAI is at the stage of collecting user feedback and hopes to learn more about how people use Sora and what safety work needs to be done.

Since it can't be used, let's explore how Sora is implemented.

The Sora team said that Sora is a video generation model that learns to generate videos by analyzing a large amount of video data. It combines techniques from diffusion models (such as DALL-E) and large language models (such as the GPT series). Architecturally, Sora sits somewhere between the two: it is trained like DALL-E, but its structure is more like GPT.

Many people pointed out the GPT-like structure when Sora first appeared. It is one of Sora's main technical features.

The next question people are equally curious about: where does Sora's training data come from?

In the official Sora demo videos, whether it is pirate ships in a coffee cup or a woman walking the streets of Tokyo, Sora seems to understand many of the world's physical laws.

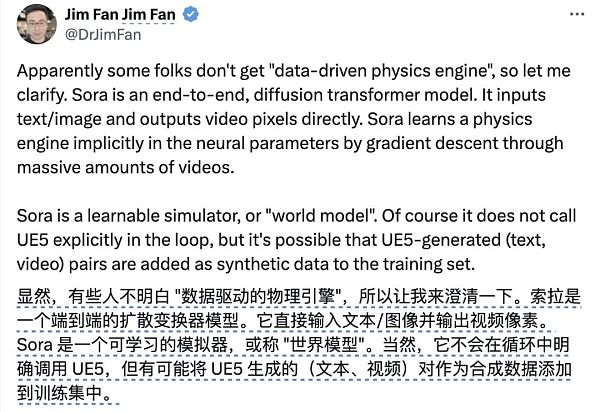

There has been a lot of community speculation that Sora's training set very likely includes text and videos generated with UE5 as synthetic data.

Faced with this question, Sora member Tim Brooks gave no clear answer. He deflected, saying it was not convenient to go into too much detail. He did reveal that, in general, the team used public data and data that OpenAI is licensed to use, and he shared a "technical innovation".

In the past, image and video generation models were usually trained at a single fixed size, for example on videos of only one resolution.

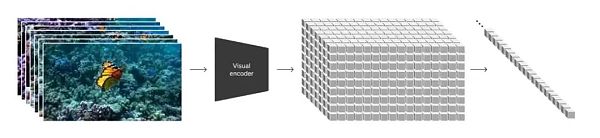

During Sora's training, the team cut all kinds of images and videos, regardless of aspect ratio, length, or resolution, into small pieces. The model can then be trained on a different number of these small patches depending on the size of the input video, which lets Sora learn from varied data more flexibly and generate content at different resolutions and sizes.

This technique is also described in Sora's technical report: it is the so-called patch.

In a large language model, text is split into tokens as the smallest unit. In a large video model, the equivalent of a token is a patch.
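The idea can be illustrated with a minimal sketch. It is not OpenAI's implementation: the function names `make_video` and `patchify`, the tiny patch sizes, and the choice to cut raw pixels directly are all assumptions made for illustration. It only shows that inputs of different lengths and aspect ratios turn into patch sequences of different lengths, the way texts of different lengths turn into different numbers of tokens.

```python
def make_video(frames, height, width):
    """Build a toy video as nested lists; each 'pixel' is its own (t, h, w) index."""
    return [[[(t, h, w) for w in range(width)]
             for h in range(height)]
            for t in range(frames)]


def patchify(video, pt=2, ph=2, pw=2):
    """Cut a video into spacetime patches of pt frames x ph rows x pw columns.

    Returns a list of patches; each patch is a flat list of pixels.
    Leftover frames, rows, or columns that do not fill a whole patch are
    dropped (a simplification chosen for this sketch).
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    patches = []
    for t0 in range(0, T - T % pt, pt):
        for h0 in range(0, H - H % ph, ph):
            for w0 in range(0, W - W % pw, pw):
                patch = [video[t][h][w]
                         for t in range(t0, t0 + pt)
                         for h in range(h0, h0 + ph)
                         for w in range(w0, w0 + pw)]
                patches.append(patch)
    return patches


# Inputs with different shapes give sequences of different lengths.
wide = patchify(make_video(4, 4, 8))         # 4 frames, landscape
tall = patchify(make_video(2, 8, 4))         # 2 frames, portrait
image = patchify(make_video(1, 6, 6), pt=1)  # a single image is a 1-frame video

print(len(wide), len(tall), len(image))  # 16 8 9
```

No input had to be resized or cropped to a common shape first; only the number of patches changes, which is the flexibility the team described.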

This technique was not invented by OpenAI. When OpenAI announced that it was using it, a discussion followed: why is OpenAI able to build good AI products with other people's techniques?

The host then asked: What do you think Sora is good at? Where does it still fall short? For example, I saw a video in which a hand had six fingers.

The Sora team praised first and then tempered the praise: Sora is good at realistic videos and can generate videos up to one minute long, which is very powerful. But some problems remain, such as hand details (the nightmare of all AI), camera trajectories, and changes in physical phenomena.

In addition, the Sora team introduced some other cool features, such as generating videos from other videos rather than only from text prompts. This enables seamless transitions between videos with completely different subjects and scenes.

On OpenAI's TikTok account, there is a video in which a drone turns into a butterfly, flying through a coral reef that the Colosseum has morphed into.

In both technology and experience, this is completely different from earlier video generation models. Aditya Ramesh even said that what they do is imitate nature first and then surpass it!

So far, OpenAI's AI-generated videos on TikTok have all used dubbed audio rather than sound generated by the AI itself. The Sora team said that AI-generated sound is not something they are considering for now. The top priority is still to generate longer videos with better image quality and frame rate.

But with the release of Pika's Sound Effects feature, I wonder whether sound for Sora is just around the corner.

When the host asked about Sora's next development direction, Sora member Tim Brooks said that two pieces of work remain before Sora is actually released:

The first is to gather feedback from more users and understand how Sora can bring value to people. For example, some users want more detailed and direct control over the generated videos, not just prompts.

The second is safety. Sora's safety work needs to be strengthened, and OpenAI will fully consider the various possible impacts. Currently, a provenance classifier for videos is being trained to identify whether a video was generated by AI, and a watermark is added to every Sora-generated video.

In addition, the Sora team said that AI-generated video also brings many opportunities. It can significantly reduce the cost of going from idea to finished film. It is entirely possible for one person to make a movie.

What excites them even more is that, as new AI tools emerge, someone will create brand-new things and keep pushing the boundaries of creativity, making the impossible possible.

But hold on, this is just a beautiful fantasy of scientists. After all, it will be a long time before ordinary people can actually use Sora.

Moreover, the Sora members revealed that learning from video data will do more for AI than help with video creation. Models like GPT, while smart, are missing some information if they can't "see" the world the way we do. Models like Sora are solving this problem.

Is this confirmation that AGI is coming?

Finally, the host asked an interesting question: how long does it take Sora to generate a video?

"It depends, but you can leave, go get a coffee, come back, and it's still processing. In any case, it takes a long time."

That is the content of the Sora team's interview. A brief summary: Sora is very powerful and can see the world. Because of this, we cannot let ordinary people use it very soon; there is still a lot of safety work to do.

Well, if you have nothing new to show, you could go bite a lighter. There is no need to force it.

JinseFinance